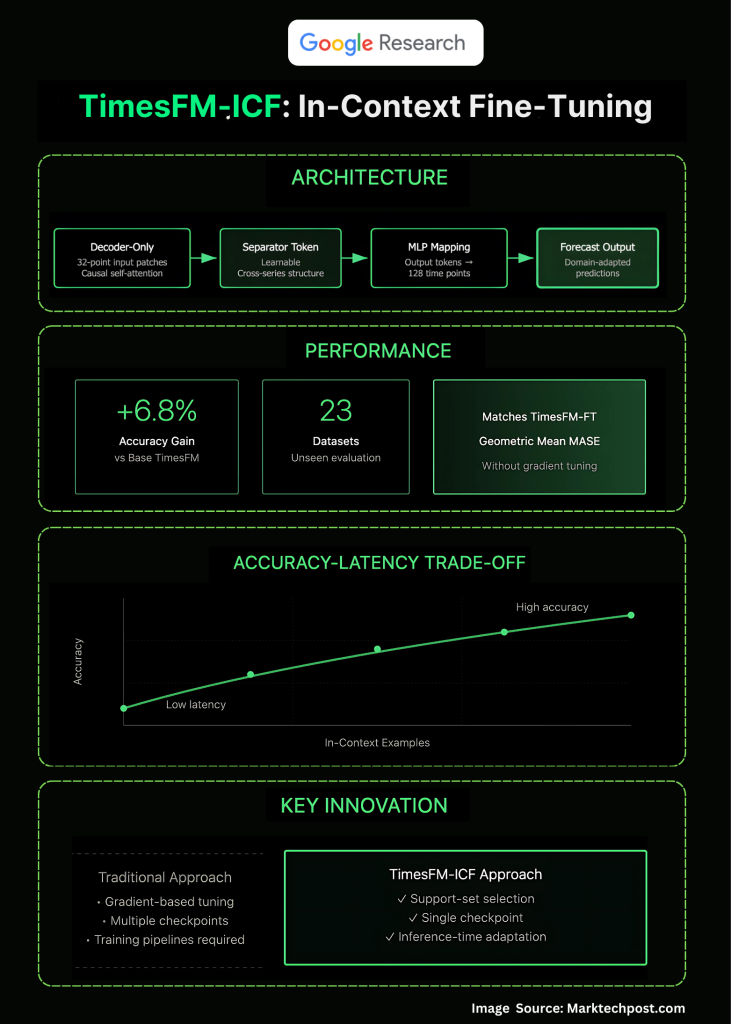

Google Research introduces in-context fine-tuning (ICF) for time-series forecasting, named ‘TimesFM-ICF’: a continued-pretraining recipe that teaches TimesFM to exploit multiple related series supplied directly in the prompt at inference time. The result is a few-shot forecaster that matches supervised fine-tuning while delivering +6.8% accuracy over the base TimesFM across an OOD benchmark, with no per-dataset training loop required.

What pain point in forecasting is being eliminated?

Most production workflows still trade off between (a) one model per dataset via supervised fine-tuning (accurate, but heavy MLOps) and (b) zero-shot foundation models (simple, but not domain-adapted). Google’s new approach keeps a single pre-trained TimesFM checkpoint but lets it adapt on the fly using a handful of in-context examples from related series during inference, avoiding per-tenant training pipelines.

How does in-context fine-tuning work under the hood?

Start with TimesFM, a patched, decoder-only transformer that tokenizes 32-point input patches and de-tokenizes 128-point outputs via a shared MLP, and continue pre-training it on sequences that interleave the target history with multiple “support” series. The key change is a learnable common separator token, so cross-example causal attention can mine structure across examples without conflating trends. The training objective remains next-token prediction; what is new is the context construction that teaches the model to reason across multiple related series at inference time.
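To make the patching concrete, here is a minimal Python sketch of how a series might be split into 32-point input patches, and how many 128-point output steps a given horizon would need. The names `patchify` and `steps_to_cover`, the zero left-padding, and the padding mask are assumptions for illustration, not TimesFM’s actual code.

```python
import math
from typing import List, Tuple

INPUT_PATCH = 32    # input patch length stated in the article
OUTPUT_PATCH = 128  # output patch length stated in the article


def patchify(
    series: List[float], patch_len: int = INPUT_PATCH
) -> Tuple[List[List[float]], List[List[bool]]]:
    """Split a series into fixed-length patches, zero left-padding the first one.

    Returns the patches plus a parallel mask where True marks a padded slot.
    (Left-padding with a mask is an assumption made for this sketch.)
    """
    n_patches = math.ceil(len(series) / patch_len)
    pad = n_patches * patch_len - len(series)
    values = [0.0] * pad + [float(x) for x in series]
    mask = [True] * pad + [False] * len(series)
    patches = [values[i:i + patch_len] for i in range(0, len(values), patch_len)]
    masks = [mask[i:i + patch_len] for i in range(0, len(mask), patch_len)]
    return patches, masks


def steps_to_cover(horizon: int, out_len: int = OUTPUT_PATCH) -> int:
    """Autoregressive decoding steps needed if each step emits out_len points."""
    return math.ceil(horizon / out_len)


patches, masks = patchify(list(range(70)))
print(len(patches), sum(masks[0]), steps_to_cover(200))
```

For a 70-point history, `patchify` yields three patches, the first carrying 26 padded positions; a 200-step horizon would take two decoding steps of 128 points each.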

What exactly is “few-shot” here?

At inference, the user concatenates the target history with k additional time-series snippets (e.g., similar SKUs, adjacent sensors), each delimited by the separator token. The model’s attention layers are now explicitly trained to leverage these in-context examples, analogous to LLM few-shot prompting but for numeric sequences rather than text tokens. This shifts adaptation from parameter updates to prompt engineering over structured series.
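The few-shot prompt can be pictured as a flat token list. The sketch below assumes support series come first, each closed by a separator, with the target history last so the forecast continues directly from it. `SEP`, `to_patches`, and `build_icf_context` are hypothetical names, and in the real model the separator is a learned embedding rather than a tag.

```python
from typing import List, Optional, Tuple

# A token is either ("PATCH", values) or ("SEP", None).
Token = Tuple[str, Optional[List[float]]]
SEP: Token = ("SEP", None)  # stand-in for the learned separator embedding


def to_patches(series: List[float], patch_len: int = 32) -> List[List[float]]:
    """Zero left-pad to a multiple of patch_len, then split into patches."""
    pad = (-len(series)) % patch_len
    values = [0.0] * pad + [float(x) for x in series]
    return [values[i:i + patch_len] for i in range(0, len(values), patch_len)]


def build_icf_context(
    target: List[float], supports: List[List[float]], patch_len: int = 32
) -> List[Token]:
    """Support series first, each closed by SEP; the target history goes last."""
    tokens: List[Token] = []
    for series in supports:
        tokens.extend(("PATCH", p) for p in to_patches(series, patch_len))
        tokens.append(SEP)
    tokens.extend(("PATCH", p) for p in to_patches(target, patch_len))
    return tokens


context = build_icf_context(
    target=[float(i) for i in range(64)],
    supports=[[1.0] * 32, [2.0] * 40],
)
print(len(context), sum(tok == SEP for tok in context))
```

With two support series of 32 and 40 points and a 64-point target, the context holds 7 tokens (5 patches plus 2 separators). Every extra support series lengthens the context, which is where the added inference cost comes from.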

Does it actually match supervised fine-tuning?

On a 23-dataset out-of-domain suite, TimesFM-ICF matches the performance of per-dataset TimesFM-FT while being 6.8% more accurate than TimesFM-Base (geometric mean of scaled MASE). The blog also shows the expected accuracy–latency trade-off: more in-context examples yield better forecasts at the cost of longer inference. A “just make the context longer” ablation indicates that structured in-context examples beat naive long context alone.
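The headline number is an aggregate over datasets. As a rough sketch, assuming each dataset’s MASE is divided by a reference model’s MASE (for example a seasonal-naive baseline) and that “6.8% more accurate” means a 6.8% lower geometric mean, the arithmetic looks like this. The helper names and the choice of reference are assumptions, and the scores below are toy values, not results from the paper.

```python
import math
from typing import List, Sequence


def mase(y_true: Sequence[float], y_pred: Sequence[float],
         y_train: Sequence[float], m: int = 1) -> float:
    """Mean absolute scaled error: forecast MAE over in-sample lag-m naive MAE."""
    naive_mae = sum(abs(y_train[t] - y_train[t - m])
                    for t in range(m, len(y_train))) / (len(y_train) - m)
    mae = sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)
    return mae / naive_mae


def geometric_mean(values: Sequence[float]) -> float:
    return math.exp(sum(math.log(v) for v in values) / len(values))


def scale(scores: Sequence[float], reference: Sequence[float]) -> List[float]:
    """Per-dataset ratio of a model's MASE to the reference model's MASE."""
    return [s / r for s, r in zip(scores, reference)]


def relative_gain(model_scaled: Sequence[float], base_scaled: Sequence[float]) -> float:
    """Fractional reduction in geometric-mean error of model vs base."""
    return 1.0 - geometric_mean(model_scaled) / geometric_mean(base_scaled)


# Toy per-dataset MASE values (illustrative only, not from the paper).
reference = [1.0, 2.0]
base = scale([0.8, 1.6], reference)
icf = scale([0.4, 0.8], reference)
print(round(relative_gain(icf, base), 3))
```

When two models are scored against the same reference on the same datasets, the reference cancels in the ratio of geometric means, so scaling mainly matters for reporting absolute levels across heterogeneous datasets.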

How is this different from Chronos-style approaches?

Chronos tokenizes values into a discrete vocabulary and has demonstrated strong zero-shot accuracy and fast variants (e.g., Chronos-Bolt). Google’s contribution here is not another tokenizer or more headroom on zero-shot; it is making a time-series FM behave like an LLM few-shot learner that learns from cross-series context at inference. That capability closes the gap between “train-time adaptation” and “prompt-time adaptation” for numeric forecasting.
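For contrast, a Chronos-style tokenizer maps real values to discrete bins. This is a simplified sketch assuming mean-absolute scaling followed by uniform binning over a fixed range; the bin count and range are illustrative defaults, not Chronos’s published settings. TimesFM, by comparison, feeds continuous 32-point patches to the model.

```python
from typing import List, Sequence, Tuple


def mean_scale(context: Sequence[float]) -> Tuple[List[float], float]:
    """Divide by the mean absolute value (fall back to 1.0 for an all-zero context)."""
    s = sum(abs(x) for x in context) / len(context)
    s = s if s > 0 else 1.0
    return [x / s for x in context], s


def quantize(values: Sequence[float], n_bins: int = 16,
             low: float = -15.0, high: float = 15.0) -> List[int]:
    """Map each scaled value to a uniform bin id, clipping out-of-range values."""
    width = (high - low) / n_bins
    return [min(max(int((v - low) // width), 0), n_bins - 1) for v in values]


def dequantize(ids: Sequence[int], scale: float, n_bins: int = 16,
               low: float = -15.0, high: float = 15.0) -> List[float]:
    """Map bin ids back to bin centres and undo the scaling."""
    width = (high - low) / n_bins
    return [(low + (i + 0.5) * width) * scale for i in ids]


scaled, scale_factor = mean_scale([10.0, 20.0, 30.0])
ids = quantize(scaled)
print(ids, dequantize(ids, scale_factor))
```

With only 16 bins, all three inputs land in the same bin and reconstruct to the same value, which shows why discrete vocabularies need many bins; continuous patches avoid this quantization error altogether.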

What are the architectural specifics to watch?

The research team highlights: (1) separator tokens to mark boundaries, (2) causal self-attention over mixed histories and examples, (3) retained patching and shared MLP heads, and (4) continued pre-training to instill cross-example behavior. Together, these let the model treat support series as informative exemplars rather than background noise.
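Causal self-attention over the mixed context means a target-history token can attend to every earlier support patch and separator, while earlier tokens never see later ones. The minimal single-head sketch below uses identity query/key/value projections for brevity, a simplification that a real transformer replaces with learned, multi-head projections; the toy 2-d “embeddings” are likewise illustrative.

```python
import math
from typing import List

Vector = List[float]


def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when token i may attend to token j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def causal_self_attention(x: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention with identity Q/K/V projections."""
    n, d = len(x), len(x[0])
    mask = causal_mask(n)
    out: List[Vector] = []
    for i in range(n):
        scores = [
            sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d) if mask[i][j] else -math.inf
            for j in range(n)
        ]
        top = max(scores)  # finite, since token i can always see itself
        weights = [math.exp(s - top) for s in scores]  # masked slots become 0.0
        total = sum(weights)
        out.append([sum(w * x[j][k] for j, w in enumerate(weights)) / total for k in range(d)])
    return out


# Toy embeddings: two support patches, a separator, then a target patch.
tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, 1.0]]
changed = tokens[:3] + [[5.0, -3.0]]
print(causal_self_attention(tokens)[:3] == causal_self_attention(changed)[:3])
```

Changing the last (target) token leaves the outputs of the three earlier tokens unchanged, which is exactly the guarantee the causal mask provides; information flows only from support examples toward the target.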

Summary

Google’s in-context fine-tuning turns TimesFM into a practical few-shot forecaster: a single pretrained checkpoint that adapts at inference via curated support series, delivering fine-tuning-level accuracy without per-dataset training overhead. This is useful for multi-tenant, latency-bounded deployments, where the selection of support sets becomes the main control surface.
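Because support-set selection becomes the main control surface, a deployment needs some rule for picking candidates. One simple, hypothetical heuristic is to rank candidate series by Pearson correlation with the target’s recent window and keep the top k. Nothing in the source says Google uses this rule; `select_support` and its `window` parameter are illustrative names.

```python
import math
from typing import Dict, List


def pearson(a: List[float], b: List[float]) -> float:
    """Pearson correlation over the trailing overlap; 0.0 when undefined."""
    n = min(len(a), len(b))
    if n < 2:
        return 0.0
    a, b = a[-n:], b[-n:]
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return 0.0 if sa == 0 or sb == 0 else cov / (sa * sb)


def select_support(target: List[float], candidates: Dict[str, List[float]],
                   k: int, window: int = 64) -> List[str]:
    """Hypothetical heuristic: keep the k candidates most correlated with the recent target."""
    recent = target[-window:]
    ranked = sorted(
        candidates,
        key=lambda name: pearson(recent, candidates[name][-window:]),
        reverse=True,
    )
    return ranked[:k]


target = [1.0, 2.0, 3.0, 4.0, 5.0]
candidates = {
    "up": [10.0, 20.0, 30.0, 40.0, 50.0],
    "down": [5.0, 4.0, 3.0, 2.0, 1.0],
    "flat": [1.0] * 5,
}
print(select_support(target, candidates, k=2))
```

Correlation is cheap and scale-invariant, which suits the per-series scaling forecasters typically apply, but it ignores lags and seasonality; metadata such as product category or sensor location could serve as an alternative filter.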

FAQs

1) What is Google’s “in-context fine-tuning” (ICF) for time series?

ICF is continued pre-training that conditions TimesFM to use multiple related series placed in the prompt at inference, enabling few-shot adaptation without per-dataset gradient updates.

2) How does ICF differ from standard fine-tuning and zero-shot use?

Standard fine-tuning updates weights per dataset; zero-shot uses a fixed model with only the target history. ICF keeps weights fixed at deployment but learns during pre-training how to leverage additional in-context examples, matching per-dataset fine-tuning on the reported benchmarks.

3) What architectural or training changes were introduced?

TimesFM is continued-pretrained on sequences that interleave the target history and support series, separated by special boundary tokens so causal attention can exploit cross-series structure; the rest of the decoder-only TimesFM stack stays intact.

4) What do the results show relative to baselines?

On out-of-domain suites, ICF improves over TimesFM base and reaches parity with supervised fine-tuning; it is evaluated against strong time-series baselines (e.g., PatchTST) and prior FMs (e.g., Chronos).

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.