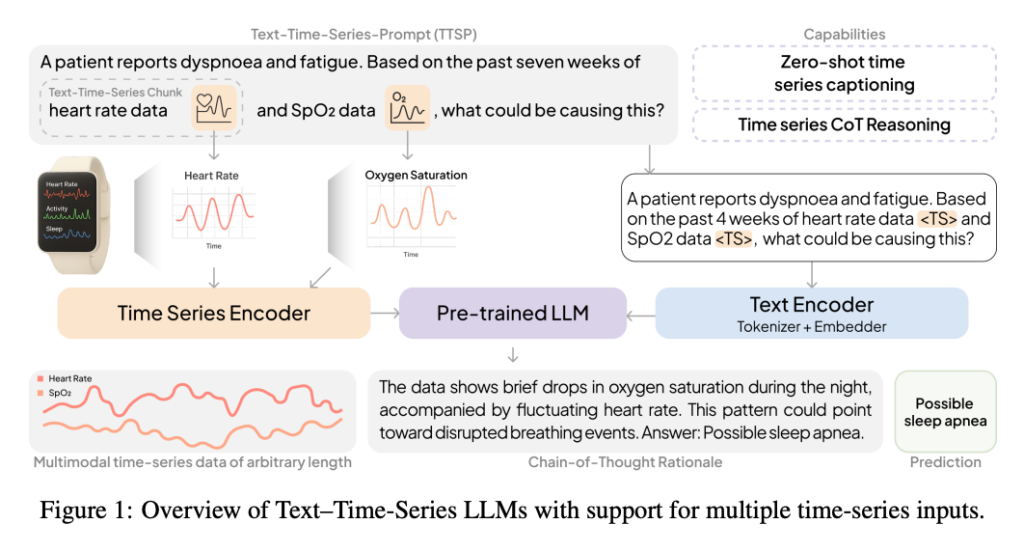

A significant development is set to transform AI in healthcare. Researchers at Stanford University, in collaboration with ETH Zurich and tech leaders including Google Research and Amazon, have released OpenTSLM, a novel family of Time-Series Language Models (TSLMs).

This breakthrough addresses a critical limitation in current LLMs by enabling them to interpret and reason over complex, continuous medical time-series data, such as ECGs, EEGs, and wearable sensor streams, a feat where even frontier models like GPT-4o have struggled.

The Critical Blind Spot: LLM Limitations in Time-Series Analysis

Medicine is fundamentally temporal. Accurate diagnosis relies heavily on tracking how vital signs, biomarkers, and complex signals evolve. Despite the proliferation of digital health technology, today’s most advanced AI models have struggled to process this raw, continuous data.

The core challenge lies in the “modality gap”, the difference between continuous signals (like a heartbeat) and the discrete text tokens that LLMs understand. Previous attempts to bridge this gap by converting signals into text have proven inefficient and difficult to scale.
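To get a feel for why signal-to-text conversion scales badly, the sketch below serializes a synthetic trace as comma-separated numbers and measures how much text it produces. The 500 Hz sampling rate, the 10-second window, the sine-wave stand-in, and the function names are all illustrative assumptions, and character count is used only as a rough proxy for token count.

```python
import math

def synthetic_signal(seconds, rate_hz):
    """Hypothetical stand-in for a sensor trace: a 1.2 Hz sine wave."""
    n = int(seconds * rate_hz)
    return [math.sin(2 * math.pi * 1.2 * i / rate_hz) for i in range(n)]

def serialize_signal(samples, decimals=3):
    """Render samples as comma-separated text, as a text-only prompt would."""
    return ",".join(f"{s:.{decimals}f}" for s in samples)

# Assumed recording: 10 seconds sampled at 500 Hz (illustrative values).
signal = synthetic_signal(seconds=10, rate_hz=500)
text = serialize_signal(signal)
chars_per_sample = len(text) / len(signal)

print(f"{len(signal)} samples -> {len(text)} characters "
      f"({chars_per_sample:.1f} characters per sample)")
```

Every sample costs several characters before the model sees any question about the data, so the prompt grows in step with the length of the recording.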

Why Vision-Language Models (VLMs) Fail at Time-Series Data

A common workaround has been to convert time-series data into static images (line plots) and input them into advanced Vision-Language Models (VLMs). However, the OpenTSLM research demonstrates this approach is surprisingly ineffective for precise medical data analysis.

VLMs are primarily trained on natural images; they recognize objects and scenes, not the dense, sequential dynamics of data visualizations. When high-frequency signals like an ECG are rendered into pixels, crucial fine-grained information is lost. Subtle temporal dependencies and high-frequency changes, vital for identifying heart arrhythmias or specific sleep stages, become obscured.
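One way to see how pixels discard temporal detail is a toy rasterizer. The sketch below is a simplification and not the rendering used in the study: it assumes a scatter-style plot with no connecting lines or anti-aliasing, a hypothetical 250 x 100 pixel canvas, and a synthetic two-tone signal. Because 20 samples share each pixel column, reordering the samples inside a column produces a different signal that renders to exactly the same image.

```python
import math

def rasterize(samples, width, height, lo=-1.0, hi=1.0):
    """Map samples onto a width x height grid; return the set of lit pixels."""
    lit = set()
    n = len(samples)
    for i, s in enumerate(samples):
        col = i * width // n  # many consecutive samples share one column
        row = min(height - 1, int((s - lo) / (hi - lo) * height))
        lit.add((col, row))
    return lit

def reverse_within_blocks(samples, block):
    """Reverse the sample order inside each block of `block` samples."""
    out = []
    for start in range(0, len(samples), block):
        out.extend(reversed(samples[start:start + block]))
    return out

N, WIDTH, HEIGHT = 5000, 250, 100  # illustrative sizes: 20 samples per column
original = [0.8 * math.sin(2 * math.pi * 5 * i / N)
            + 0.2 * math.sin(2 * math.pi * 60 * i / N) for i in range(N)]
scrambled = reverse_within_blocks(original, N // WIDTH)

same_image = rasterize(original, WIDTH, HEIGHT) == rasterize(scrambled, WIDTH, HEIGHT)
print("signals differ:", original != scrambled, "| images identical:", same_image)
```

Real plots draw connected lines, so the loss is less extreme in practice, but the same limit applies: anything that happens inside one pixel column cannot be ordered from the image alone.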

The study confirms that VLMs struggle significantly when analyzing these plots, highlighting that time series must be treated as a distinct data modality, not merely a picture.

Introducing OpenTSLM: A Native Modality Approach

OpenTSLM integrates time series as a native modality directly into pretrained LLMs (such as Llama and Gemma), enabling natural language querying and reasoning over complex health data.

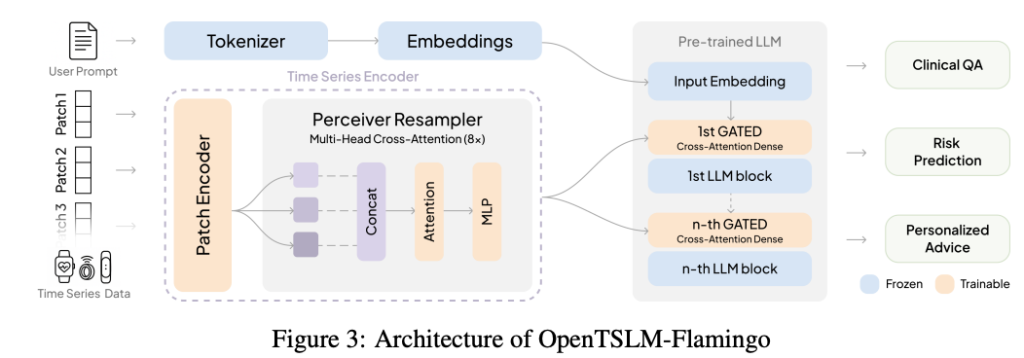

The research team explored two distinct architectures:

Architecture Deep Dive: SoftPrompt vs. Flamingo

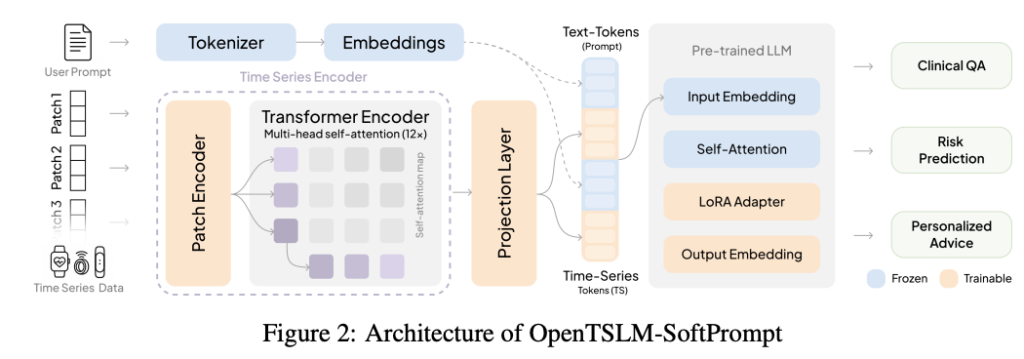

1. OpenTSLM-SoftPrompt (Implicit Modeling)

This approach encodes time-series data into learnable tokens, which are then combined with text tokens (soft prompting). While efficient for short data bursts, this method scales poorly. Longer sequences require exponentially more memory, making it impractical for comprehensive analysis.
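The scaling problem starts with sequence length. The sketch below only counts how many tokens the LLM would see if a series were split into fixed-size patches and each patch became one soft-prompt token. The patch size of 4, the 50-token text prompt, and the function name are hypothetical values chosen for illustration, and the sketch does not model memory use itself.

```python
def soft_prompt_length(n_samples, patch_size, n_text_tokens):
    """Tokens seen by the LLM: one per time-series patch plus the text tokens."""
    n_patches = -(-n_samples // patch_size)  # ceiling division
    return n_patches + n_text_tokens

# Hypothetical settings: patches of 4 samples and a 50-token text prompt.
for n_samples in (1_000, 10_000, 100_000):
    total = soft_prompt_length(n_samples, patch_size=4, n_text_tokens=50)
    print(f"{n_samples:>7} samples -> {total:>6} tokens in the LLM context")
```

The context grows with every additional second of data, which is the property the Flamingo-style variant is designed to avoid.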

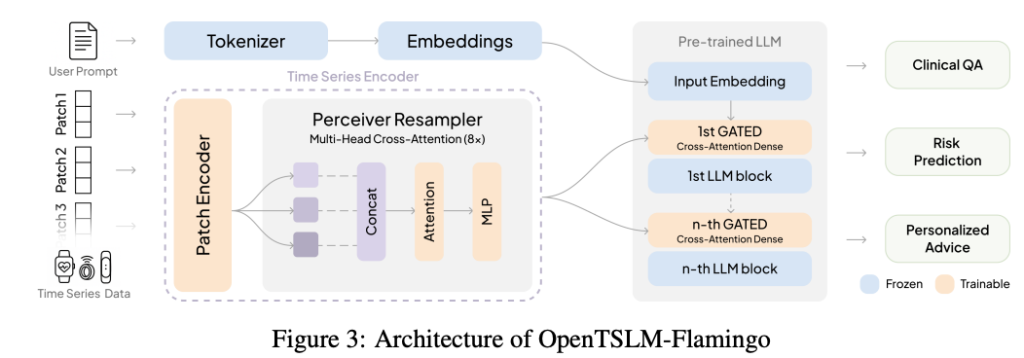

2. OpenTSLM-Flamingo (Explicit Modeling)

Inspired by the Flamingo architecture, this is the breakthrough solution for scalability. It explicitly models time series as a separate modality. It uses a specialized encoder and a Perceiver Resampler to create a fixed-size representation of the data, regardless of its length, and fuses it with text using gated cross-attention.
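The two ideas in this design can be sketched in a few lines of plain Python. This is a minimal illustration under heavy assumptions, not the OpenTSLM implementation: it uses a single attention head, no learned projections, keys that double as values, random vectors in place of encoder outputs, and hypothetical sizes (8 dimensions, 4 latent queries). It shows that a fixed set of latent queries yields the same output size for any input length, and that a tanh gate at zero leaves the text states untouched.

```python
import math
import random

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attend(queries, keys_values):
    """Each query attends over all vectors (keys double as values here)."""
    dim = len(queries[0])
    out = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(dim) for k in keys_values])
        out.append([sum(w * kv[d] for w, kv in zip(weights, keys_values))
                    for d in range(dim)])
    return out

def resample(encoded_series, latents):
    """Perceiver-style step: fixed latent queries summarize any-length input."""
    return cross_attend(latents, encoded_series)

def gated_cross_attention(text_states, series_summary, gate):
    """Add attended series information to text states, scaled by tanh(gate)."""
    attended = cross_attend(text_states, series_summary)
    g = math.tanh(gate)
    return [[t + g * a for t, a in zip(ts, at)]
            for ts, at in zip(text_states, attended)]

def random_vectors(rng, count, dim):
    return [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(count)]

rng = random.Random(0)
DIM, N_LATENTS = 8, 4  # hypothetical sizes
latents = random_vectors(rng, N_LATENTS, DIM)
text_states = random_vectors(rng, 3, DIM)
short_series = random_vectors(rng, 50, DIM)     # stand-in encoder outputs
long_series = random_vectors(rng, 5000, DIM)

short_summary = resample(short_series, latents)
long_summary = resample(long_series, latents)
print(len(short_summary), len(long_summary))  # both equal N_LATENTS

unchanged = gated_cross_attention(text_states, long_summary, gate=0.0)
print(unchanged == text_states)  # a zero gate passes text through as-is
```

In the original Flamingo design the gate starts at zero, so the pretrained language model behaves exactly as before at the start of training and the new modality is blended in gradually.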

OpenTSLM-Flamingo maintains stable memory requirements even with extensive data streams. For instance, during training on complex ECG data analysis, the Flamingo variant required only 40 GB of VRAM, compared to 110 GB for the SoftPrompt variant using the same LLM backbone.

Performance Breakthroughs: Outperforming GPT-4o

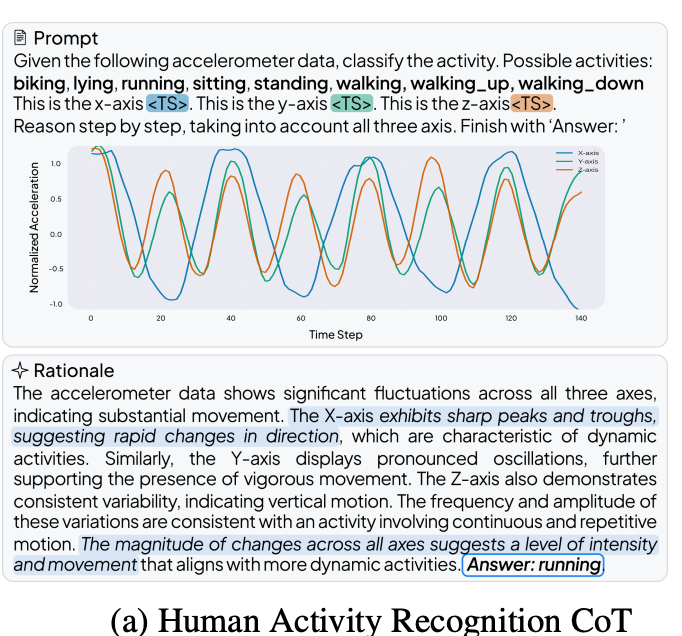

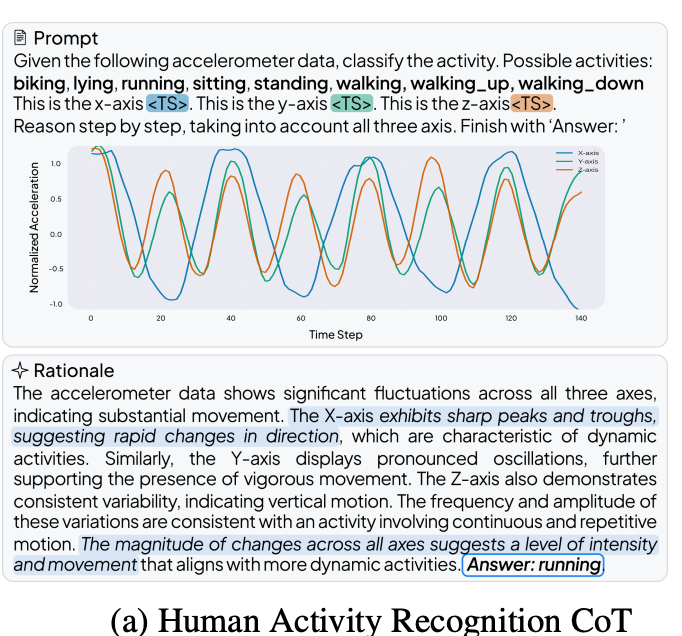

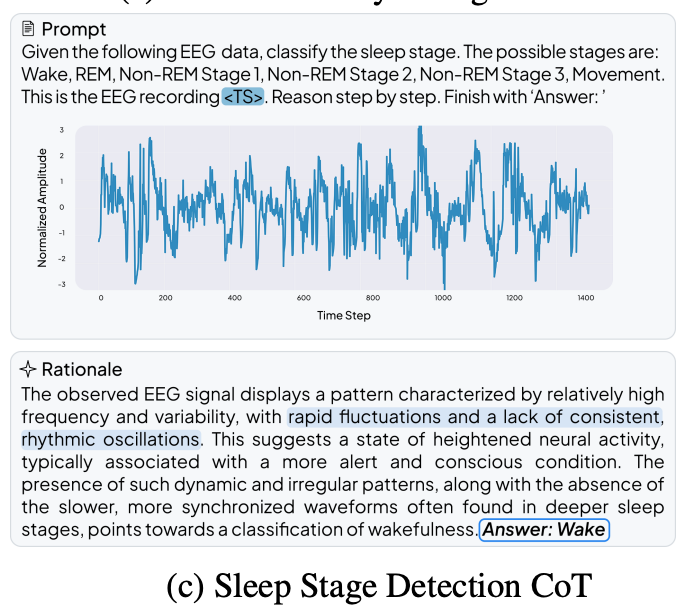

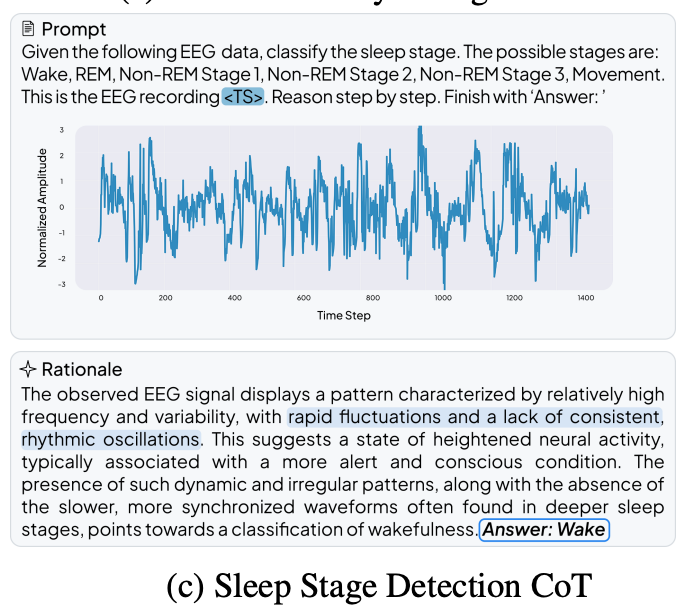

The results demonstrate the clear superiority of the specialized TSLM approach. To benchmark performance, the team created three new Chain-of-Thought (CoT) datasets focused on medical reasoning: HAR-CoT (activity recognition), Sleep-CoT (EEG sleep staging), and ECG-QA-CoT (ECG question answering).

- Sleep Staging: OpenTSLM achieved a 69.9% F1 score, vastly outperforming the best fine-tuned text-only baseline (9.05%).

- Activity Recognition: OpenTSLM reached a 65.4% F1 score.
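For readers unfamiliar with the metric, the sketch below computes a macro-averaged F1 score on a handful of made-up sleep-stage labels. Whether the reported figures use macro averaging is an assumption here, and the labels and predictions are toy values, not data from the study.

```python
def f1_for_label(y_true, y_pred, label):
    """F1 for one class: harmonic mean of precision and recall."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over every label that appears."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(f1_for_label(y_true, y_pred, lab) for lab in labels) / len(labels)

# Toy labels for illustration only.
y_true = ["wake", "wake", "rem", "rem", "n2", "n2"]
y_pred = ["wake", "rem", "rem", "rem", "n2", "wake"]
print(f"macro F1 = {macro_f1(y_true, y_pred):.3f}")
```

Macro averaging weights every class equally, so a model cannot score well by predicting only the most common sleep stage or activity.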

Here is an example of human activity recognition CoT:

Here is an example of sleep activity detection:

Remarkably, even small-scale OpenTSLM models (1 billion parameters) significantly surpassed GPT-4o. Whether processing the data as text tokens (where GPT-4o scored only 15.47% on Sleep-CoT) or as images, the frontier model failed to match the specialized TSLMs.

This finding underscores that specialized, domain-adapted AI architectures can achieve superior results without massive scale, paving the way for efficient, on-device medical AI deployment.

Clinical Validation at Stanford Hospital: Ensuring Trust and Transparency

A crucial element of medical AI is trust. Unlike traditional models that output a single classification, OpenTSLM generates human-readable rationales (Chain-of-Thought), explaining its predictions. This AI transparency is vital for clinical settings.

To validate the quality of this reasoning, an expert review was conducted with five cardiologists from Stanford Hospital. They assessed the rationales generated by the OpenTSLM-Flamingo model for ECG interpretation.

The evaluation found that the model provided a correct or partially correct ECG interpretation in an impressive 92.9% of cases. The model showed exceptional strength in integrating clinical context (85.1% positive assessments), demonstrating sophisticated reasoning capabilities over raw sensor data.

The Future of Multimodal Machine Learning

The introduction of OpenTSLM marks a significant advancement in multimodal machine learning. By effectively bridging the gap between LLMs and time-series data, this research lays the foundation for general-purpose TSLMs capable of handling diverse longitudinal data, not just in healthcare, but also in finance, industrial monitoring, and beyond.

To accelerate innovation in the field, the Stanford and ETH Zurich teams have open-sourced all code, datasets, and trained model weights.


Jean-marc is a successful AI business executive. He leads and accelerates growth for AI-powered solutions and started a computer vision company in 2006. He is a recognized speaker at AI conferences and has an MBA from Stanford.
