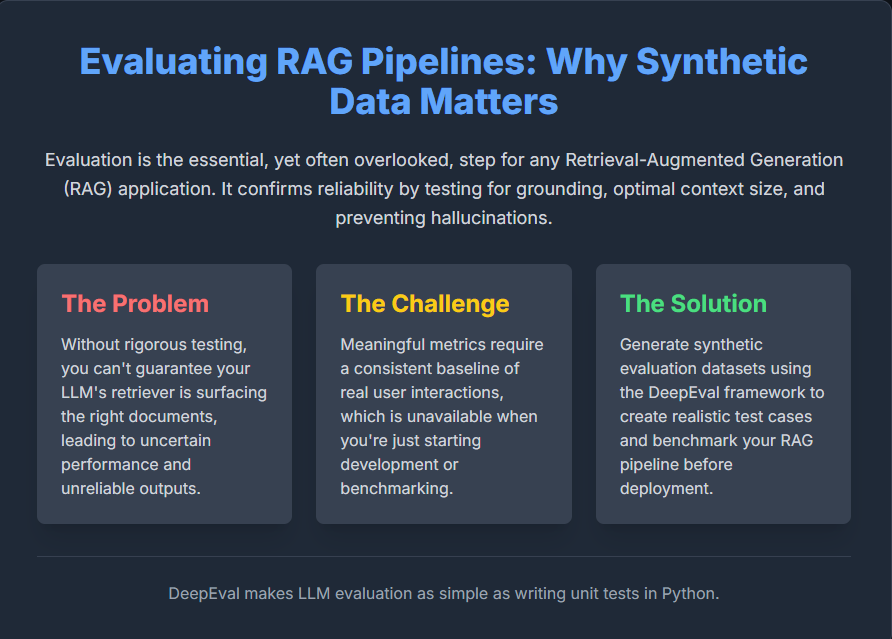

Evaluating LLM applications, particularly those using RAG (Retrieval-Augmented Generation), is crucial but often neglected. Without proper evaluation, it is almost impossible to confirm whether your system's retriever is effective, whether the LLM's answers are grounded in the sources (or hallucinated), and whether the context size is optimal.

Since initial testing lacks the real user data needed for a baseline, a practical solution is synthetic evaluation datasets. This article shows you how to generate these realistic test cases using DeepEval, an open-source framework that simplifies LLM evaluation, allowing you to benchmark your RAG pipeline before it goes live.

Installing the dependencies

!pip install deepeval chromadb tiktoken pandas

OpenAI API Key

Since DeepEval leverages external language models to compute its detailed evaluation metrics, an OpenAI API key is required for this tutorial to run.

- If you are new to the OpenAI platform, you may need to add billing details and make a small minimum payment (typically $5) to fully activate your API access.
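One way to supply the key without hard-coding it in the notebook is sketched below. The helper name `ensure_openai_key` is hypothetical and not part of DeepEval; the sketch only assumes that the key is read from the standard `OPENAI_API_KEY` environment variable.

```python
import os
from getpass import getpass


def ensure_openai_key(env=os.environ, prompt=getpass):
    """Return the OpenAI API key, asking for it only when it is not already set.

    Hypothetical helper for this tutorial; `env` and `prompt` are injectable
    so the function can be exercised without a real terminal.
    """
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        # Hidden prompt, so the key is not echoed into the notebook output.
        key = prompt("Enter your OpenAI API key: ").strip()
        if not key:
            raise ValueError("An OpenAI API key is required to run this tutorial.")
        env["OPENAI_API_KEY"] = key
    return key
```

Calling `ensure_openai_key()` once, before any Synthesizer is created, should be enough for the rest of the session.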

Defining the text

In this step, we manually create a text variable that will act as our source document for generating synthetic evaluation data.

This text combines diverse factual content across multiple domains (biology, physics, history, space exploration, environmental science, medicine, computing, and ancient civilizations) to ensure the LLM has rich and varied material to work with.

DeepEval's Synthesizer will later:

- Split this text into semantically coherent chunks,

- Select meaningful contexts suitable for generating questions, and

- Produce synthetic "golden" pairs, (input, expected_output), that simulate real user queries and ideal LLM responses.
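To make the first of these steps concrete, here is a toy chunker. It is only an illustration under simple assumptions (sentence boundaries and a character budget), not DeepEval's actual chunking logic, and the name `chunk_sentences` is hypothetical.

```python
import re


def chunk_sentences(text, max_chars=200):
    """Greedily pack whole sentences into chunks of roughly max_chars characters.

    A single sentence longer than max_chars is kept whole as its own chunk.
    """
    # Normalise whitespace, then split after sentence-ending punctuation.
    sentences = re.split(r"(?<=[.!?])\s+", " ".join(text.split()))
    chunks, current = [], ""
    for sentence in sentences:
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            # The next sentence would overflow the budget: close this chunk.
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


sample = "Crows use tools. Archerfish shoot water. Superconductors have zero resistance."
for chunk in chunk_sentences(sample, max_chars=40):
    print(chunk)
```

A real synthesizer groups text by meaning rather than by length alone, so treat this as a mental model for what a "chunk" is, nothing more.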

After defining the text variable, we save it as a .txt file so that DeepEval can read and process it later. You can use any other text document of your choice, such as a Wikipedia article, research summary, or technical blog post, as long as it contains informative and well-structured content.

text = """
Crows are among the smartest birds, capable of using tools and recognizing human faces even after years.
In contrast, the archerfish displays remarkable precision, shooting jets of water to knock insects off branches.
Meanwhile, in the world of physics, superconductors can carry electric current with zero resistance -- a phenomenon
discovered over a century ago but still unlocking new technologies like quantum computers today.
Moving to history, the Library of Alexandria was once the largest center of learning, but much of its collection was
lost in fires and wars, becoming a symbol of human curiosity and fragility. In space exploration, the Voyager 1 probe,
launched in 1977, has now left the solar system, carrying a golden record that captures sounds and images of Earth.
Closer to home, the Amazon rainforest produces roughly 20% of the world's oxygen, while coral reefs -- often called the
"rainforests of the sea" -- support nearly 25% of all marine life despite covering less than 1% of the ocean floor.
In medicine, MRI scanners use strong magnetic fields and radio waves
to generate detailed images of organs without harmful radiation.
In computing, Moore's Law observed that the number of transistors
on microchips doubles roughly every two years, though recent advances
in AI chips have shifted that trend.
The Mariana Trench is the deepest part of Earth's oceans,
reaching nearly 11,000 meters below sea level, deeper than Mount Everest is tall.
Ancient civilizations like the Sumerians and Egyptians invented
mathematical systems thousands of years before modern algebra emerged.
"""

# Save the source text so DeepEval can read it from disk
with open("example.txt", "w") as f:
    f.write(text)

Generating Synthetic Evaluation Data

In this code, we use the Synthesizer class from the DeepEval library to automatically generate synthetic evaluation data, also called goldens, from an existing document. The model "gpt-4.1-nano" is selected for its lightweight nature. We provide the path to our document (example.txt), which contains factual and descriptive content across diverse topics like physics, ecology, and computing. The synthesizer processes this text to create meaningful question-answer pairs (goldens) that can later be used to test and benchmark LLM performance on comprehension or retrieval tasks.

The script successfully generates up to six synthetic goldens. The generated examples are quite rich: for instance, one input asks to "Evaluate the cognitive abilities of corvids in facial recognition tasks," while another explores "Amazon's oxygen contribution and its role in ecosystems." Each output includes a coherent expected answer and contextual snippets derived directly from the document, demonstrating how DeepEval can automatically produce high-quality synthetic datasets for LLM evaluation.

from deepeval.synthesizer import Synthesizer

synthesizer = Synthesizer(model="gpt-4.1-nano")

# Generate synthetic goldens from your document
synthesizer.generate_goldens_from_docs(
    document_paths=["example.txt"],
    include_expected_output=True
)

# Print the first three generated goldens
for golden in synthesizer.synthetic_goldens[:3]:
    print(golden, "\n")

Using EvolutionConfig to Control Input Complexity

In this step, we configure the EvolutionConfig to influence how the DeepEval synthesizer generates more complex and diverse inputs. By assigning weights to different evolution types, such as REASONING, MULTICONTEXT, COMPARATIVE, HYPOTHETICAL, and IN_BREADTH, we guide the model to create questions that vary in reasoning style, context usage, and depth.

The num_evolutions parameter specifies how many evolution strategies will be applied to each text chunk, allowing multiple perspectives to be synthesized from the same source material. This approach helps generate richer evaluation datasets that test an LLM's ability to handle nuanced and multi-faceted queries.
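As a rough mental model of how weights and num_evolutions interact, the sketch below draws evolution types in proportion to their weights. It is an assumption-laden illustration and not DeepEval's internal code: the string names stand in for the Evolution enum members, sampling with replacement is assumed, and `sample_evolutions` is a hypothetical helper.

```python
import random

# Hypothetical stand-ins for the Evolution enum members named above.
EVOLUTION_WEIGHTS = {
    "REASONING": 1 / 5,
    "MULTICONTEXT": 1 / 5,
    "COMPARATIVE": 1 / 5,
    "HYPOTHETICAL": 1 / 5,
    "IN_BREADTH": 1 / 5,
}


def sample_evolutions(weights, num_evolutions, rng=None):
    """Draw num_evolutions evolution names, each pick proportional to its weight."""
    # Assumption for this sketch: the weights form a probability distribution.
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("Evolution weights should sum to 1.")
    rng = rng or random.Random()
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=num_evolutions)


# Three evolution steps for one chunk, mirroring num_evolutions=3.
print(sample_evolutions(EVOLUTION_WEIGHTS, num_evolutions=3, rng=random.Random(0)))
```

Raising one weight (and lowering the others to compensate) would make that question style appear more often across the generated goldens.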

The output demonstrates how this configuration affects the generated goldens. For instance, one input asks about crows' tool use and facial recognition, prompting the LLM to provide a detailed answer covering problem-solving and adaptive behavior. Another input compares Voyager 1's golden record with the Library of Alexandria, requiring reasoning across multiple contexts and historical significance.

Each golden includes the original context, applied evolution types (e.g., Hypothetical, In-Breadth, Reasoning), and a synthetic quality score. Even with a single document, this evolution-based approach creates diverse, high-quality synthetic evaluation examples for testing LLM performance.

from deepeval.synthesizer.config import EvolutionConfig, Evolution

# Equal weight for each evolution type; three evolution steps per chunk
evolution_config = EvolutionConfig(
    evolutions={
        Evolution.REASONING: 1/5,
        Evolution.MULTICONTEXT: 1/5,
        Evolution.COMPARATIVE: 1/5,
        Evolution.HYPOTHETICAL: 1/5,
        Evolution.IN_BREADTH: 1/5,
    },
    num_evolutions=3
)

# Synthesizer was imported in the previous step
synthesizer = Synthesizer(evolution_config=evolution_config)
synthesizer.generate_goldens_from_docs(["example.txt"])

This ability to generate high-quality, complex synthetic data is how we bypass the initial hurdle of lacking real user interactions. By leveraging DeepEval's Synthesizer, especially when guided by the EvolutionConfig, we move far beyond simple question-and-answer pairs.

The framework allows us to create rigorous test cases that probe the RAG system's limits, covering everything from multi-context comparisons and hypothetical scenarios to complex reasoning.

This rich, custom-built dataset provides a consistent and diverse baseline for benchmarking, allowing you to continuously iterate on your retrieval and generation components, build confidence in your RAG pipeline's grounding capabilities, and ensure it delivers reliable performance long before it ever handles its first live query.
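For the dataset to serve as a consistent baseline, it helps to persist it once and reload it on every run. The sketch below flattens golden-like objects into plain dictionaries and writes them as JSON Lines. It assumes only the `input`, `expected_output`, and `context` attributes mentioned in this tutorial; the helper names and the demo object are hypothetical stand-ins, not DeepEval API.

```python
import json
from types import SimpleNamespace


def goldens_to_rows(goldens):
    """Flatten golden-like objects into plain dicts for saving or inspection."""
    return [
        {
            "input": getattr(g, "input", None),
            "expected_output": getattr(g, "expected_output", None),
            "context": getattr(g, "context", None),
        }
        for g in goldens
    ]


def save_rows(rows, path):
    """Write one JSON object per line so the baseline can be reloaded later."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")


# Stand-in object; in this tutorial the list would be synthesizer.synthetic_goldens.
demo = [
    SimpleNamespace(
        input="What can crows recognize?",
        expected_output="Human faces, even after years.",
        context=["Crows are among the smartest birds."],
    )
]
print(goldens_to_rows(demo))
```

A call such as `save_rows(goldens_to_rows(synthesizer.synthetic_goldens), "goldens.jsonl")` would then freeze the baseline; the file name is only an example.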

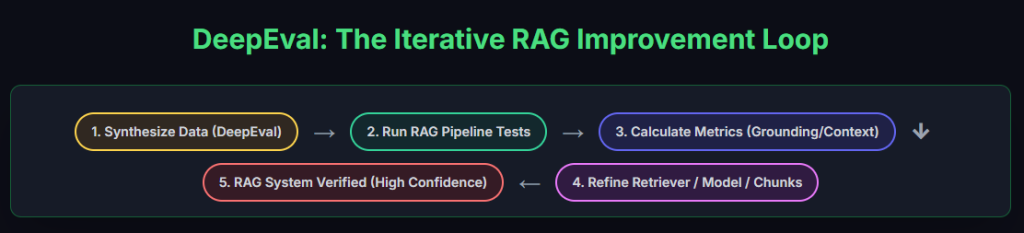

The Iterative RAG Improvement Loop above uses DeepEval's synthetic data to establish a continuous, rigorous testing cycle for your pipeline. By calculating essential metrics like Grounding and Context, you gain the feedback needed to iteratively refine your retriever and model components. This systematic process helps you arrive at a verified, high-confidence RAG system whose reliability is established before deployment.
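The feedback step of such a loop can be sketched with a deliberately crude stand-in for a grounding metric: the share of answer tokens that also occur in the retrieved context. This token-overlap proxy is an assumption made for illustration, not one of DeepEval's LLM-based metrics, and the function names and threshold are hypothetical.

```python
import re


def _tokens(text):
    """Lowercased alphanumeric tokens, as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounding_proxy(answer, context_chunks):
    """Fraction of answer tokens that also appear in the retrieved context (0.0 to 1.0)."""
    answer_tokens = _tokens(answer)
    if not answer_tokens:
        return 0.0
    context_tokens = _tokens(" ".join(context_chunks))
    return len(answer_tokens & context_tokens) / len(answer_tokens)


def flag_weak_cases(cases, threshold=0.9):
    """Return the inputs whose answers score below the grounding threshold."""
    return [
        case["input"]
        for case in cases
        if grounding_proxy(case["answer"], case["context"]) < threshold
    ]


context = ["The Voyager 1 probe, launched in 1977, has left the solar system."]
cases = [
    {"input": "When did Voyager 1 launch?", "answer": "Voyager 1 launched in 1977", "context": context},
    {"input": "When was Voyager 1 sent?", "answer": "Voyager 1 launched in 1990", "context": context},
]
print(flag_weak_cases(cases))
```

Cases flagged this way point at either a retriever that missed the relevant chunk or a model that drifted from it, which is the signal the loop uses to decide what to refine next.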


I am a Civil Engineering Graduate (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in Data Science, especially Neural Networks and their application in various areas.
