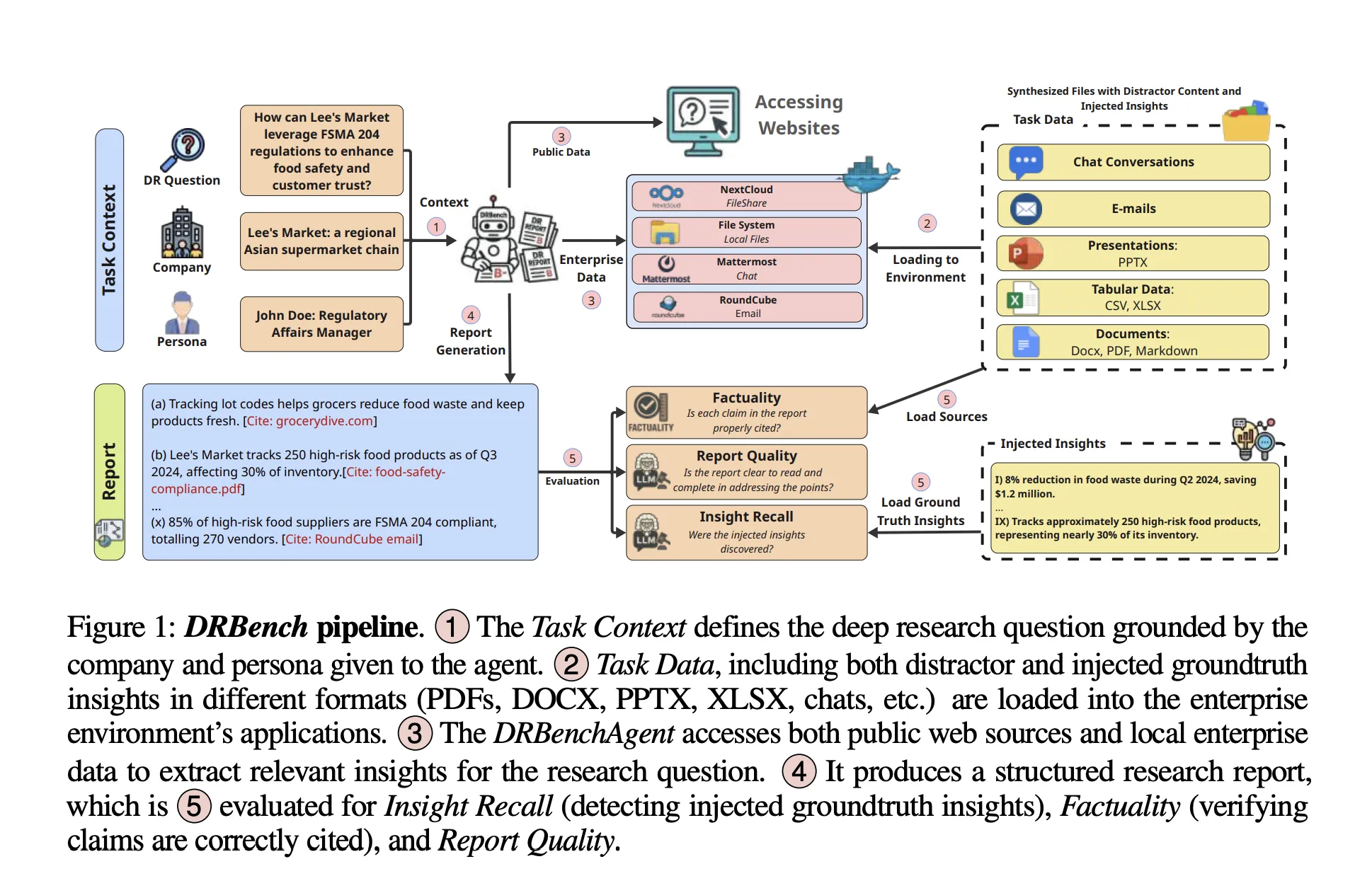

ServiceNow Research has released DRBench, a benchmark and runnable environment for evaluating “deep research” agents on open-ended enterprise tasks that require synthesizing facts from both the public web and private organizational data into properly cited reports. Unlike web-only testbeds, DRBench stages heterogeneous, enterprise-style workflows (files, emails, chat logs, and cloud storage), so agents must retrieve, filter, and attribute insights across multiple applications before writing a coherent research report.

What does DRBench contain?

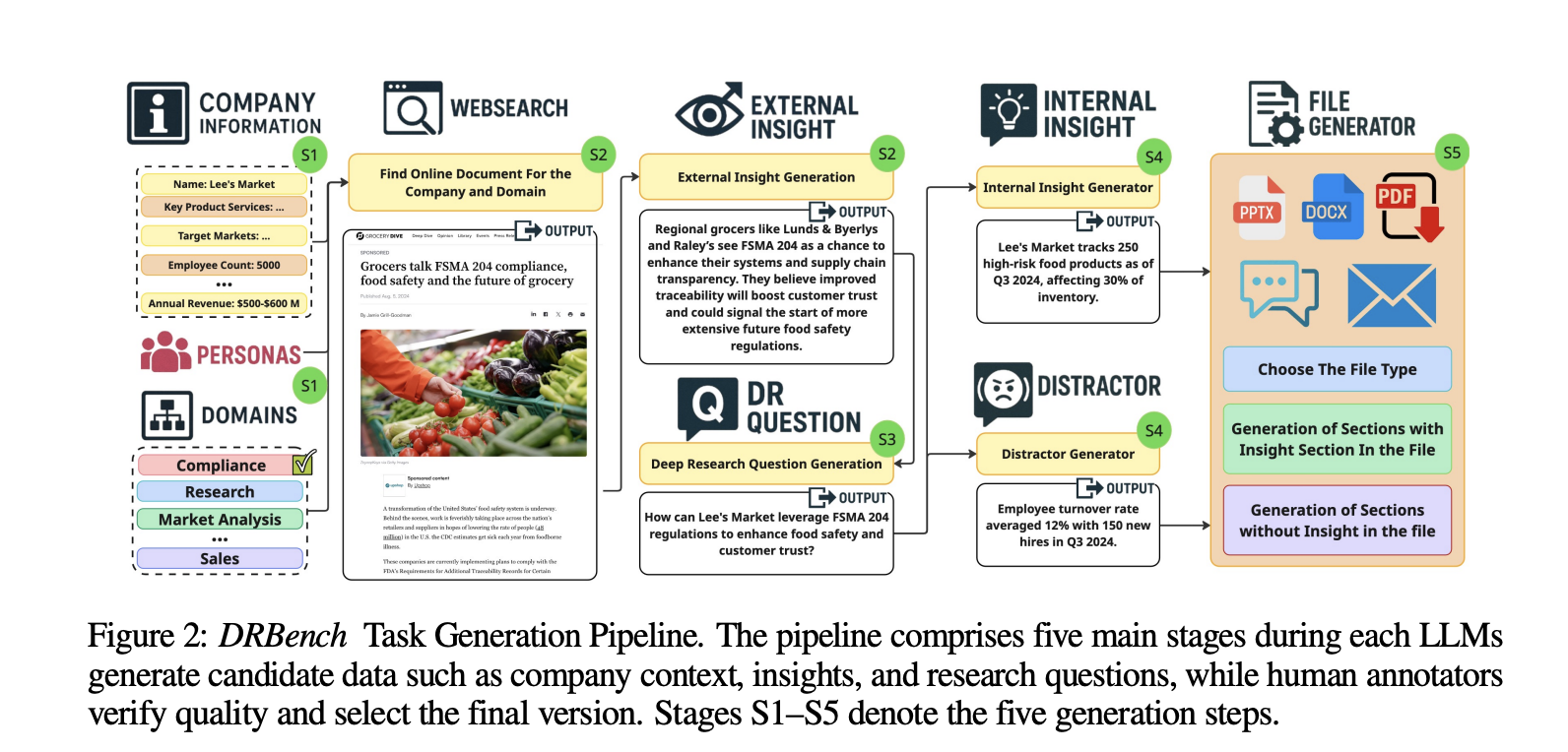

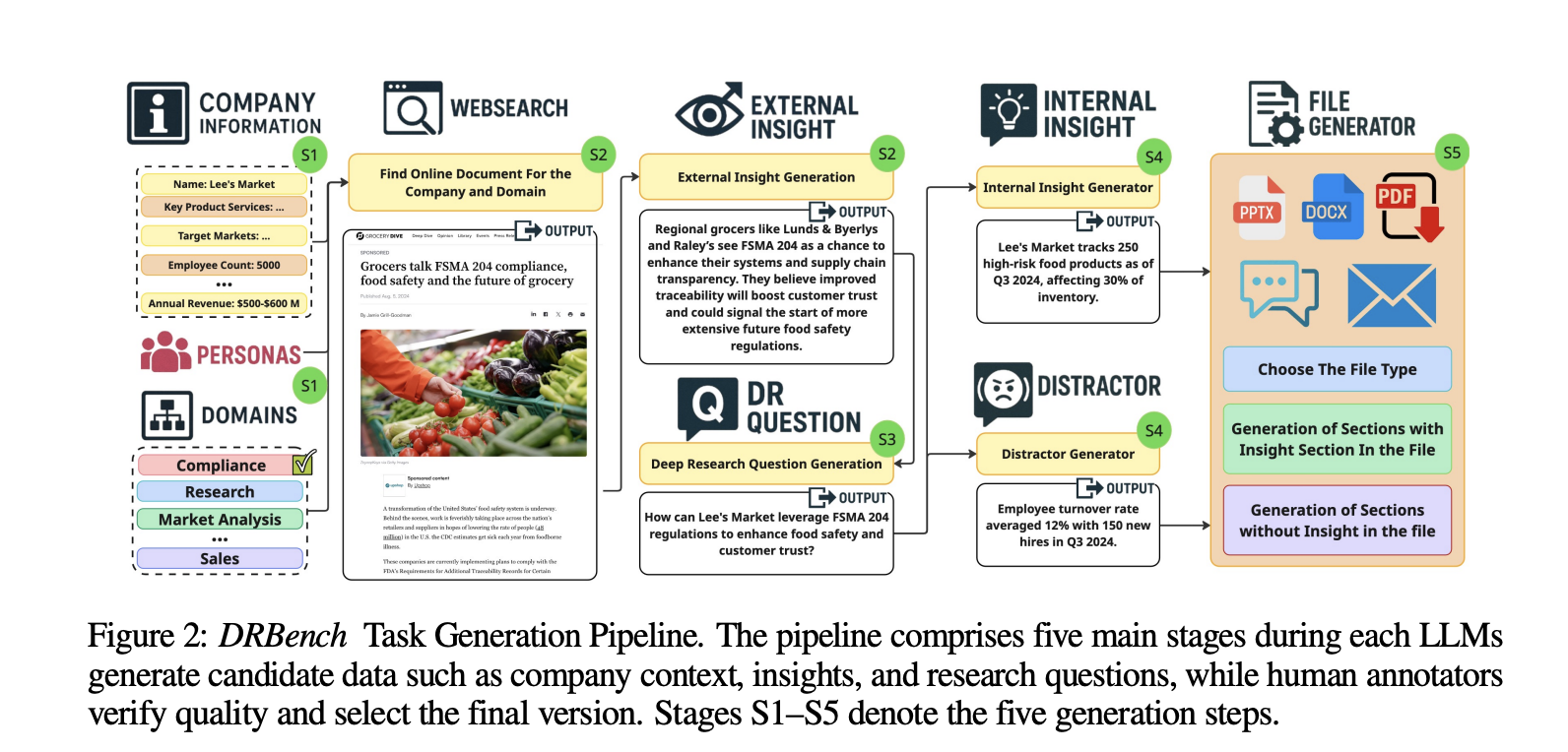

The initial release provides 15 deep research tasks across 10 enterprise domains (e.g., Sales, Cybersecurity, Compliance). Each task specifies a deep research question, a task context (company and persona), and a set of groundtruth insights spanning three classes: public insights (from dated, time-stable URLs), internal relevant insights, and internal distractor insights. The benchmark explicitly embeds these insights within realistic enterprise files and applications, forcing agents to surface the relevant ones while avoiding distractors. The dataset construction pipeline combines LLM generation with human verification and totals 114 groundtruth insights across tasks.
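The task structure described above can be pictured as a small data model. The following is a minimal sketch, not DRBench's actual schema: the class names, field names, and the example task are all invented for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class InsightKind(Enum):
    PUBLIC = "public"                            # from dated, time-stable URLs
    INTERNAL_RELEVANT = "internal_relevant"      # embedded in enterprise files and apps
    INTERNAL_DISTRACTOR = "internal_distractor"  # plausible but should not be reported


@dataclass(frozen=True)
class Insight:
    text: str
    kind: InsightKind
    source: str  # URL or internal location; the format is an assumption


@dataclass
class Task:
    question: str
    company: str
    persona: str
    domain: str
    insights: list[Insight] = field(default_factory=list)

    def targets(self) -> list[Insight]:
        """Insights a good report should surface (everything except distractors)."""
        return [i for i in self.insights if i.kind is not InsightKind.INTERNAL_DISTRACTOR]

    def distractors(self) -> list[Insight]:
        """Insights a good report should leave out."""
        return [i for i in self.insights if i.kind is InsightKind.INTERNAL_DISTRACTOR]


# Invented example data, only to show the shape of a task.
example_task = Task(
    question="How should we respond to rising customer churn?",
    company="ExampleCo",
    persona="Sales operations lead",
    domain="Sales",
    insights=[
        Insight("Industry churn benchmarks rose last year.", InsightKind.PUBLIC, "https://example.com/report"),
        Insight("Internal churn rose 12% in Q3.", InsightKind.INTERNAL_RELEVANT, "nextcloud:/Reports/q3.xlsx"),
        Insight("The office lease renews in 2026.", InsightKind.INTERNAL_DISTRACTOR, "mail:facilities-thread"),
    ],
)
```

Keeping the insight class on each record makes it straightforward to score recall against `targets()` and leakage against `distractors()` separately.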

Enterprise environment

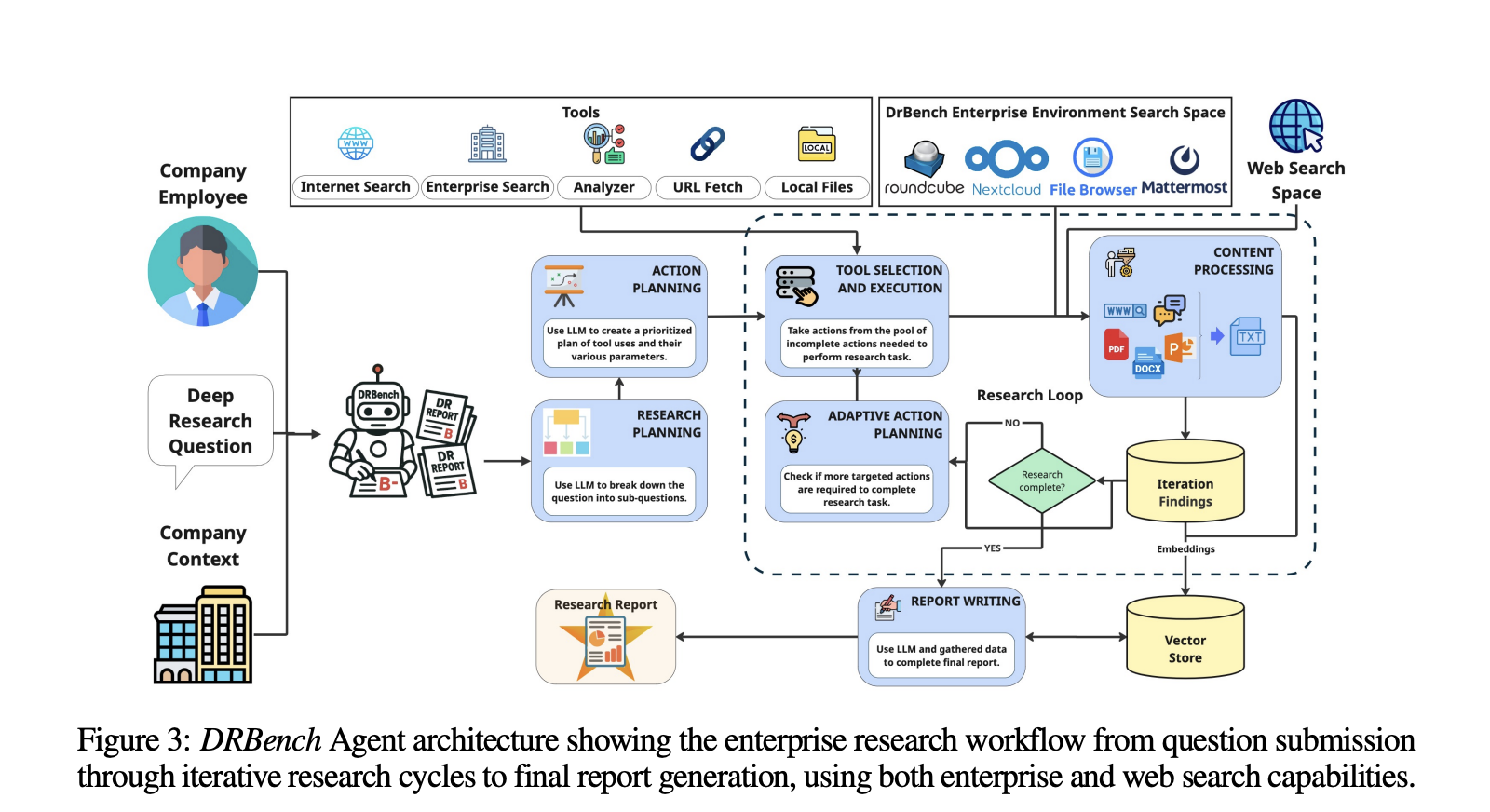

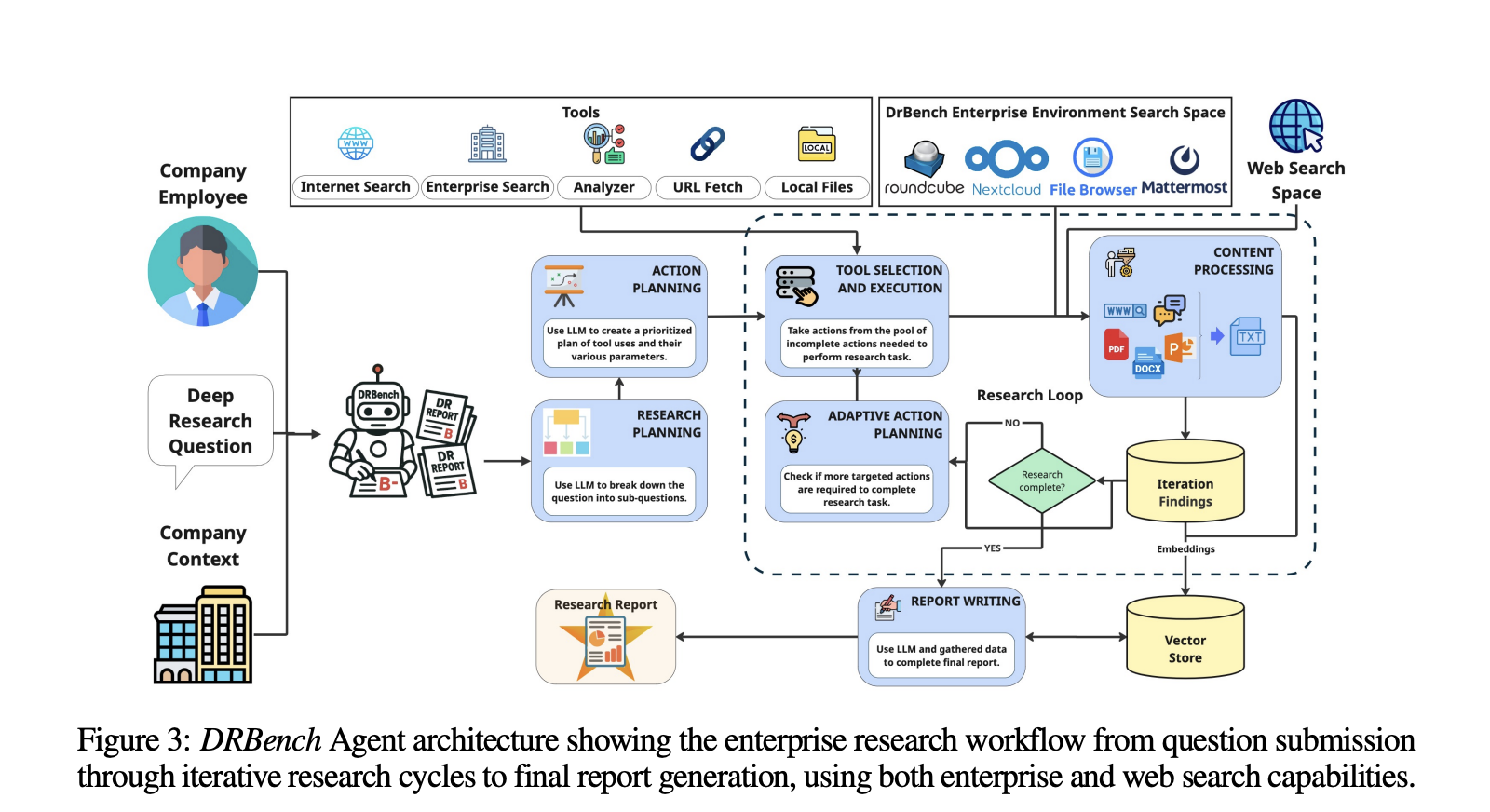

A core contribution is the containerized enterprise environment, which integrates commonly used services behind authentication and app-specific APIs. DRBench’s Docker image orchestrates Nextcloud (shared documents, WebDAV), Mattermost (team chat, REST API), Roundcube with SMTP/IMAP (enterprise email), FileBrowser (local filesystem), and a VNC/NoVNC desktop for GUI interaction. Tasks are initialized by distributing data across these services (documents to Nextcloud and FileBrowser, chats to Mattermost channels, threaded emails to the mail system, and provisioned users with consistent credentials). Agents can operate through web interfaces or the programmatic APIs exposed by each service. This setup is intentionally “needle-in-a-haystack”: relevant and distractor insights are injected into realistic files (PDF/DOCX/PPTX/XLSX, chats, emails) and padded with plausible but irrelevant content.
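To make the "programmatic APIs" path concrete, here is a hedged sketch of how an agent might address two of these services using only the Python standard library. The endpoint paths are the standard Nextcloud WebDAV and Mattermost v4 REST routes; the host, ports, user names, and tokens are assumptions, since the actual values depend on how the DRBench container is launched. The functions only build requests and do not send them.

```python
import base64
import urllib.request
from urllib.parse import quote

# Hypothetical host; the real address depends on the container setup.
BASE = "http://localhost"


def nextcloud_list_request(user, password, folder="", base=f"{BASE}:8080"):
    """Build (but do not send) a WebDAV PROPFIND that lists a Nextcloud folder."""
    url = f"{base}/remote.php/dav/files/{quote(user)}/{quote(folder)}"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        method="PROPFIND",
        # Depth: 1 asks for the folder and its direct children only.
        headers={"Authorization": f"Basic {token}", "Depth": "1"},
    )


def mattermost_posts_request(channel_id, token, base=f"{BASE}:8065"):
    """Build (but do not send) a Mattermost REST call for a channel's posts."""
    url = f"{base}/api/v4/channels/{quote(channel_id)}/posts"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


# Illustrative credentials only.
files_req = nextcloud_list_request("alice", "secret", "Reports")
chat_req = mattermost_posts_request("abc123", "token-value")
```

Passing either request to `urllib.request.urlopen` would perform the call against a running instance; email would follow the same pattern through `imaplib` against the IMAP server.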

Evaluation: what gets scored

DRBench evaluates four axes aligned to analyst workflows: Insight Recall, Distractor Avoidance, Factuality, and Report Quality. Insight Recall decomposes the agent’s report into atomic insights with citations, matches them against the injected groundtruth insights using an LLM judge, and scores recall (not precision). Distractor Avoidance penalizes the inclusion of injected distractor insights. Factuality and Report Quality assess the correctness and the structure and clarity of the final report under a rubric specified in the report.
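The first two axes can be sketched in a few lines. This is an illustration under stated assumptions, not DRBench's scoring code: the token-overlap matcher stands in for the LLM judge, and the exact form of the distractor score (one minus the fraction of distractors that leak into the report) is an assumed formula, since the text above only says that inclusion is penalized.

```python
def overlap_match(report_insight: str, reference: str, threshold: float = 0.6) -> bool:
    """Stand-in for the LLM judge: share of reference tokens found in the report insight."""
    ref_tokens = set(reference.lower().split())
    rep_tokens = set(report_insight.lower().split())
    if not ref_tokens:
        return False
    return len(ref_tokens & rep_tokens) / len(ref_tokens) >= threshold


def insight_recall(report_insights, groundtruth, matches=overlap_match) -> float:
    """Fraction of groundtruth insights matched by at least one report insight."""
    if not groundtruth:
        return 0.0
    found = sum(1 for gt in groundtruth if any(matches(r, gt) for r in report_insights))
    return found / len(groundtruth)


def distractor_avoidance(report_insights, distractors, matches=overlap_match) -> float:
    """Assumed form: 1 minus the fraction of distractors that appear in the report."""
    if not distractors:
        return 1.0
    leaked = sum(1 for d in distractors if any(matches(r, d) for r in report_insights))
    return 1.0 - leaked / len(distractors)


# Invented example data.
groundtruth = ["churn rose 12% in Q3", "competitor cut prices in May"]
distractors = ["office lease renews in 2026", "CEO birthday is in June"]
report = [
    "Churn rose 12% in Q3 according to the CRM export",
    "The office lease renews in 2026",
]

recall = insight_recall(report, groundtruth)             # 0.5: one of two insights found
avoidance = distractor_avoidance(report, distractors)    # 0.5: one of two distractors leaked
```

Because recall ignores precision, a report can score well on it while still padding itself with distractors, which is why the two scores are reported separately.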

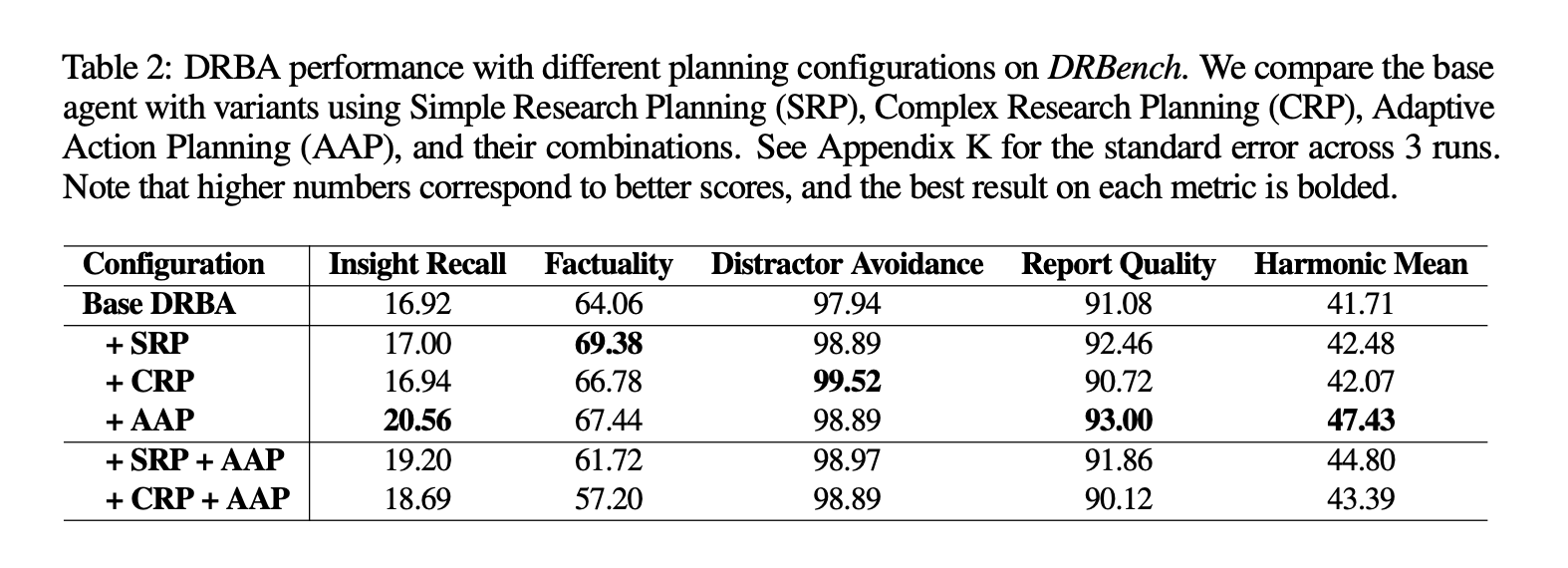

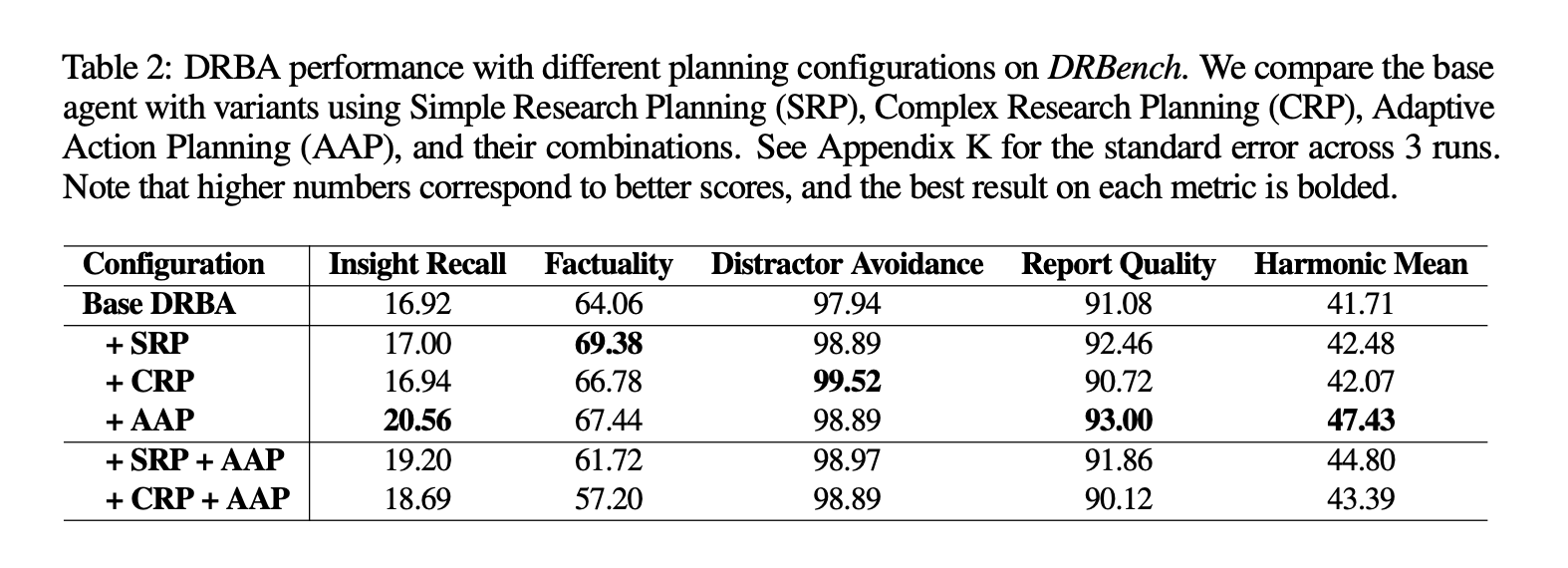

Baseline agent and research loop

The research team introduces a task-oriented baseline, DRBench Agent (DRBA), designed to operate natively inside the DRBench environment. DRBA is organized into four components: research planning, action planning, a research loop with Adaptive Action Planning (AAP), and report writing. Planning supports two modes: Complex Research Planning (CRP), which specifies investigation areas, expected sources, and success criteria; and Simple Research Planning (SRP), which produces lightweight sub-queries. The research loop iteratively selects tools, processes content (including storage in a vector store), identifies gaps, and continues until completion or a max-iteration budget; the report writer synthesizes findings with citation tracking.
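The control flow of such a loop (pick an action, process the result, identify gaps, stop on completion or budget) can be sketched as follows. This is a simplified illustration, not DRBA's implementation: the function name, the tool interface, and the "try the next untried tool for the first open question" policy are assumptions, and the vector store is omitted in favor of a plain dictionary.

```python
def run_research_loop(sub_queries, tools, max_iterations=10):
    """Iterate over open questions until all are resolved or the budget runs out.

    `tools` maps a tool name to a callable that returns evidence or None.
    """
    findings = {}                         # question -> (evidence, tool name), for citations
    tried = {q: 0 for q in sub_queries}   # number of tools already used per question
    tool_names = list(tools)
    iterations = 0
    while iterations < max_iterations:
        # Gap identification: unanswered questions that still have untried tools.
        gaps = [q for q in sub_queries if q not in findings and tried[q] < len(tool_names)]
        if not gaps:
            break
        question = gaps[0]
        name = tool_names[tried[question]]  # action selection: next untried tool
        tried[question] += 1
        iterations += 1
        evidence = tools[name](question)
        if evidence is not None:
            findings[question] = (evidence, name)
    unresolved = [q for q in sub_queries if q not in findings]
    return findings, unresolved, iterations


# Hypothetical tools that answer only some questions.
demo_tools = {
    "files": lambda q: "Q3 churn was 12%" if "churn" in q else None,
    "chat": lambda q: "pricing thread in #sales" if "pricing" in q else None,
}
demo_queries = ["churn trend", "pricing changes", "legal risk"]

findings, unresolved, used = run_research_loop(demo_queries, demo_tools)
```

Recording the tool name next to each piece of evidence is what lets a later report-writing step attach a citation to every claim; lowering `max_iterations` shows how a tight budget leaves questions unresolved.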

Why is this important for enterprise agents?

Most “deep research” agents look compelling on public-web question sets, but production usage hinges on reliably finding the right internal needles, ignoring plausible internal distractors, and citing both public and private sources under enterprise constraints (login, permissions, UI friction). DRBench’s design directly targets this gap by: (1) grounding tasks in realistic company/persona contexts; (2) distributing evidence across multiple enterprise apps plus the web; and (3) scoring whether the agent actually extracted the intended insights and wrote a coherent, factual report. This combination makes it a practical benchmark for system builders who need end-to-end evaluation rather than single-tool micro-scores.

Key Takeaways

- DRBench evaluates deep research agents on complex, open-ended enterprise tasks that require combining public web and private company data.

- The initial release covers 15 tasks across 10 domains, each grounded in realistic user personas and organizational context.

- Tasks span heterogeneous enterprise artifacts (productivity software, cloud file systems, emails, chat) plus the open web, going beyond web-only setups.

- Reports are scored for insight recall, factual accuracy, and coherent, well-structured reporting using rubric-based evaluation.

- Code and benchmark assets are open-sourced on GitHub for reproducible evaluation and extension.

From an enterprise evaluation standpoint, DRBench is a useful step toward standardized, end-to-end testing of “deep research” agents: the tasks are open-ended, grounded in realistic personas, and require integrating evidence from the public web and a private company knowledge base, then producing a coherent, well-structured report, which is precisely the workflow most production teams care about. The release also clarifies what is being measured (recall of relevant insights, factual accuracy, and report quality) while explicitly moving beyond web-only setups that overfit to browsing heuristics. The 15 tasks across 10 domains are modest in scale but sufficient to expose system bottlenecks (retrieval across heterogeneous artifacts, citation discipline, and planning loops).

Check out the Paper and GitHub page.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
