While a basic Large Language Model (LLM) agent (one that repeatedly calls external tools) is easy to create, such agents often struggle with long and complex tasks because they cannot plan ahead or manage their work over time. Their execution can be described as “shallow”.

The deepagents library is designed to overcome this limitation by implementing a general architecture inspired by advanced applications such as Deep Research and Claude Code.

This architecture gives agents more depth by combining four key features:

- A Planning Tool: Lets the agent strategically break a complex task into manageable steps before acting.

- Sub-Agents: Let the main agent delegate specialized parts of the task to smaller, focused agents.

- Access to a File System: Provides persistent memory for saving work-in-progress, notes, and final outputs, so the agent can continue where it left off.

- A Detailed Prompt: Gives the agent clear instructions, context, and constraints for its long-term objectives.
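The planning tool in the first bullet (in deepagents, a built-in write_todos tool) can be pictured as a todo list that the agent rewrites as work progresses. The sketch below is a toy, plain-Python model of that idea only: the `Todo` class, the three status names, and the `update_plan`/`next_step` helpers are assumptions made for illustration, not the deepagents implementation.

```python
from dataclasses import dataclass
from typing import List, Literal, Optional

# Hypothetical status values for this sketch.
Status = Literal["pending", "in_progress", "completed"]


@dataclass
class Todo:
    content: str
    status: Status = "pending"


def update_plan(plan: List[Todo], index: int, status: Status) -> List[Todo]:
    """Return a new plan with one step's status changed (the old plan is not mutated)."""
    new_plan = [Todo(t.content, t.status) for t in plan]
    new_plan[index].status = status
    return new_plan


def next_step(plan: List[Todo]) -> Optional[Todo]:
    """Return the first step that is not completed yet, or None when the plan is done."""
    for todo in plan:
        if todo.status != "completed":
            return todo
    return None


def progress(plan: List[Todo]) -> str:
    done = sum(1 for t in plan if t.status == "completed")
    return f"{done}/{len(plan)} steps completed"


plan = [Todo("Save the question"), Todo("Research the topic"), Todo("Write the report")]
plan = update_plan(plan, 0, "completed")
plan = update_plan(plan, 1, "in_progress")
# New information arrives: the agent appends an extra step to its plan.
plan = plan + [Todo("Ask a reviewer to critique the draft")]
print(progress(plan))           # 1/4 steps completed
print(next_step(plan).content)  # Research the topic
```

Returning a fresh list from `update_plan` keeps each revision of the plan intact, which makes it easy to see how the plan changed over time.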

By providing these building blocks, deepagents makes it easier for developers to build powerful, general-purpose agents that can plan, manage state, and execute complex workflows.

In this article, we’ll walk through a practical example to see how DeepAgents work in action. Check out the FULL CODES here.

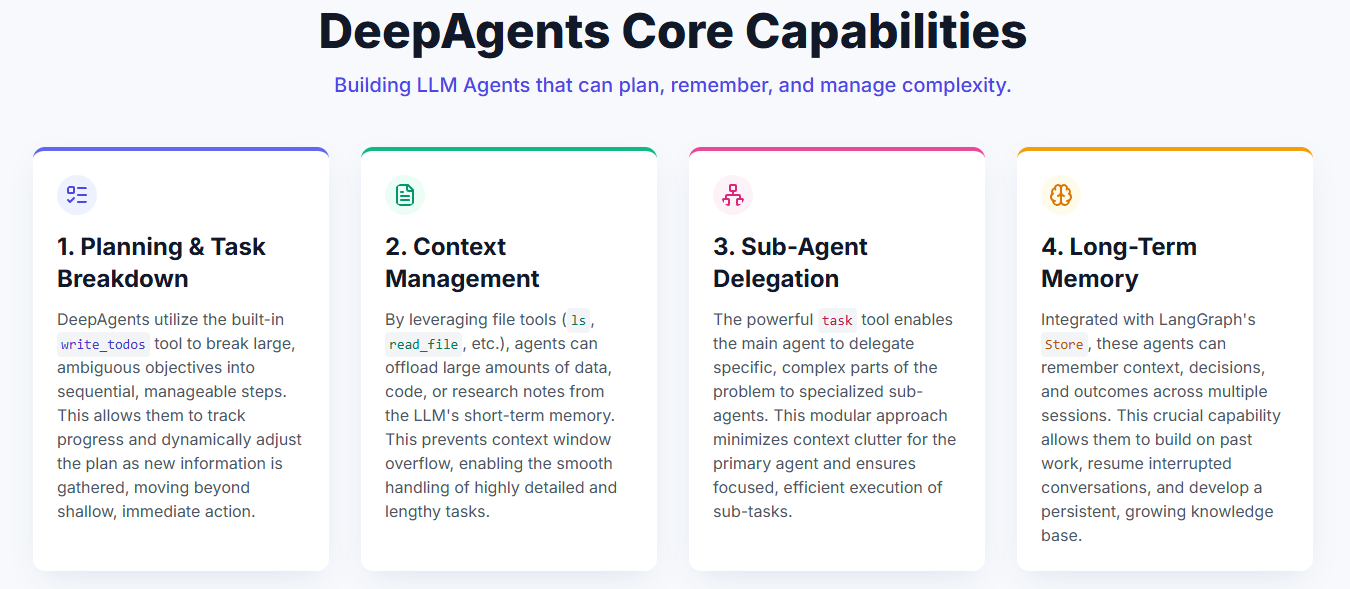

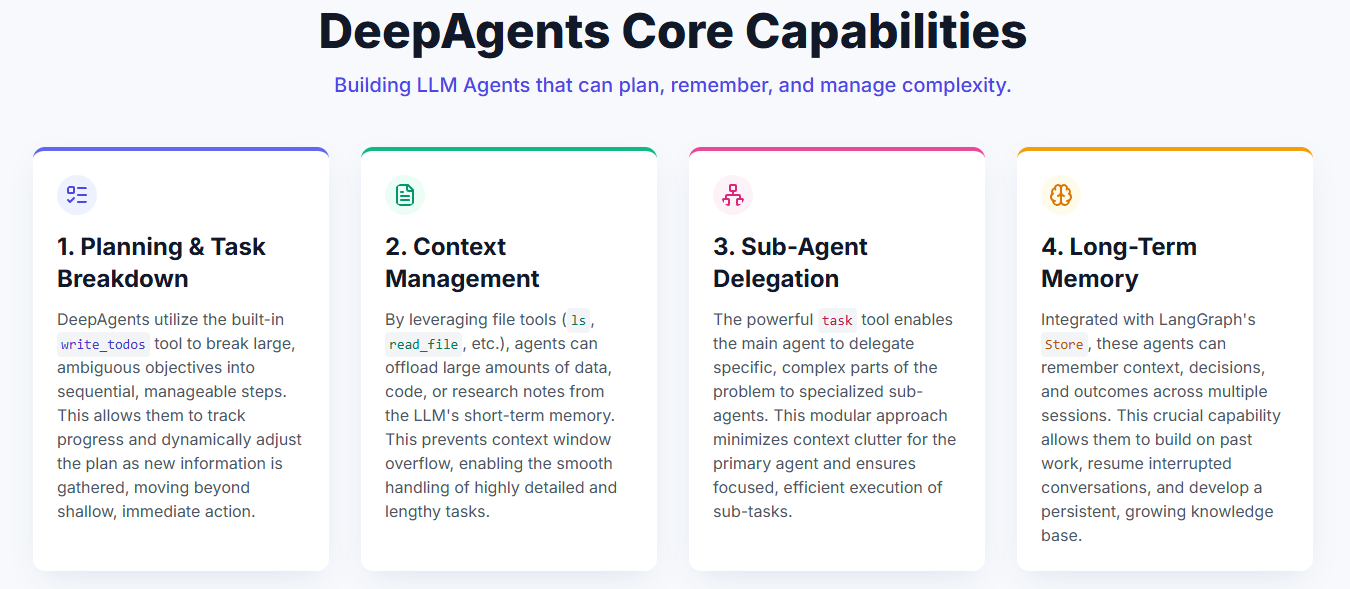

Core Capabilities of DeepAgents

1. Planning and Task Breakdown: DeepAgents come with a built-in write_todos tool that helps agents break large tasks into smaller, manageable steps. Agents can track their progress and adjust the plan as they learn new information.

2. Context Management: Using file tools such as ls, read_file, write_file, and edit_file, agents can store information outside their short-term memory (the model's context window). This prevents context overflow and lets them handle larger or more detailed tasks.

3. Sub-Agent Creation: The built-in task tool lets an agent spawn smaller, focused sub-agents. These sub-agents work on specific parts of a problem without cluttering the main agent’s context.

4. Long-Term Memory: With support from LangGraph’s Store, agents can retain information across sessions. This means they can recall past work, continue earlier conversations, and build on previous progress.
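The difference between the file tools (item 2) and long-term memory (item 4) is mostly one of scope. The sketch below is a hypothetical, in-memory model of that distinction: `VirtualFS` mimics the four file operations with a plain dict and is scoped to one conversation, while `ToyStore` mimics a namespaced key-value store shared across conversations. Neither class is part of deepagents or LangGraph; the method names are borrowed from the tool names only for readability.

```python
from typing import Dict, List, Optional, Tuple


class VirtualFS:
    """Hypothetical per-conversation file system backed by a dict of path -> text."""

    def __init__(self) -> None:
        self.files: Dict[str, str] = {}

    def ls(self) -> List[str]:
        return sorted(self.files)

    def write_file(self, path: str, content: str) -> None:
        self.files[path] = content

    def read_file(self, path: str) -> str:
        return self.files[path]

    def edit_file(self, path: str, old: str, new: str) -> None:
        # Exact-substring replacement of the first match; fail loudly if it is missing.
        text = self.files[path]
        if old not in text:
            raise ValueError(f"{old!r} not found in {path}")
        self.files[path] = text.replace(old, new, 1)


class ToyStore:
    """Hypothetical long-term store: values are keyed by (namespace, key)."""

    def __init__(self) -> None:
        self._data: Dict[Tuple[Tuple[str, ...], str], dict] = {}

    def put(self, namespace: Tuple[str, ...], key: str, value: dict) -> None:
        self._data[(namespace, key)] = value

    def get(self, namespace: Tuple[str, ...], key: str) -> Optional[dict]:
        return self._data.get((namespace, key))


store = ToyStore()  # shared across conversations

# Conversation 1: notes live in its own VirtualFS; a summary goes to the store.
fs1 = VirtualFS()
fs1.write_file("notes.md", "EU AI Act: draft notes")
fs1.edit_file("notes.md", "draft", "reviewed")
store.put(("user-123", "memories"), "last_topic", {"topic": "EU AI Act"})

# Conversation 2 starts with an empty VirtualFS but can still read the store.
fs2 = VirtualFS()
print(fs1.read_file("notes.md"))                          # EU AI Act: reviewed notes
print(fs2.ls())                                           # []
print(store.get(("user-123", "memories"), "last_topic"))  # {'topic': 'EU AI Act'}
```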

Setting up dependencies

```python
!pip install deepagents tavily-python langchain-google-genai langchain-openai
```

Environment Variables

In this tutorial, we’ll use an OpenAI API key to power our Deep Agent. For reference, we’ll also show how you can use a Gemini model instead.

You’re free to choose any model provider you prefer (OpenAI, Gemini, Anthropic, or others), since DeepAgents works with the chat models that LangChain supports.
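The setup cell below asks for all three keys, but you only need Tavily plus the key for the model provider you actually use. A small, hypothetical helper such as `ensure_env_keys` can prompt only for keys that are not already set; its `prompt` and `env` parameters default to `getpass` and `os.environ` but can be swapped out, which is how the offline demonstration below runs without user input.

```python
import os
from getpass import getpass
from typing import Callable, Iterable, List, MutableMapping


def ensure_env_keys(
    names: Iterable[str],
    prompt: Callable[[str], str] = getpass,
    env: MutableMapping[str, str] = os.environ,
) -> List[str]:
    """Prompt for each missing or empty variable; return the names that were prompted."""
    prompted = []
    for name in names:
        if not env.get(name):
            env[name] = prompt(f"Enter {name}: ")
            prompted.append(name)
    return prompted


# In the notebook, with OpenAI as the only model provider:
# ensure_env_keys(["TAVILY_API_KEY", "OPENAI_API_KEY"])

# Offline demonstration with a fake environment and a fake prompt.
fake_env = {"TAVILY_API_KEY": "already-set"}
asked = ensure_env_keys(
    ["TAVILY_API_KEY", "OPENAI_API_KEY"], prompt=lambda msg: "sk-test", env=fake_env
)
print(asked)                       # ['OPENAI_API_KEY']
print(fake_env["OPENAI_API_KEY"])  # sk-test
```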

```python
import os
from getpass import getpass

os.environ['TAVILY_API_KEY'] = getpass('Enter Tavily API Key: ')
os.environ['OPENAI_API_KEY'] = getpass('Enter OpenAI API Key: ')
os.environ['GOOGLE_API_KEY'] = getpass('Enter Google API Key: ')
```

Importing the required libraries

```python
import os
from typing import Literal

from tavily import TavilyClient
from deepagents import create_deep_agent

# Reads TAVILY_API_KEY from the environment set above.
tavily_client = TavilyClient()
```

Tools

Just like regular tool-using agents, a Deep Agent can be equipped with a set of tools that help it perform tasks.

In this example, we’ll give our agent access to a Tavily Search tool, which it can use to gather real-time information from the web.
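Because a tool is just a Python function, its name, type hints, and docstring are what the model gets to see; agent frameworks such as LangChain typically derive a tool schema from them. The hypothetical `sketch_tool_schema` helper below builds a simplified, JSON-schema-like description with `inspect` to show why the `Literal` annotation on `topic` matters: it becomes a list of allowed values. It runs on a stub with the same signature as the internet_search tool defined next; the type mapping and output layout are assumptions of this sketch, not LangChain's actual schema format.

```python
import inspect
from typing import Literal, get_args, get_origin

# Hypothetical mapping from Python annotations to JSON-schema type names.
_JSON_TYPES = {str: "string", int: "integer", bool: "boolean", float: "number"}


def sketch_tool_schema(fn) -> dict:
    """Build a simplified, JSON-schema-like description of a function's parameters."""
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        ann = param.annotation
        if get_origin(ann) is Literal:
            # Literal["a", "b"] becomes an enum of allowed values.
            prop = {"type": "string", "enum": list(get_args(ann))}
        else:
            prop = {"type": _JSON_TYPES.get(ann, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
        else:
            prop["default"] = param.default
        properties[name] = prop
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def internet_search(
    query: str,
    max_results: int = 5,
    topic: Literal["general", "news", "finance"] = "general",
    include_raw_content: bool = False,
):
    """Run a web search"""
    return {}  # Stub body: only the signature matters for this sketch.


schema = sketch_tool_schema(internet_search)
print(schema["parameters"]["required"])             # ['query']
print(schema["parameters"]["properties"]["topic"])  # {'type': 'string', 'enum': ['general', 'news', 'finance'], 'default': 'general'}
```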

```python
from typing import Literal

from langchain.chat_models import init_chat_model

from deepagents import create_deep_agent


def internet_search(
    query: str,
    max_results: int = 5,
    topic: Literal["general", "news", "finance"] = "general",
    include_raw_content: bool = False,
):
    """Run a web search"""
    # Thin wrapper around the Tavily client created earlier; the agent calls this as a tool.
    search_docs = tavily_client.search(
        query,
        max_results=max_results,
        include_raw_content=include_raw_content,
        topic=topic,
    )
    return search_docs
```
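Because internet_search reads the module-level tavily_client, every call hits the live API. For offline testing, one option (a hypothetical refactor, not something deepagents requires) is a factory that takes the client as an argument, so a stub with the same `search(...)` call shape can be substituted. The stub's response shape, a dict with a `results` list whose items carry `title`, `url`, and `content`, is an assumption modelled on Tavily's results; check the Tavily documentation for the exact fields. The `to_citations` helper turns results into the [Title](URL) form that the report prompt asks for.

```python
from typing import Literal


def make_internet_search(client):
    """Hypothetical factory: build the search tool around any client exposing .search()."""

    def internet_search(
        query: str,
        max_results: int = 5,
        topic: Literal["general", "news", "finance"] = "general",
        include_raw_content: bool = False,
    ):
        """Run a web search"""
        return client.search(
            query,
            max_results=max_results,
            include_raw_content=include_raw_content,
            topic=topic,
        )

    return internet_search


class StubSearchClient:
    """Offline stand-in; the response shape is an assumption modelled on Tavily's results."""

    def __init__(self):
        self.calls = []

    def search(self, query, **kwargs):
        self.calls.append((query, kwargs))  # record calls for inspection
        results = [{"title": "Example result", "url": "https://example.com/a", "content": "..."}]
        return {"query": query, "results": results[: kwargs.get("max_results", 5)]}


def to_citations(search_docs: dict) -> list:
    """Format each result as a Markdown link: [Title](URL)."""
    return [f"[{r['title']}]({r['url']})" for r in search_docs.get("results", [])]


stub = StubSearchClient()
search = make_internet_search(stub)
docs = search("EU AI Act latest updates", topic="news")
print(to_citations(docs))         # ['[Example result](https://example.com/a)']
print(stub.calls[0][1]["topic"])  # news
```

With the real client you would write `internet_search = make_internet_search(tavily_client)`; the inner function keeps the name internet_search, so anything that derives a tool name from the function name sees the same name as before.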

Sub-Agents

Subagents are one of the most powerful features of Deep Agents. They let the main agent delegate specific parts of a complex task to smaller, specialized agents, each with its own focus, tools, and instructions. This keeps the main agent’s context clean and organized while still allowing deep, focused work on individual subtasks.
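The context-isolation idea can be shown without any LLM. In the hypothetical sketch below, `run_subagent` starts a fresh message list for each delegated task, lets a stand-in worker function add intermediate messages, and hands only the final message back to the caller. The main agent's history therefore grows by one entry per delegation rather than by the subagent's whole transcript. The function names and message format are assumptions for illustration, not the deepagents task tool.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def run_subagent(
    system_prompt: str, task: str, worker: Callable[[List[Message]], List[Message]]
) -> str:
    """Run a worker in a fresh context and return only its final message content."""
    context: List[Message] = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": task},
    ]
    context.extend(worker(context))
    return context[-1]["content"]


def fake_researcher(context: List[Message]) -> List[Message]:
    # Stand-in for an LLM loop: several intermediate steps, then a final answer.
    return [
        {"role": "assistant", "content": "searching..."},
        {"role": "tool", "content": "raw search results (long)"},
        {"role": "assistant", "content": "Summary: key EU AI Act milestones."},
    ]


main_history: List[Message] = [{"role": "user", "content": "Research the EU AI Act."}]
answer = run_subagent("You are a policy researcher.", "EU AI Act timeline", fake_researcher)
main_history.append({"role": "tool", "content": answer})

print(len(main_history))  # 2
print(answer)             # Summary: key EU AI Act milestones.
```

The intermediate search output stays inside the subagent's own context, which mirrors the instruction in this article's research prompt that only the subagent's final message is passed back.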

In our example, we define two subagents:

- policy-research-agent: a specialized researcher that conducts in-depth analysis of AI policies, regulations, and ethical frameworks worldwide. It uses the internet_search tool to gather real-time information and produces a well-structured, professional report.

- policy-critique-agent: an editorial agent responsible for reviewing the generated report for accuracy, completeness, and tone. It checks that the research is balanced, factual, and aligned with regional legal frameworks.

Together, these subagents let the main Deep Agent carry out research, analysis, and quality review in a structured, modular workflow.
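Each subagent in the next code cell is a plain dictionary, so a mistyped key is easy to miss. The hypothetical checker below assumes the keys used in this article's examples (`name`, `description`, and `system_prompt` required; `tools` optional) and reports missing keys, unknown keys, and duplicate names before the dictionaries reach create_deep_agent. The keys the library actually accepts may differ between versions, so treat the key sets as an assumption to verify.

```python
from typing import Dict, List

# Assumed from this article's examples; verify against your deepagents version.
REQUIRED_KEYS = {"name", "description", "system_prompt"}
OPTIONAL_KEYS = {"tools"}


def check_subagents(specs: List[Dict]) -> List[str]:
    """Return human-readable problems; an empty list means no issues were found."""
    problems: List[str] = []
    seen = set()
    for i, spec in enumerate(specs):
        keys = set(spec)
        label = spec.get("name", f"#{i}")
        missing = REQUIRED_KEYS - keys
        unknown = keys - REQUIRED_KEYS - OPTIONAL_KEYS
        if missing:
            problems.append(f"{label}: missing {sorted(missing)}")
        if unknown:
            problems.append(f"{label}: unknown {sorted(unknown)}")
        if label in seen:
            problems.append(f"{label}: duplicate name")
        seen.add(label)
    return problems


ok = {"name": "policy-research-agent", "description": "Researches AI policy.", "system_prompt": "..."}
typo = {"name": "policy-critique-agent", "description": "Critiques reports.", "prompt": "..."}
print(check_subagents([ok, typo]))
# ["policy-critique-agent: missing ['system_prompt']", "policy-critique-agent: unknown ['prompt']"]
```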

```python
sub_research_prompt = """
You are a specialized AI policy researcher.
Conduct in-depth research on government policies, global regulations, and ethical frameworks related to artificial intelligence.

Your answer should:
- Provide key updates and trends
- Include relevant sources and laws (e.g., EU AI Act, U.S. Executive Orders)
- Compare global approaches when relevant
- Be written in clear, professional language

Only your FINAL message will be passed back to the main agent.
"""

research_sub_agent = {
    "name": "policy-research-agent",
    "description": "Used to research specific AI policy and regulation questions in depth.",
    "system_prompt": sub_research_prompt,
    "tools": [internet_search],
}

sub_critique_prompt = """
You are a policy editor reviewing a report on AI governance.
Check the report at `final_report.md` and the question at `question.txt`.

Focus on:
- Accuracy and completeness of legal information
- Proper citation of policy documents
- Balanced analysis of regional differences
- Clarity and neutrality of tone

Provide constructive feedback, but do NOT modify the report directly.
"""

critique_sub_agent = {
    "name": "policy-critique-agent",
    "description": "Critiques AI policy research reports for completeness, clarity, and accuracy.",
    "system_prompt": sub_critique_prompt,
}
```

System Prompt

Deep Agents include a built-in system prompt that serves as their core set of instructions. This prompt is inspired by the system prompt used in Claude Code but is more general-purpose, providing guidance on how to use the built-in tools for planning, file system operations, and subagent coordination.

While the default system prompt makes Deep Agents capable out of the box, it’s highly recommended to define a custom system prompt tailored to your specific use case. Prompt design plays a crucial role in shaping the agent’s reasoning, structure, and overall performance.

In our example, we define a custom prompt called policy_research_instructions, which turns the agent into an expert AI policy researcher. It lays out a step-by-step workflow: saving the question, using the research subagent for analysis, writing the report, and optionally invoking the critique subagent for review. It also enforces best practices such as Markdown formatting, citation style, and a professional tone so that the final report meets the standards of a policy briefing.
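The formatting rules listed in this prompt are easy to check mechanically once final_report.md exists. The hypothetical `lint_report` below tests a Markdown string for `##` sections, at least one [Title](URL) link, and a `### Sources` heading. The regular expressions are deliberately simple and are an assumption of this sketch; deepagents does not provide such a check.

```python
import re
from typing import List

SECTION_RE = re.compile(r"^## \S", re.MULTILINE)            # "## Heading" (not "###")
LINK_RE = re.compile(r"\[[^\]]+\]\(https?://[^)\s]+\)")     # [Title](http...)
SOURCES_RE = re.compile(r"^### Sources\s*$", re.MULTILINE)  # "### Sources" heading


def lint_report(markdown: str) -> List[str]:
    """Return the formatting rules the report breaks (empty list = all checks pass)."""
    issues = []
    if not SECTION_RE.search(markdown):
        issues.append("no '## ' sections")
    if not LINK_RE.search(markdown):
        issues.append("no [Title](URL) citations")
    if not SOURCES_RE.search(markdown):
        issues.append("no '### Sources' section")
    return issues


good = """## Overview
Summary of recent changes [EU AI Act](https://example.com/ai-act).

### Sources
- [EU AI Act](https://example.com/ai-act)
"""
print(lint_report(good))                      # []
print(len(lint_report("Just a paragraph.")))  # 3
```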

```python
policy_research_instructions = """
You are an expert AI policy researcher and analyst.
Your job is to investigate questions related to global AI regulation, ethics, and governance frameworks.

1️⃣ Save the user's question to `question.txt`
2️⃣ Use the `policy-research-agent` to perform in-depth research
3️⃣ Write a detailed report to `final_report.md`
4️⃣ Optionally, ask the `policy-critique-agent` to critique your draft
5️⃣ Revise if necessary, then output the final, comprehensive report

When writing the final report:
- Use Markdown with clear sections (## for each)
- Include citations in [Title](URL) format
- Add a ### Sources section at the end
- Write in a professional, neutral tone suitable for policy briefings
"""
```

Main Agent

Here we define our main Deep Agent using the create_deep_agent() function. We initialize the model with OpenAI’s gpt-4o, but as the commented-out line shows, you can easily switch to Google’s Gemini 2.5 Flash model if you prefer. The agent is configured with the internet_search tool, our custom policy_research_instructions system prompt, and two subagents: one for in-depth research and another for critique.

By default, DeepAgents uses Claude Sonnet 4.5 as its model if none is explicitly specified, but the library lets you plug in OpenAI, Gemini, Anthropic, or any other LLM supported by LangChain.
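The provider-prefixed strings passed to init_chat_model in the next cell (such as "openai:gpt-4o") make switching providers a one-line change. If you want the notebook to pick whichever provider you have a key for, a small hypothetical helper can map environment variables to model strings. The two model strings come from this article's example; the helper and its preference order are assumptions of this sketch, not part of LangChain or deepagents.

```python
import os
from typing import Mapping, Optional

# Preference order: the first provider whose API key is set wins (assumed for this sketch).
CANDIDATES = [
    ("OPENAI_API_KEY", "openai:gpt-4o"),
    ("GOOGLE_API_KEY", "google_genai:gemini-2.5-flash"),
]


def pick_model_string(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the first model string whose provider key is present and non-empty."""
    for key_name, model_string in CANDIDATES:
        if env.get(key_name):
            return model_string
    return None


print(pick_model_string({"GOOGLE_API_KEY": "g-123"}))  # google_genai:gemini-2.5-flash
print(pick_model_string({}))                           # None

# In the notebook, assuming at least one of the keys is set:
# model = init_chat_model(model=pick_model_string())
```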

```python
model = init_chat_model(model="openai:gpt-4o")
# model = init_chat_model(model="google_genai:gemini-2.5-flash")

agent = create_deep_agent(
    model=model,
    tools=[internet_search],
    system_prompt=policy_research_instructions,
    subagents=[research_sub_agent, critique_sub_agent],
)
```

Invoking the Agent

```python
query = "What are the latest updates on the EU AI Act and its global impact?"

result = agent.invoke({"messages": [{"role": "user", "content": query}]})
```
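agent.invoke returns the agent's final state rather than a plain string. Assuming it follows the usual LangGraph convention of a `messages` list whose last entry is the model's final reply, a small helper like the hypothetical `final_answer` below extracts the report text. It also copes with replies whose content is a list of text blocks, which some providers return. The offline stand-in for `result` is invented for the demonstration.

```python
from types import SimpleNamespace
from typing import Any


def final_answer(result: dict) -> str:
    """Return the text of the last message in a LangGraph-style result dict.

    Accepts message objects with a `.content` attribute or plain dicts, and content
    given either as a string or as a list of text blocks.
    """
    last: Any = result["messages"][-1]
    content = last["content"] if isinstance(last, dict) else last.content
    if isinstance(content, str):
        return content
    # List-of-blocks form: keep only the text parts.
    return "".join(
        block.get("text", "") if isinstance(block, dict) else str(block)
        for block in content
    )


# Offline stand-in for the real `result`; in the notebook, call final_answer(result).
fake_result = {
    "messages": [
        {"role": "user", "content": "What are the latest updates on the EU AI Act?"},
        SimpleNamespace(content=[{"type": "text", "text": "## Overview\n..."}]),
    ]
}
print(final_answer(fake_result))
```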

I am a Civil Engineering Graduate (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in Data Science, especially Neural Networks and their application in various areas.
