Small models are often held back by poor instruction tuning, weak tool use formats, and missing governance. The IBM AI team released Granite 4.0 Nano, a small model family that targets local and edge inference with enterprise controls and open licensing. The family includes 8 models in two sizes, 350M and about 1B, with both hybrid SSM and transformer variants, each in base and instruct. Granite 4.0 Nano series models are released under an Apache 2.0 license with native architecture support on popular runtimes like vLLM, llama.cpp, and MLX.

What’s new in the Granite 4.0 Nano series?

Granite 4.0 Nano consists of four model lines and their base counterparts. Granite 4.0 H 1B uses a hybrid SSM based architecture and has about 1.5B parameters. Granite 4.0 H 350M uses the same hybrid approach at 350M. For maximum runtime portability, IBM also provides Granite 4.0 1B and Granite 4.0 350M as transformer versions.
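The count of 8 models follows from combining two sizes, two architectures, and two tuning stages. A minimal sketch of that enumeration is below; the label strings are hypothetical placeholders chosen for illustration and are not official model identifiers.

```python
from itertools import product

# Hypothetical labels for illustration only; not official model identifiers.
SIZES = ("350M", "1B")
ARCHITECTURES = ("hybrid-ssm", "transformer")
TUNINGS = ("base", "instruct")


def nano_variants():
    """Return every size/architecture/tuning combination as a dict."""
    return [
        {"size": size, "architecture": arch, "tuning": tuning}
        for size, arch, tuning in product(SIZES, ARCHITECTURES, TUNINGS)
    ]


variants = nano_variants()
# 2 sizes x 2 architectures x 2 tunings
print(len(variants))
for v in variants:
    print(v["size"], v["architecture"], v["tuning"])
```

Filtering this list on `tuning == "instruct"` gives the four instruct model lines named above, and the remaining four entries are their base counterparts.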

| Granite release | Sizes in release | Architecture | License and governance | Key notes |
|---|---|---|---|---|
| Granite 13B, first watsonx Granite models | 13B base, 13B instruct, later 13B chat | Decoder only transformer, 8K context | IBM enterprise terms, client protections | First public Granite models for watsonx, curated enterprise data, English focus |
| Granite Code Models (open) | 3B, 8B, 20B, 34B code, base and instruct | Decoder only transformer, 2 stage code training on 116 languages | Apache 2.0 | First fully open Granite line, for code intelligence, paper 2405.04324, available on HF and GitHub |
| Granite 3.0 Language Models | 2B and 8B, base and instruct | Transformer, 128K context for instruct | Apache 2.0 | Enterprise LLMs for RAG, tool use, summarization, shipped on watsonx and HF |
| Granite 3.1 Language Models (HF) | 1B A400M, 3B A800M, 2B, 8B | Transformer, 128K context | Apache 2.0 | Size ladder for enterprise tasks, both base and instruct, same Granite data recipe |
| Granite 3.2 Language Models (HF) | 2B instruct, 8B instruct | Transformer, 128K, better long prompt | Apache 2.0 | Iterative quality bump on 3.x, keeps enterprise alignment |
| Granite 3.3 Language Models (HF) | 2B base, 2B instruct, 8B base, 8B instruct, all 128K | Decoder only transformer | Apache 2.0 | Latest 3.x line on HF before 4.0, adds FIM and better instruction following |
| Granite 4.0 Language Models | 3B micro, 3B H micro, 7B H tiny, 32B H small, plus transformer variants | Hybrid Mamba 2 plus transformer for H, pure transformer for compatibility | Apache 2.0, ISO 42001, cryptographically signed | Start of hybrid generation, lower memory, agent friendly, same governance across sizes |
| Granite 4.0 Nano Language Models | 1B H, 1B H instruct, 350M H, 350M H instruct, 2B transformer, 2B transformer instruct, 0.4B transformer, 0.4B transformer instruct, total 8 | H models are hybrid SSM plus transformer, non H are pure transformer | Apache 2.0, ISO 42001, signed, same 4.0 pipeline | Smallest Granite models, made for edge, local and browser, run on vLLM, llama.cpp, MLX, watsonx |

Architecture and training

The H variants interleave SSM layers with transformer layers. This hybrid design reduces memory growth compared with pure attention, while preserving the generality of transformer blocks. The Nano models did not use a reduced data pipeline. They were trained with the same Granite 4.0 methodology and more than 15T tokens, then instruction tuned to deliver solid tool use and instruction following. This carries over strengths from the larger Granite 4.0 models to sub 2B scales.
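To see why interleaving SSM layers slows memory growth, a back-of-the-envelope comparison helps. Attention layers cache keys and values for every token, so their memory grows linearly with sequence length, while an SSM layer keeps a fixed-size state. The sketch below uses illustrative numbers only: the layer counts, head sizes, state size, and attention-to-SSM ratio are assumptions made for the example and are not the actual Granite 4.0 Nano configuration.

```python
def kv_cache_bytes(seq_len, attn_layers, kv_heads, head_dim, bytes_per_value=2):
    """Keys and values cached for every token in every attention layer."""
    return 2 * attn_layers * kv_heads * head_dim * seq_len * bytes_per_value


def ssm_state_bytes(ssm_layers, state_values_per_layer, bytes_per_value=2):
    """Fixed-size recurrent state per SSM layer, independent of sequence length."""
    return ssm_layers * state_values_per_layer * bytes_per_value


# Illustrative values only, NOT the real Granite 4.0 Nano configuration.
TOTAL_LAYERS = 40
KV_HEADS = 4
HEAD_DIM = 64
STATE_VALUES = 64 * 1024   # hypothetical SSM state size per layer
HYBRID_ATTN_LAYERS = 4     # hypothetical: one attention layer in every ten


def pure_transformer(seq_len):
    """Per-sequence cache memory when every layer is attention."""
    return kv_cache_bytes(seq_len, TOTAL_LAYERS, KV_HEADS, HEAD_DIM)


def hybrid(seq_len):
    """Per-sequence memory when most layers are SSM and a few are attention."""
    attn = kv_cache_bytes(seq_len, HYBRID_ATTN_LAYERS, KV_HEADS, HEAD_DIM)
    ssm = ssm_state_bytes(TOTAL_LAYERS - HYBRID_ATTN_LAYERS, STATE_VALUES)
    return attn + ssm


for n in (1_024, 32_768, 131_072):
    mib = 2 ** 20
    print(f"{n:>7} tokens: pure={pure_transformer(n) / mib:.1f} MiB, "
          f"hybrid={hybrid(n) / mib:.1f} MiB")
```

Under these assumptions the hybrid's memory grows one tenth as fast per token, because only the few attention layers keep a per-token cache. At very short sequences the fixed SSM state can dominate, so the benefit shows up mainly with long contexts or many concurrent sessions.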

Benchmarks and competitive context

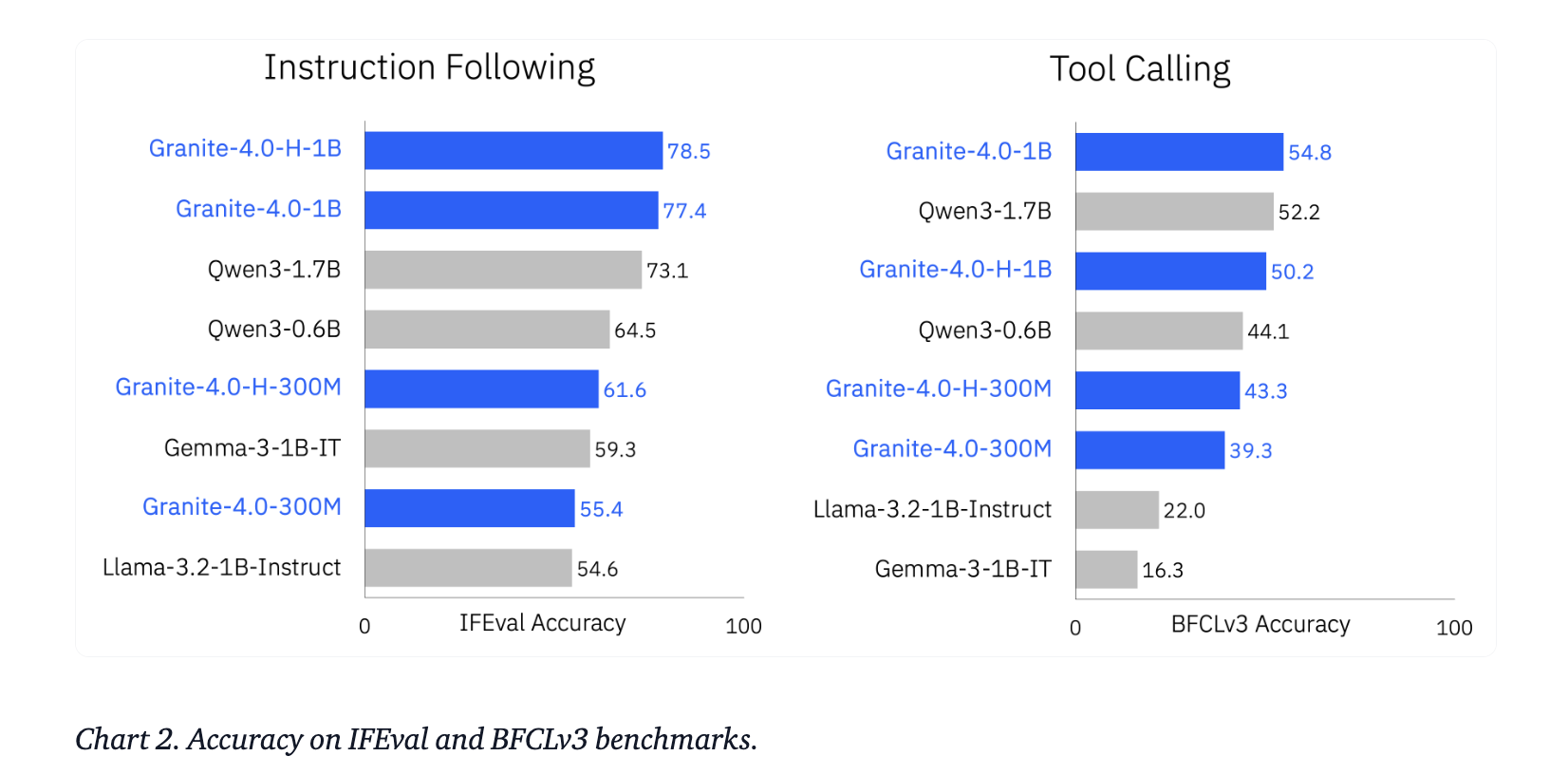

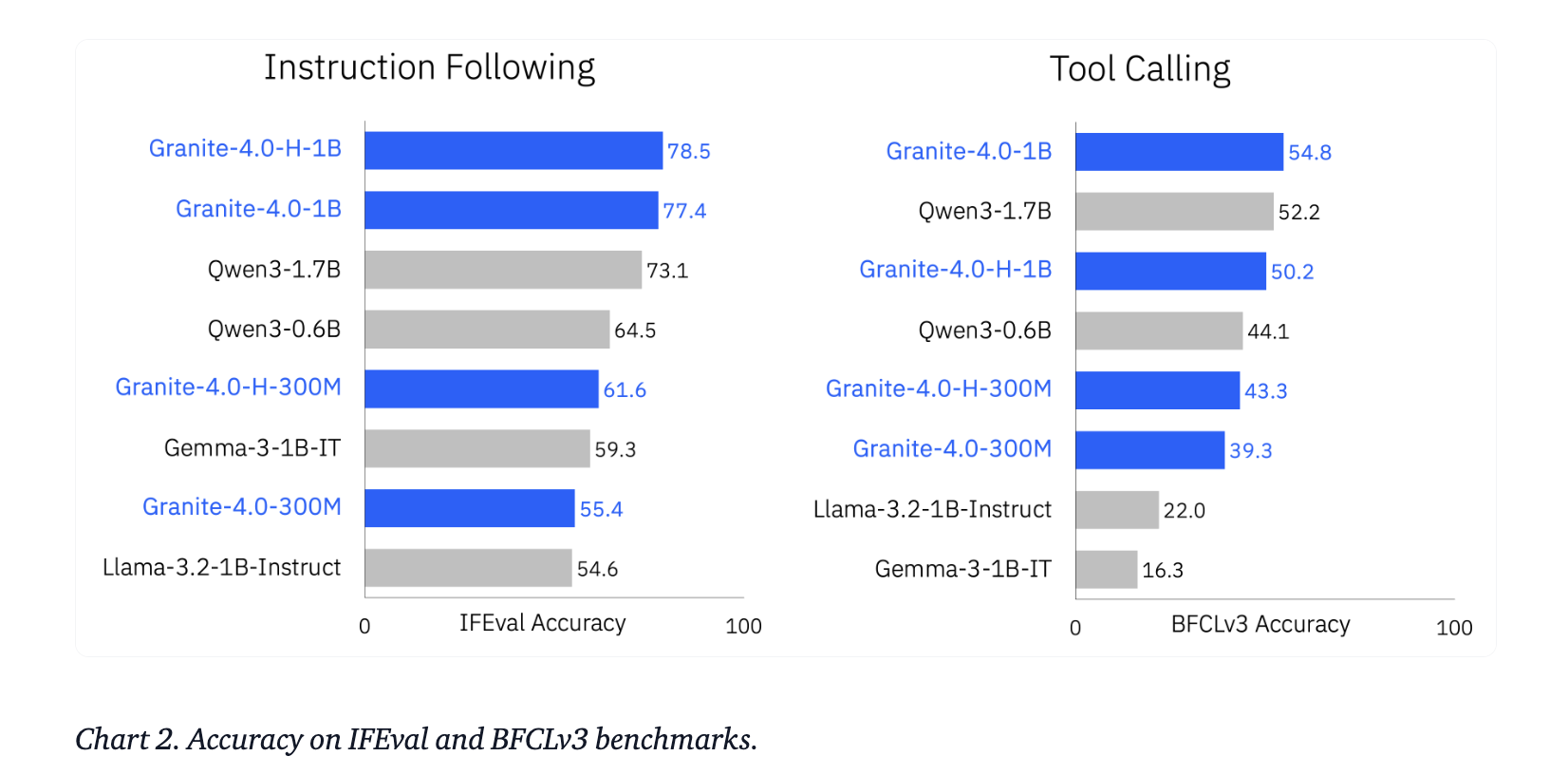

IBM compares Granite 4.0 Nano with other under 2B models, including Qwen, Gemma, and LiquidAI LFM. Reported aggregates show a significant increase in capabilities across general knowledge, math, code, and safety at comparable parameter budgets. On agent tasks, the models outperform several peers on IFEval and on the Berkeley Function Calling Leaderboard v3.
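For context on what IFEval measures: it scores instruction following with constraints that a program can verify, such as a minimum word count or a required keyword frequency. The sketch below illustrates that style of check. The function names and the example response are hypothetical, and this is not the official IFEval harness or IBM's evaluation code.

```python
import re


def at_least_n_words(response, n):
    """Verifiable constraint: the response contains at least n words."""
    return len(response.split()) >= n


def keyword_at_least(response, keyword, times):
    """Verifiable constraint: keyword appears at least `times` times (case-insensitive)."""
    pattern = r"\b" + re.escape(keyword) + r"\b"
    return len(re.findall(pattern, response, flags=re.IGNORECASE)) >= times


def follows_all(response, checks):
    """A response passes only if every attached constraint is satisfied."""
    return all(check(response) for check in checks)


# Hypothetical model output and constraints, for illustration only.
response = "Granite models are small. Granite runs on edge devices."
checks = [
    lambda r: at_least_n_words(r, 5),
    lambda r: keyword_at_least(r, "granite", 2),
]
print(follows_all(response, checks))
```

Because each constraint is checked by code and not by a judge model, results of this kind are straightforward to reproduce across models of similar size.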

Key Takeaways

- IBM released 8 Granite 4.0 Nano models, at 350M and about 1B, in hybrid SSM and transformer variants, each in base and instruct, all under Apache 2.0.

- The hybrid H models, Granite 4.0 H 1B at about 1.5B parameters and Granite 4.0 H 350M at about 350M, reuse the Granite 4.0 training recipe on more than 15T tokens, so capability is inherited from the larger family and not from a reduced data branch.

- The IBM team reports that Granite 4.0 Nano is competitive with other sub 2B models such as Qwen, Gemma and LiquidAI LFM on general knowledge, math, code and safety, and that it outperforms on IFEval and BFCLv3, which matter for tool using agents.

- All Granite 4.0 models, including Nano, are cryptographically signed, ISO 42001 certified and released for enterprise use, which gives provenance and governance that typical small community models do not provide.

- The models are available on Hugging Face and IBM watsonx.ai with runtime support for vLLM, llama.cpp and MLX, which makes local, edge and browser level deployments realistic for early AI engineers and software teams.

IBM is doing the right thing here: it is taking the same Granite 4.0 training pipeline, the same 15T token scale, and the same hybrid Mamba 2 plus transformer architecture, and pushing them down to 350M and about 1B so that edge and on device workloads can use the exact governance and provenance story that the larger Granite models already have. The models are Apache 2.0, ISO 42001 aligned, cryptographically signed, and already runnable on vLLM, llama.cpp and MLX. Overall, this is a clean and auditable way to run small LLMs.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts of over 2 million monthly views, illustrating its popularity among audiences.
