How can a small model learn to solve tasks it currently fails at, without rote imitation or relying on a correct rollout? A team of researchers from Google Cloud AI Research and UCLA has introduced a training framework, 'Supervised Reinforcement Learning' (SRL). It lets 7B-scale models actually learn from very hard math and agent trajectories that standard supervised fine-tuning and outcome-based reinforcement learning (RL) cannot learn from.

Small open-source models such as Qwen2.5 7B Instruct fail on the hardest problems in s1K 1.1, even when the teacher trace is good. Suppose we apply supervised fine-tuning (SFT) to the full DeepSeek R1 style solutions. The model then imitates them token by token, the sequences are long, and the dataset has only 1,000 items. As a result, the final scores drop below those of the base model.

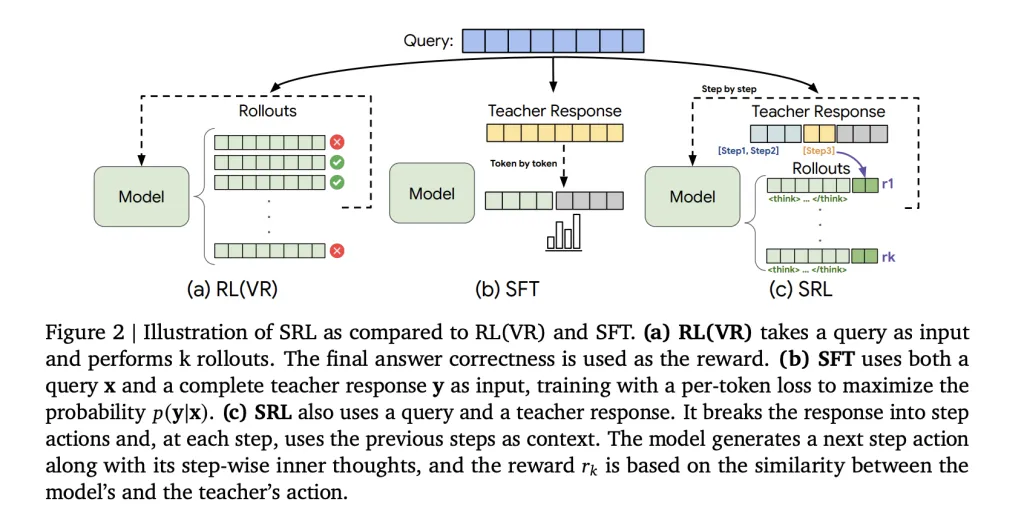

Core idea of 'Supervised Reinforcement Learning' (SRL)

'Supervised Reinforcement Learning' (SRL) keeps RL-style optimization, but it injects supervision into the reward channel instead of into the loss. Each expert trajectory from s1K 1.1 is parsed into a sequence of actions. For every prefix of that sequence, the research team creates a new training example, the model first produces a private reasoning span wrapped in
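The per-prefix data construction described above can be sketched roughly as follows. This is a minimal illustration, not the authors' code. It assumes each expert trajectory has already been parsed into a list of action strings. `StepExample`, `build_step_examples` and `render_prompt`, including the prompt layout, are hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StepExample:
    """One step-wise training instance: the context so far plus the expert's next action."""
    problem: str
    previous_actions: List[str]  # expert actions already taken (the prefix)
    target_action: str           # expert action the model should produce next


def build_step_examples(problem: str, expert_actions: List[str]) -> List[StepExample]:
    """Turn one parsed expert trajectory into one example per step (hypothetical helper).

    Assumes the trajectory was already split into action strings; the parsing
    itself is not shown here.
    """
    examples = []
    for k in range(len(expert_actions)):
        examples.append(
            StepExample(
                problem=problem,
                previous_actions=expert_actions[:k],  # prefix of length k
                target_action=expert_actions[k],      # step k becomes the target
            )
        )
    return examples


def render_prompt(ex: StepExample) -> str:
    """Hypothetical prompt layout: the problem, then the expert steps seen so far."""
    lines = [f"Problem: {ex.problem}"]
    for i, step in enumerate(ex.previous_actions, start=1):
        lines.append(f"Step {i}: {step}")
    lines.append("Think privately, then output only the next step.")
    return "\n".join(lines)


if __name__ == "__main__":
    actions = ["Let x = 3.", "Then 2x = 6.", "So the answer is 6."]
    for ex in build_step_examples("Compute 2x for x = 3.", actions):
        print(repr(ex.target_action), "| prefix length:", len(ex.previous_actions))
```

A trajectory with N actions yields N examples. This is how a modest number of trajectories can turn into many more step-level training items.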

Math outcomes

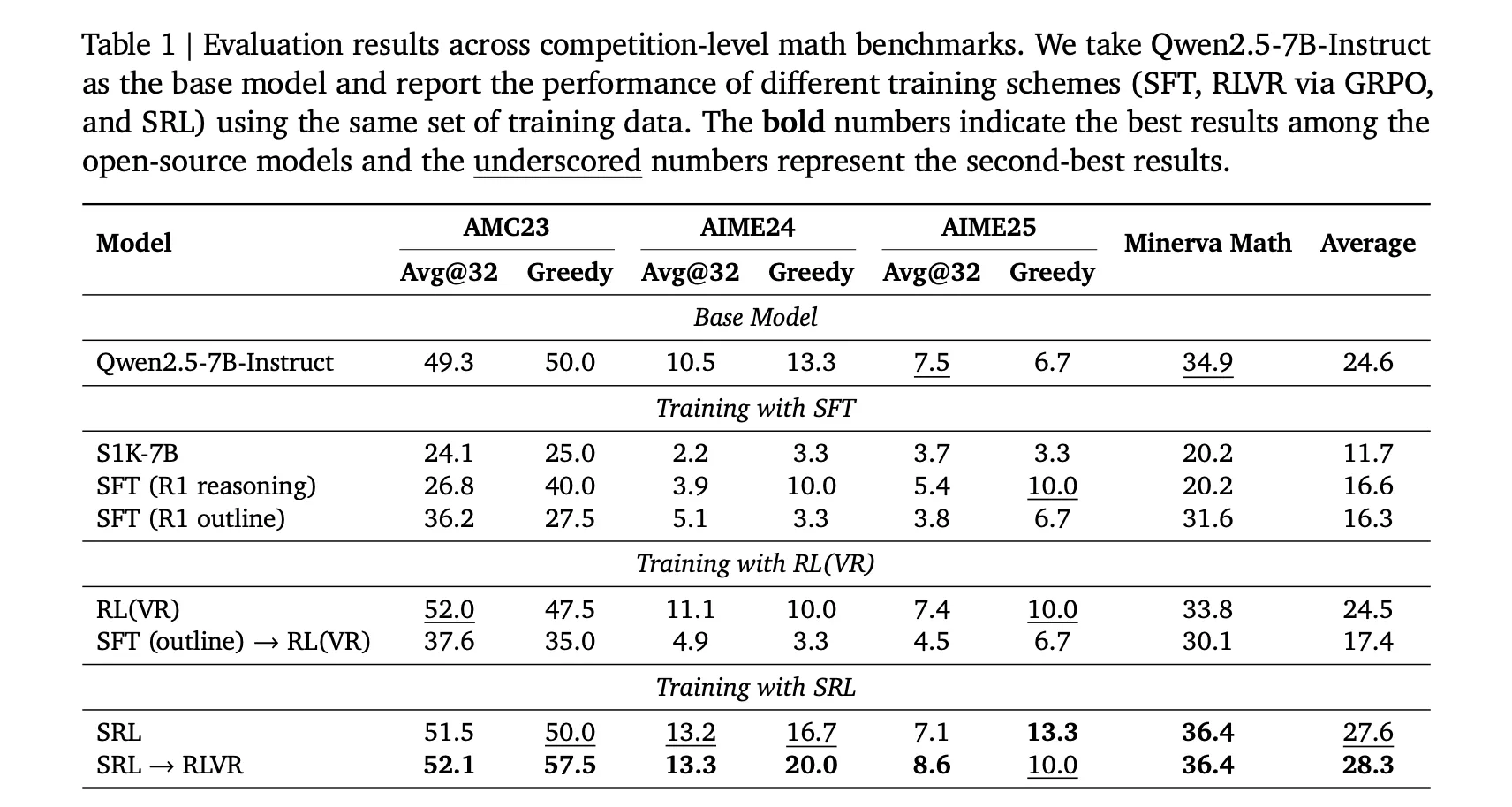

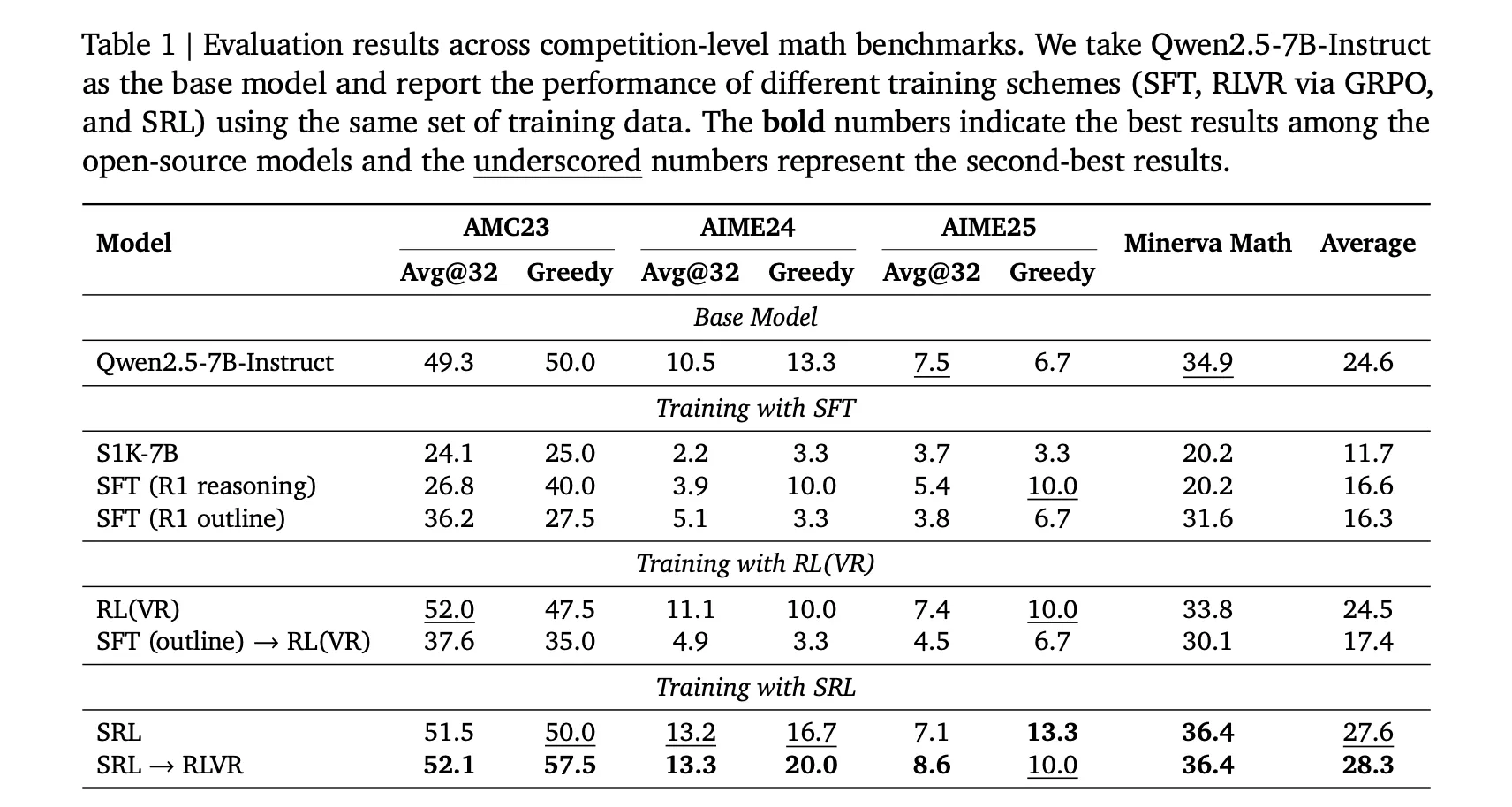

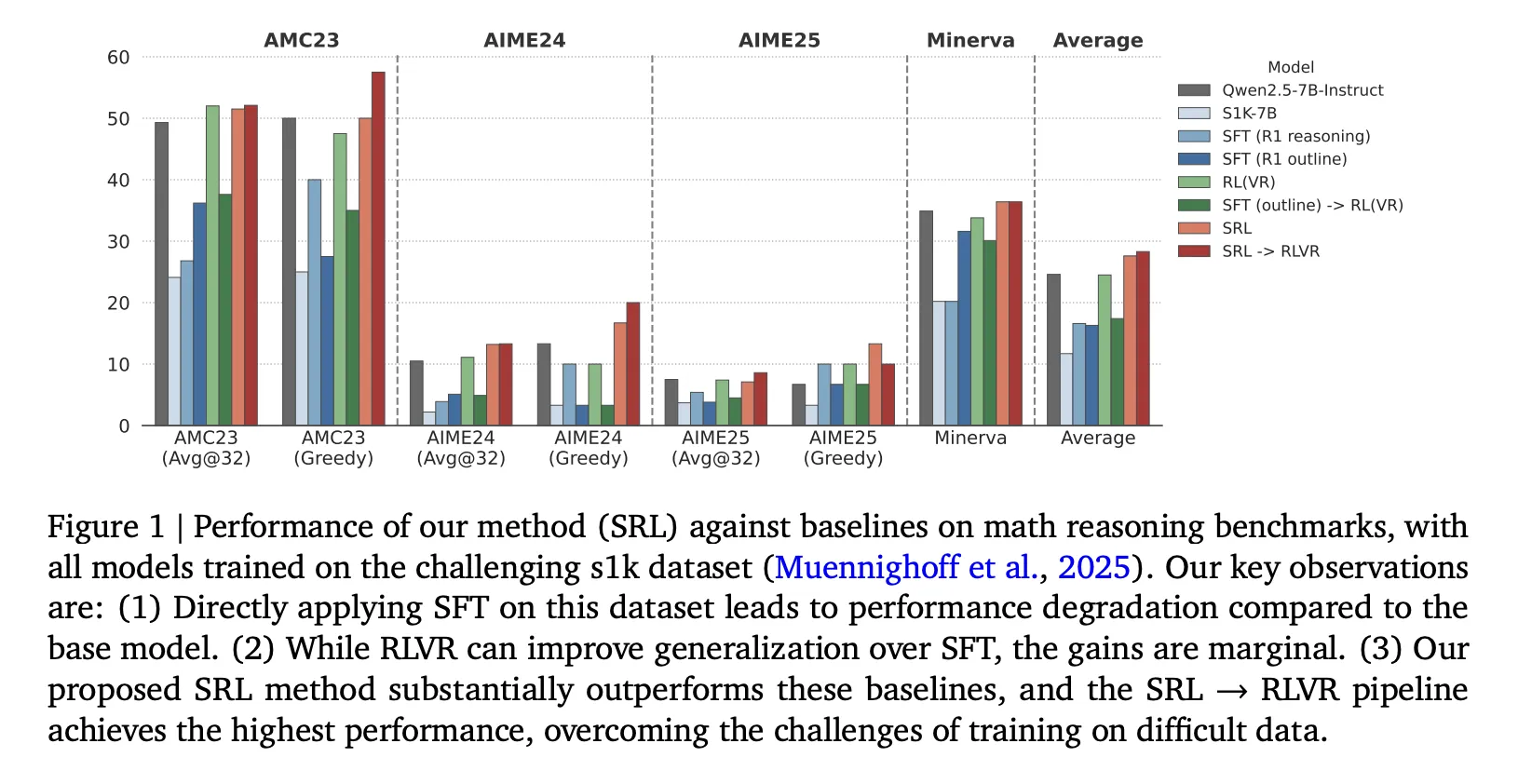

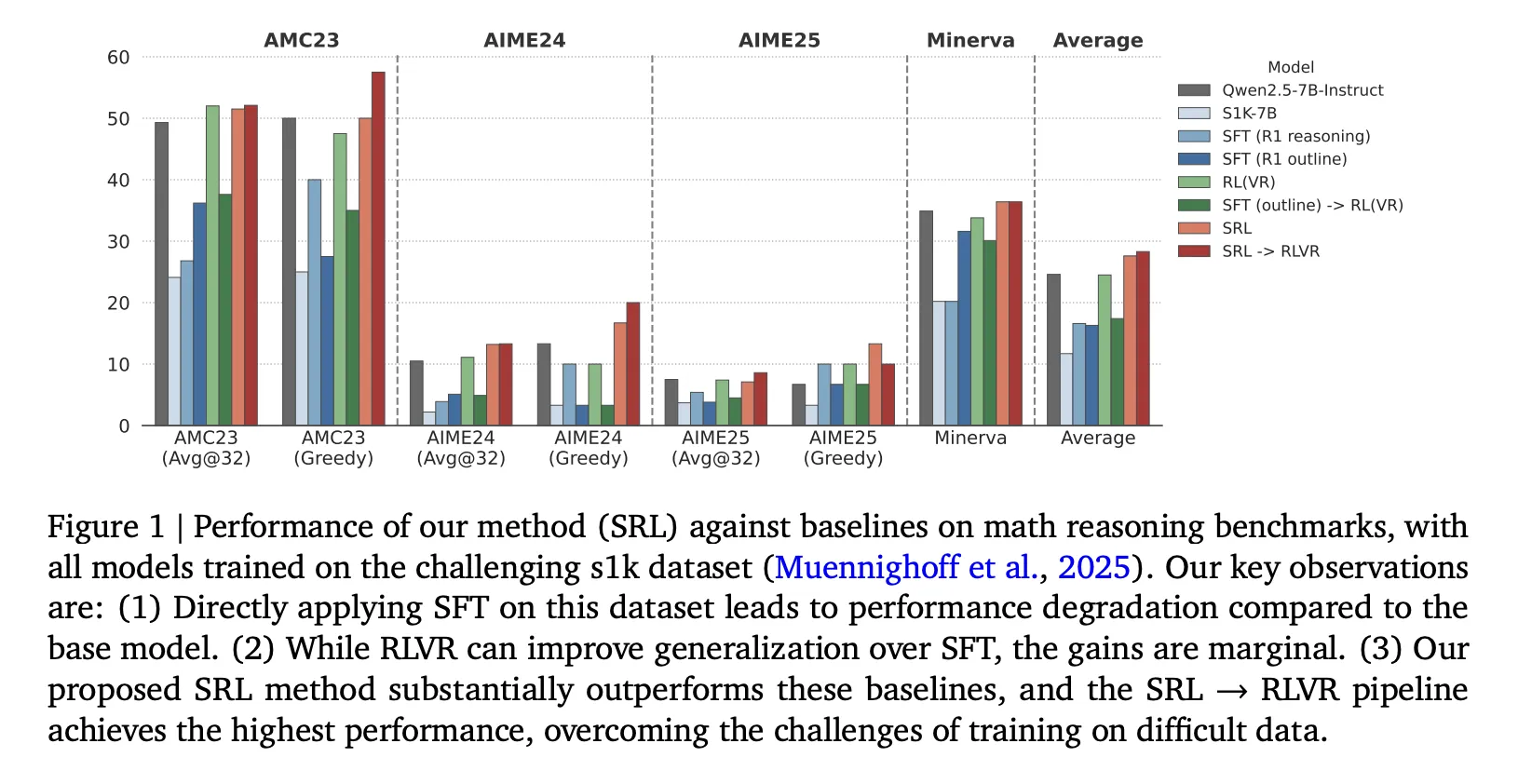

All models are initialized from Qwen2.5 7B Instruct and all are trained on the same DeepSeek R1 formatted s1K 1.1 set, so the comparisons are clean. The exact numbers in Table 1 are:

- Base Qwen2.5 7B Instruct: AMC23 greedy 50.0, AIME24 greedy 13.3, AIME25 greedy 6.7.

- SRL: AMC23 greedy 50.0, AIME24 greedy 16.7, AIME25 greedy 13.3.

- SRL then RLVR: AMC23 greedy 57.5, AIME24 greedy 20.0, AIME25 greedy 10.0.

This is the key improvement. SRL alone already removes the SFT degradation and raises AIME24 and AIME25. When RLVR is run after SRL, the system reaches the best open-source scores in the evaluation, with AMC23 at 57.5 and AIME24 at 20.0. The research team is explicit that the best pipeline is SRL followed by RLVR, not SRL in isolation.
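One way to read the list above is to compute each configuration's change against the base model, plus a simple mean over the three benchmarks. The sketch only reuses the greedy numbers quoted here. The unweighted mean is an illustrative summary, not a metric the source reports, and `deltas_vs_base` and `average` are hypothetical helper names.

```python
from typing import Dict

# Greedy scores copied from the list above (Table 1, as quoted in this article).
SCORES: Dict[str, Dict[str, float]] = {
    "Base Qwen2.5 7B Instruct": {"AMC23": 50.0, "AIME24": 13.3, "AIME25": 6.7},
    "SRL": {"AMC23": 50.0, "AIME24": 16.7, "AIME25": 13.3},
    "SRL then RLVR": {"AMC23": 57.5, "AIME24": 20.0, "AIME25": 10.0},
}

BASE = "Base Qwen2.5 7B Instruct"


def deltas_vs_base(table: Dict[str, Dict[str, float]], base: str = BASE) -> Dict[str, Dict[str, float]]:
    """Absolute point change of every non-base configuration relative to the base model."""
    ref = table[base]
    return {
        name: {bench: round(val - ref[bench], 1) for bench, val in row.items()}
        for name, row in table.items()
        if name != base
    }


def average(row: Dict[str, float]) -> float:
    """Unweighted mean over the benchmarks in one row, rounded to one decimal."""
    return round(sum(row.values()) / len(row), 1)


if __name__ == "__main__":
    for name, delta in deltas_vs_base(SCORES).items():
        print(name, delta)
    for name, row in SCORES.items():
        print(name, "mean:", average(row))
```

The mean rises from about 23.3 (base) to 26.7 (SRL) and 29.2 (SRL then RLVR), even though AIME25 dips from 13.3 to 10.0 after the RLVR stage.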

Software program engineering outcomes

The research team also applies SRL to Qwen2.5 Coder 7B Instruct using 5,000 verified agent trajectories generated by Claude 3.7 Sonnet. Each trajectory is decomposed into step-wise instances, producing 134,000 step items in total. Evaluation is on SWE Bench Verified. The base model gets 5.8% in the oracle file edit mode and 3.2% end to end. SWE Gym 7B gets 8.4% and 4.2%. SRL gets 14.8% and 8.6%, roughly 2.5 to 2.7 times the base model and clearly higher than the SFT-style SWE Gym 7B baseline.
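The ratios and the per-trajectory step count in the paragraph above can be checked with a few lines of arithmetic. The snippet only restates the reported percentages. `RESULTS`, `gain` and `steps_per_trajectory` are illustrative names, not part of any released code.

```python
from typing import Dict, Tuple

# SWE Bench Verified resolve rates in percent, as quoted in the paragraph above.
# Tuple layout: (oracle file edit mode, end to end).
RESULTS: Dict[str, Tuple[float, float]] = {
    "base": (5.8, 3.2),        # Qwen2.5 Coder 7B Instruct
    "swe_gym_7b": (8.4, 4.2),  # SFT-style baseline
    "srl": (14.8, 8.6),
}

ORACLE, END_TO_END = 0, 1


def gain(model: str, reference: str, setting: int) -> float:
    """How many times higher `model` scores than `reference` in one setting (one decimal)."""
    return round(RESULTS[model][setting] / RESULTS[reference][setting], 1)


def steps_per_trajectory(steps: int, trajectories: int) -> float:
    """Average number of step-wise training items per expert trajectory."""
    return steps / trajectories


if __name__ == "__main__":
    print("SRL vs base, oracle:", gain("srl", "base", ORACLE))
    print("SRL vs base, end to end:", gain("srl", "base", END_TO_END))
    print("SRL vs SWE Gym 7B, oracle:", gain("srl", "swe_gym_7b", ORACLE))
    print("SRL vs SWE Gym 7B, end to end:", gain("srl", "swe_gym_7b", END_TO_END))
    print("Steps per trajectory:", steps_per_trajectory(134_000, 5_000))
```

This gives about 2.6x and 2.7x over the base model, 1.8x and 2.0x over SWE Gym 7B, and roughly 26.8 step items per trajectory on average.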

Key Takeaways

- SRL reformulates hard reasoning as step-wise action generation. The model first produces an inner monologue, then outputs a single action, and only that action is rewarded by sequence similarity, so the model gets a signal even when the final answer is wrong.

- SRL is run on the same DeepSeek R1 formatted s1K 1.1 data as SFT and RLVR. Unlike SFT, it does not overfit long demonstrations, and unlike RLVR, it does not collapse when no rollout is correct.

- On math, the order that gives the strongest results in the evaluation is to start from Qwen2.5 7B Instruct, train with SRL, and then apply RLVR, which pushes reasoning benchmarks higher than either method alone.

- The same SRL recipe generalizes to agentic software engineering, using 5,000 verified trajectories from claude-3-7-sonnet-20250219. It lifts SWE Bench Verified well above both the base Qwen2.5 Coder 7B Instruct and the SFT-style SWE Gym 7B baseline.

- Other step-wise RL methods need an extra reward model. SRL instead keeps a GRPO-style objective and uses only actions from expert trajectories plus a lightweight string similarity, so it is easy to run on small, hard datasets.
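The reward side of SRL can be sketched as below, under stated assumptions. Python's `difflib.SequenceMatcher.ratio()` stands in for the "lightweight string similarity", and `action_reward` and `group_relative_advantages` are hypothetical helpers. The sketch shows only the group-normalized advantage of a GRPO-style objective, not the full clipped policy loss. It contrasts two cases where no rollout reaches a correct final answer. With an outcome reward of zero for every rollout, every advantage is zero. A step-wise similarity reward still ranks the rollouts.

```python
import difflib
import statistics
from typing import List


def action_reward(predicted_action: str, expert_action: str) -> float:
    """Step reward in [0, 1] comparing only the emitted action with the expert action.

    Assumption: SequenceMatcher.ratio() approximates the sequence-similarity
    reward; the model's private reasoning span is not scored at all.
    """
    return difflib.SequenceMatcher(None, predicted_action, expert_action).ratio()


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantage: standardize each reward against its own sampling group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    expert = "So 2x = 6, hence x = 3."
    # Suppose none of these rollouts reaches the correct final answer.
    rollouts = ["So 2x = 6, hence x = 4.", "2x = 6 so x = 4", "x is 7."]

    # Outcome-only reward: all rollouts are wrong, so every reward is 0.0.
    outcome = [0.0 for _ in rollouts]
    print(group_relative_advantages(outcome))  # all zeros: no learning signal

    # Step-wise similarity reward still separates closer and farther actions.
    dense = [action_reward(r, expert) for r in rollouts]
    print([round(a, 2) for a in group_relative_advantages(dense)])
```

Because the advantage is computed relative to the group, identical rewards cancel out. The dense similarity reward avoids this in the regime where outcome rewards are uniformly zero.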

'Supervised Reinforcement Learning' (SRL) is a practical contribution from the research team. It keeps the GRPO-style reinforcement learning setup but replaces fragile outcome-level rewards with supervised, step-wise rewards computed directly from expert trajectories. As a result, the model always receives an informative signal, even in the D_hard regime where RLVR and SFT both stall. Importantly, the research team demonstrates SRL on both math and SWE Bench Verified with the same recipe, and shows that the strongest configuration is SRL followed by RLVR, not either one alone. This makes SRL a practical path for open models to learn hard tasks. Overall, SRL is a clean bridge between process supervision and RL that open model teams can adopt immediately.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost. It stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.
