One of the biggest challenges in real-world machine learning is that supervised models require labeled data, but in many practical scenarios, the data you start with is almost always unlabeled. Manually annotating thousands of samples isn't just slow; it's expensive, tedious, and often impractical.

This is where active learning becomes a game-changer.

Active learning is a subset of machine learning in which the algorithm is not a passive consumer of data; it becomes an active participant. Instead of labeling the entire dataset upfront, the model intelligently selects which data points it wants labeled next. It interactively queries a human or oracle for labels on the most informative samples, allowing it to learn faster using far fewer annotations.

Here's how the workflow typically looks:

- Begin by labeling a small seed portion of the dataset to train an initial, weak model.

- Use this model to generate predictions and confidence scores on the unlabeled data.

- Compute a confidence metric (e.g., probability gap) for each prediction.

- Select only the lowest-confidence samples, the ones the model is most unsure about.

- Manually label these uncertain samples and add them to the training set.

- Retrain the model and repeat the cycle of predict → rank confidence → label → retrain.

- After several iterations, the model can achieve near-fully supervised performance while requiring far fewer manually labeled samples.
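The confidence metric in the third step can take several forms. The sketch below compares two common ones on made-up probabilities: least confidence (one minus the top class probability) and the probability gap between the two most likely classes. The function names and the sample probabilities are illustrative assumptions, not part of the tutorial's code.

```python
import numpy as np


def least_confidence(probs):
    """Uncertainty as 1 minus the highest class probability in each row."""
    return 1.0 - np.max(probs, axis=1)


def probability_gap(probs):
    """Gap between the two highest class probabilities (small gap = uncertain)."""
    top_two = np.sort(probs, axis=1)[:, -2:]
    return top_two[:, 1] - top_two[:, 0]


# Hypothetical predicted probabilities for three unlabeled samples (two classes)
probs = np.array([
    [0.95, 0.05],  # confident prediction
    [0.55, 0.45],  # close to the decision boundary
    [0.70, 0.30],  # somewhere in between
])

lc = least_confidence(probs)
gap = probability_gap(probs)

# Query the sample with the highest uncertainty, or equivalently the smallest gap
query_by_lc = int(np.argmax(lc))
query_by_gap = int(np.argmin(gap))
print(query_by_lc, query_by_gap)
```

For a two-class problem the two metrics always rank samples in the same order, since the gap equals twice the top probability minus one. They can disagree once there are three or more classes.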

In this article, we'll walk through how to apply this technique step by step and show how active learning can help you build high-quality supervised models with minimal labeling effort.

Installing & Importing the libraries

pip install numpy pandas scikit-learn matplotlib

import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

For this tutorial, we will be using a synthetic dataset generated with the make_classification function from the sklearn library.

SEED = 42  # For reproducibility
N_SAMPLES = 1000  # Total number of data points
INITIAL_LABELED_PERCENTAGE = 0.10  # Your constraint: Start with 10% labeled data
NUM_QUERIES = 20  # Number of times we ask the "human" to label a confusing sample

NUM_QUERIES = 20 represents the annotation budget in an active learning setup. In a real-world workflow, this would mean the model selects the 20 most confusing samples and sends them to human annotators to label, with each annotation costing time and money. In our simulation, we replicate this process automatically: during each iteration, the model selects one uncertain sample, the code immediately retrieves its true label (acting as the human oracle), and the model is retrained with this new information.

Thus, setting NUM_QUERIES = 20 means we're simulating the benefit of labeling only 20 strategically chosen samples and observing how much the model improves with that limited but valuable human effort.
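This tutorial queries one sample per iteration and retrains after each label. In a real annotation workflow it is often more practical to send a whole batch to annotators at once. The sketch below shows one way to pick the most uncertain samples in a single pass; `select_batch` and the example scores are hypothetical and are not used elsewhere in this tutorial.

```python
import numpy as np


def select_batch(uncertainty_scores, candidate_indices, batch_size):
    """Return the `batch_size` candidate indices with the highest uncertainty."""
    candidates = np.asarray(candidate_indices)
    scores = np.asarray(uncertainty_scores)[candidates]
    # argsort is ascending, so reverse it to rank the most uncertain first
    order = np.argsort(scores, kind="stable")[::-1][:batch_size]
    return candidates[order].tolist()


# Hypothetical uncertainty scores for five unlabeled samples
scores = np.array([0.05, 0.45, 0.30, 0.48, 0.10])

batch = select_batch(scores, candidate_indices=[0, 1, 2, 3, 4], batch_size=2)
print(batch)
```

One trade-off to keep in mind: samples picked in the same batch can be near-duplicates of each other, because the model is not retrained between picks. Querying one sample at a time, as this tutorial does, avoids that at the cost of more retraining rounds.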

Data Generation and Splitting Strategy for Active Learning

This block handles data generation and the initial split that powers the entire Active Learning experiment. It first uses make_classification to create 1,000 synthetic samples for a two-class problem. The dataset is then split into a 10% held-out test set (100 samples) for final evaluation and a 90% pool (900 samples) for training. From this pool, only 10% (90 samples) is kept as the small initial labeled set, matching the constraint of starting with very limited annotations, while the remaining 90% (810 samples) becomes the unlabeled pool. This setup creates the realistic low-label scenario Active Learning is designed for, with a large pool of unlabeled samples ready for strategic querying.

X, y = make_classification(
    n_samples=N_SAMPLES, n_features=10, n_informative=5, n_redundant=0,
    n_classes=2, n_clusters_per_class=1, flip_y=0.1, random_state=SEED
)

# 1. Split into 90% Pool (samples to be queried) and 10% Test (final evaluation)
X_pool, X_test, y_pool, y_test = train_test_split(
    X, y, test_size=0.10, random_state=SEED, stratify=y
)

# 2. Split the 90% Pool into Initial Labeled (10% of the pool) and Unlabeled (90% of the pool)
X_labeled_current, X_unlabeled_full, y_labeled_current, y_unlabeled_full = train_test_split(
    X_pool, y_pool, test_size=1.0 - INITIAL_LABELED_PERCENTAGE,
    random_state=SEED, stratify=y_pool
)

# A set to track indices in the unlabeled pool for efficient querying and removal
unlabeled_indices_set = set(range(X_unlabeled_full.shape[0]))

print(f"Initial Labeled Samples (STARTING N): {len(y_labeled_current)}")
print(f"Unlabeled Pool Samples: {len(unlabeled_indices_set)}")

Initial Training and Baseline Evaluation

This block trains the initial Logistic Regression model using only the small labeled seed set and evaluates its accuracy on the held-out test set. The labeled sample count and baseline accuracy are then stored as the first points in the performance history, establishing a starting benchmark before Active Learning begins.

labeled_size_history = []
accuracy_history = []

# Train the baseline model on the small initial labeled set
baseline_model = LogisticRegression(random_state=SEED, max_iter=2000)
baseline_model.fit(X_labeled_current, y_labeled_current)

# Evaluate performance on the held-out test set
y_pred_init = baseline_model.predict(X_test)
accuracy_init = accuracy_score(y_test, y_pred_init)

# Record the baseline point (x=90, y=0.8800)
labeled_size_history.append(len(y_labeled_current))
accuracy_history.append(accuracy_init)

print(f"INITIAL BASELINE (N={labeled_size_history[0]}): Test Accuracy: {accuracy_history[0]:.4f}")

Active Learning Loop

This block contains the heart of the Active Learning process, where the model iteratively selects the most uncertain sample, receives its true label, retrains, and evaluates performance. In each iteration, the current model predicts probabilities for all unlabeled samples, identifies the one with the highest uncertainty (least confidence), and "queries" its true label, simulating a human annotator. The newly labeled data point is added to the training set, a fresh model is retrained, and accuracy is recorded. Repeating this cycle for 20 queries demonstrates how targeted labeling quickly improves model performance with minimal annotation effort.

current_model = baseline_model  # Start the loop with the baseline model

print(f"\nStarting Active Learning Loop ({NUM_QUERIES} Queries)...")

# -----------------------------------------------
# The Active Learning Loop (Query, Annotate, Retrain, Evaluate)
# Purpose: Run 20 iterations to demonstrate strategic labeling gains.
# -----------------------------------------------

for i in range(NUM_QUERIES):
    if not unlabeled_indices_set:
        print("Unlabeled pool is empty. Stopping.")
        break

    # --- A. QUERY STRATEGY: Find the Least Confident Sample ---
    # 1. Get probability predictions from the CURRENT model for all unlabeled samples
    probabilities = current_model.predict_proba(X_unlabeled_full)
    max_probabilities = np.max(probabilities, axis=1)

    # 2. Calculate Uncertainty Score (1 - Max Confidence)
    uncertainty_scores = 1 - max_probabilities

    # 3. Identify the index of the sample with the MAXIMUM uncertainty score,
    #    considering only samples that are still in the unlabeled pool
    current_indices_list = list(unlabeled_indices_set)
    current_uncertainty = uncertainty_scores[current_indices_list]
    most_uncertain_idx_in_subset = np.argmax(current_uncertainty)
    query_index_full = current_indices_list[most_uncertain_idx_in_subset]
    query_uncertainty_score = uncertainty_scores[query_index_full]

    # --- B. HUMAN ANNOTATION SIMULATION ---
    # This is the single critical step where the human annotator intervenes.
    # We look up the true label (y_unlabeled_full) for the sample the model asked for.
    X_query = X_unlabeled_full[query_index_full].reshape(1, -1)
    y_query = np.array([y_unlabeled_full[query_index_full]])

    # Update the Labeled Set: Add the new annotated sample (N becomes N+1)
    X_labeled_current = np.vstack([X_labeled_current, X_query])
    y_labeled_current = np.hstack([y_labeled_current, y_query])

    # Remove the sample from the unlabeled pool
    unlabeled_indices_set.remove(query_index_full)

    # --- C. RETRAIN and EVALUATE ---
    # Train the NEW model on the larger, improved labeled set
    current_model = LogisticRegression(random_state=SEED, max_iter=2000)
    current_model.fit(X_labeled_current, y_labeled_current)

    # Evaluate the new model on the held-out test set
    y_pred = current_model.predict(X_test)
    accuracy = accuracy_score(y_test, y_pred)

    # Record results for plotting
    labeled_size_history.append(len(y_labeled_current))
    accuracy_history.append(accuracy)

    # Output status
    print(f"\nQUERY {i+1}: Labeled Samples: {len(y_labeled_current)}")
    print(f" > Test Accuracy: {accuracy:.4f}")
    print(f" > Uncertainty Score: {query_uncertainty_score:.4f}")

final_accuracy = accuracy_history[-1]

Final Result

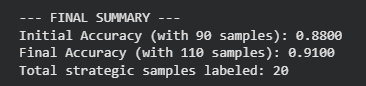

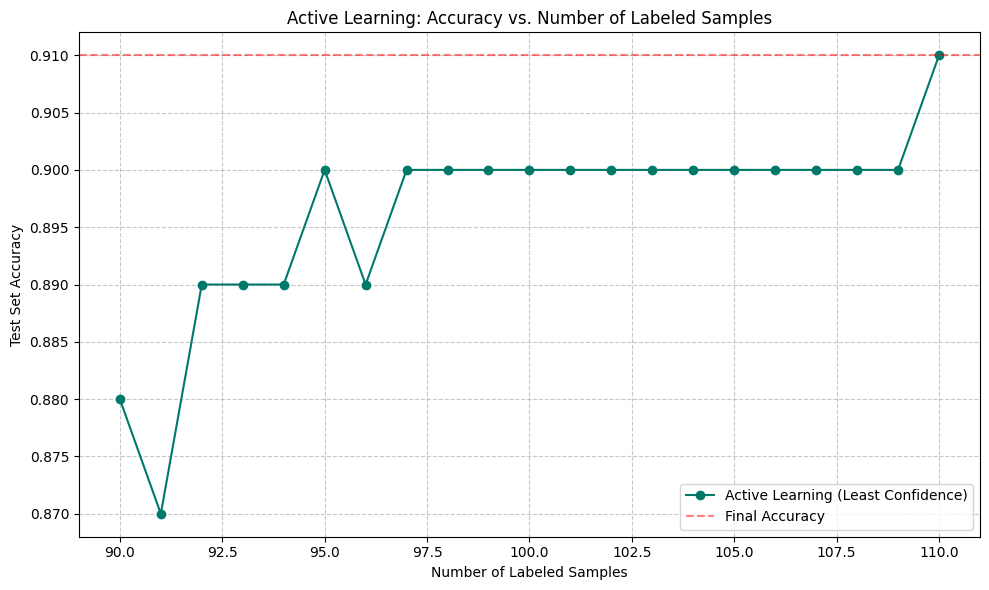

The experiment successfully validated the efficiency of Active Learning. By focusing annotation efforts on only 20 strategically selected samples (increasing the labeled set from 90 to 110), the model's performance on the unseen test set improved from 0.8800 (88%) to 0.9100 (91%).

This 3 percentage point increase in accuracy was achieved with a minimal increase in annotation effort: roughly a 22% increase in the size of the training data resulted in a measurable and meaningful performance boost.

In essence, the Active Learner acts as an intelligent curator, aiming to ensure that every dollar or minute spent on human labeling provides the maximum possible benefit. This suggests that smart labeling can be far more valuable than random or bulk labeling.
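The experiment above does not include a random-labeling run, so the comparison with random labeling is not measured directly. A minimal sketch of such a baseline is shown here, assuming the same synthetic data, split sizes, and model settings as the tutorial. The helpers `make_splits` and `run_strategy` are hypothetical names introduced for this illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

SEED = 42
NUM_QUERIES = 20


def make_splits(seed=SEED):
    """Recreate the tutorial's test / initial labeled / unlabeled split."""
    X, y = make_classification(
        n_samples=1000, n_features=10, n_informative=5, n_redundant=0,
        n_classes=2, n_clusters_per_class=1, flip_y=0.1, random_state=seed,
    )
    X_pool, X_test, y_pool, y_test = train_test_split(
        X, y, test_size=0.10, random_state=seed, stratify=y
    )
    X_lab, X_unlab, y_lab, y_unlab = train_test_split(
        X_pool, y_pool, test_size=0.90, random_state=seed, stratify=y_pool
    )
    return X_lab, y_lab, X_unlab, y_unlab, X_test, y_test


def run_strategy(strategy, num_queries=NUM_QUERIES, seed=SEED):
    """Run the query loop with 'least_confidence' or 'random' selection.

    Returns the list of test accuracies, starting with the baseline.
    """
    X_lab, y_lab, X_unlab, y_unlab, X_test, y_test = make_splits(seed)
    rng = np.random.default_rng(seed)
    remaining = list(range(len(y_unlab)))  # indices still in the unlabeled pool

    model = LogisticRegression(random_state=seed, max_iter=2000).fit(X_lab, y_lab)
    history = [accuracy_score(y_test, model.predict(X_test))]

    for _ in range(num_queries):
        if strategy == "least_confidence":
            probs = model.predict_proba(X_unlab[remaining])
            pick = int(np.argmax(1.0 - probs.max(axis=1)))
        elif strategy == "random":
            pick = int(rng.integers(len(remaining)))
        else:
            raise ValueError(f"unknown strategy: {strategy}")

        # Move the chosen sample from the unlabeled pool to the labeled set
        idx = remaining.pop(pick)
        X_lab = np.vstack([X_lab, X_unlab[idx:idx + 1]])
        y_lab = np.append(y_lab, y_unlab[idx])

        # Retrain from scratch and record test accuracy
        model = LogisticRegression(random_state=seed, max_iter=2000).fit(X_lab, y_lab)
        history.append(accuracy_score(y_test, model.predict(X_test)))
    return history


active_history = run_strategy("least_confidence")
random_history = run_strategy("random")
print(f"Least confidence: {active_history[0]:.4f} -> {active_history[-1]:.4f}")
print(f"Random sampling:  {random_history[0]:.4f} -> {random_history[-1]:.4f}")
```

With a 100-sample test set and a single seed, each accuracy point moves in steps of 0.01, so one run can favor either strategy by chance. Averaging over several seeds would give a fairer picture.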

Plotting the results

plt.figure(figsize=(10, 6))
plt.plot(labeled_size_history, accuracy_history, marker="o", linestyle="-", color="#00796b", label="Active Learning (Least Confidence)")
plt.axhline(y=final_accuracy, color="red", linestyle="--", alpha=0.5, label="Final Accuracy")
plt.title('Active Learning: Accuracy vs. Number of Labeled Samples')
plt.xlabel('Number of Labeled Samples')
plt.ylabel('Test Set Accuracy')
plt.grid(True, linestyle="--", alpha=0.7)
plt.legend()
plt.tight_layout()
plt.show()


I am a Civil Engineering graduate (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in Data Science, especially Neural Networks and their application in various areas.
