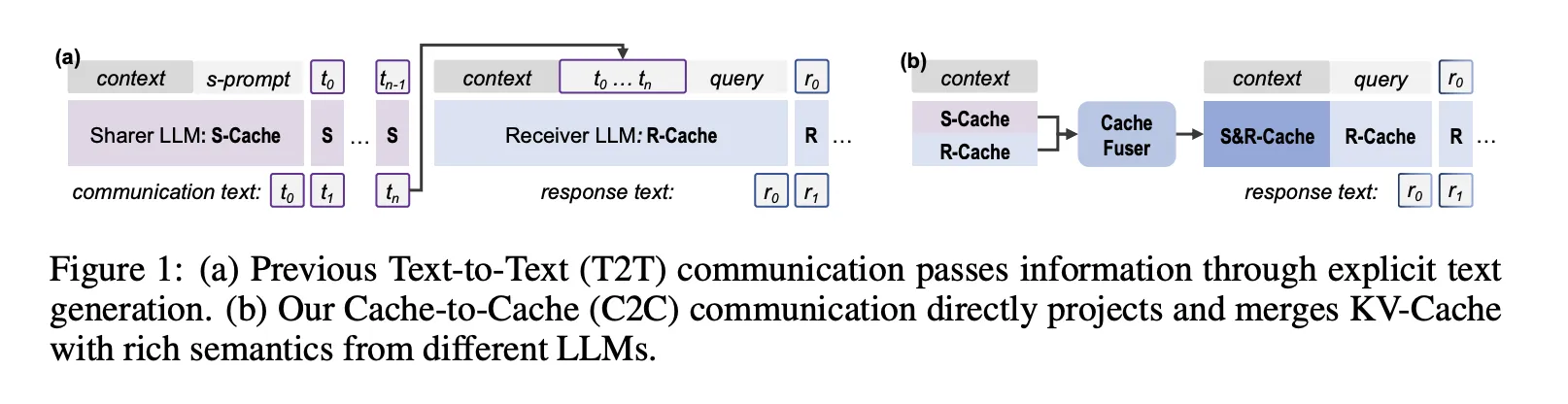

Can large language models collaborate without sending a single token of text? A team of researchers from Tsinghua University, Infinigence AI, The Chinese University of Hong Kong, Shanghai AI Laboratory, and Shanghai Jiao Tong University say yes. Cache-to-Cache (C2C) is a new communication paradigm in which large language models exchange information through their KV-Cache rather than through generated text.

Text communication is the bottleneck in multi-LLM systems

Current multi-LLM systems mostly use text to communicate. One model writes an explanation, and another model reads it as context.

This design has three practical costs:

- Internal activations are compressed into short natural language messages. Much of the semantic signal in the KV-Cache never crosses the interface.

- Natural language is ambiguous. Even with structured protocols, a coder model may encode structural signals, such as the role of a particular HTML tag, that do not survive a vague textual description.

- Every communication step requires token-by-token decoding, which dominates latency in long analytical exchanges.

The C2C work asks a direct question: can we treat the KV-Cache as the communication channel instead?

Oracle experiments: can KV-Cache carry communication?

The research team first runs two oracle-style experiments to test whether KV-Cache is a useful medium.

Cache enrichment oracle

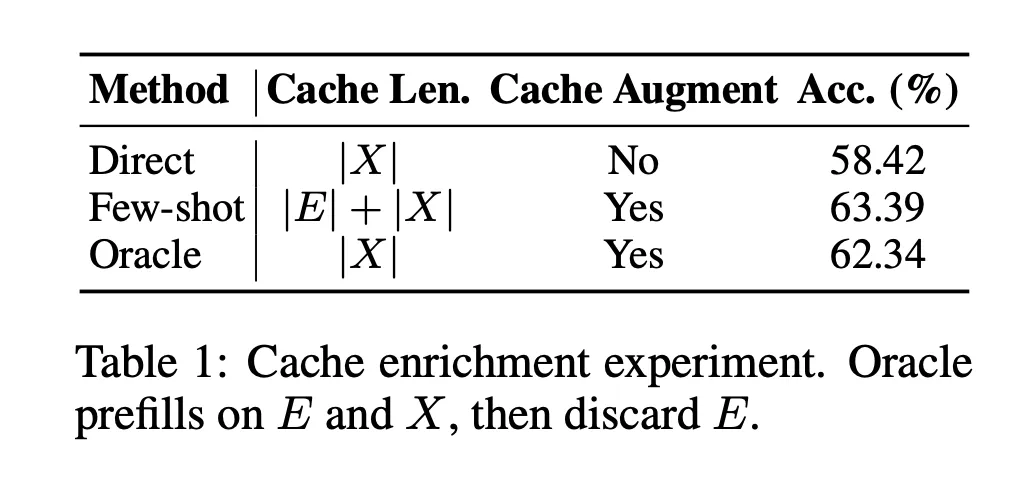

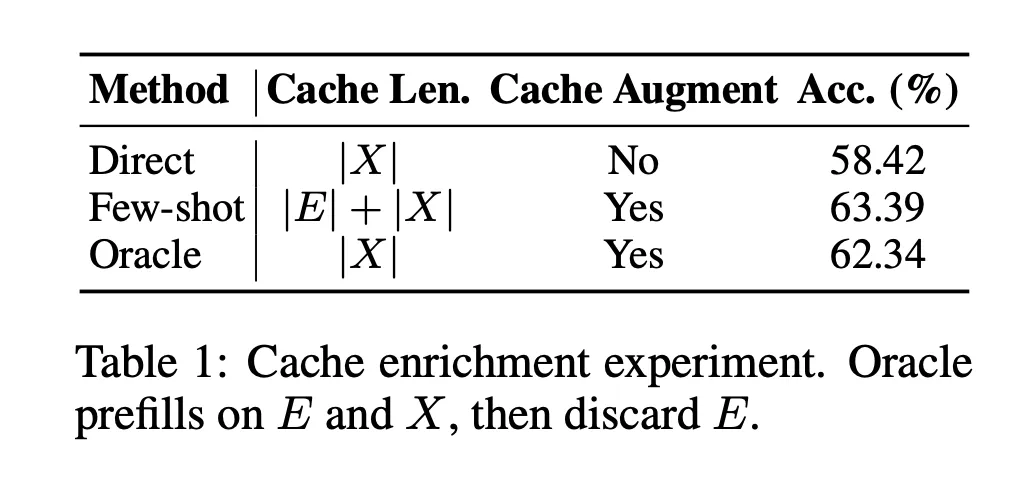

They compare three setups on multiple-choice benchmarks:

- Direct: prefill on the question only.

- Few-shot: prefill on exemplars plus the question, which produces a longer cache.

- Oracle: prefill on exemplars plus the question, then discard the exemplar segment and keep only the question-aligned slice of the cache, so the cache length is the same as in Direct.
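To make the three setups concrete, here is a minimal Python sketch. It assumes a toy "cache" that stores one entry per prefilled token, tagged with the tokens that precede it. This is a stand-in for real per-layer key/value tensors. The helper names (`prefill`, `direct_cache`, `few_shot_cache`, `oracle_cache`) are hypothetical. The point is only that the Oracle cache has the same length as Direct but was computed with the exemplars in context.

```python
# Toy illustration of the Direct / Few-shot / Oracle cache setups.
# A "cache" is a list with one entry per prefilled token (hypothetical stand-in
# for real per-layer K/V tensors).

def prefill(tokens):
    """Hypothetical prefill: each entry records the token and the tokens before it,
    mimicking that a token's K/V depends on its preceding context."""
    return [(tok, tuple(tokens[:i])) for i, tok in enumerate(tokens)]

def direct_cache(question):
    # Prefill on the question only.
    return prefill(question)

def few_shot_cache(exemplars, question):
    # Prefill on exemplars plus question: a longer cache.
    return prefill(exemplars + question)

def oracle_cache(exemplars, question):
    # Prefill with exemplars in context, then keep only the question-aligned
    # slice, so the cache length matches the Direct setup.
    full = prefill(exemplars + question)
    return full[len(exemplars):]

exemplars = ["Q1:", "A1."]
question = ["Q:", "x?"]
direct = direct_cache(question)
few_shot = few_shot_cache(exemplars, question)
oracle = oracle_cache(exemplars, question)
```

Here `oracle` and `direct` both hold two entries for the same question tokens. Their contents differ because every Oracle entry was computed with the exemplars visible.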

Oracle improves accuracy from 58.42 percent to 62.34 percent at the same cache length, while Few-shot reaches 63.39 percent. This demonstrates that enriching the question's own KV-Cache, even without extra tokens, improves performance. Layer-wise analysis shows that enriching only selected layers works better than enriching all layers, which later motivates a gating mechanism.
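A quick back-of-the-envelope calculation with the reported accuracies shows how much of the Few-shot gain the Oracle recovers without lengthening the cache:

```python
# Reported accuracies (%) from the cache enrichment oracle study.
direct, oracle, few_shot = 58.42, 62.34, 63.39

gain_oracle = oracle - direct        # points gained at Direct's cache length
gain_few_shot = few_shot - direct    # points gained with the longer Few-shot cache
share = gain_oracle / gain_few_shot  # fraction of the Few-shot gain recovered

print(f"{gain_oracle:.2f} / {gain_few_shot:.2f} = {share:.1%}")  # 3.92 / 4.97 = 78.9%
```

The Oracle therefore captures roughly 79 percent of the Few-shot improvement while keeping the shorter cache.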

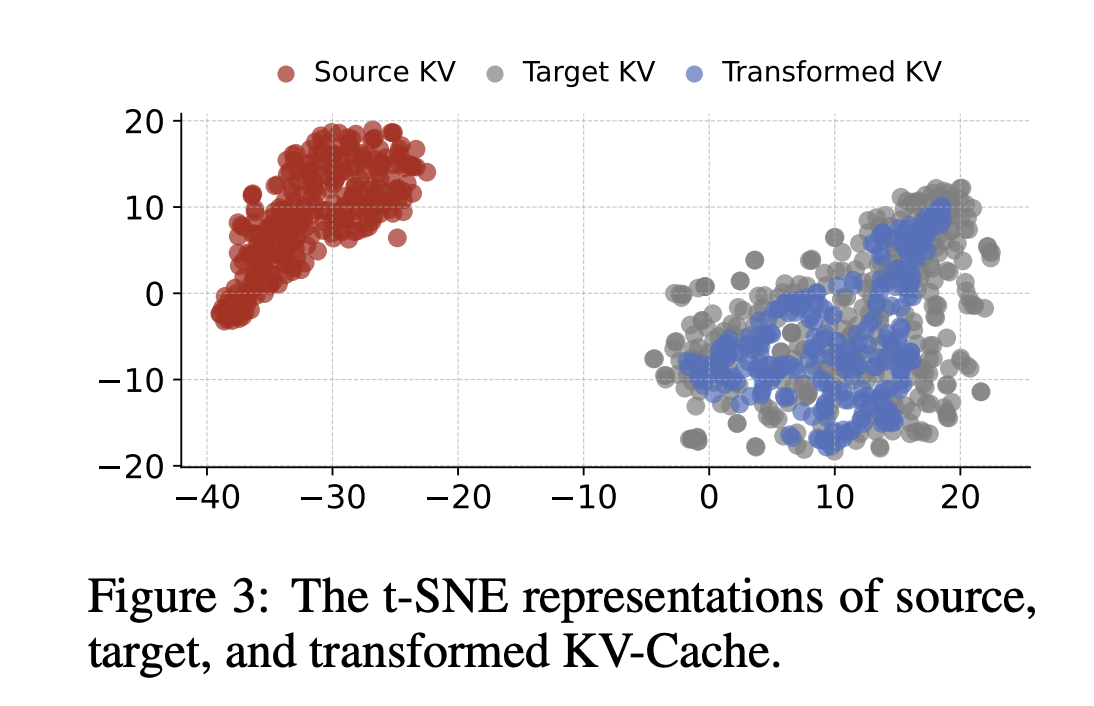

Cache transformation oracle

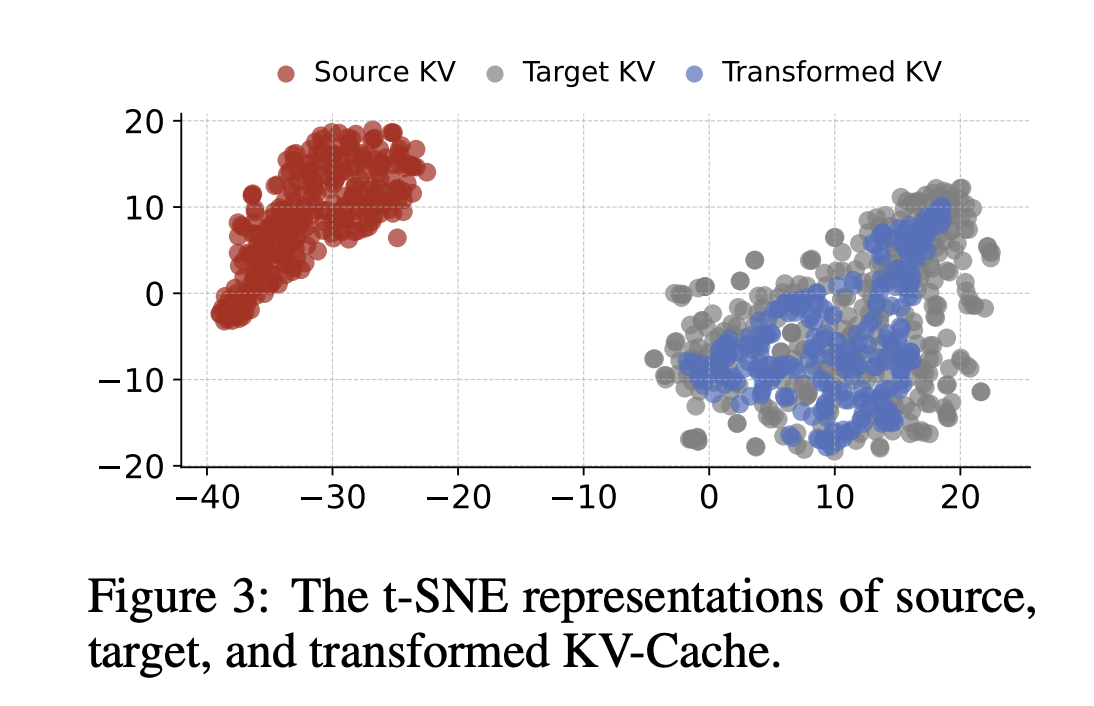

Next, they test whether KV-Cache from one model can be transformed into the space of another model. A three-layer MLP is trained to map KV-Cache from Qwen3 4B to Qwen3 0.6B. t-SNE plots show that the transformed cache lies inside the target cache manifold, but only within a sub-region of it.
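The projector in this oracle is a plain MLP. The sketch below shows only the shape of such a mapping in dependency-free Python, and it rests on several assumptions. The dimensions, the ReLU activation and the random initialization are illustrative choices, not the paper's configuration. The class name `KVProjector` is hypothetical. No training is shown: in the experiment the weights are learned, while here they are random.

```python
import math
import random

def dense(in_dim, out_dim, rng):
    """Randomly initialized dense layer returned as (weights, bias)."""
    scale = 1.0 / math.sqrt(in_dim)
    w = [[rng.uniform(-scale, scale) for _ in range(in_dim)] for _ in range(out_dim)]
    return w, [0.0] * out_dim

def apply_dense(layer, x):
    w, b = layer
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]

class KVProjector:
    """Hypothetical 3-layer MLP mapping one source-model K/V vector (src_dim)
    into a target model's K/V space (tgt_dim). Untrained: shapes only."""

    def __init__(self, src_dim, hidden_dim, tgt_dim, seed=0):
        rng = random.Random(seed)
        self.layers = [
            dense(src_dim, hidden_dim, rng),
            dense(hidden_dim, hidden_dim, rng),
            dense(hidden_dim, tgt_dim, rng),
        ]

    def __call__(self, x):
        for i, layer in enumerate(self.layers):
            x = apply_dense(layer, x)
            if i < len(self.layers) - 1:   # ReLU between layers, none on the output
                x = [max(0.0, v) for v in x]
        return x

# Example: project a (hypothetical) 8-dim source vector into a 4-dim target space.
projector = KVProjector(src_dim=8, hidden_dim=16, tgt_dim=4)
projected = projector([0.1] * 8)
```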

C2C: direct semantic communication through KV-Cache

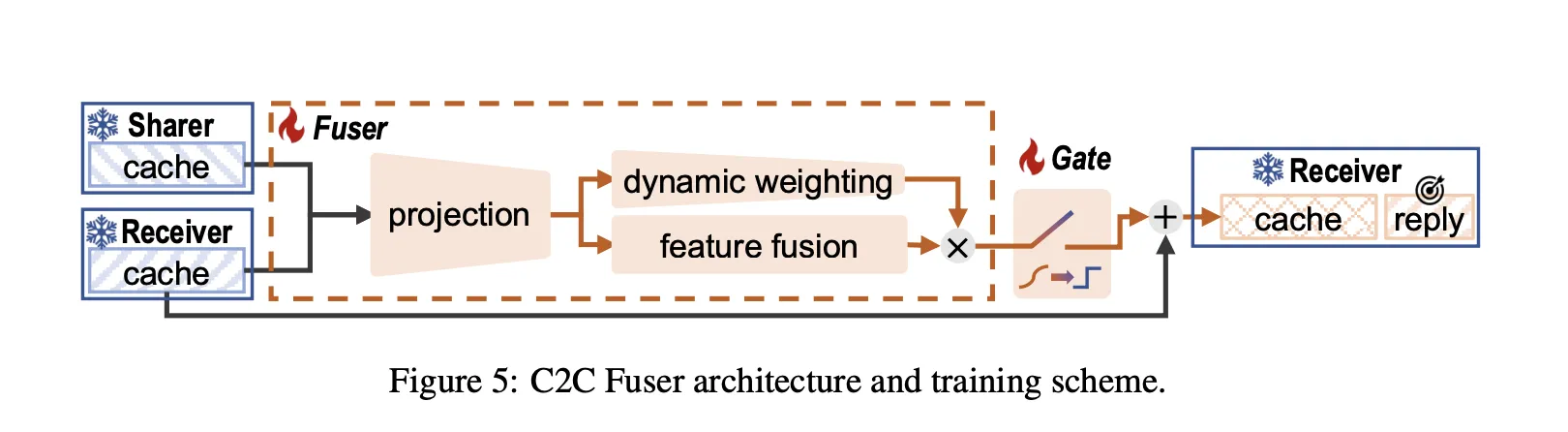

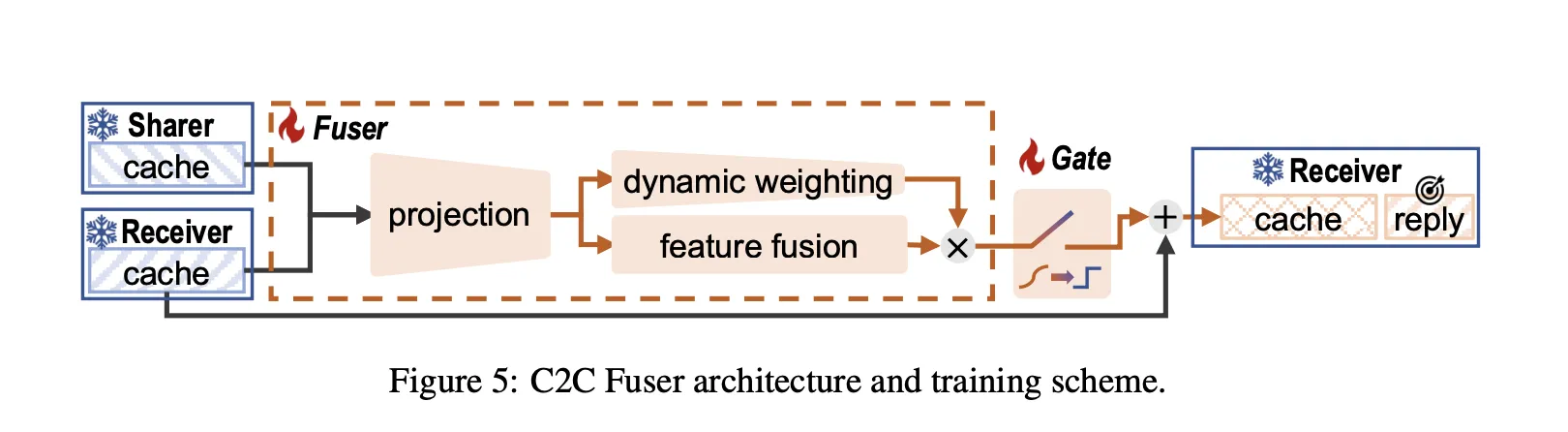

Based on these oracles, the research team defines Cache-to-Cache communication between a Sharer model and a Receiver model.

During prefill, both models read the same input and produce layer-wise KV-Cache. For each Receiver layer, C2C selects a mapped Sharer layer and applies a C2C Fuser to produce a fused cache. During decoding, the Receiver predicts tokens conditioned on this fused cache instead of its original cache.

The C2C Fuser follows a residual integration principle and has three modules:

- Projection module: concatenates Sharer and Receiver KV-Cache vectors, applies a projection layer, then a feature fusion layer.

- Dynamic weighting module: modulates heads based on the input, so that some attention heads rely more on Sharer information.

- Learnable gate: adds a per-layer gate that decides whether to inject Sharer context into that layer. The gate uses a Gumbel sigmoid during training and becomes binary at inference.
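The three Fuser modules and the residual connection can be sketched for a single layer and a single token vector. This is an illustrative toy, not the authors' implementation, and several choices in it are assumptions:

- the hidden size;
- the ReLU activation;
- sigmoid per-head weights;
- the temperature `tau`;
- the rule "open the gate at inference when its logit is positive".

`ToyC2CFuser` is a hypothetical name. Keys and values would each pass through a fuser in the same way.

```python
import math
import random

def dense(in_dim, out_dim, rng):
    s = 1.0 / math.sqrt(in_dim)
    return [[rng.uniform(-s, s) for _ in range(in_dim)] for _ in range(out_dim)]

def matvec(w, x):
    return [sum(a * b for a, b in zip(row, x)) for row in w]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class ToyC2CFuser:
    """Hypothetical single-layer fuser for one token's K (or V) vector."""

    def __init__(self, recv_dim, share_dim, n_heads, hidden=32, seed=0):
        assert recv_dim % n_heads == 0
        rng = random.Random(seed)
        cat_dim = recv_dim + share_dim
        self.n_heads = n_heads
        self.proj = dense(cat_dim, hidden, rng)       # projection layer
        self.fuse = dense(hidden, recv_dim, rng)      # feature fusion layer
        self.head_w = dense(cat_dim, n_heads, rng)    # dynamic per-head weighting
        self.gate_logit = 0.0                         # learnable per-layer gate
        self.rng = rng

    def gate(self, training, tau=1.0):
        if not training:
            return 1.0 if self.gate_logit > 0 else 0.0       # binary gate at inference
        u = min(max(self.rng.random(), 1e-9), 1 - 1e-9)
        noise = math.log(u) - math.log(1 - u)                # logistic noise
        return sigmoid((self.gate_logit + noise) / tau)      # Gumbel-sigmoid relaxation

    def __call__(self, recv, share, training=False):
        x = list(recv) + list(share)                         # concatenate Receiver and Sharer
        h = [max(0.0, v) for v in matvec(self.proj, x)]
        delta = matvec(self.fuse, h)
        weights = [sigmoid(z) for z in matvec(self.head_w, x)]
        d = len(recv) // self.n_heads                        # per-head width
        delta = [v * weights[i // d] for i, v in enumerate(delta)]
        g = self.gate(training)
        return [r + g * v for r, v in zip(recv, delta)]      # residual integration

fuser = ToyC2CFuser(recv_dim=8, share_dim=6, n_heads=2)
receiver_vec, sharer_vec = [0.5] * 8, [1.0] * 6
```

The Fuser only adds a gated, head-weighted correction on top of the Receiver's own vector. A closed gate therefore leaves the Receiver's cache unchanged for that layer, which is what makes per-layer selective injection safe.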

The Sharer and Receiver can come from different model families and sizes, so C2C also defines:

- Token alignment: decode Receiver tokens to strings, re-encode them with the Sharer tokenizer, then choose the Sharer tokens with maximal string coverage.

- Layer alignment: a terminal strategy that pairs the top layers first and walks backward until the shallower model is fully covered.
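Both alignment rules are short to express. In the sketch below, the two tokenizers are replaced by pre-tokenized string lists of the same text, so the decode and re-encode step is assumed to have already happened. "Maximal string coverage" is interpreted as choosing, for each Receiver token, the Sharer token whose character span overlaps it most. The function names are hypothetical.

```python
def char_spans(tokens):
    """Character (start, end) span of each token in the concatenated text."""
    spans, pos = [], 0
    for t in tokens:
        spans.append((pos, pos + len(t)))
        pos += len(t)
    return spans

def align_tokens(recv_tokens, sharer_tokens):
    """For each Receiver token, return the index of the Sharer token
    whose character span overlaps it the most (first one on ties)."""
    assert "".join(recv_tokens) == "".join(sharer_tokens)
    sharer_spans = char_spans(sharer_tokens)
    mapping = []
    for r_start, r_end in char_spans(recv_tokens):
        overlaps = [max(0, min(r_end, s_end) - max(r_start, s_start))
                    for s_start, s_end in sharer_spans]
        mapping.append(max(range(len(sharer_spans)), key=overlaps.__getitem__))
    return mapping

def terminal_layer_alignment(n_recv, n_sharer):
    """Pair top layers first and walk backward until the shallower model is
    fully covered. Returns {receiver_layer: sharer_layer}; leftover layers of
    the deeper model stay unpaired."""
    n = min(n_recv, n_sharer)
    return {n_recv - 1 - i: n_sharer - 1 - i for i in range(n)}

token_map = align_tokens(["Hel", "lo", " world"], ["Hello", " wor", "ld"])
layer_map = terminal_layer_alignment(6, 4)
```

With `["Hel", "lo", " world"]` as Receiver tokens and `["Hello", " wor", "ld"]` as Sharer tokens, the first two Receiver tokens map to Sharer token 0, and " world" maps to " wor". For layers, a 6-layer Receiver and a 4-layer Sharer pair Receiver layers 5, 4, 3, 2 with Sharer layers 3, 2, 1, 0. Receiver layers 0 and 1 are left without a Sharer partner.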

During training, both LLMs are frozen. Only the C2C module is trained, using a next-token prediction loss on the Receiver's outputs. The main C2C fusers are trained on the first 500k samples of the OpenHermes2.5 dataset and evaluated on OpenBookQA, ARC Challenge, MMLU Redux and C-Eval.
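The training objective is ordinary next-token cross-entropy; what differs is which parameters it updates. Here is a minimal sketch that makes three assumptions: logits are plain Python lists; parameter names carry a hypothetical `fuser.` prefix that separates the C2C module from the frozen Sharer and Receiver weights; and `next_token_loss` and `trainable_params` are illustrative names, not a real API.

```python
import math

def next_token_loss(logits_per_step, targets):
    """Mean cross-entropy of the Receiver's next-token predictions.
    logits_per_step[t] are the Receiver's logits at position t (conditioned on
    the fused cache); targets[t] is the id of the token that actually comes next."""
    total = 0.0
    for logits, target in zip(logits_per_step, targets):
        m = max(logits)                                          # for numerical stability
        log_z = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_z - logits[target]                          # -log softmax(target)
    return total / len(targets)

def trainable_params(params):
    """Only fuser parameters would be handed to the optimizer; Sharer and
    Receiver weights stay frozen. The 'fuser.' prefix is a hypothetical convention."""
    return {name: p for name, p in params.items() if name.startswith("fuser.")}
```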

Accuracy and latency: C2C versus text communication

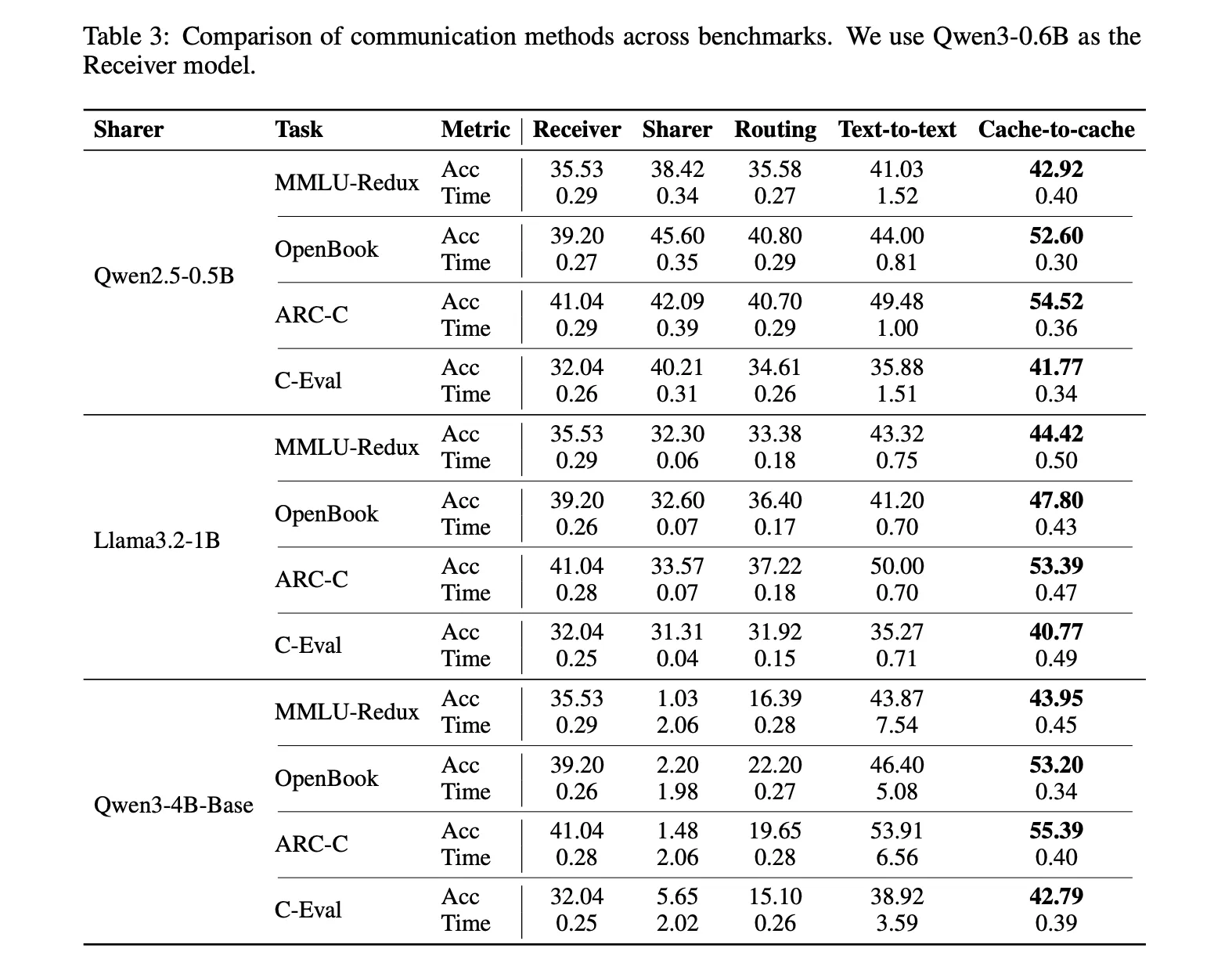

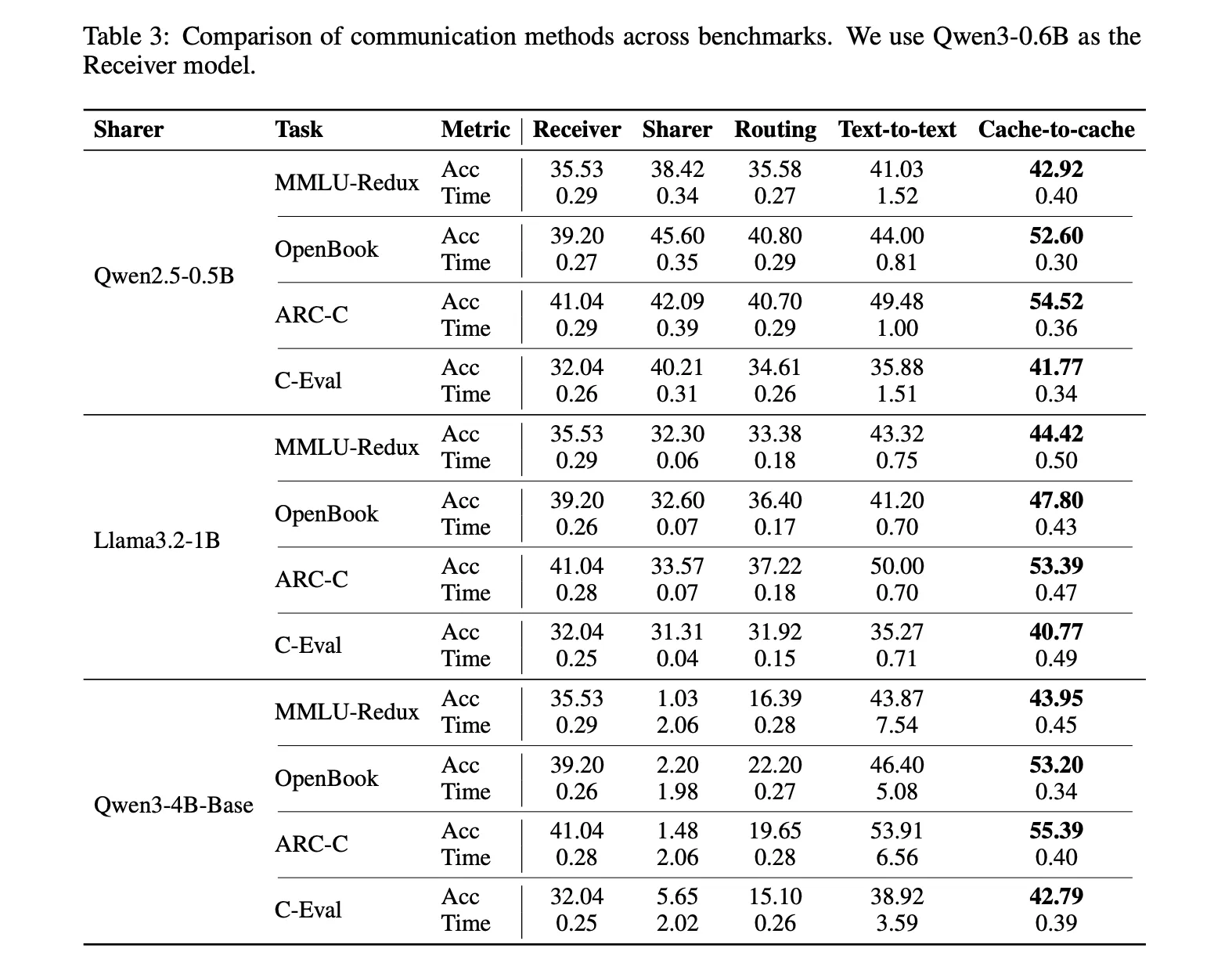

Across many Sharer-Receiver combinations built from Qwen2.5, Qwen3, Llama3.2 and Gemma3, C2C consistently improves Receiver accuracy and reduces latency. In terms of results:

- C2C achieves about 8.5 to 10.5 percent higher average accuracy than individual models.

- C2C outperforms text communication by about 3.0 to 5.0 percent on average.

- C2C delivers around a 2x average latency speedup compared with text-based collaboration, and in some configurations the speedup is larger.

A concrete example uses Qwen3 0.6B as the Receiver and Qwen2.5 0.5B as the Sharer. On MMLU Redux, the Receiver alone reaches 35.53 percent, text-to-text communication reaches 41.03 percent, and C2C reaches 42.92 percent. Average time per query for text-to-text is 1.52 units, while C2C stays close to the single model at 0.40. Similar patterns appear on OpenBookQA, ARC Challenge and C-Eval.

On LongBenchV1, with the same pair, C2C outperforms text communication across all sequence length buckets. For sequences of 0 to 4k tokens, text communication reaches 29.47 while C2C reaches 36.64. The gains persist for 4k to 8k tokens and for longer contexts.
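Working through the reported numbers for the Qwen3 0.6B / Qwen2.5 0.5B pair gives the per-benchmark deltas directly. Accuracy differences are in percentage points, and times are in the units reported above:

```python
# Reported numbers for Receiver Qwen3 0.6B with Sharer Qwen2.5 0.5B.
mmlu_redux = {"receiver": 35.53, "text_to_text": 41.03, "c2c": 42.92}  # accuracy, %
time_per_query = {"text_to_text": 1.52, "c2c": 0.40}                  # reported units
longbench_0_4k = {"text_to_text": 29.47, "c2c": 36.64}                # score

def points(a, b):
    """Difference in percentage points, rounded for display."""
    return round(a - b, 2)

gain_vs_receiver = points(mmlu_redux["c2c"], mmlu_redux["receiver"])   # 7.39
gain_vs_text = points(mmlu_redux["c2c"], mmlu_redux["text_to_text"])   # 1.89
speedup = round(time_per_query["text_to_text"] / time_per_query["c2c"], 1)  # 3.8
longbench_gain = points(longbench_0_4k["c2c"], longbench_0_4k["text_to_text"])  # 7.17
print(gain_vs_receiver, gain_vs_text, speedup, longbench_gain)
```

On MMLU Redux, this pair's time-per-query ratio works out to about 3.8x, above the roughly 2x average across configurations.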

Key Takeaways

- Cache-to-Cache communication lets a Sharer model send information to a Receiver model directly via the KV-Cache. Collaboration therefore does not need intermediate text messages, which removes the token bottleneck and reduces semantic loss in multi-model systems.

- Two oracle studies show two things. Enriching only the question-aligned slice of the cache improves accuracy at constant sequence length. KV-Cache from a larger model can also be mapped into a smaller model's cache space through a learned projector. Together these confirm the cache as a viable communication medium.

- The C2C Fuser architecture combines Sharer and Receiver caches with a projection module, dynamic head weighting and a learnable per-layer gate. It integrates everything residually, which lets the Receiver selectively absorb Sharer semantics without destabilizing its own representation.

- Consistent accuracy and latency gains are observed across Qwen2.5, Qwen3, Llama3.2 and Gemma3 model pairs:
  - about 8.5 to 10.5 percent average accuracy improvement over a single model;
  - 3 to 5 percent gains over text-to-text communication;
  - around 2x faster responses, because unnecessary decoding is eliminated.

Cache-to-Cache reframes multi-LLM communication as a direct semantic transfer problem rather than a prompt engineering problem. C2C projects and fuses KV-Cache between Sharer and Receiver with a neural fuser and learnable gating. This lets it use the deep, specialized semantics of both models while avoiding explicit intermediate text generation, which is both an information bottleneck and a latency cost. C2C delivers 8.5 to 10.5 percent higher accuracy than individual models, and about 2x lower latency than text communication. That makes it a strong systems-level step toward KV-native collaboration between models.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost. The platform stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. It receives over 2 million monthly views, illustrating its popularity among audiences.
