The dexterity gap: from human hand to robot hand

Observe your own hand. As you read this, it’s holding your phone or clicking your mouse with seemingly effortless grace. With over 20 degrees of freedom, human hands possess extraordinary dexterity: they can grip a heavy hammer, rotate a screwdriver, or instantly adjust when something slips.

With a structure similar to that of human hands, dexterous robotic hands offer great potential:

Universal adaptability: Handling a wide range of objects, from delicate needles to basketballs, and adapting to each unique challenge in real time.

Fine manipulation: Executing complex tasks like key rotation, scissor use, and surgical procedures that are impossible with simple grippers.

Skill transfer: Their similarity to human hands makes them ideal for learning from vast human demonstration data.

Despite this potential, most current robots still rely on simple “grippers” because dexterous manipulation is so difficult. These pliers-like grippers are capable only of repetitive tasks in structured environments. This “dexterity gap” severely limits robots’ role in our daily lives.

Among all manipulation skills, grasping is the most fundamental. It’s the gateway through which many other capabilities emerge. Without reliable grasping, robots cannot pick up tools, manipulate objects, or perform complex tasks. Therefore, in this work we focus on equipping dexterous robots with the capability to robustly grasp diverse objects.

The challenge: why dexterous grasping remains elusive

While humans can grasp almost any object with minimal conscious effort, the path to dexterous robotic grasping is fraught with fundamental challenges that have stymied researchers for decades:

High-dimensional control complexity. With 20+ degrees of freedom, dexterous hands present an astronomically large control space. Each finger’s movement affects the entire grasp, making it extremely difficult to determine optimal finger trajectories and force distributions in real time. Which finger should move? How much force should be applied? How should the grasp adjust in real time? These seemingly simple questions reveal the extraordinary complexity of dexterous grasping.

Generalization across diverse object shapes. Different objects demand fundamentally different grasp strategies. For example, spherical objects require enveloping grasps, while elongated objects need precision grips. The system must generalize across this vast diversity of shapes, sizes, and materials without explicit programming for each category.

Shape uncertainty under monocular vision. For practical deployment in daily life, robots must rely on single-camera systems, the most accessible and cost-effective sensing solution. Furthermore, we cannot assume prior knowledge of object meshes, CAD models, or detailed 3D information. This creates fundamental uncertainty: depth ambiguity, partial occlusions, and perspective distortions make it challenging to accurately perceive object geometry and plan appropriate grasps.

Our approach: RobustDexGrasp

To address these fundamental challenges, we present RobustDexGrasp, a novel framework that tackles each challenge with a targeted solution:

Teacher-student curriculum for high-dimensional control. We trained our system through a two-stage reinforcement learning process: first, a “teacher” policy learns ideal grasping strategies with privileged information (complete object shape and tactile sensing) through extensive exploration in simulation. Then, a “student” policy learns from the teacher using only real-world perception (single-view point cloud, noisy joint positions) and adapts to real-world disturbances.

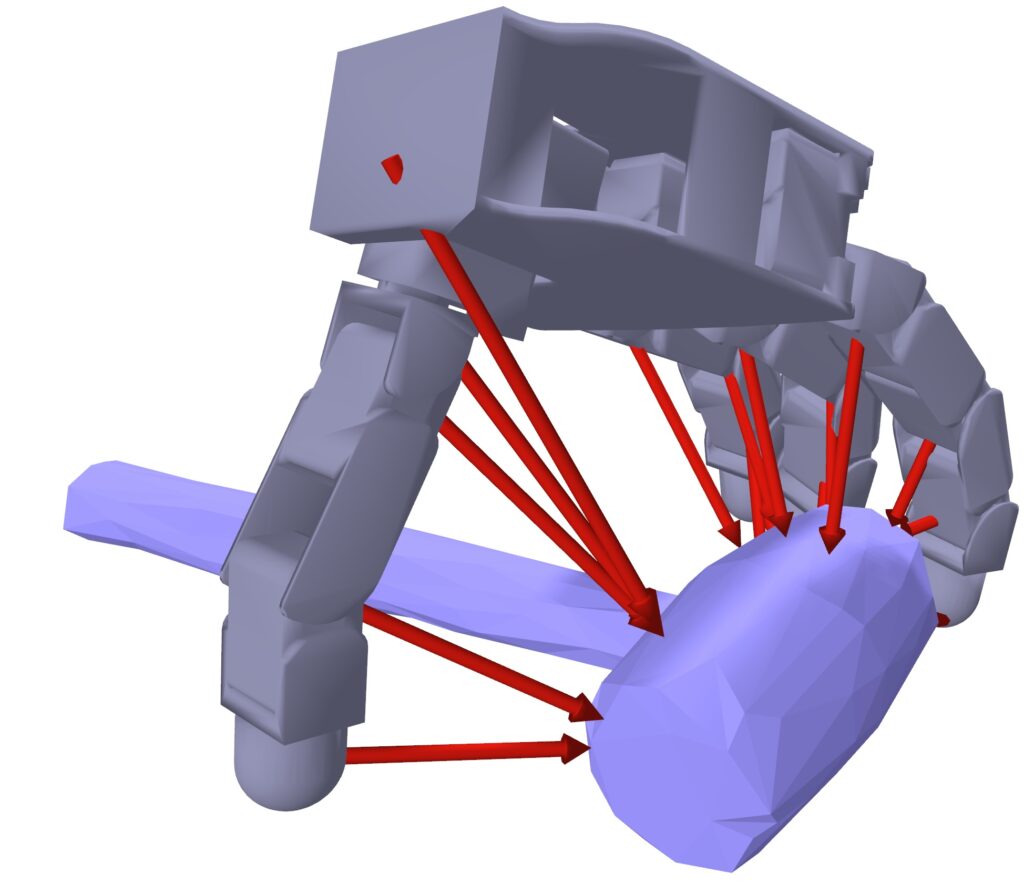
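To make the teacher-student idea concrete, here is a deliberately tiny sketch. It is not the RobustDexGrasp training pipeline: the one-dimensional "finger closing" task, the `teacher_policy` rule, and the noise level are all assumptions made for illustration, and plain least-squares imitation stands in for the reinforcement learning stages described above. It only shows the core pattern, in which a teacher acts on privileged state while a student learns to reproduce those actions from a degraded observation.

```python
import random


def teacher_policy(true_distance):
    """Toy teacher: sees the exact (privileged) distance to the surface
    and commands a proportional finger closure. Hypothetical rule."""
    return 0.8 * true_distance


def make_dataset(n, noise_std, rng):
    """Pair what the student would observe (a noisy distance) with the
    action the teacher takes using the true distance."""
    data = []
    for _ in range(n):
        true_distance = rng.uniform(0.0, 1.0)
        noisy_obs = true_distance + rng.gauss(0.0, noise_std)
        data.append((noisy_obs, teacher_policy(true_distance)))
    return data


def fit_student(data):
    """Fit action = w * obs + b by ordinary least squares (closed form)."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in data)
    var = sum((x - mean_x) ** 2 for x, _ in data)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


rng = random.Random(0)
dataset = make_dataset(500, noise_std=0.05, rng=rng)
w, b = fit_student(dataset)


def student_policy(noisy_obs):
    """Student acts from the noisy observation only."""
    return w * noisy_obs + b
```

The student never sees `true_distance`; it recovers a close approximation of the teacher's behavior from noisy input alone, which is the property that lets a policy trained this way run outside simulation.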

Hand-centric “intuition” for shape generalization. Instead of capturing complete 3D shape features, our method creates a simple “mental map” that answers only one question: “Where are the surfaces relative to my fingers right now?” This intuitive approach ignores irrelevant details (like color or decorative patterns) and focuses only on what matters for the grasp. It’s the difference between memorizing every detail of a chair and simply knowing where to put your hands to lift it: the first is unnecessarily complicated, the second efficient and adaptable.
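One simple way to picture such a hand-centric "mental map" is as a set of offset vectors from points on the hand to the nearest observed surface point. The sketch below assumes exactly that; the function name `nearest_surface_vectors`, the choice of hand points, and the brute-force nearest-neighbor search are illustrative assumptions, not the representation used in the paper.

```python
import math


def nearest_surface_vectors(hand_points, object_points):
    """For each hand point, return the 3D offset to the closest object point.

    hand_points and object_points are lists of (x, y, z) tuples in the
    same coordinate frame. Brute force, fine for small illustrative inputs.
    """
    features = []
    for h in hand_points:
        closest = min(object_points, key=lambda p: math.dist(h, p))
        features.append(tuple(c - a for a, c in zip(h, closest)))
    return features


# Two hypothetical fingertip positions and a tiny observed point cloud.
fingertips = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
cloud = [(1.0, 0.0, 0.0), (0.0, 3.0, 0.0), (0.0, 1.0, 0.5)]
vectors = nearest_surface_vectors(fingertips, cloud)
```

Because the features depend only on where surfaces sit relative to the hand, two objects with very different overall shapes can produce similar inputs whenever the local geometry near the fingers is similar.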

Multi-modal perception for uncertainty reduction. Instead of relying on vision alone, we combine the camera’s view with the hand’s “body awareness” (proprioception, or knowing where its joints are) and a reconstructed “touch sensation” so the robot can cross-check and verify what it is seeing. It’s like how you might squint at something unclear, then reach out to touch it to be sure. This multi-sense approach allows the robot to handle tricky objects that would confuse vision-only systems: grasping a transparent glass becomes possible because the hand “knows” it’s there, even when the camera struggles to see it clearly.
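As a rough illustration of how the three signals could reach a policy together, the sketch below packs them into a single flat input. The `GraspObservation` class, its field names, and the sample values are hypothetical; the article does not describe how the contact signal is reconstructed or how the inputs are actually encoded, so the contact estimates are simply taken as given here.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vector3 = Tuple[float, float, float]


@dataclass
class GraspObservation:
    """Hypothetical container for the three signals described above."""
    surface_vectors: List[Vector3]   # derived from the camera's point cloud
    joint_positions: List[float]     # proprioception, in radians
    contact_estimates: List[float]   # reconstructed touch, one value per fingertip

    def to_vector(self) -> List[float]:
        """Flatten all modalities into one list for a policy network."""
        flat = [c for vec in self.surface_vectors for c in vec]
        return flat + list(self.joint_positions) + list(self.contact_estimates)


obs = GraspObservation(
    surface_vectors=[(0.01, 0.0, -0.02), (0.0, 0.03, 0.0)],
    joint_positions=[0.1, 0.4, 0.2],
    contact_estimates=[0.0, 1.0],
)
policy_input = obs.to_vector()
```

With all modalities in one input, a policy can still act on joint and contact information when the visual part is unreliable, as with a transparent object.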

The results: from laboratory to reality

Trained on just 35 simulated objects, our system demonstrates excellent real-world capabilities:

Generalization: It achieved a 94.6% success rate across a diverse test set of 512 real-world objects, including challenging items like thin boxes, heavy tools, transparent bottles, and soft toys.

Robustness: The robot could maintain a secure grip even when a significant external force (equivalent to a 250 g weight) was applied to the grasped object, showing far greater resilience than previous state-of-the-art methods.

Adaptation: When objects were accidentally bumped or slipped from its grasp, the policy dynamically adjusted finger positions and forces in real time to recover, showcasing a level of closed-loop control previously difficult to achieve.
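For a sense of scale, the 250 g weight in the robustness result can be converted to a force. This assumes standard gravity ($g \approx 9.81\,\mathrm{m/s^2}$) and a static load; the article does not say how the force was applied.

$$
F = m g \approx 0.25\,\mathrm{kg} \times 9.81\,\mathrm{m/s^2} \approx 2.45\,\mathrm{N}
$$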

Beyond picking things up: enabling a new era of robotic manipulation

RobustDexGrasp represents a crucial step toward closing the dexterity gap between humans and robots. By enabling robots to grasp nearly any object with human-like reliability, we’re unlocking new possibilities for robotic applications beyond grasping itself. We demonstrated how it can be seamlessly integrated with other AI modules to perform complex, long-horizon manipulation tasks:

Grasping in clutter: Using an object segmentation model to identify the target object, our method enables the hand to pick a specific item from a crowded pile despite interference from other objects.

Task-oriented grasping: With a vision language model as the high-level planner and our method providing the low-level grasping skill, the robot hand can execute grasps for specific tasks, such as cleaning up the table or playing chess with a human.

Dynamic interaction: Using an object tracking module, our method can successfully control the robot hand to grasp objects moving on a conveyor belt.

Looking ahead, we aim to overcome current limitations, such as handling very small objects (which requires a smaller, more anthropomorphic hand) and performing non-prehensile interactions like pushing. The journey to true robotic dexterity is ongoing, and we’re excited to be part of it.

Read the work in full

Hui Zhang is a PhD candidate at ETH Zurich.
