Maya Research has released Maya1, a 3B parameter text to speech model that turns text plus a short description into controllable, expressive speech while running in real time on a single GPU.

What Maya1 Actually Does

Maya1 is a state-of-the-art speech model for expressive voice generation. It is built to capture real human emotion and precise voice design from text inputs.

The core interface has 2 inputs:

- A natural language voice description, for example “Female voice in her 20s with a British accent, energetic, clear diction” or “Demon character, male voice, low pitch, gravelly timbre, slow pacing”.

- The text that should be spoken

The model combines both signals and generates audio that matches the content and the described style. You can also insert inline emotion tags inside the text.

Maya1 outputs 24 kHz mono audio and supports real time streaming, which makes it suitable for assistants, interactive agents, games, podcasts and live content.

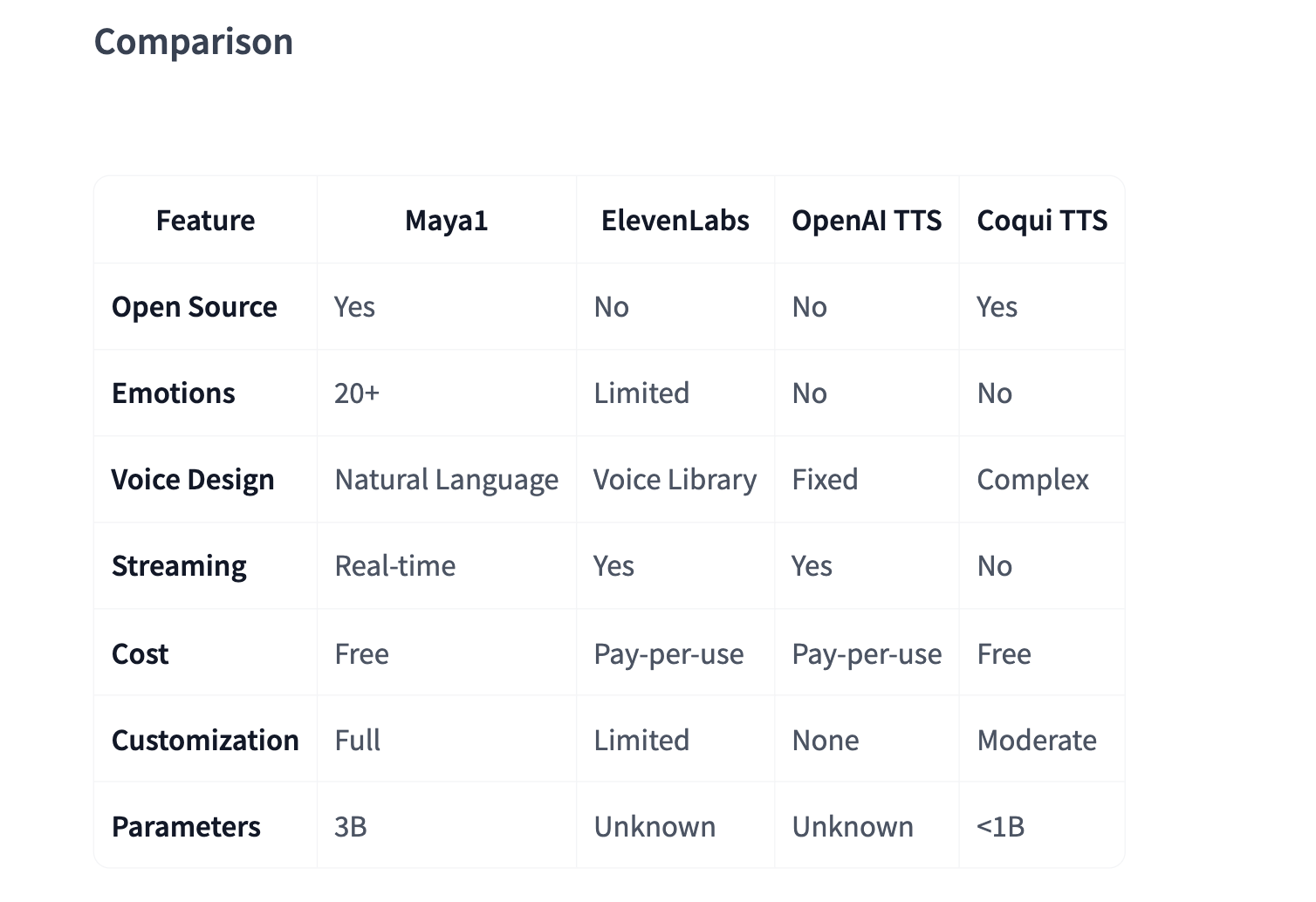

The Maya Research team claims that the model outperforms top proprietary systems while remaining fully open source under the Apache 2.0 license.

Architecture and SNAC Codec

Maya1 is a 3B parameter decoder only transformer with a Llama style backbone. Instead of predicting raw waveforms, it predicts tokens from a neural audio codec named SNAC.

The generation flow is

text → tokenize → generate SNAC codes (7 tokens per frame) → decode → 24 kHz audio

SNAC uses a multi scale hierarchical structure with levels at about 12, 23 and 47 Hz. This keeps the autoregressive sequence compact while preserving detail. The codec is designed for real time streaming at about 0.98 kbps.
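The numbers above can be cross-checked with a little arithmetic. The sketch below sums the three approximate level rates to get codes per second, then converts to a bitrate. The 12 bits per code (a 4096-entry codebook per level) is an assumption not stated in this article, and the helper names are illustrative.

```python
# Approximate SNAC level rates quoted in the text, in Hz.
LEVEL_RATES_HZ = (12, 23, 47)

# Assumption (not stated in the article): each code indexes a
# 4096-entry codebook, which is 12 bits per code.
BITS_PER_CODE = 12


def codes_per_second(rates=LEVEL_RATES_HZ):
    """Total codec tokens the transformer must emit per second of audio."""
    return sum(rates)


def bitrate_kbps(rates=LEVEL_RATES_HZ, bits_per_code=BITS_PER_CODE):
    """Codec bitrate implied by the level rates and bits per code."""
    return codes_per_second(rates) * bits_per_code / 1000


def tokens_for(seconds, rates=LEVEL_RATES_HZ):
    """Rough number of codec tokens needed for a clip of this length."""
    return round(seconds * codes_per_second(rates))


print(codes_per_second())  # 82
print(bitrate_kbps())      # 0.984
print(tokens_for(10))      # 820
```

Under that assumption the result lands close to the quoted 0.98 kbps, and it shows why the sequence stays short: roughly 82 tokens per second of audio, compared with 24,000 raw samples.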

The important point is that the transformer operates on discrete codec tokens instead of raw samples. A separate SNAC decoder, for example hubertsiuzdak/snac_24khz, reconstructs the waveform. This separation makes generation more efficient and easier to scale than direct waveform prediction.
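To make the "7 tokens per frame" idea concrete, here is a minimal sketch of how a flat token stream could be split back into three codec levels before decoding. The 1 + 2 + 4 split mirrors the roughly 12 / 23 / 47 Hz ratio. The slot order inside each frame is an assumption for illustration, and a real implementation would also have to map vocabulary IDs back to codebook indices, which is not shown.

```python
FRAME_SIZE = 7  # tokens per frame, as described in the text


def unpack_frames(tokens):
    """Split a flat list of codec tokens into coarse, mid and fine levels.

    Assumed (hypothetical) slot layout per frame:
        [coarse, mid_a, fine_a, fine_b, mid_b, fine_c, fine_d]
    Trailing tokens that do not fill a whole frame are dropped.
    """
    usable = len(tokens) - len(tokens) % FRAME_SIZE
    coarse, mid, fine = [], [], []
    for start in range(0, usable, FRAME_SIZE):
        frame = tokens[start:start + FRAME_SIZE]
        coarse.append(frame[0])
        mid.extend([frame[1], frame[4]])
        fine.extend([frame[2], frame[3], frame[5], frame[6]])
    return coarse, mid, fine


coarse, mid, fine = unpack_frames(list(range(14)))
print(coarse)  # [0, 7]
print(mid)     # [1, 4, 8, 11]
print(fine)    # [2, 3, 5, 6, 9, 10, 12, 13]
```

The three lists would then be handed to the codec decoder as one tensor per level, each level running at twice the rate of the one before it.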

Training Data And Voice Conditioning

Maya1 is pretrained on an internet scale English speech corpus to learn broad acoustic coverage and natural coarticulation. It is then fine tuned on a curated proprietary dataset of studio recordings that include human verified voice descriptions, more than 20 emotion tags per sample, multiple English accents, and character or role variations.

The documented data pipeline includes:

- 24 kHz mono resampling with loudness normalized to about -23 LUFS

- Voice activity detection with silence trimming between 1 and 14 seconds

- Forced alignment using Montreal Forced Aligner for phrase boundaries

- MinHash LSH text deduplication

- Chromaprint based audio deduplication

- SNAC encoding with 7 token frame packing
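As an illustration of the text deduplication step, the sketch below estimates transcript similarity with MinHash signatures using only the standard library. It is not the Maya Research pipeline: the shingle size, the number of hash functions and the function names are assumptions, and the LSH banding that makes lookups fast at scale is left out.

```python
import hashlib


def shingles(text, k=3):
    """Return the set of k-word shingles of a lowercased transcript."""
    words = text.lower().split()
    if len(words) < k:
        return {" ".join(words)}
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def minhash_signature(items, num_perm=64):
    """One minimum hash value per salted hash function."""
    signature = []
    for seed in range(num_perm):
        salt = seed.to_bytes(4, "little")
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(s.encode("utf-8"), digest_size=8,
                                salt=salt).digest(),
                "little",
            )
            for s in items
        ))
    return signature


def estimated_jaccard(sig_a, sig_b):
    """Fraction of matching positions approximates Jaccard similarity."""
    matches = sum(a == b for a, b in zip(sig_a, sig_b))
    return matches / len(sig_a)


a = minhash_signature(shingles("The quick brown fox jumps over the lazy dog"))
b = minhash_signature(shingles("the quick brown fox jumps over the lazy dog"))
c = minhash_signature(shingles("Speech models predict discrete codec tokens"))
print(estimated_jaccard(a, b))  # 1.0, same shingles after lowercasing
print(estimated_jaccard(a, c))  # close to 0 for unrelated text
```

In a deduplication pass, pairs whose estimated similarity exceeds a chosen threshold would be treated as duplicates and only one copy kept.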

The Maya Research team evaluated several ways to condition the model on a voice description. Simple colon formats and key value tag formats either caused the model to speak the description aloud or did not generalize well. The best performing format uses an XML style attribute wrapper that encodes the description and text in a natural way while remaining robust.

In practice, this means developers can describe voices in free form text, close to how they would brief a voice actor, instead of learning a custom parameter schema.
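A minimal sketch of what such a wrapper might look like follows. The article does not give the exact template, so the `description` attribute name, the quoting rule and the `build_prompt` helper are all assumptions for illustration; check the model card for the real format.

```python
def build_prompt(description: str, text: str) -> str:
    """Wrap a free form voice description and the target text in one prompt.

    Hypothetical template: an XML style attribute carrying the description,
    followed by the text to speak. Double quotes inside the description are
    replaced so they cannot close the attribute early.
    """
    safe_description = description.strip().replace('"', "'")
    return f'<description="{safe_description}"> {text.strip()}'


prompt = build_prompt(
    "Female voice in her 20s with a British accent, energetic, clear diction",
    "Welcome back! Let's get started.",
)
print(prompt)
```

Keeping the description inside an attribute, rather than in front of the text after a colon, is one plausible reason the model stops reading the description aloud: it is marked as metadata rather than as part of the spoken content.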

Inference And Deployment On A Single GPU

The reference Python script on Hugging Face loads the model with AutoModelForCausalLM.from_pretrained("maya-research/maya1", torch_dtype=torch.bfloat16, device_map="auto") and uses the SNAC decoder from SNAC.from_pretrained("hubertsiuzdak/snac_24khz").
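Put together, the two loading calls above could be wrapped as in the sketch below. It assumes the `torch`, `transformers` and `snac` packages are installed and that the tokenizer ships in the same repository as the weights. The `load_maya1` helper is illustrative and is not part of the reference script.

```python
def load_maya1(model_id="maya-research/maya1",
               codec_id="hubertsiuzdak/snac_24khz"):
    """Load the language model, its tokenizer and the SNAC decoder.

    Imports are kept inside the function so that defining it does not
    require the heavy dependencies; calling it downloads the weights.
    """
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer
    from snac import SNAC

    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,  # half precision weights, as in the text
        device_map="auto",           # let the loader place layers on the GPU
    )
    # Assumption: the tokenizer is published alongside the model weights.
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    codec = SNAC.from_pretrained(codec_id).eval()
    return model, tokenizer, codec
```

Calling `model, tokenizer, codec = load_maya1()` once at startup and reusing the three objects avoids reloading several gigabytes of weights per request.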

The Maya Research team recommends a single GPU with 16 GB or more of VRAM, for example an A100, an H100 or a consumer RTX 4090 class card.
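A quick back-of-the-envelope check shows why 16 GB is a comfortable target. The sketch assumes exactly 3 billion parameters stored at 2 bytes each in bfloat16; the real parameter count and runtime overhead will differ.

```python
def weight_memory_gib(num_params, bytes_per_param=2):
    """Memory needed for the weights alone, in GiB (bfloat16 = 2 bytes)."""
    return num_params * bytes_per_param / 2**30


# Assumption: "3B" is taken as exactly 3e9 parameters.
print(round(weight_memory_gib(3e9), 2))  # about 5.59
```

The weights alone take under 6 GiB, which leaves the rest of a 16 GB card for the KV cache, activations and the SNAC decoder.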

For production, they provide a vllm_streaming_inference.py script that integrates with vLLM. It supports Automatic Prefix Caching for repeated voice descriptions, a WebAudio ring buffer, multi GPU scaling and sub 100 millisecond latency targets for real time use.
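The ring buffer mentioned above is a standard pattern for streaming audio: the generator writes decoded samples as they arrive, and the playback side reads fixed-size chunks. The sketch below shows the idea in Python. It is not the WebAudio implementation from the repository, and the class and method names are illustrative.

```python
class RingBuffer:
    """Fixed-capacity FIFO for audio samples, backed by a circular list."""

    def __init__(self, capacity):
        self._buf = [0.0] * capacity
        self._capacity = capacity
        self._read = 0   # index of the oldest unread sample
        self._size = 0   # number of unread samples currently stored

    def write(self, samples):
        """Store samples until the buffer is full; return how many fit."""
        written = 0
        for sample in samples:
            if self._size == self._capacity:
                break
            self._buf[(self._read + self._size) % self._capacity] = sample
            self._size += 1
            written += 1
        return written

    def read(self, n):
        """Remove and return up to n samples in arrival order."""
        n = min(n, self._size)
        out = [self._buf[(self._read + i) % self._capacity] for i in range(n)]
        self._read = (self._read + n) % self._capacity
        self._size -= n
        return out


rb = RingBuffer(4)
rb.write([1, 2, 3])
print(rb.read(2))        # [1, 2]
rb.write([4, 5, 6])
print(rb.read(4))        # [3, 4, 5, 6]
```

Because the storage is allocated once and indices wrap around, playback never waits on memory allocation, which matters when the latency budget is under 100 milliseconds.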

Beyond the core repository, they have released:

- A Hugging Face Space that exposes an interactive browser demo where users enter text and voice descriptions and listen to the output

- GGUF quantized variants of Maya1 for lighter deployments using llama.cpp

- A ComfyUI node that wraps Maya1 as a single node, with emotion tag helpers and SNAC integration

These projects reuse the official model weights and interface, so they stay consistent with the main implementation.

Key Takeaways

- Maya1 is a 3B parameter, decoder only, Llama style text to speech model that predicts SNAC neural codec tokens instead of raw waveforms, and outputs 24 kHz mono audio with streaming support.

- The model takes 2 inputs, a natural language voice description and the target text, and supports more than 20 inline emotion tags.

- Maya1 is trained with a pipeline that combines large scale English pretraining and studio quality fine tuning with loudness normalization, voice activity detection, forced alignment, text deduplication, audio deduplication and SNAC encoding.

- The reference implementation runs on a single GPU with 16 GB or more of VRAM using torch_dtype=torch.bfloat16, integrates with a SNAC decoder, and has a vLLM based streaming server with Automatic Prefix Caching for low latency deployment.

- Maya1 is released under the Apache 2.0 license, with official weights, a Hugging Face Space demo, GGUF quantized variants and ComfyUI integration, which makes expressive, emotion rich, controllable text to speech accessible for commercial and local use.

Maya1 pushes open source text to speech into territory that was previously dominated by proprietary APIs. A 3B parameter Llama style decoder that predicts SNAC codec tokens, runs on a single 16 GB GPU with vLLM streaming and Automatic Prefix Caching, and exposes more than 20 inline emotions with natural language voice design is a practical building block for real time agents, games and tools. Overall, Maya1 shows that expressive, controllable TTS can be both open and production ready.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.
