Large language model agents are starting to store everything they see, but can they actually improve their policies at test time from these experiences rather than just replaying context windows?

Researchers from the University of Illinois Urbana-Champaign and Google DeepMind propose Evo-Memory, a streaming benchmark and agent framework that targets this exact gap. Evo-Memory evaluates test-time learning with self-evolving memory, asking whether agents can accumulate and reuse strategies from continuous task streams instead of relying only on static conversational logs.

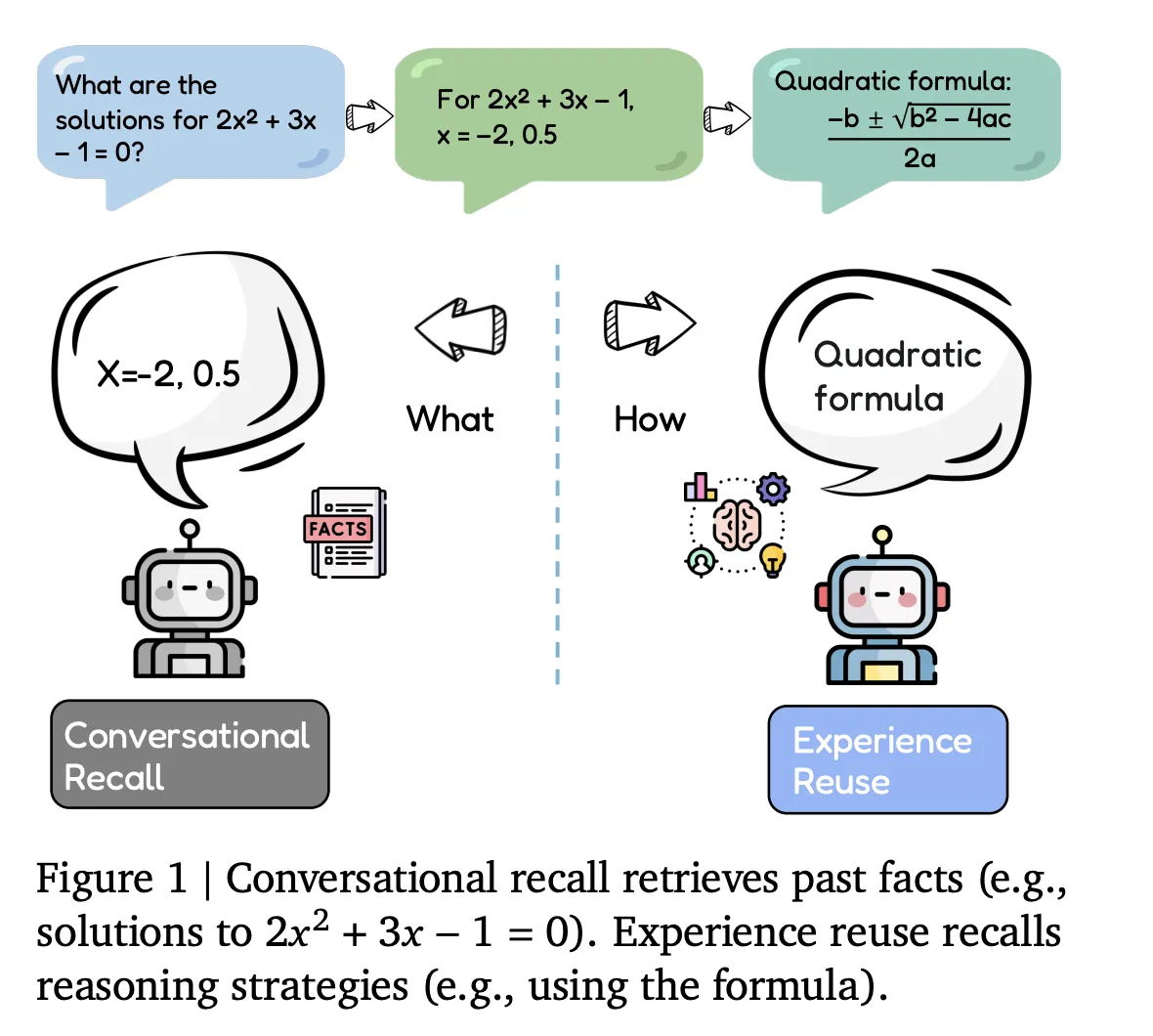

Conversational Recall vs Experience Reuse

Most current agents implement conversational recall. They store dialogue history, tool traces, and retrieved documents, which are then reintegrated into the context window for future queries. This kind of memory serves as a passive buffer, capable of recovering information or recalling earlier steps, but it does not actively modify the agent’s approach for related tasks.

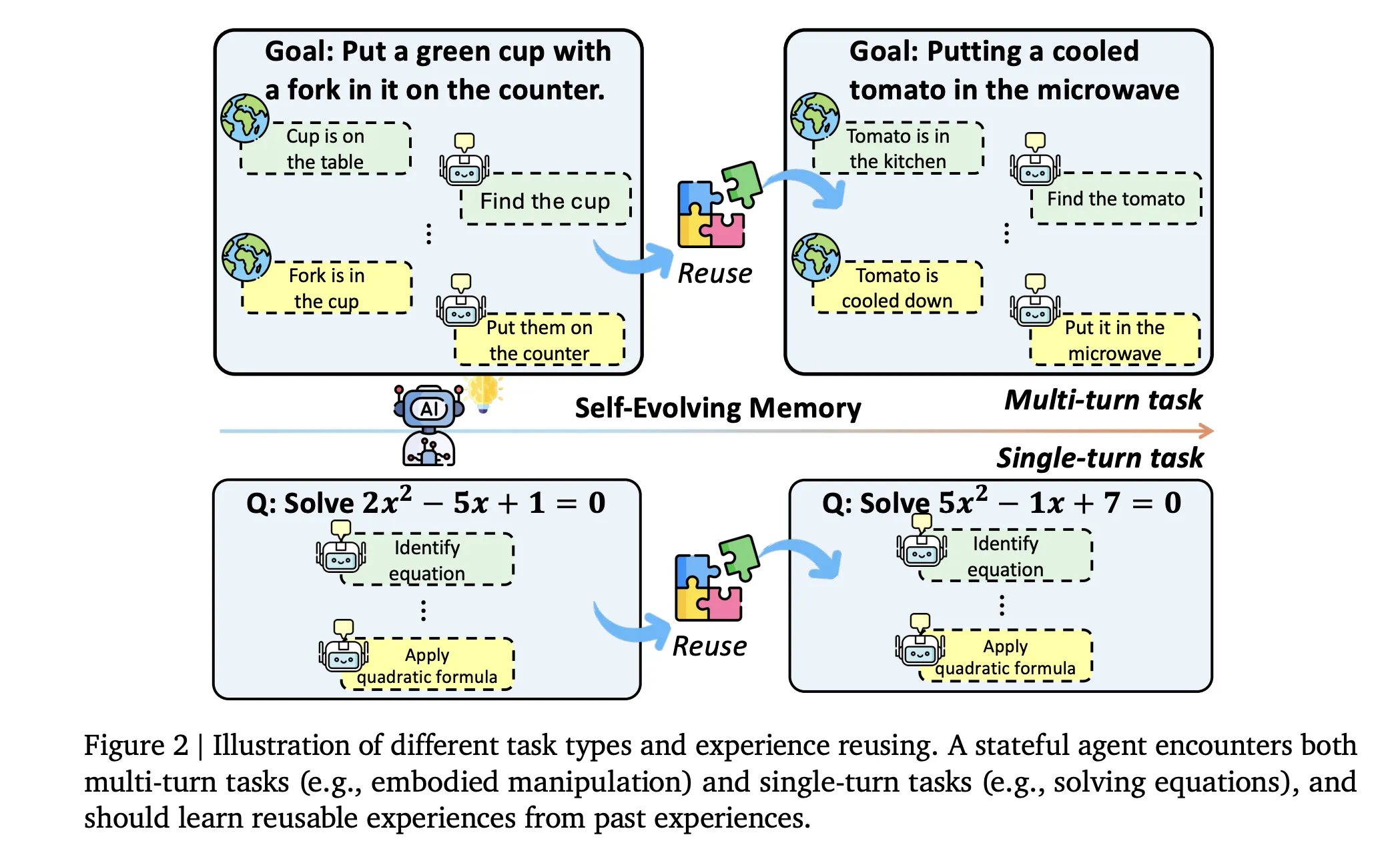

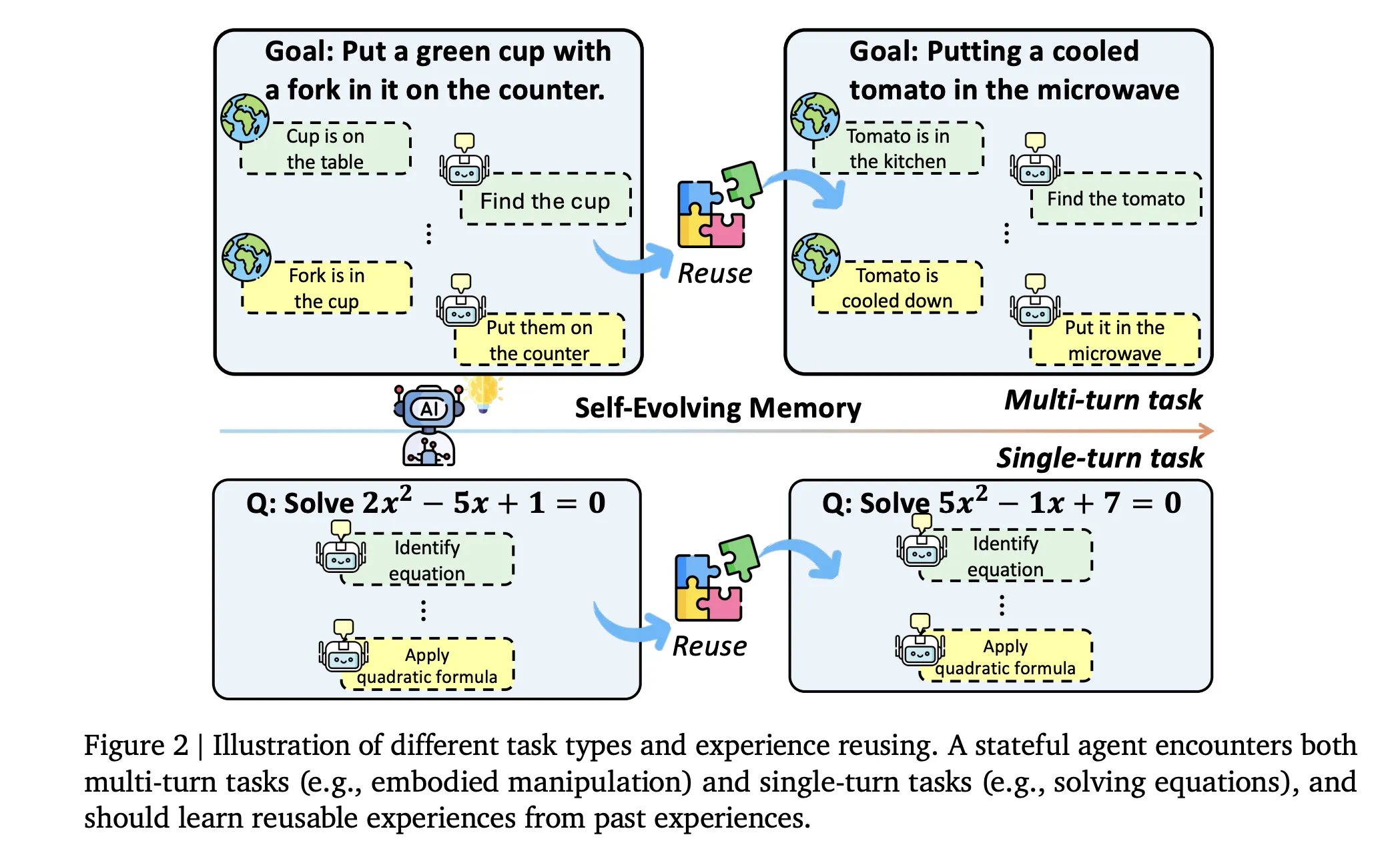

Evo-Memory instead focuses on experience reuse. Here each interaction is treated as an experience that encodes not only inputs and outputs, but also whether a task succeeded and which strategies were effective. The benchmark checks whether agents can retrieve these experiences in later tasks, apply them as reusable procedures, and refine the memory over time.

Benchmark Design and Task Streams

The research team formalizes a memory-augmented agent as a tuple $(F, U, R, C)$. The base model $F$ generates outputs. The retrieval module $R$ searches a memory store. The context constructor $C$ synthesizes a working prompt from the current input and retrieved items. The update function $U$ writes new experience entries and evolves the memory after every step.
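
One way to read the (F, U, R, C) decomposition is as four pluggable callables wrapped around a memory store. The sketch below is a minimal illustration under stated assumptions, not the paper's implementation: the `MemoryAgent` class, the list-of-strings memory, and every `toy_*` component are hypothetical names introduced here so the example runs without an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, List

Memory = List[str]  # assumption: memory is a flat list of text entries


@dataclass
class MemoryAgent:
    """Hypothetical container for the four components named in the text."""
    F: Callable[[str], str]                  # base model: prompt -> output
    U: Callable[[Memory, str, str], Memory]  # update: (memory, input, output) -> new memory
    R: Callable[[Memory, str], List[str]]    # retrieval: (memory, input) -> retrieved items
    C: Callable[[str, List[str]], str]       # context constructor: (input, items) -> prompt
    memory: Memory = field(default_factory=list)

    def step(self, x: str) -> str:
        retrieved = self.R(self.memory, x)       # search the memory store
        prompt = self.C(x, retrieved)            # build the working prompt
        y = self.F(prompt)                       # generate an output
        self.memory = self.U(self.memory, x, y)  # evolve memory after the step
        return y


# Toy stand-ins (all hypothetical) in place of a real model and retriever.
def toy_model(prompt: str) -> str:
    return prompt.splitlines()[-1].upper()


def toy_retrieve(memory: Memory, x: str) -> List[str]:
    return memory[-2:]  # simply the two most recent entries


def toy_context(x: str, items: List[str]) -> str:
    return "\n".join(items + [x])


def toy_update(memory: Memory, x: str, y: str) -> Memory:
    return memory + [f"{x} -> {y}"]


agent = MemoryAgent(F=toy_model, U=toy_update, R=toy_retrieve, C=toy_context)
for task in ["task a", "task b"]:
    print(agent.step(task), "| memory size:", len(agent.memory))
```

Keeping the four pieces separate means a different memory design can be swapped in by changing only `R`, `C`, or `U` while the base model stays fixed.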

Evo-Memory restructures conventional benchmarks into sequential task streams. Each dataset becomes an ordered sequence of tasks where early items carry strategies that are useful for later ones. The suite covers AIME 24, AIME 25, GPQA Diamond, MMLU-Pro (economics, engineering, philosophy), and ToolBench for tool use, along with multi-turn environments from AgentBoard including AlfWorld, BabyAI, ScienceWorld, Jericho, and PDDL planning.

Evaluation is done along four axes. Single-turn tasks use exact match or answer accuracy. Embodied environments report success rate and progress rate. Step efficiency measures average steps per successful task. Sequence robustness tests whether performance is stable when task order changes.
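
The embodied-environment metrics can be made concrete with a small sketch. The `Episode` record and the function names below are assumptions made for illustration. The text also does not say how sequence robustness is scored, so the spread of scores across task orderings used here is only one plausible proxy.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List


@dataclass
class Episode:
    """Hypothetical per-task record for an embodied environment."""
    success: bool
    progress: float  # fraction of sub-goals completed, in [0, 1]
    steps: int


def success_rate(episodes: List[Episode]) -> float:
    return mean([1.0 if e.success else 0.0 for e in episodes])


def progress_rate(episodes: List[Episode]) -> float:
    return mean([e.progress for e in episodes])


def steps_per_success(episodes: List[Episode]) -> float:
    """Step efficiency: average steps over successful tasks only."""
    steps = [e.steps for e in episodes if e.success]
    return mean(steps) if steps else float("nan")


def order_spread(scores_per_ordering: List[float]) -> float:
    """Assumed robustness proxy: population std-dev of one metric across orderings."""
    return pstdev(scores_per_ordering)


# Illustrative values only, not results from the benchmark.
episodes = [Episode(True, 1.0, 10), Episode(False, 0.5, 30), Episode(True, 1.0, 14)]
print(success_rate(episodes), progress_rate(episodes), steps_per_success(episodes))
```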

ExpRAG, a Minimal Experience Reuse Baseline

To set a lower bound, the research team defines ExpRAG. Each interaction becomes a structured experience text with template $\langle x_i, \hat{y}_i, f_i \rangle$, where $x_i$ is the input, $\hat{y}_i$ is the model output, and $f_i$ is feedback, for example a correctness signal. At a new step $t$, the agent retrieves similar experiences from memory using a similarity score and concatenates them with the current input as in-context examples. Then it appends the new experience to memory.

ExpRAG does not change the agent control loop. It is still a single-shot call to the backbone, but now augmented with explicitly stored prior tasks. The design is intentionally simple so that any gains on Evo-Memory can be attributed to task-level experience retrieval, not to new planning or tool abstractions.
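
The retrieve, concatenate, append cycle described for ExpRAG can be sketched in a few lines. This is a hedged illustration, not the authors' code: the `Experience` class, the prompt layout, and `exprag_step` are hypothetical. Token-level Jaccard overlap stands in for the similarity score, which in practice would more likely come from an embedding-based retriever.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Experience:
    x: str      # task input
    y_hat: str  # model output
    f: str      # feedback, e.g. a correctness signal

    def as_text(self) -> str:
        return f"Input: {self.x}\nOutput: {self.y_hat}\nFeedback: {self.f}"


def jaccard(a: str, b: str) -> float:
    """Stand-in similarity score (assumption): token-set overlap."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def retrieve(memory: List[Experience], query: str, k: int = 2) -> List[Experience]:
    """Return the k stored experiences whose inputs are most similar to the query."""
    return sorted(memory, key=lambda e: jaccard(e.x, query), reverse=True)[:k]


def exprag_step(model: Callable[[str], str],
                feedback_fn: Callable[[str, str], str],
                memory: List[Experience], x: str, k: int = 2) -> str:
    examples = retrieve(memory, x, k)
    # Retrieved experiences become in-context examples ahead of the new input.
    prompt = "\n\n".join([e.as_text() for e in examples] + [f"Input: {x}\nOutput:"])
    y_hat = model(prompt)  # still a single call to the backbone
    memory.append(Experience(x, y_hat, feedback_fn(x, y_hat)))  # append new experience
    return y_hat


memory = [Experience("add 2 and 3", "5", "correct"),
          Experience("name the capital of France", "Paris", "correct")]
print(retrieve(memory, "add 4 and 5", k=1)[0].x)
```

Because the control loop is untouched, the only moving parts are the similarity function and the number of retrieved examples `k`.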

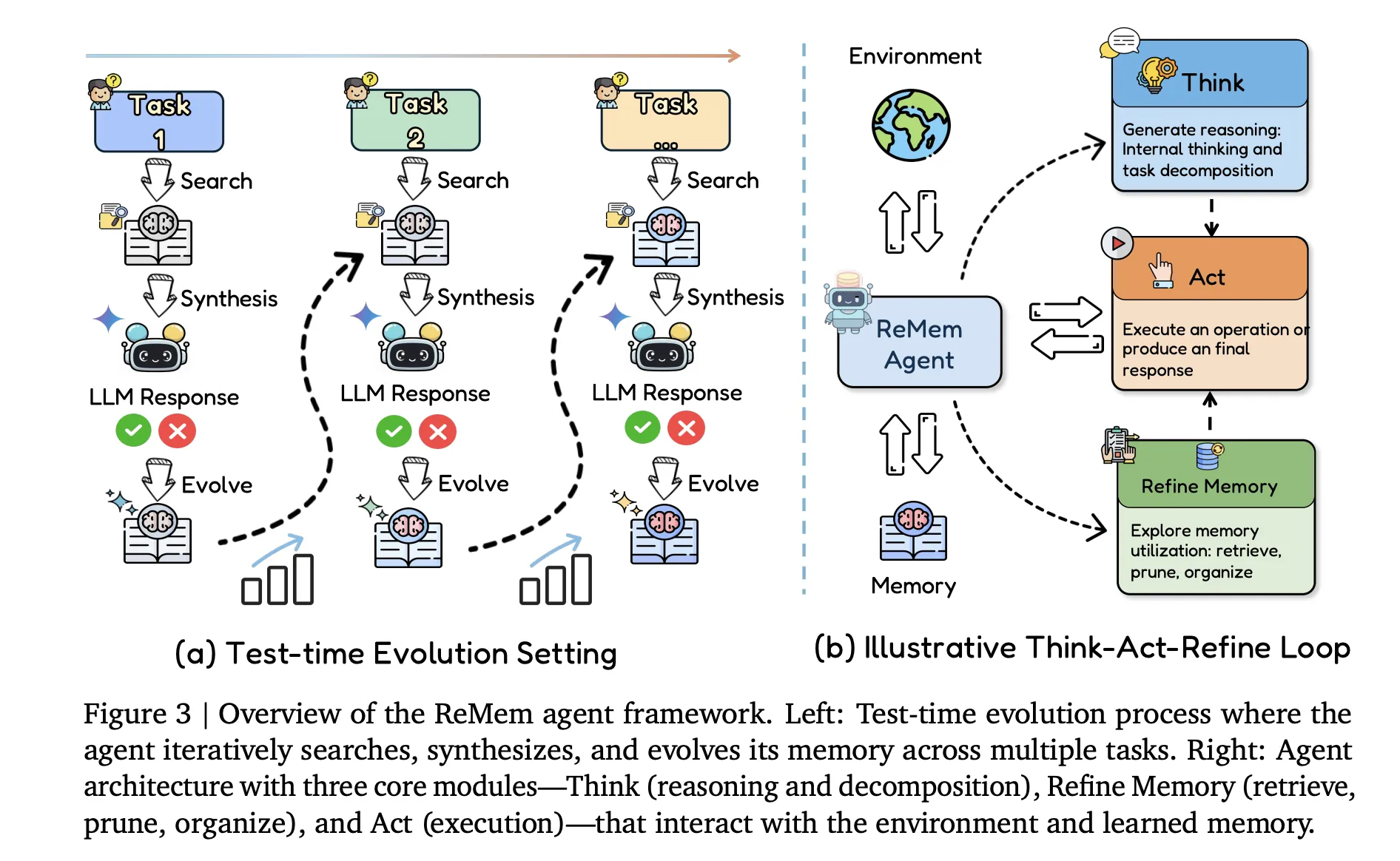

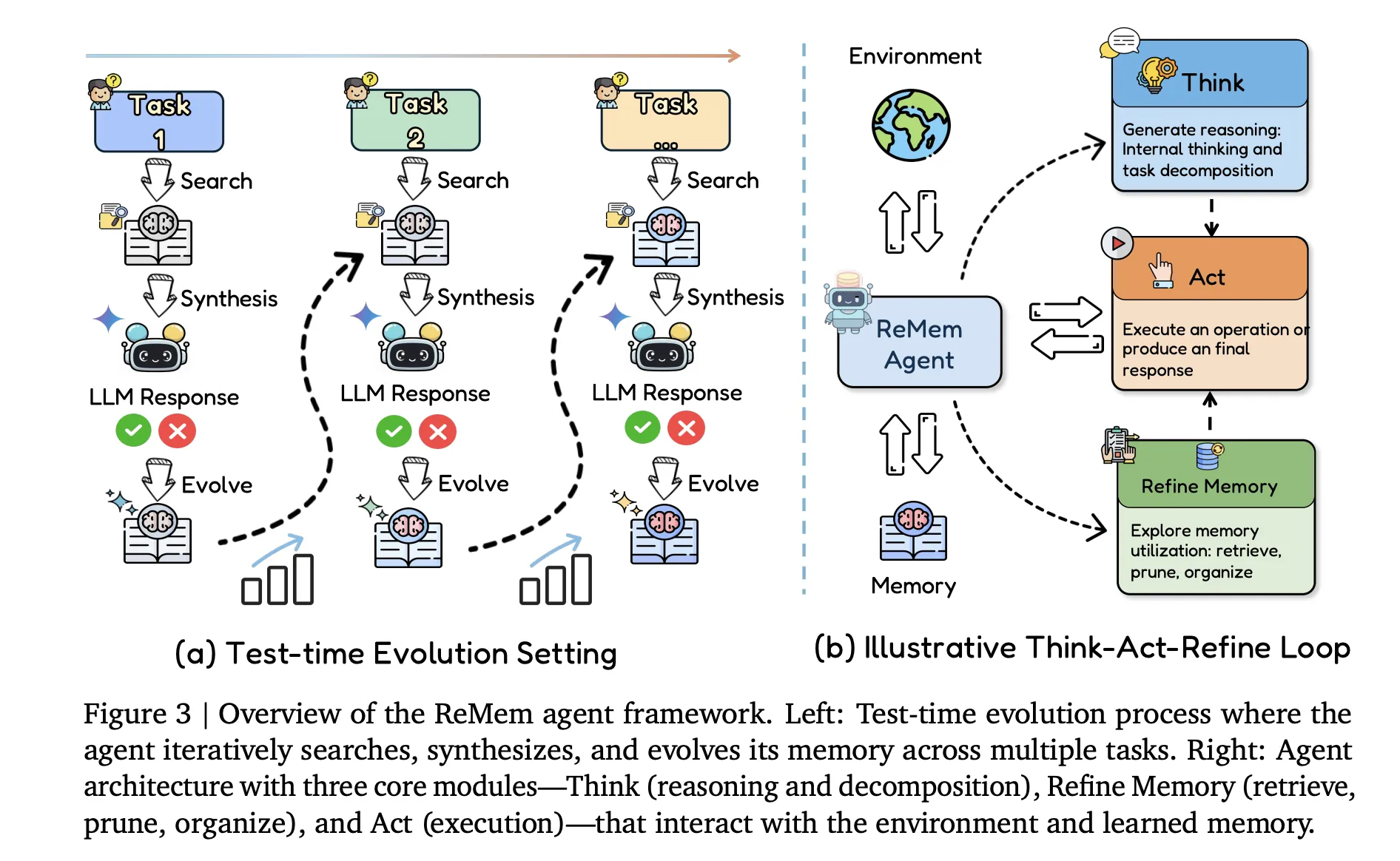

ReMem, Action Think Memory Refine

The main contribution on the agent side is ReMem, an action-think-memory refine pipeline built on top of the same backbone models. At each internal step, given the current input, memory state and past reasoning traces, the agent chooses one of three operations:

- Think generates intermediate reasoning traces that decompose the task.

- Act emits an environment action or final answer visible to the user.

- Refine performs meta-reasoning on memory by retrieving, pruning and reorganizing experience entries.

This loop induces a Markov decision process where the state includes the query, current memory and ongoing thoughts. Within a step the agent can interleave multiple Think and Refine operations, and the step terminates when an Act operation is issued. In contrast to standard ReAct-style agents, memory is no longer a fixed buffer. It becomes an explicit object that the agent reasons about and edits during inference.
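
The control flow of a single ReMem step can be sketched as a loop that keeps applying Think and Refine until an Act is issued. Everything below is an assumption made for illustration: the `State` fields, the `(operation, payload)` policy interface, the `max_ops` guard, and the scripted policy that stands in for the backbone model's choice. Refine is reduced here to pruning a single entry, whereas the text describes retrieval and reorganization as well.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class State:
    query: str
    memory: List[str]
    thoughts: List[str] = field(default_factory=list)


# Hypothetical interface: the policy maps the state to (operation, payload).
Policy = Callable[[State], Tuple[str, str]]


def remem_step(policy: Policy, state: State, max_ops: int = 8) -> str:
    """Interleave Think and Refine until an Act terminates the step."""
    for _ in range(max_ops):
        op, payload = policy(state)
        if op == "think":
            state.thoughts.append(payload)  # add an intermediate reasoning trace
        elif op == "refine":
            # Simplified refine: prune the memory entry named by the payload.
            state.memory = [m for m in state.memory if m != payload]
        elif op == "act":
            return payload                  # environment action or final answer
        else:
            raise ValueError(f"unknown operation: {op}")
    raise RuntimeError("no Act issued within max_ops operations")


def scripted_policy(state: State) -> Tuple[str, str]:
    """Toy stand-in for the model's choice of operation."""
    if not state.thoughts:
        return "think", "split the task into sub-goals"
    if "stale tip" in state.memory:
        return "refine", "stale tip"
    return "act", "final answer"


state = State(query="example task", memory=["useful tip", "stale tip"])
print(remem_step(scripted_policy, state), state.memory)
```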

Results on Reasoning, Tools and Embodied Environments

The research team instantiates all methods on Gemini 2.5 Flash and Claude 3.7 Sonnet under a unified search-predict-evolve protocol. This isolates the effect of memory architecture, since prompting, search and feedback are held constant across baselines.

On single-turn benchmarks, evolving memory methods produce consistent but moderate gains. For Gemini 2.5 Flash, ReMem reaches an average exact match of 0.65 across AIME 24, AIME 25, GPQA Diamond and the MMLU-Pro subsets, and API and accuracy scores of 0.85 and 0.71 on ToolBench. ExpRAG also performs strongly, with an average of 0.60, and outperforms several more complex designs such as Agent Workflow Memory and Dynamic Cheatsheet variants.

The impact is larger in multi-turn environments. On Claude 3.7 Sonnet, ReMem reaches success and progress of 0.92 and 0.96 on AlfWorld, 0.73 and 0.83 on BabyAI, 0.83 and 0.95 on PDDL, and 0.62 and 0.89 on ScienceWorld, giving an average of 0.78 success and 0.91 progress across datasets. On Gemini 2.5 Flash, ReMem achieves an average of 0.50 success and 0.64 progress, improving over history and ReAct-style baselines in all four environments.

Step efficiency is also improved. In AlfWorld, average steps to complete a task drop from 22.6 for a history baseline to 11.5 for ReMem. Lightweight designs such as ExpRecent and ExpRAG reduce steps as well, which indicates that even simple task-level experience reuse can make behavior more efficient without architectural changes to the backbone.

A further analysis links gains to task similarity within each dataset. Using embeddings from the retriever encoder, the research team computes the average distance from tasks to their cluster center. ReMem’s margin over a history baseline correlates strongly with this similarity measure, with a reported Pearson correlation of about 0.72 on Gemini 2.5 Flash and 0.56 on Claude 3.7 Sonnet. Structured domains such as PDDL and AlfWorld show larger improvements than diverse sets like AIME 25 or GPQA Diamond.
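
The two ingredients of this analysis, a per-dataset distance to the cluster center and a Pearson correlation across datasets, can be sketched as follows. This is an assumption-laden illustration: Euclidean distance, the function names, and the tiny inputs are chosen here for clarity. The text does not specify the distance metric or how distance is converted into a similarity score.

```python
import math
from statistics import mean
from typing import List, Sequence


def mean_distance_to_center(embeddings: List[Sequence[float]]) -> float:
    """Average Euclidean distance from each task embedding to the centroid."""
    dim = len(embeddings[0])
    center = [mean([e[d] for e in embeddings]) for d in range(dim)]
    return mean([math.dist(e, center) for e in embeddings])


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Toy inputs only: two 2-D "embeddings" and a perfectly linear relationship.
print(mean_distance_to_center([[0.0, 0.0], [2.0, 0.0]]))
print(pearson([1, 2, 3], [2, 4, 6]))
```

In such an analysis, each dataset would contribute one point: its similarity statistic on one axis and the method's margin over the baseline on the other.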

Key Takeaways

- Evo-Memory is a comprehensive streaming benchmark that converts standard datasets into ordered task streams, so agents can retrieve, integrate and update memory over time rather than rely on static conversational recall.

- The framework formalizes memory-augmented agents as a tuple $(F, U, R, C)$ and implements more than 10 representative memory modules, including retrieval-based, workflow and hierarchical memories, evaluated on 10 single-turn and multi-turn datasets across reasoning, question answering, tool use and embodied environments.

- ExpRAG provides a minimal experience reuse baseline that stores each task interaction as a structured text record with input, model output and feedback, then retrieves similar experiences as in-context exemplars for new tasks, already giving consistent improvements over pure history-based baselines.

- ReMem extends the standard ReAct-style loop with an explicit Think, Act, Refine Memory control cycle, which lets the agent actively retrieve, prune and reorganize its memory during inference, leading to higher accuracy, higher success rate and fewer steps on both single-turn reasoning and long-horizon interactive environments.

- Across Gemini 2.5 Flash and Claude 3.7 Sonnet backbones, self-evolving memories such as ExpRAG and especially ReMem make smaller models behave like stronger agents at test time, improving exact match, success and progress metrics without any retraining of base model weights.

Editorial Notes

Evo-Memory is a useful step for evaluating self-evolving memory in LLM agents. It forces models to operate on sequential task streams instead of isolated prompts. It compares more than 10 memory architectures under a single framework. Simple methods like ExpRAG already show clear gains. ReMem’s action, think, refine memory loop improves exact match, success and progress without retraining base weights. Overall, this research makes test-time evolution a concrete design target for LLM agent systems.

Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.
