Microsoft recently launched its newest custom AI processor, the Maia 200, and the timing (or should I say the circumstances) of its launch couldn't be better. Inference, the stage where a trained AI model generates responses and other outputs, has become the most expensive part of running AI systems at scale. To tackle that problem head-on, Microsoft designed the Maia 200 from the ground up with the sole goal of making inference more efficient and cost-effective, and the results are clearly visible.

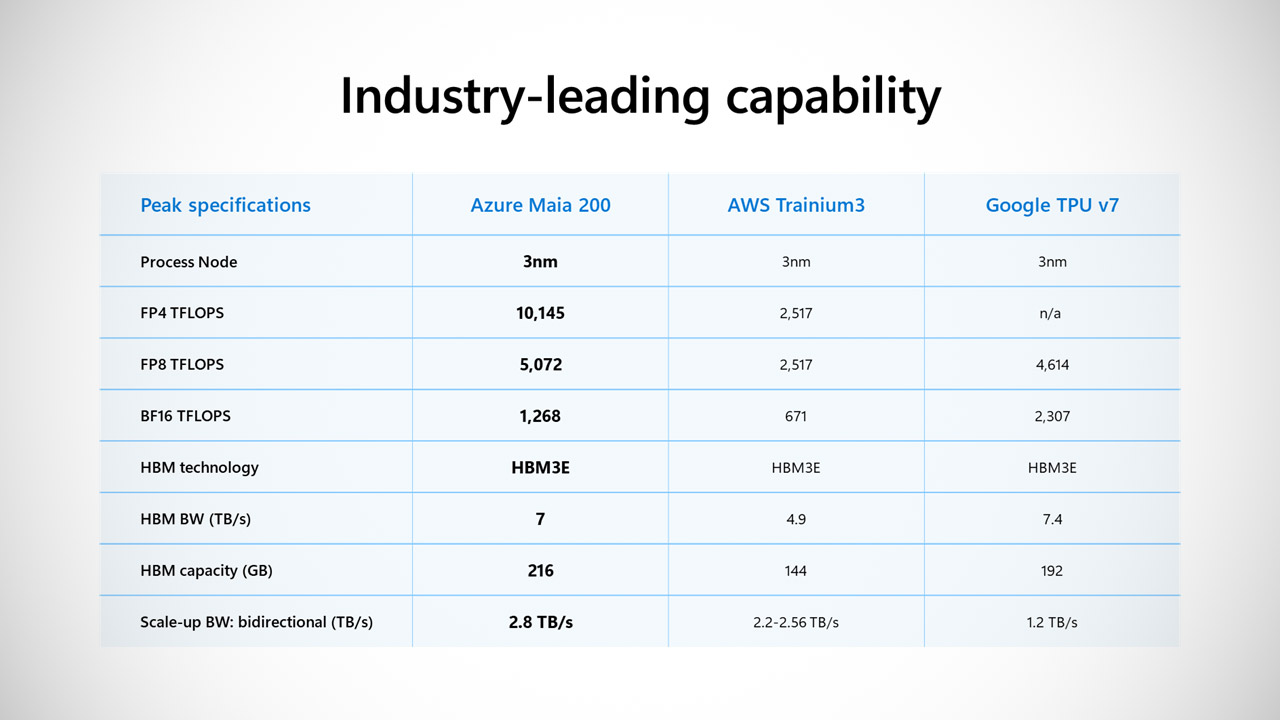

The Maia 200 chip is built on TSMC's 3nm process node, resulting in a chip with a whopping 140 billion transistors. Each chip delivers a blistering 10 petaFLOPS of compute at FP4 precision and a still-respectable 5 petaFLOPS at FP8. Memory is no slouch either, with 216GB of HBM3e running at 7 TB/s of bandwidth and 272MB of on-chip SRAM neatly organized in a multi-tier hierarchy. What about the power draw? The TDP for the entire system-on-chip package is not trivial, at 750W. These numbers are certainly making the competition look a bit green around the gills.
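To put those specs in perspective for inference, a rough roofline-style calculation helps. When a model generates text one token at a time, the chip must stream model weights out of memory for every step, so memory bandwidth often matters as much as raw FLOPS. The sketch below is a back-of-envelope illustration only: it assumes decimal units (1 GB = 10^9 bytes, 1 TB/s = 10^12 bytes/s) and uses the quoted peak figures, which real workloads will not reach. The helper names are hypothetical.

```python
# Back-of-envelope ratios derived from the quoted Maia 200 peak figures.
# Assumes decimal units; real workloads will land below these peaks.

FP4_FLOPS = 10e15   # 10 petaFLOPS at FP4
FP8_FLOPS = 5e15    # 5 petaFLOPS at FP8
HBM_BYTES = 216e9   # 216 GB of HBM3e
HBM_BW = 7e12       # 7 TB/s of HBM bandwidth
TDP_W = 750         # 750 W package TDP


def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """Arithmetic intensity (FLOPs per byte) at which a roofline model
    switches from memory-bound to compute-bound."""
    return peak_flops / bandwidth


def full_sweep_seconds(capacity: float, bandwidth: float) -> float:
    """Time to read the entire HBM once at peak bandwidth."""
    return capacity / bandwidth


fp4_ridge = ridge_point(FP4_FLOPS, HBM_BW)
fp8_ridge = ridge_point(FP8_FLOPS, HBM_BW)
sweep_ms = full_sweep_seconds(HBM_BYTES, HBM_BW) * 1e3
fp4_tflops_per_watt = FP4_FLOPS / TDP_W / 1e12

print(f"FP4 ridge point: {fp4_ridge:.0f} FLOPs/byte")
print(f"FP8 ridge point: {fp8_ridge:.0f} FLOPs/byte")
print(f"Full HBM sweep: {sweep_ms:.1f} ms")
print(f"FP4 efficiency: {fp4_tflops_per_watt:.1f} TFLOPS/W")
```

Under these assumptions, a kernel needs roughly 1,429 FP4 operations per byte fetched from HBM before compute, rather than memory, becomes the bottleneck. And if a model's weights filled all 216GB, simply reading them once would take about 31 ms, which is a rough lower bound on each decoding step for that model.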

Microsoft is making the Maia 200 look like a monster by comparing it to the competition. For starters, it offers three times the FP4 performance of Amazon's third-generation Trainium processor, and its FP8 performance rivals Google's seventh-generation TPU. Microsoft is more than happy to report that a single node can handle the largest models we have today while still leaving room for even larger and better models in the future. The company also promises 30% better performance per dollar than the Maia 100, which saw some real-world use.
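A "30% better performance per dollar" claim is easy to misread as a 30% price cut. Because cost per unit of work (such as cost per token) is the reciprocal of performance per dollar, the implied saving is somewhat smaller. The short sketch below shows the arithmetic; it assumes the 30% gain applies uniformly to the workload being priced, and the function name is hypothetical.

```python
def cost_reduction(perf_per_dollar_gain: float) -> float:
    """Fractional drop in cost per unit of work (e.g. per token) implied by a
    relative performance-per-dollar improvement, holding the workload fixed."""
    # Cost per unit of work is the reciprocal of performance per dollar,
    # so a gain g scales cost by 1 / (1 + g).
    return 1.0 - 1.0 / (1.0 + perf_per_dollar_gain)


saving = cost_reduction(0.30)  # the quoted 30% improvement
print(f"Cost per token drops by about {saving:.1%}")
```

In other words, a 30% boost in performance per dollar works out to roughly a 23% reduction in cost per token, not 30%.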

The chip has already been deployed in Azure's US Central region near Des Moines, Iowa, with US West 3 near Phoenix, Arizona, set to follow shortly. It's more than just a shiny new processor; it's already supporting real workloads for Microsoft's Superintelligence team, such as synthetic data generation and reinforcement learning, and it powers the Copilot features we all know and love, as well as OpenAI models like GPT-5.2. And if you're a developer or student looking for something like this, you're in luck: a preview SDK is available that works with PyTorch and includes a Triton compiler, an optimized kernel library, and a low-level programming language for fine-tuning models across different hardware.

Scott Guthrie, Microsoft's head of cloud and AI, is proud of the Maia 200, calling it the most efficient inference system the company has ever built. The emphasis remains on cutting the real-world cost of producing AI tokens at scale. To be honest, every big cloud provider is under the same pressure: Nvidia's GPUs have long been the go-to for most of the heavy lifting, but limited supply and high prices are taking their toll, forcing everyone to try something different. Microsoft is following Amazon and Google in this shift, and the Maia 200 is essentially a chip that has gone all-in on inference rather than trying to be a jack-of-all-trades.