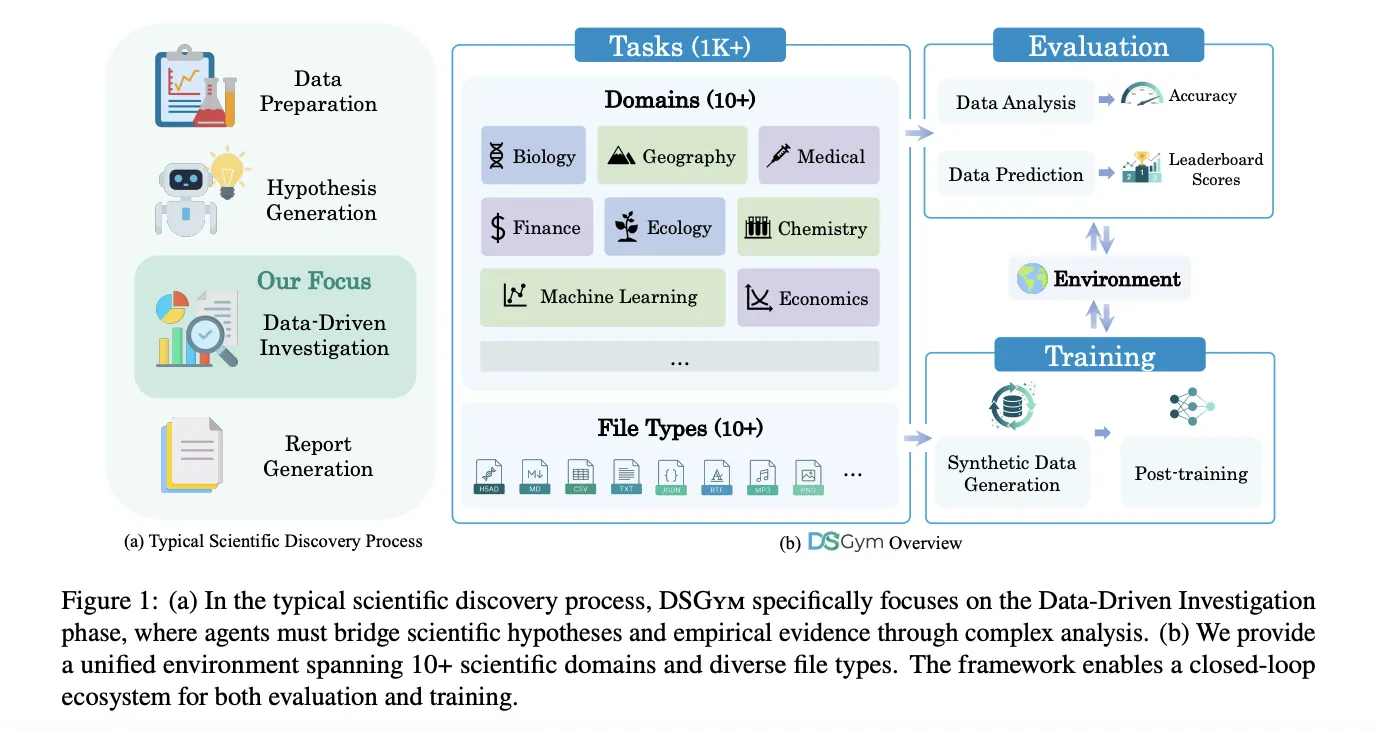

Data science agents should inspect datasets, design workflows, run code, and return verifiable answers, not just autocomplete Pandas code. DSGym, introduced by researchers from Stanford University, Together AI, Duke University, and Harvard University, is a framework that evaluates and trains such agents across more than 1,000 data science challenges with expert-curated ground truth and a consistent post-training pipeline.

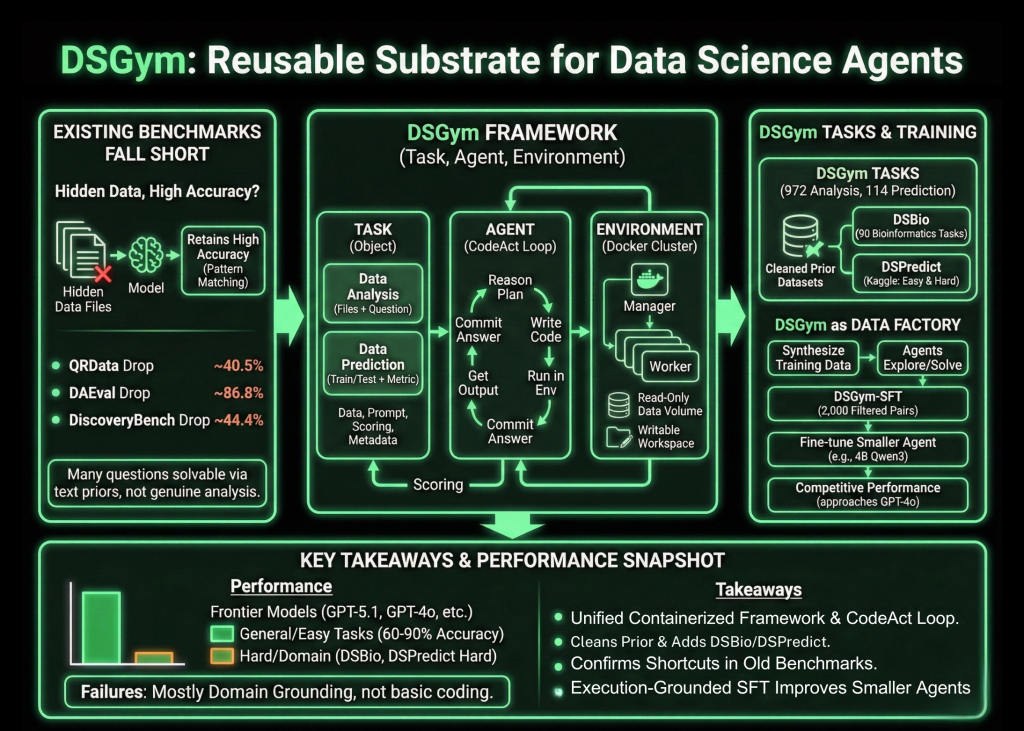

Why current benchmarks fall short

The research team first probes existing benchmarks that claim to test data-aware agents. When data files are hidden, models still retain high accuracy. On QRData the average drop is 40.5%, on DAEval it is 86.8%, and on DiscoveryBench it is 44.4%. Many questions are solvable using priors and pattern matching on the text alone rather than genuine data analysis. The team also finds annotation errors and inconsistent numerical tolerances.
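The probe described above can be sketched as a comparison of the same questions answered with and without data files. This is a minimal illustration, not the paper's code: the function names and the per-question outcomes are made up, and it assumes the drop is measured in absolute percentage points, which the text does not specify.

```python
def accuracy(results):
    """Fraction of correct answers in a list of booleans."""
    return sum(results) / len(results) if results else 0.0


def no_data_gap(with_data, without_data):
    """Compare accuracy with data files against accuracy with files hidden."""
    acc_with = accuracy(with_data)
    acc_without = accuracy(without_data)
    return {
        "with_data": acc_with,
        "without_data": acc_without,
        # Assumption: drop expressed in absolute percentage points.
        "drop_points": 100.0 * (acc_with - acc_without),
    }


# Made-up per-question outcomes, for illustration only.
with_data = [True, True, True, False, True]
without_data = [True, False, True, False, False]

report = no_data_gap(with_data, without_data)
print(report)
```

A small gap on a benchmark suggests that many of its questions can be answered without touching the data.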

Task, Agent, and Environment

DSGym standardizes evaluation into three objects: Task, Agent, and Environment. Tasks are either Data Analysis or Data Prediction. Data Analysis tasks provide one or more files along with a natural language question that must be answered through code. Data Prediction tasks provide train and test splits along with an explicit metric and require the agent to build a modeling pipeline and output predictions.

Each task is packed into a Task Object that holds the data files, query prompt, scoring function, and metadata. Agents interact through a CodeAct-style loop. At each turn, the agent writes a reasoning block that describes its plan, a code block that runs inside the environment, and an answer block when it is ready to commit. The Environment is implemented as a manager and worker cluster of Docker containers, where each worker mounts data as read-only volumes, exposes a writable workspace, and ships with domain-specific Python libraries.
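The loop described above can be sketched in a few lines. Everything here is hypothetical and not DSGym's actual API: the `Task` dataclass, the `<reasoning>`, `<code>`, and `<answer>` tag format, and the scripted agent are assumptions made for illustration, and a plain `exec` call stands in for the containerized worker.

```python
import contextlib
import io
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Task:
    """Hypothetical stand-in for a Task Object."""
    data_files: List[str]
    prompt: str
    score: Callable[[str], float]
    metadata: Dict[str, str] = field(default_factory=dict)


def extract(tag: str, text: str) -> Optional[str]:
    """Return the contents of <tag>...</tag>, or None if the block is absent."""
    match = re.search(rf"<{tag}>(.*?)</{tag}>", text, re.DOTALL)
    return match.group(1).strip() if match else None


def run_code(code: str, namespace: dict) -> str:
    """Run code and capture stdout. A real environment would use a sandbox."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)
    except Exception as exc:
        return f"Error: {exc!r}"
    return buffer.getvalue()


def run_episode(task: Task, agent: Callable[[List[str]], str], max_turns: int = 5) -> float:
    """Alternate agent turns and code execution until an answer is committed."""
    history = [task.prompt]
    namespace: dict = {}  # state persists across turns, like a notebook session
    for _ in range(max_turns):
        turn = agent(history)
        history.append(turn)
        answer = extract("answer", turn)
        if answer is not None:
            return task.score(answer)
        code = extract("code", turn)
        if code is not None:
            history.append("Observation: " + run_code(code, namespace))
    return 0.0  # no answer committed within the turn budget


def scripted_agent(history: List[str]) -> str:
    """Toy agent: runs one code block, then commits the printed value."""
    if len(history) == 1:
        return (
            "<reasoning>Compute the mean.</reasoning>"
            "<code>values = [2, 4, 6]\nprint(sum(values) / len(values))</code>"
        )
    observation = history[-1].split("Observation: ")[-1].strip()
    return f"<reasoning>Use the output.</reasoning><answer>{observation}</answer>"


task = Task(
    data_files=[],
    prompt="What is the mean of 2, 4, and 6?",
    score=lambda answer: float(answer == "4.0"),
)
final_score = run_episode(task, scripted_agent)
print(final_score)
```

Keeping the scoring function on the task rather than in the loop means the same loop can serve both analysis and prediction tasks.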

DSGym Tasks, DSBio, and DSPredict

On top of this runtime, DSGym Tasks aggregates and refines existing datasets and adds new ones. The research team cleans QRData, DAEval, DABStep, MLEBench Lite, and others by dropping unscorable items and applying a shortcut filter that removes questions solved easily by multiple models without data access.
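A shortcut filter of this kind might look like the sketch below. The `min_solvers` threshold, the model names, and the result layout are assumptions for illustration; the article does not state how many models must solve a question before it is dropped.

```python
def shortcut_filter(no_data_results, min_solvers=2):
    """Keep question ids that fewer than `min_solvers` models solved without data.

    `no_data_results` maps question id -> {model name: solved without data?}.
    The threshold is a hypothetical parameter, not a value from the paper.
    """
    kept = []
    for question_id, solved_by_model in no_data_results.items():
        solvers = sum(solved_by_model.values())
        if solvers < min_solvers:
            kept.append(question_id)
    return kept


# Made-up results from running three models with data files hidden.
no_data_results = {
    "q1": {"model_a": True, "model_b": True, "model_c": False},
    "q2": {"model_a": False, "model_b": False, "model_c": False},
    "q3": {"model_a": True, "model_b": False, "model_c": False},
}

kept_ids = shortcut_filter(no_data_results)
print(kept_ids)
```

Here `q1` is dropped because two models answered it without seeing any data, while `q2` and `q3` stay in the benchmark.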

To cover scientific discovery, they introduce DSBio, a suite of 90 bioinformatics tasks derived from peer-reviewed papers and open source datasets. Tasks cover single-cell analysis, spatial and multi-omics, and human genetics, with deterministic numerical or categorical answers supported by expert reference notebooks.

DSPredict targets modeling on real Kaggle competitions. A crawler collects recent competitions that accept CSV submissions and satisfy size and clarity rules. After preprocessing, the suite is split into DSPredict Easy, with 38 playground-style and introductory competitions, and DSPredict Hard, with 54 high-complexity challenges. In total, DSGym Tasks includes 972 data analysis tasks and 114 prediction tasks.

What current agents can and cannot do

The evaluation covers closed source models such as GPT-5.1, GPT-5, and GPT-4o, open weights models such as Qwen3-Coder-480B, Qwen3-235B-Instruct, and GPT-OSS-120B, and smaller models such as Qwen2.5-7B-Instruct and Qwen3-4B-Instruct. All are run with the same CodeAct agent, temperature 0, and tools disabled.

On cleaned general analysis benchmarks, such as QRData Verified, DAEval Verified, and the easier split of DABStep, top models reach between 60% and 90% exact match accuracy. On DABStep Hard, accuracy drops for every model, which shows that multi-step quantitative reasoning over financial tables is still brittle.
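Exact match accuracy depends on how answers are compared, which is where inconsistent numerical tolerances can creep in. The sketch below shows one possible scorer with a single fixed tolerance. The function names, the tolerance value, and the sample pairs are assumptions, not DSGym's scoring rules.

```python
import math


def exact_match(predicted: str, expected: str, rel_tol: float = 1e-6) -> bool:
    """Compare numerically when both sides parse as floats, else as normalized text."""
    try:
        return math.isclose(float(predicted), float(expected), rel_tol=rel_tol)
    except ValueError:
        # At least one side is not a number: fall back to case-insensitive text match.
        return predicted.strip().lower() == expected.strip().lower()


def exact_match_accuracy(pairs) -> float:
    """Fraction of (predicted, expected) pairs that match."""
    return sum(exact_match(p, e) for p, e in pairs) / len(pairs)


# Made-up answers, for illustration only.
pairs = [("0.5", "0.50"), ("Visa", "visa "), ("12", "13"), ("n/a", "7")]
score = exact_match_accuracy(pairs)
print(score)
```

Using one tolerance for every numeric question keeps scores comparable across benchmarks.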

DSBio exposes a more severe weakness. Kimi-K2-Instruct achieves the best overall accuracy of 43.33%. For all models, between 85% and 96% of inspected failures on DSBio are domain grounding errors, including misuse of specialized libraries and incorrect biological interpretations, rather than basic coding errors.

On MLEBench Lite and DSPredict Easy, most frontier models achieve a high Valid Submission Rate, above 80%. On DSPredict Hard, valid submissions rarely exceed 70% and medal rates on Kaggle leaderboards are near 0%. This pattern supports the research team's observation of a simplicity bias, where agents stop after a baseline solution instead of exploring more competitive models and hyperparameters.
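The two prediction metrics mentioned above are simple ratios over runs. The sketch below assumes a hypothetical `Submission` record with made-up competition names and outcomes. It only illustrates how a run can count as valid without earning a medal.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    """Hypothetical record of one agent run on one competition."""
    competition: str
    is_valid: bool  # the submission file was accepted and scored
    medal: bool     # the score reached a medal threshold on the leaderboard


def rate(flags) -> float:
    """Percentage of True values in an iterable of booleans."""
    flags = list(flags)
    return 100.0 * sum(flags) / len(flags) if flags else 0.0


# Made-up outcomes, for illustration only.
runs = [
    Submission("comp_a", is_valid=True, medal=False),
    Submission("comp_b", is_valid=True, medal=False),
    Submission("comp_c", is_valid=False, medal=False),
    Submission("comp_d", is_valid=True, medal=False),
]

valid_rate = rate(s.is_valid for s in runs)
medal_rate = rate(s.medal for s in runs)
print(valid_rate, medal_rate)
```

A high valid rate paired with a near-zero medal rate is the signature of the simplicity bias: the pipeline runs, but stops at a baseline.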

DSGym as a data factory and training ground

The same environment can also synthesize training data. Starting from a subset of QRData and DABStep, the research team asks agents to explore datasets, propose questions, solve them with code, and record trajectories, which yields 3,700 synthetic queries. A judge model filters these to a set of 2,000 high-quality query and trajectory pairs called DSGym-SFT. Fine-tuning a 4B Qwen3-based model on DSGym-SFT produces an agent that is competitive with GPT-4o on standardized analysis benchmarks despite having far fewer parameters.
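The filtering step can be pictured as scoring each recorded trajectory and keeping those above a cutoff. In the sketch below the `Trajectory` fields, the threshold, and the heuristic `toy_judge` are all assumptions. In the described pipeline a judge model plays that role, and its criteria are not given in the article.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Trajectory:
    """Hypothetical record of one synthetic query and the agent's solution."""
    query: str
    steps: List[str]
    final_answer: str


def filter_trajectories(
    trajectories: List[Trajectory],
    judge: Callable[[Trajectory], float],
    threshold: float = 0.5,
) -> List[Trajectory]:
    """Keep trajectories whose judge score meets the threshold."""
    return [t for t in trajectories if judge(t) >= threshold]


def toy_judge(trajectory: Trajectory) -> float:
    """Toy heuristic standing in for a judge model.

    Requires at least one executed step and a non-empty final answer.
    """
    return 1.0 if trajectory.steps and trajectory.final_answer else 0.0


# Made-up trajectories, for illustration only.
candidates = [
    Trajectory("Mean of column x?", ["print(df['x'].mean())"], "3.2"),
    Trajectory("Top category?", [], "A"),
    Trajectory("Row count?", ["print(len(df))"], ""),
]

sft_set = filter_trajectories(candidates, toy_judge)
print([t.query for t in sft_set])
```

Only the first candidate survives, since the others either skipped execution or never committed an answer.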

Key Takeaways

- DSGym provides a unified Task, Agent, and Environment framework, with containerized execution and a CodeAct-style loop, to evaluate data science agents on real code-based workflows instead of static prompts.

- The benchmark suite, DSGym-Tasks, consolidates and cleans prior datasets and adds DSBio and DSPredict, reaching 972 data analysis tasks and 114 prediction tasks across domains such as finance, bioinformatics, and earth science.

- Shortcut analysis on existing benchmarks shows that removing data access only moderately reduces accuracy in many cases, which indicates that prior evaluations often measure pattern matching on text rather than genuine data analysis.

- Frontier models achieve strong performance on cleaned general analysis tasks and on easier prediction tasks, but they perform poorly on DSBio and DSPredict-Hard, where most errors come from domain grounding issues and conservative, under-tuned modeling pipelines.

- The DSGym-SFT dataset, built from 2,000 filtered synthetic trajectories, enables a 4B Qwen3-based agent to approach GPT-4o-level accuracy on multiple analysis benchmarks, which shows that execution-grounded supervision on structured tasks is an effective way to improve data science agents.


Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.
