Moonshot AI has released Kimi K2.5 as an open source visual agentic intelligence model. It combines a large Mixture of Experts language backbone, a native vision encoder, and a parallel multi-agent system called Agent Swarm. The model targets coding, multimodal reasoning, and deep web research, with strong benchmark results on agentic, vision, and coding suites.

Model Architecture and Training

Kimi K2.5 is a Mixture of Experts model with 1T total parameters and about 32B activated parameters per token. The network has 61 layers. It uses 384 experts, with 8 experts selected per token plus 1 shared expert. The attention hidden dimension is 7168 and there are 64 attention heads.
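To make the routing numbers concrete, here is a minimal sketch of top-k expert selection. Only the counts (384 routed experts, 8 selected per token, 1 shared) come from the figures above. The function name `route_token`, the random logits, and the choice to apply a softmax over the selected scores are illustrative assumptions, not Moonshot's actual router.

```python
import math
import random

NUM_EXPERTS = 384   # routed experts (from the article)
TOP_K = 8           # experts selected per token (from the article)

def route_token(scores, top_k=TOP_K):
    """Pick the top_k highest-scoring experts and normalize their weights.

    `scores` holds one router logit per routed expert. The softmax is taken
    over the selected experts only, which is one common choice; the real
    gating function may differ.
    """
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    max_score = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - max_score) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

# Made-up router logits for a single token.
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
selected = route_token(logits)

# The 8 routed experts plus the always-on shared expert run for this token.
active_experts = len(selected) + 1
routed_fraction = TOP_K / NUM_EXPERTS
```

Under these assumptions only 8 of 384 routed experts (about 2%) run for any given token, which is the main reason the activated parameter count stays a small fraction of the total.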

The model uses MLA attention and the SwiGLU activation function. The tokenizer vocabulary size is 160K. The maximum context length during training and inference is 256K tokens. This supports long tool traces, long documents, and multi-step research workflows.

Vision is handled by a MoonViT encoder with about 400M parameters. Visual tokens are trained together with text tokens in a single multimodal backbone. Kimi K2.5 is obtained by continual pretraining on about 15T tokens of mixed vision and text data on top of Kimi K2 Base. This native multimodal training is important because the model learns joint structure over images, documents, and language from the start.

The released checkpoints support standard inference stacks such as vLLM, SGLang, and KTransformers with transformers version 4.57.1 or newer. Quantized INT4 variants are available, reusing the method from Kimi K2 Thinking. This allows deployment on commodity GPUs with lower memory budgets.
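A rough back-of-the-envelope estimate shows what INT4 buys in weight storage. This sketch assumes every parameter is stored at the stated bit width and ignores the KV cache, activations, and quantization scales; the helper `weight_memory_gb` is a hypothetical name for illustration.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

TOTAL_PARAMS = 1e12  # 1T total parameters (from the article)

bf16_gb = weight_memory_gb(TOTAL_PARAMS, 16)  # 2000.0
int4_gb = weight_memory_gb(TOTAL_PARAMS, 4)   # 500.0
reduction = bf16_gb / int4_gb                 # 4.0
```

Under these simplifying assumptions the weights shrink by a factor of four relative to 16-bit storage, although a model of this size would still span multiple devices.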

Coding and Multimodal Capabilities

Kimi K2.5 is positioned as a strong open source coding model, especially when code generation depends on visual context. The model can read UI mockups, design screenshots, and even videos, then emit structured frontend code with layout, styling, and interaction logic.

Moonshot shows examples where the model reads a puzzle image, reasons about the shortest path, and then writes code that produces a visualized solution. This demonstrates cross-modal reasoning, where the model combines image understanding, algorithmic planning, and code synthesis in a single flow.

Because K2.5 has a 256K context window, it can keep long specification histories in context. A practical workflow for developers is to combine design assets, product docs, and existing code in a single prompt. The model can then refactor or extend the codebase while keeping visual constraints aligned with the original design.

Agent Swarm and Parallel Agent Reinforcement Learning

A key feature of Kimi K2.5 is Agent Swarm. This is a multi-agent system trained with Parallel Agent Reinforcement Learning (PARL). In this setup an orchestrator agent decomposes a complex goal into many subtasks. It then spins up domain-specific sub-agents to work in parallel.
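The orchestrator pattern described here can be sketched with a thread pool. This is a toy illustration of decompose-then-fan-out, not Moonshot's implementation: `decompose`, `run_sub_agent`, and `orchestrate` are hypothetical names, and the sub-agent returns a placeholder string where a real one would call the model and its tools.

```python
from concurrent.futures import ThreadPoolExecutor

def decompose(goal):
    """Hypothetical orchestrator step: split a goal into independent subtasks."""
    return [f"{goal}: part {i}" for i in range(1, 5)]

def run_sub_agent(subtask):
    """Hypothetical sub-agent: a real one would call the model and tools."""
    return {"subtask": subtask, "result": f"done({subtask})"}

def orchestrate(goal, max_workers=4):
    """Fan subtasks out to parallel workers and collect their results."""
    subtasks = decompose(goal)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as the subtasks.
        return list(pool.map(run_sub_agent, subtasks))

results = orchestrate("compare web frameworks")
```

Because the subtasks are independent, wall-clock time in this sketch is bounded by the slowest sub-agent rather than by the sum of all of them.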

The Kimi team reports that K2.5 can manage up to 100 sub-agents within a task. It supports up to 1,500 coordinated steps or tool calls in a single run. This parallelism gives about 4.5 times faster completion compared with a single-agent pipeline on wide search tasks.

PARL introduces a metric called Critical Steps. The system rewards policies that reduce the number of serial steps needed to solve the task. This discourages naive sequential planning and pushes the agent to split work into parallel branches while still maintaining consistency.
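The article does not give a formal definition of Critical Steps. One plausible reading, assumed here, is the length of the longest dependency chain in a plan, since that chain bounds how many steps must run one after another. The sketch below compares a sequential plan with a parallel one; the function `critical_steps` and both task graphs are made up for illustration.

```python
from functools import lru_cache

def critical_steps(deps):
    """Length of the longest dependency chain in an acyclic task graph.

    `deps` maps each step to a tuple of the steps it must wait for. Steps
    with no dependency between them are assumed to run in parallel.
    """
    @lru_cache(maxsize=None)
    def depth(step):
        return 1 + max((depth(d) for d in deps[step]), default=0)

    return max(depth(step) for step in deps)

# Sequential plan: each search waits for the previous one.
sequential = {"s1": (), "s2": ("s1",), "s3": ("s2",), "merge": ("s3",)}
# Parallel plan: three independent searches, then one merge.
parallel = {"s1": (), "s2": (), "s3": (), "merge": ("s1", "s2", "s3")}

seq_len = critical_steps(sequential)  # 4
par_len = critical_steps(parallel)    # 2
```

Both plans contain the same four steps, but the parallel plan halves the serial chain, which is the kind of behavior a reward on fewer serial steps would favor.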

One example from the Kimi team is a research workflow where the system needs to discover many niche creators. The orchestrator uses Agent Swarm to spawn a large number of researcher agents. Each agent explores different regions of the web, and the system merges the results into a structured table.
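The merge step in such a workflow might look like the following sketch, assuming each researcher agent returns a list of rows and that duplicates are identified by a normalized name. The function `merge_results`, the row schema, and the sample rows are all invented for illustration.

```python
def merge_results(agent_outputs, key="name"):
    """Merge rows from several agents, keeping the first row seen per key."""
    table = {}
    for rows in agent_outputs:
        for row in rows:
            # Normalize the key so "Alice" and "alice " count as one entry.
            table.setdefault(row[key].strip().lower(), row)
    return sorted(table.values(), key=lambda r: r[key].lower())

# Made-up outputs from two researcher agents with one overlapping entry.
outputs = [
    [{"name": "Alice", "topic": "ceramics"}, {"name": "Bob", "topic": "chiptune"}],
    [{"name": "alice ", "topic": "ceramics"}, {"name": "Cara", "topic": "bonsai"}],
]
merged = merge_results(outputs)
```

Agents exploring overlapping regions of the web will often find the same item, so some form of deduplication is needed before the results form a clean table.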

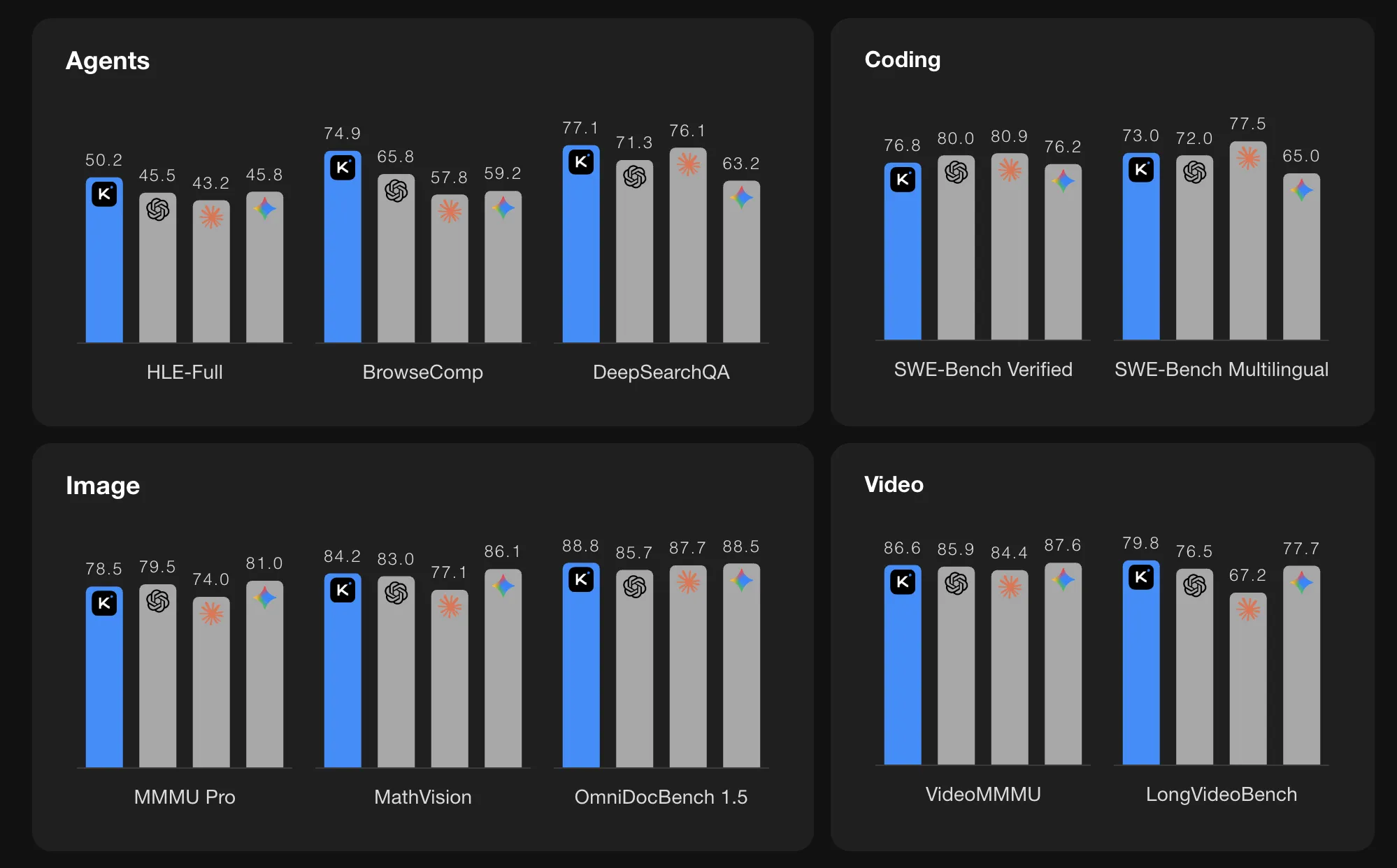

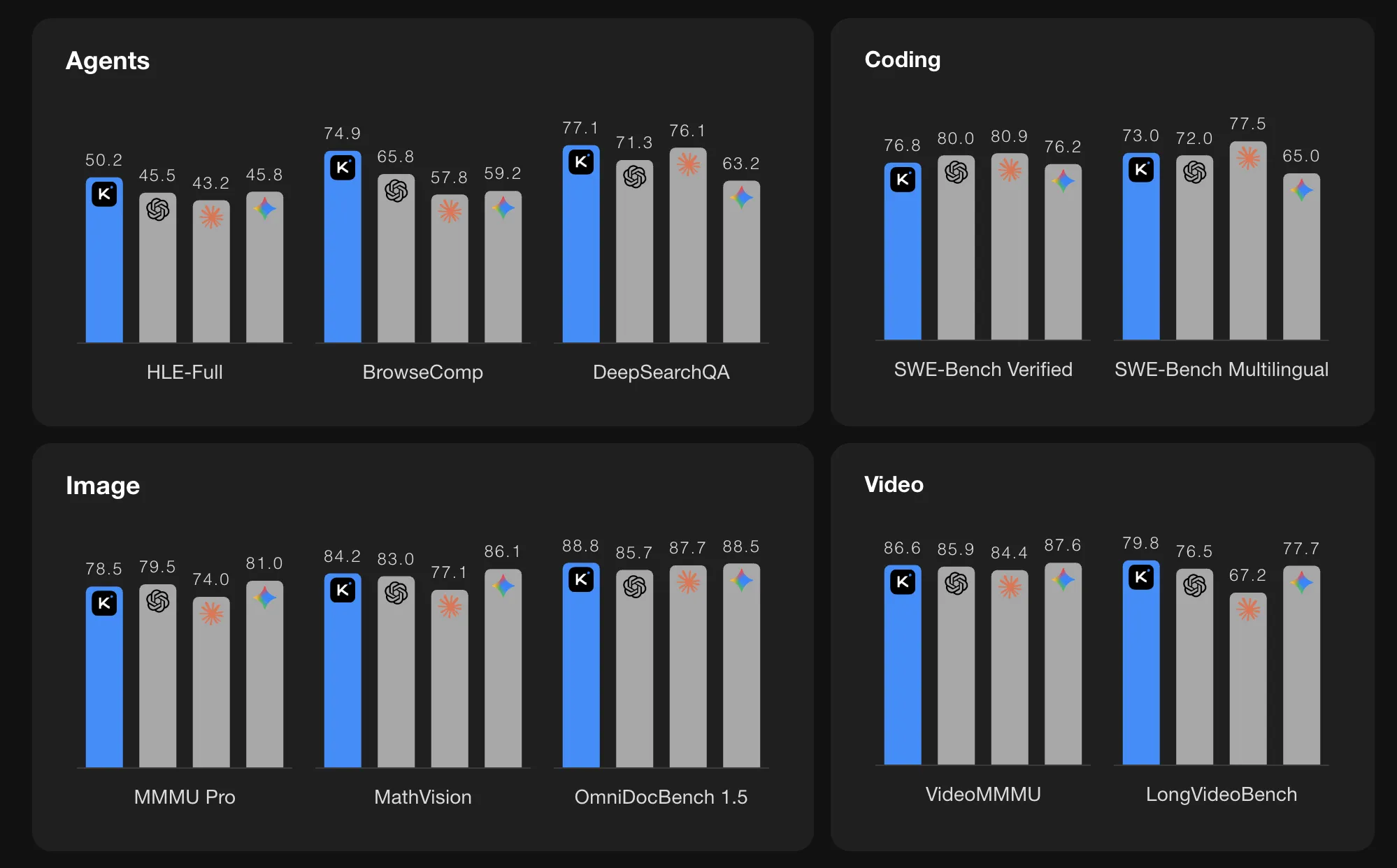

Benchmark Performance

On agentic benchmarks, Kimi K2.5 reports strong numbers. On HLE Full with tools the score is 50.2. On BrowseComp with context management the score is 74.9. In Agent Swarm mode the BrowseComp score increases further to 78.4, and WideSearch metrics also improve. The Kimi team compares these values with GPT 5.2, Claude 4.5, Gemini 3 Pro, and DeepSeek V3, and K2.5 shows the highest scores among the listed models on these specific agentic suites.

On vision and video benchmarks K2.5 also reports high scores. MMMU Pro is 78.5 and VideoMMMU is 86.6. The model performs well on OmniDocBench, OCRBench, WorldVQA, and other document and scene understanding tasks. These results indicate that the MoonViT encoder and long-context training are effective for real-world multimodal problems, such as reading complex documents and reasoning over videos.

On coding benchmarks, the team lists SWE Bench Verified at 76.8, SWE Bench Pro at 50.7, SWE Bench Multilingual at 73.0, Terminal Bench 2.0 at 50.8, and LiveCodeBench v6 at 85.0. These numbers place K2.5 among the strongest open source coding models currently reported on these tasks.

On long-context language benchmarks, K2.5 reaches 61.0 on LongBench V2 and 70.0 on AA LCR under standard evaluation settings. On reasoning benchmarks it achieves high scores on AIME 2025, HMMT 2025 February, GPQA Diamond, and MMLU Pro when used in thinking mode.

Key Takeaways

- Mixture of Experts at trillion scale: Kimi K2.5 uses a Mixture of Experts architecture with 1T total parameters and about 32B active parameters per token, 61 layers, 384 experts, and 256K context length, optimized for long multimodal and tool-heavy workflows.

- Native multimodal training with MoonViT: The model integrates a MoonViT vision encoder of about 400M parameters and is trained on about 15T mixed vision and text tokens, so images, documents, and language are handled in a single unified backbone.

- Parallel Agent Swarm with PARL: Agent Swarm, trained with Parallel Agent Reinforcement Learning, can coordinate up to 100 sub-agents and about 1,500 tool calls per task, giving around 4.5 times faster execution versus a single agent on wide research tasks.

- Strong benchmark results in coding, vision, and agents: K2.5 reports 76.8 on SWE Bench Verified, 78.5 on MMMU Pro, 86.6 on VideoMMMU, 50.2 on HLE Full with tools, and 74.9 on BrowseComp, matching or exceeding listed closed models on several agentic and multimodal suites.
