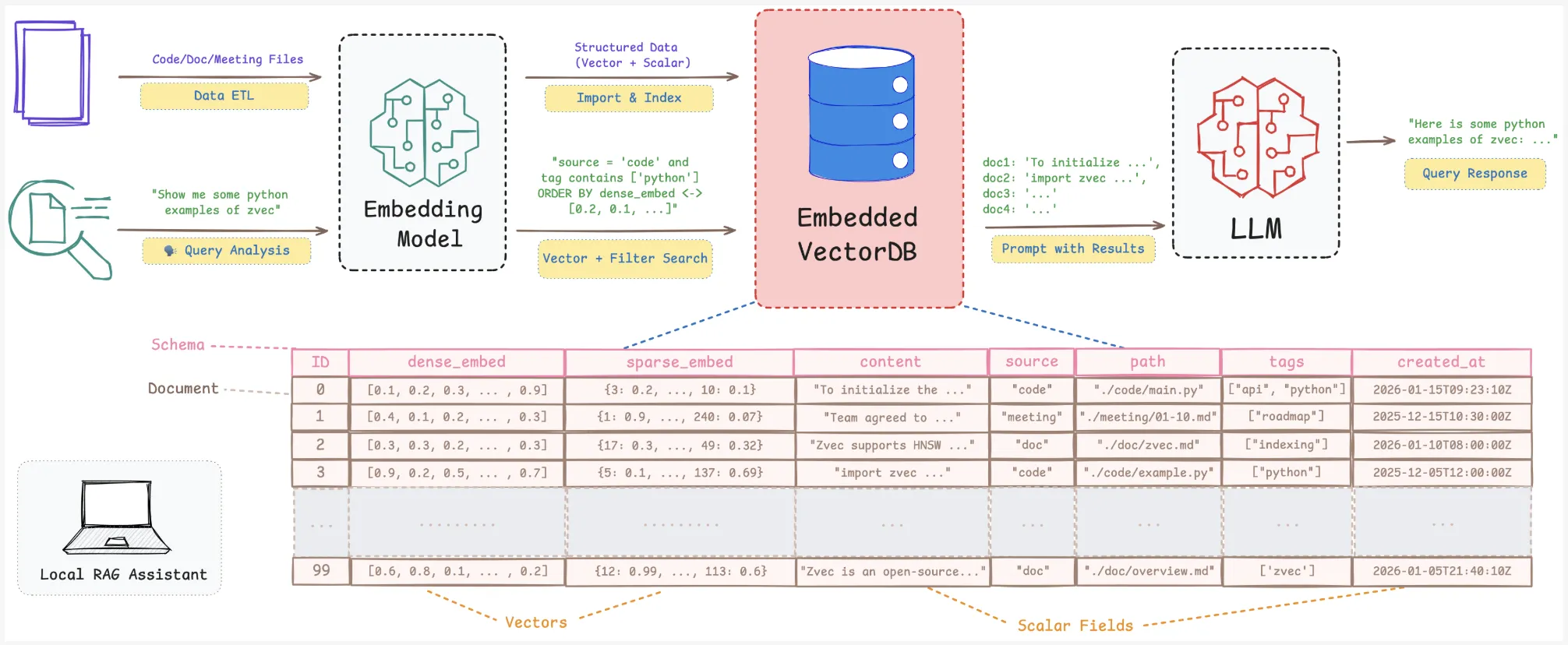

Alibaba's Tongyi Lab research team has released ‘Zvec’, an open source, in-process vector database that targets edge and on-device retrieval workloads. It is positioned as ‘the SQLite of vector databases’ because it runs as a library inside your application and does not require any external service or daemon. It is designed for retrieval augmented generation (RAG), semantic search, and agent workloads that must run locally on laptops, mobile devices, or other constrained hardware and edge devices.

The core idea is simple. Many applications now need vector search and metadata filtering but do not want to run a separate vector database service. Traditional server-style systems are heavy for desktop tools, mobile apps, or command line utilities. An embedded engine that behaves like SQLite, but for embeddings, fills this gap.

Why embedded vector search matters for RAG

RAG and semantic search pipelines need more than a bare index. They need vectors, scalar fields, full CRUD, and safe persistence. Local knowledge bases change as files, notes, and project states change.

Index libraries such as Faiss provide approximate nearest neighbor search but do not handle scalar storage, crash recovery, or hybrid queries. You end up building your own storage and consistency layer. Embedded extensions such as DuckDB-VSS add vector search to DuckDB but expose fewer index and quantization options and weaker resource control for edge scenarios. Service-based systems such as Milvus or managed vector clouds require network calls and a separate deployment, which is often overkill for on-device tools.
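To make that gap concrete, here is a minimal sketch of the kind of storage layer you would otherwise write yourself around a bare index: a hypothetical `TinyVectorStore` that keeps vectors and scalar fields in Python's built-in `sqlite3`, supports upsert, get, and delete, and answers queries with an exact brute-force cosine scan. It is illustrative only and is not how Zvec, Faiss, or Proxima work internally; SQLite transactions stand in for a purpose-built persistence layer, and a real engine would replace the linear scan with an ANN index and push filtering into it.

```python
import json
import math
import sqlite3


def _cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Hypothetical toy store: vectors + scalar fields + CRUD on top of SQLite.

    Search is an exact linear scan, not an approximate nearest neighbor index.
    """

    def __init__(self, path=":memory:"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS docs "
            "(id TEXT PRIMARY KEY, vec TEXT NOT NULL, fields TEXT NOT NULL)"
        )

    def upsert(self, doc_id, vector, **fields):
        # `with self.conn` commits on success and rolls back on an exception.
        with self.conn:
            self.conn.execute(
                "INSERT OR REPLACE INTO docs (id, vec, fields) VALUES (?, ?, ?)",
                (doc_id, json.dumps(vector), json.dumps(fields)),
            )

    def get(self, doc_id):
        row = self.conn.execute(
            "SELECT vec, fields FROM docs WHERE id = ?", (doc_id,)
        ).fetchone()
        return None if row is None else (json.loads(row[0]), json.loads(row[1]))

    def delete(self, doc_id):
        with self.conn:
            self.conn.execute("DELETE FROM docs WHERE id = ?", (doc_id,))

    def search(self, query, topk=10):
        # Score every stored vector, then keep the topk highest scores.
        scored = [
            (doc_id, _cosine(query, json.loads(vec)))
            for doc_id, vec in self.conn.execute("SELECT id, vec FROM docs")
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:topk]
```

Passing a file path such as `TinyVectorStore("kb.sqlite3")` instead of the default `":memory:"` makes the data survive restarts. Even this toy shows how much plumbing (schema, serialization, transactions, scoring) sits outside a pure index library.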

Zvec aims to fill exactly these local scenarios. It gives you a vector-native engine with persistence, resource governance, and RAG-oriented features, packaged as a lightweight library.

Core architecture: in-process and vector-native

Zvec is implemented as an embedded library. You install it with `pip install zvec` and open collections directly in your Python process. There is no external server or RPC layer. You define schemas, insert documents, and run queries through the Python API.

The engine is built on Proxima, Alibaba Group's high-performance, production-grade, battle-tested vector search engine. Zvec wraps Proxima with a simpler API and an embedded runtime. The project is released under the Apache 2.0 license.

Current support covers Python 3.10 to 3.12 on Linux x86_64, Linux ARM64, and macOS ARM64.
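If you ship a tool that embeds Zvec, a small guard can check the stated support matrix before importing it. This is a hypothetical helper based only on the matrix quoted above, which may change in later releases; the `platform.machine()` strings assumed here (`aarch64` on Linux ARM64, `arm64` on Apple silicon macOS) are the usual values but are worth verifying on your targets.

```python
import platform
import sys

# Support matrix as stated at release time (assumption: may change; check the repo).
SUPPORTED_PLATFORMS = {
    ("Linux", "x86_64"),
    ("Linux", "aarch64"),  # Linux ARM64 typically reports 'aarch64'
    ("Darwin", "arm64"),   # macOS on Apple silicon typically reports 'arm64'
}
MIN_PY, MAX_PY = (3, 10), (3, 12)


def is_supported(system=None, machine=None, version=None):
    """Return True if the OS, CPU architecture, and Python version are in the matrix."""
    system = system or platform.system()
    machine = machine or platform.machine()
    version = tuple((version or sys.version_info)[:2])
    return (system, machine) in SUPPORTED_PLATFORMS and MIN_PY <= version <= MAX_PY


print(is_supported())
```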

The design goals are explicit:

- Embedded, in-process execution

- Vector-native indexing and storage

- Production-ready persistence and crash safety

This makes it suitable for edge devices, desktop applications, and zero-ops deployments.

Developer workflow: from installation to semantic search

The quickstart documentation shows a short path from installation to query.

- Install the package: `pip install zvec`

- Define a `CollectionSchema` with one or more vector fields and optional scalar fields.

- Call `create_and_open` to create or open the collection on disk.

- Insert `Doc` objects that contain an ID, vectors, and scalar attributes.

- Build an index and run a `VectorQuery` to retrieve nearest neighbors.

Example:

```python
import zvec

# Define collection schema
schema = zvec.CollectionSchema(
    name="example",
    vectors=zvec.VectorSchema("embedding", zvec.DataType.VECTOR_FP32, 4),
)

# Create collection
collection = zvec.create_and_open(path="./zvec_example", schema=schema)

# Insert documents
collection.insert([
    zvec.Doc(id="doc_1", vectors={"embedding": [0.1, 0.2, 0.3, 0.4]}),
    zvec.Doc(id="doc_2", vectors={"embedding": [0.2, 0.3, 0.4, 0.1]}),
])

# Search by vector similarity
results = collection.query(
    zvec.VectorQuery("embedding", vector=[0.4, 0.3, 0.3, 0.1]),
    topk=10,
)

# Results: list of {'id': str, 'score': float, ...}, sorted by relevance
print(results)
```

Results come back as dictionaries that include IDs and similarity scores. This is enough to build a local semantic search or RAG retrieval layer on top of any embedding model.
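Because results are a ranked list of IDs and scores, an exact brute-force scan over the same toy data is a handy reference for sanity-checking what an index returns. This sketch assumes cosine similarity, since the article does not say which metric the example collection uses; `cosine` and `exact_topk` are hypothetical helpers, not part of Zvec, and the returned dictionaries only mimic the `{'id', 'score'}` shape described above.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 for zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def exact_topk(query, docs, topk=10):
    """Exact nearest neighbors: score every document, then sort descending."""
    scored = [{"id": doc_id, "score": cosine(query, vec)} for doc_id, vec in docs.items()]
    scored.sort(key=lambda hit: hit["score"], reverse=True)
    return scored[:topk]


docs = {
    "doc_1": [0.1, 0.2, 0.3, 0.4],
    "doc_2": [0.2, 0.3, 0.4, 0.1],
}
baseline = exact_topk([0.4, 0.3, 0.3, 0.1], docs)
for hit in baseline:
    print(hit["id"], round(hit["score"], 4))
```

On this toy data `doc_2` ranks ahead of `doc_1` under cosine similarity and also under a raw inner product (0.30 vs. 0.23), so the ordering does not hinge on the metric choice here.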

Performance: VectorDBBench and 8,000+ QPS

Zvec is optimized for high throughput and low latency on CPUs. It uses multithreading, cache-friendly memory layouts, SIMD instructions, and CPU prefetching.
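Python cannot demonstrate SIMD or prefetching directly, but it can show the layout idea behind "cache-friendly memory layouts". The hypothetical `FlatVectors` class below stores every vector in one contiguous row-major float32 buffer, so vector `i` occupies `data[i*dim:(i+1)*dim]` and a full scan walks memory sequentially. This is an illustration, not Zvec's internal format; in native code, this kind of layout is what typically lets compilers vectorize the inner loop and lets hardware prefetchers stream rows ahead of use.

```python
from array import array


class FlatVectors:
    """Hypothetical row-major store: all vectors packed into one contiguous buffer."""

    def __init__(self, dim):
        self.dim = dim
        self.data = array("f")  # 'f' is a C float, typically 32-bit

    def add(self, vec):
        """Append one vector and return its row index."""
        if len(vec) != self.dim:
            raise ValueError("dimension mismatch")
        self.data.extend(vec)
        return len(self.data) // self.dim - 1

    def scan_inner_product(self, query):
        """Score every row against the query in a single sequential pass."""
        d = self.dim
        scores = []
        for start in range(0, len(self.data), d):
            row = self.data[start:start + d]  # contiguous slice for this vector
            scores.append(sum(q * x for q, x in zip(query, row)))
        return scores
```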

In VectorDBBench on the Cohere 10M dataset, with comparable hardware and matched recall, Zvec reports more than 8,000 QPS. That is more than 2× the previous leaderboard #1, ZillizCloud, while also significantly reducing index build time in the same setup.

These metrics show that an embedded library can reach cloud-level performance for high-volume similarity search, as long as the workload resembles the benchmark conditions.
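"Matched recall" matters because approximate indexes trade accuracy for speed: QPS figures are only comparable when each system returns roughly the same fraction of the true nearest neighbors. A common measure is recall@k, the share of the exact top-k results (from a brute-force scan) that also appear in the approximate top-k. The helper below is a minimal sketch of that standard definition; the document IDs in the example are made up.

```python
def recall_at_k(approx_ids, exact_ids, k):
    """Recall@k: fraction of the exact top-k neighbors found in the approximate top-k."""
    truth = set(exact_ids[:k])
    if not truth:
        raise ValueError("need at least one ground-truth neighbor")
    return len(truth & set(approx_ids[:k])) / len(truth)


# Exact top-5 from a brute-force scan vs. top-5 from an ANN index (hypothetical IDs).
exact = ["d3", "d7", "d1", "d9", "d4"]
approx = ["d3", "d1", "d7", "d2", "d4"]
r = recall_at_k(approx, exact, k=5)
print(r)
```

Here the ANN result finds four of the five true neighbors, so recall@5 is 0.8. A benchmark tunes each system's index parameters until recall reaches the same target, and only then compares throughput.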

RAG capabilities: CRUD, hybrid search, fusion, reranking

The feature set is tuned for RAG and agentic retrieval.

Zvec supports:

- Full CRUD on documents, so the local knowledge base can change over time.

- Schema evolution to adjust index strategies and fields.

- Multi-vector retrieval for queries that combine multiple embedding channels.

- A built-in reranker that supports weighted fusion and Reciprocal Rank Fusion.

- Scalar-vector hybrid search that pushes scalar filters into the index execution path, with optional inverted indexes for scalar attributes.
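The two fusion strategies named above are standard techniques, and a short sketch shows what each computes. Reciprocal Rank Fusion adds `1 / (k + rank)` for every ranked list a document appears in, using only ranks; `k = 60` is the constant commonly used in the RRF literature, and Zvec's own default is not stated in the article. Weighted fusion here min-max normalizes each channel's raw scores to [0, 1] and takes a weighted sum; that normalization choice is an assumption, since the article does not describe how Zvec's reranker combines scores.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """RRF: each ranked list contributes 1 / (k + rank) for every doc it contains."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


def weighted_fusion(score_maps, weights):
    """Min-max normalize each channel's scores to [0, 1], then take a weighted sum."""
    fused = defaultdict(float)
    for scores, weight in zip(score_maps, weights):
        lo, hi = min(scores.values()), max(scores.values())
        span = (hi - lo) or 1.0  # avoid dividing by zero when all scores are equal
        for doc_id, s in scores.items():
            fused[doc_id] += weight * (s - lo) / span
    return sorted(fused, key=fused.get, reverse=True)


# Two embedding channels ranking the same three documents (hypothetical data).
rrf = reciprocal_rank_fusion([["a", "b", "c"], ["b", "c", "a"]])
channels = [{"a": 0.9, "b": 0.5, "c": 0.1}, {"a": 0.2, "b": 0.8, "c": 0.6}]
wf = weighted_fusion(channels, weights=[0.7, 0.3])
print(rrf, wf)
```

RRF needs no score calibration across channels, which is why it is a popular default; weighted fusion lets you favor one embedding model explicitly but depends on how scores are normalized.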

This lets you build on-device assistants that mix semantic retrieval, filters such as user, time, or type, and multiple embedding models, all inside one embedded engine.
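The semantics of a scalar-vector hybrid query can be sketched with a brute-force pre-filter: only documents whose scalar fields pass the predicate are eligible, and those are ranked by vector similarity. This shows what the query returns, not how Zvec executes it; per the article, Zvec pushes the filter into the index execution path (optionally backed by inverted indexes) instead of scanning everything. The `user`, `type`, and `ts` fields and the `hybrid_search` helper are hypothetical.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_search(docs, query, predicate, topk=10):
    """Keep docs whose scalar fields pass the predicate, then rank them by similarity."""
    candidates = [d for d in docs if predicate(d["fields"])]
    candidates.sort(key=lambda d: cosine(query, d["vector"]), reverse=True)
    return [d["id"] for d in candidates[:topk]]


docs = [
    {"id": "n1", "vector": [0.1, 0.2, 0.3, 0.4],
     "fields": {"user": "alice", "type": "note", "ts": 1700000000}},
    {"id": "n2", "vector": [0.2, 0.3, 0.4, 0.1],
     "fields": {"user": "bob", "type": "note", "ts": 1700000500}},
    {"id": "f1", "vector": [0.4, 0.3, 0.3, 0.1],
     "fields": {"user": "alice", "type": "file", "ts": 1700000900}},
]

# "Alice's items since a given timestamp, most similar first."
alice_recent = hybrid_search(
    docs,
    query=[0.4, 0.3, 0.3, 0.1],
    predicate=lambda f: f["user"] == "alice" and f["ts"] >= 1700000000,
)
print(alice_recent)
```

Note that `n2` is never considered even though it is a close vector match, because it fails the scalar filter. Doing this filtering inside the index, as Zvec is described as doing, avoids the failure mode of post-filtering, where a top-k vector search returns too few results that also pass the filter.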

Key Takeaways

- Zvec is an embedded, in-process vector database positioned as the ‘SQLite of vector databases’ for on-device and edge RAG workloads.

- It is built on Proxima, Alibaba's high-performance, production-grade, battle-tested vector search engine, and is released under Apache 2.0 with Python support on Linux x86_64, Linux ARM64, and macOS ARM64.

- Zvec delivers more than 8,000 QPS on VectorDBBench with the Cohere 10M dataset, more than 2× the previous leaderboard #1 (ZillizCloud), while also reducing index build time.

- The engine provides explicit resource governance via 64 MB streaming writes, an optional mmap mode, an experimental `memory_limit_mb`, and configurable `concurrency`, `optimize_threads`, and `query_threads` for CPU control.

- Zvec is RAG-ready, with full CRUD, schema evolution, multi-vector retrieval, built-in reranking (weighted fusion and RRF), and scalar-vector hybrid search with optional inverted indexes, plus an ecosystem roadmap targeting LangChain, LlamaIndex, DuckDB, PostgreSQL, and real device deployments.
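The article names the resource-governance knobs but not how they are implemented or configured, so the sketch below only illustrates the two underlying ideas using the Python standard library. `write_vectors` streams float32 vectors to a flat binary file, flushing whenever a buffer reaches a byte budget (64 MB by default, echoing the figure above), so memory stays bounded regardless of input size. `read_vector` memory-maps the file and decodes a single row, letting the OS page in only the bytes touched. Both helpers and the file layout are hypothetical and are not Zvec's API or on-disk format; `memory_limit_mb` and the thread settings are not shown because the article does not describe how to set them.

```python
import mmap
import os
import struct
import tempfile


def write_vectors(path, vectors, dim, chunk_bytes=64 * 1024 * 1024):
    """Stream float32 vectors to a flat file, flushing each time the buffer fills."""
    buf = bytearray()
    with open(path, "wb") as f:
        for vec in vectors:
            if len(vec) != dim:
                raise ValueError("dimension mismatch")
            buf += struct.pack(f"<{dim}f", *vec)
            if len(buf) >= chunk_bytes:
                f.write(buf)
                buf.clear()
        f.write(buf)  # flush the final partial chunk


def read_vector(path, row, dim):
    """Read one vector through mmap; only the touched pages are loaded."""
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        return list(struct.unpack_from(f"<{dim}f", mm, row * dim * 4))


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "vectors.f32")
    # A tiny chunk size forces a flush after every vector, just to exercise the path.
    write_vectors(path, [[0.5, 1.0], [2.0, -3.0]], dim=2, chunk_bytes=8)
    second = read_vector(path, row=1, dim=2)
    print(second)
```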
