February 12: Xiaomi founder Lei Jun announced the open-source release of Xiaomi-Robotics-0, a 4.7-billion-parameter embodied Vision-Language-Action (VLA) model. The code, model weights, and technical documentation are now available on GitHub and Hugging Face.

The model adopts a Mixture-of-Transformers architecture that decouples a Vision-Language Model (VLM) from a 16-layer Diffusion Transformer (DiT). The VLM handles instruction comprehension and spatial reasoning, while the DiT acts as a “motor cortex” that generates high-frequency continuous motion sequences via flow matching.
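Flow matching produces an action by integrating a learned velocity field from random noise toward an action sample. The following is a minimal pure-Python sketch of that sampling loop under stated assumptions. `sample_action_chunk` and `toy_velocity` are hypothetical names. The Euler solver and the 10-step count are assumptions, since the source does not say which ODE solver or how many steps Xiaomi-Robotics-0 uses. `toy_velocity` is a hand-written stand-in for the DiT that steers the noise straight toward a target encoded in `context`.

```python
import random
from typing import Callable, List

Vector = List[float]
# velocity_fn(x_t, t, context) -> dx/dt; in the real model this role is played by the DiT
VelocityFn = Callable[[Vector, float, Vector], Vector]


def sample_action_chunk(
    velocity_fn: VelocityFn,
    context: Vector,
    action_dim: int,
    num_steps: int = 10,  # assumed step count, not from the source
    seed: int = 0,
) -> Vector:
    """Integrate dx/dt = v(x, t, context) from t=0 (noise) to t=1 (action) with Euler steps."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(action_dim)]  # start from Gaussian noise
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt
        v = velocity_fn(x, t, context)
        x = [xi + dt * vi for xi, vi in zip(x, v)]  # one explicit Euler step
    return x


def toy_velocity(x: Vector, t: float, context: Vector) -> Vector:
    """Hypothetical stand-in for the DiT: points from x_t straight at the target in `context`."""
    return [(c - xi) / (1.0 - t) for xi, c in zip(x, context)]


if __name__ == "__main__":
    chunk = sample_action_chunk(toy_velocity, context=[0.5, -0.2, 1.0], action_dim=3)
    print(chunk)
```

With this toy field, the Euler updates telescope so the result matches `[0.5, -0.2, 1.0]` up to floating-point error. In the real model, the velocity would instead be predicted by the DiT, conditioned on VLM features.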

The architecture achieves an inference latency of 80 milliseconds and supports 30 Hz real-time control on a consumer-grade GPU such as the RTX 4090.
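Because 80 ms is longer than one 30 Hz control period, each forward pass must cover several future control steps. The following back-of-envelope check makes this concrete. It assumes one action is consumed per control tick and ignores any overlap between chunks. The source does not state the actual chunk length, and `control_budget` and `ControlBudget` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class ControlBudget:
    period_ms: float            # time between control ticks
    ticks_per_inference: float  # control ticks that elapse during one forward pass
    min_chunk_len: int          # lower bound on actions per chunk to avoid stalling


def control_budget(latency_ms: float, control_hz: float) -> ControlBudget:
    """Assumes one action per control tick; returns a lower bound, not the model's real chunk size."""
    period_ms = 1000.0 / control_hz
    ticks = latency_ms * control_hz / 1000.0
    return ControlBudget(period_ms, ticks, math.ceil(ticks))


if __name__ == "__main__":
    budget = control_budget(latency_ms=80.0, control_hz=30.0)
    # prints: 33.3 ms per tick, 2.4 ticks per inference, chunk >= 3 actions
    print(f"{budget.period_ms:.1f} ms per tick, "
          f"{budget.ticks_per_inference:.1f} ticks per inference, "
          f"chunk >= {budget.min_chunk_len} actions")
```

At 30 Hz, about 2.4 control ticks elapse during one 80 ms inference. Each motion sequence therefore has to hold at least three actions so the arm does not stall while waiting for the next one.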

Pre-training proceeds in two stages:

- An Action Proposal mechanism forces the VLM to jointly predict multimodal action distributions during visual understanding, aligning the feature and action spaces.

- The VLM is then frozen while the DiT is trained to generate precise motion sequences.
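The source does not give the training losses, so the following is a hedged sketch only. The stage split can be expressed as which sub-module receives gradient updates. Stage 2 can be pictured with the standard conditional flow-matching (rectified-flow) loss, assuming a linear path from noise to action. `trainable_modules`, `flow_matching_loss`, and the module names `"vlm"` and `"dit"` are hypothetical. The stage-1 Action Proposal objective is not modeled.

```python
import random
from typing import Callable, List, Set

Vector = List[float]
VelocityFn = Callable[[Vector, float, Vector], Vector]


def trainable_modules(stage: int) -> Set[str]:
    """Hypothetical: which sub-modules get gradient updates in each pre-training stage."""
    if stage == 1:
        return {"vlm"}  # stage 1: VLM trained with the Action Proposal objective
    if stage == 2:
        return {"dit"}  # stage 2: VLM frozen, only the DiT action head is trained
    raise ValueError(f"unknown stage {stage}")


def flow_matching_loss(
    velocity_fn: VelocityFn,
    context: Vector,  # features from the frozen VLM, treated as constants in stage 2
    action: Vector,   # ground-truth action sequence, flattened
    rng: random.Random,
) -> float:
    """Assumed rectified-flow loss: regress v(x_t, t) onto (action - noise) along a straight path."""
    noise = [rng.gauss(0.0, 1.0) for _ in action]
    t = rng.random()
    x_t = [(1.0 - t) * n + t * a for n, a in zip(noise, action)]
    target_v = [a - n for n, a in zip(noise, action)]
    pred_v = velocity_fn(x_t, t, context)
    return sum((p - u) ** 2 for p, u in zip(pred_v, target_v)) / len(action)
```

A velocity model that perfectly recovers `action - noise` drives this loss to zero. In stage 2, only the DiT parameters would be updated to approach that, while `context` comes from the frozen VLM.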

Post-training introduces asynchronous inference and a Λ-shaped attention masking strategy, which decouple inference from execution timing while prioritizing current visual feedback.
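The source does not describe the mask's exact layout. The following sketch borrows the Λ pattern known from long-context language models (LM-Infinite), in which each query attends to a few leading "sink" tokens plus a causal local window. It is shown for intuition about the shape only and is not Xiaomi's published mask. `lambda_shaped_mask`, `num_sink`, and `window` are hypothetical names.

```python
from typing import List


def lambda_shaped_mask(seq_len: int, num_sink: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True if query i may attend to key j (assumed LM-Infinite-style Λ mask)."""
    mask = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            causal = j <= i            # never look at future tokens
            is_sink = j < num_sink     # leading tokens stay visible to every query
            in_window = i - j < window  # recent tokens inside the local band
            row.append(causal and (is_sink or in_window))
        mask.append(row)
    return mask


if __name__ == "__main__":
    for row in lambda_shaped_mask(seq_len=6, num_sink=1, window=2):
        print("".join("X" if m else "." for m in row))
    # X.....
    # XX....
    # XXX...
    # X.XX..
    # X..XX.
    # X...XX
```

The left column (sink tokens) and the diagonal band (recent tokens) form the two strokes of the Λ when the mask is drawn as a matrix.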

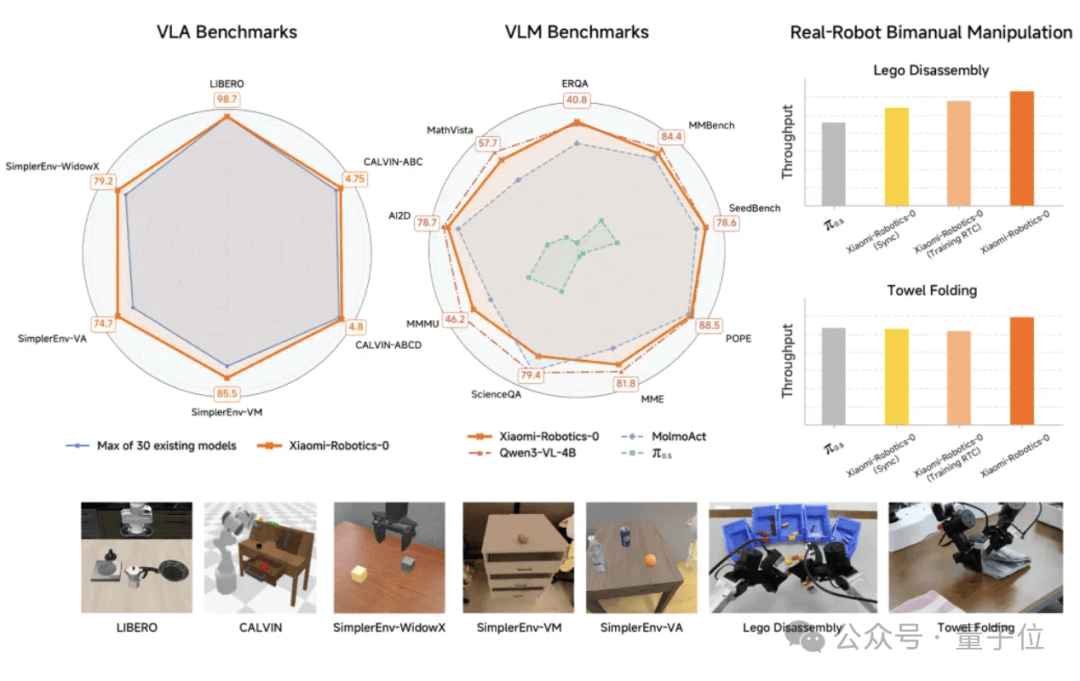

In simulation, Xiaomi-Robotics-0 outperformed more than 30 baseline models, including π0, OpenVLA, RT-1, and RT-2, across the LIBERO, CALVIN, and SimplerEnv benchmarks, achieving several new state-of-the-art (SOTA) results. On the LIBERO-Object task suite, it reached a 100% success rate.

In real-world deployment, a dual-arm robot powered by the model demonstrated stable hand-eye coordination on long-horizon, high-DoF tasks such as block disassembly and towel folding, while retaining its object detection and visual question-answering capabilities.

Source: QbitAI
