For the past few years, the AI world has followed a simple rule: if you want a Large Language Model (LLM) to solve a harder problem, make its Chain-of-Thought (CoT) longer. But new research from the University of Virginia and Google shows that ‘thinking long’ is not the same as ‘thinking hard’.

The research team shows that simply adding more tokens to a response can actually make an AI less accurate. Instead of counting words, the researchers introduce a new measure: the Deep-Thinking Ratio (DTR).

The Failure of ‘Token Maxing’

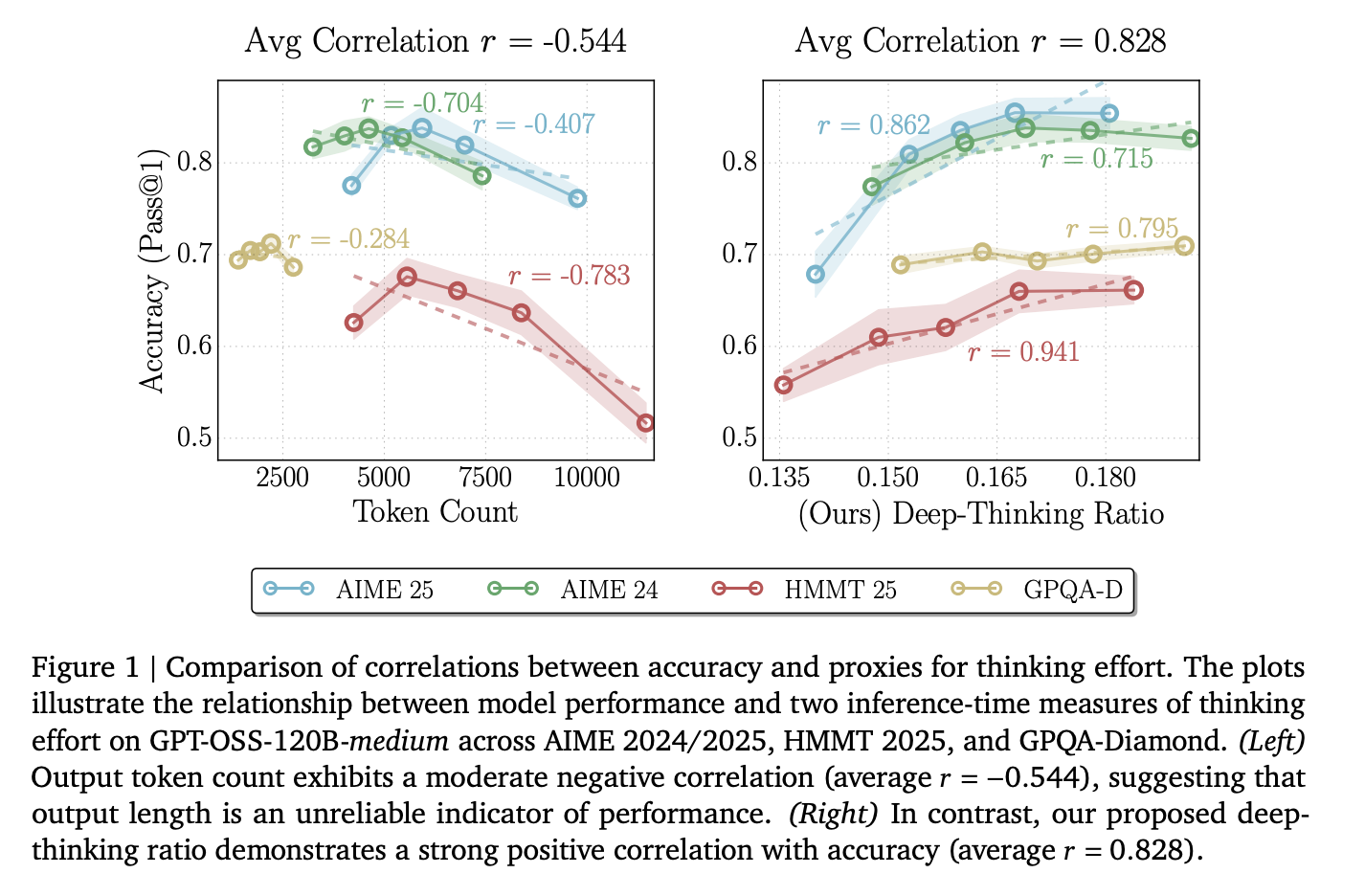

Engineers often use token count as a proxy for the effort an AI puts into a task. However, the researchers found that raw token count has an average correlation of r = -0.59 with accuracy.

This negative value means that the more text a model generates, the more likely its answer is to be wrong. The researchers attribute this to ‘overthinking’, where the model gets stuck in loops, repeats redundant steps, or amplifies its own errors. Relying on length alone wastes expensive compute on uninformative tokens.
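Assuming r here is the usual Pearson correlation coefficient, the sketch below shows how such a value is computed between per-response token counts and correctness. The lengths and labels are invented toy values, not data from the paper, and how the researchers aggregate correlations across questions and models is not covered here.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length (at least 2 items)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances, left unnormalized (the 1/n factors cancel).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Invented toy data: token count of each response and whether it was correct (1) or not (0).
lengths = [1200, 2500, 4000, 6500, 9000]
correct = [1, 1, 1, 0, 0]
print(f"r = {pearson_r(lengths, correct):.2f}")  # negative: longer responses were wrong more often
```

A value near -1 would mean length almost perfectly predicts failure; the reported -0.59 is a moderate negative relationship.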

What Are Deep-Thinking Tokens?

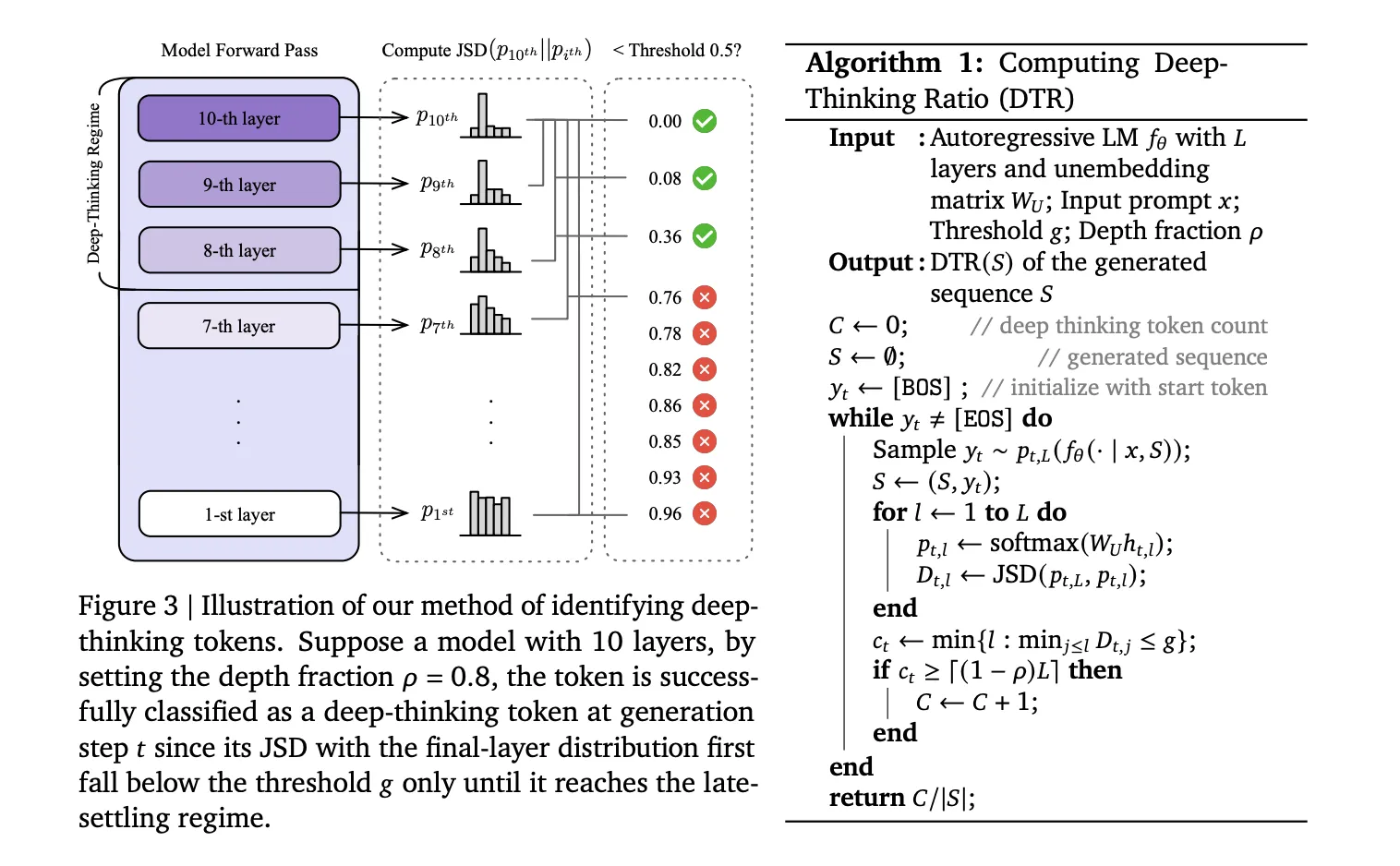

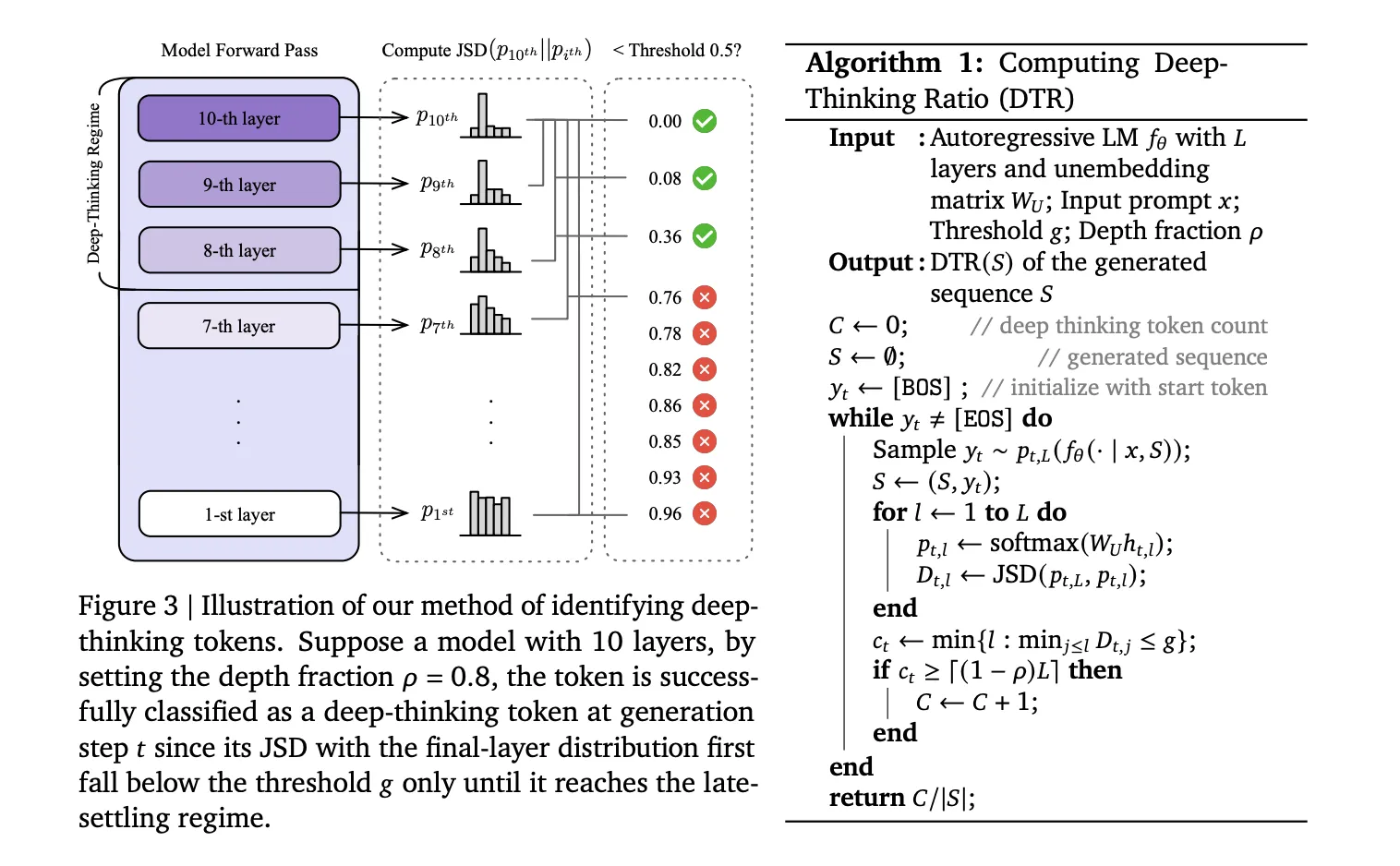

The research team argues that real ‘thinking’ happens inside the layers of the model, not just in the final output. When a model predicts a token, it passes the data through a stack of L transformer layers.

- Shallow Tokens: For easy words, the model’s prediction stabilizes early. The ‘guess’ barely changes from layer 5 to layer 36.

- Deep-Thinking Tokens: For difficult logic steps or math symbols, the prediction shifts significantly in the deeper layers.

How to Measure Depth

To identify these tokens, the research team uses a technique to peek at the model’s internal ‘drafts’ at every layer. They project the intermediate hidden states ($h_{t,l}$) into the vocabulary space using the model’s unembedding matrix ($W_U$). This produces a probability distribution ($p_{t,l}$) for every layer.
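A minimal pure-Python sketch of this projection, in the style of the well-known ‘logit lens’: each layer’s hidden state is multiplied by the unembedding matrix and passed through a softmax. The function names and the row-per-vocabulary-entry layout of `w_u` are assumptions for illustration; real models typically also apply the final normalization layer before unembedding, which this sketch omits.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def layer_distribution(hidden: list[float], w_u: list[list[float]]) -> list[float]:
    """Project one hidden state h_{t,l} into vocabulary space.

    Assumed layout: w_u has one row per vocabulary entry (vocab_size x d_model),
    so logits[v] = dot(w_u[v], hidden).
    """
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in w_u]
    return softmax(logits)


def per_layer_distributions(hidden_states: list[list[float]],
                            w_u: list[list[float]]) -> list[list[float]]:
    """hidden_states[l - 1] is h_{t,l}; returns [p_{t,1}, ..., p_{t,L}]."""
    return [layer_distribution(h, w_u) for h in hidden_states]


# Toy example: d_model = 2, vocabulary of 3 tokens, L = 2 layers.
w_u = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
hidden_states = [[2.0, 0.0], [0.0, 2.0]]
for layer, p in enumerate(per_layer_distributions(hidden_states, w_u), start=1):
    print(layer, [round(x, 3) for x in p])
```

In this toy case the top token changes between layer 1 and layer 2, which is exactly the kind of late revision the method looks for.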

They then compute the Jensen-Shannon Divergence (JSD) between each intermediate layer’s distribution and the final layer’s distribution ($p_{t,L}$):

$$D_{t,l} := \mathrm{JSD}\left(p_{t,L} \,\|\, p_{t,l}\right)$$
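The divergence itself can be sketched as follows. Using base-2 logarithms is an assumption made here so that values fall between 0 and 1; the article does not state which base the researchers use.

```python
import math


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) in bits; terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def jsd(p: list[float], q: list[float]) -> float:
    """Jensen-Shannon divergence: average KL of p and q to their midpoint m."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    # Whenever pi > 0, m_i >= pi / 2 > 0, so kl_divergence never divides by zero.
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# D_{t,l} compares the final-layer distribution with an intermediate one.
p_final = [0.7, 0.2, 0.1]
p_layer = [0.2, 0.5, 0.3]
print(f"D = {jsd(p_final, p_layer):.3f}")
```

Unlike plain KL divergence, JSD is symmetric and always finite, so the order of $p_{t,L}$ and $p_{t,l}$ does not change $D_{t,l}$.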

A token counts as a deep-thinking token if its prediction settles only in the ‘late regime’, defined by a depth fraction ($\rho$). In their experiments, they set $\rho = 0.85$, meaning the token’s prediction stabilized only in the final 15% of the layers.
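One way to turn per-layer divergences into a deep-thinking label is sketched below, under stated assumptions: a token ‘settles’ at the first layer where $D_{t,l}$ drops to a threshold `g` or below, and it is deep-thinking if that layer falls at or after $\lceil \rho L \rceil$. The threshold `g` and its default of 0.5 are hypothetical; the article does not give a value.

```python
import math


def settling_layer(divergences: list[float], g: float) -> int:
    """1-based index of the first layer l with D_{t,l} <= g (g is a hypothetical threshold).

    divergences[l - 1] holds D_{t,l} for l = 1..L. Because D_{t,L} compares the
    final layer with itself, it is 0, so every token settles by layer L.
    """
    for layer, d in enumerate(divergences, start=1):
        if d <= g:
            return layer
    return len(divergences)


def is_deep_thinking(divergences: list[float], g: float = 0.5, rho: float = 0.85) -> bool:
    """True if the token settles in the late regime, i.e. at layer >= ceil(rho * L)."""
    num_layers = len(divergences)
    return settling_layer(divergences, g) >= math.ceil(rho * num_layers)


# Toy 36-layer tokens: with rho = 0.85 the late regime starts at layer 31.
shallow = [0.9] * 4 + [0.1] * 32          # settles at layer 5
deep = [0.9] * 32 + [0.1] * 3 + [0.0]     # settles at layer 33
print(is_deep_thinking(shallow), is_deep_thinking(deep))
```

A stricter variant could require the divergence to stay below `g` for all later layers; the simple first-crossing rule is used here only for readability.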

The Deep-Thinking Ratio (DTR) is the proportion of these ‘hard’ tokens in a full sequence. Across models such as DeepSeek-R1-70B, Qwen3-30B-Thinking, and GPT-OSS-120B, DTR showed a strong average positive correlation of r = 0.683 with accuracy.
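Given each token’s settling layer, the ratio is a simple count. This sketch assumes the settling layers are already computed and uses the late-regime cutoff $\lceil \rho L \rceil$ as the boundary; the function name is hypothetical.

```python
import math


def deep_thinking_ratio(settling_layers: list[int], num_layers: int, rho: float = 0.85) -> float:
    """Share of tokens whose prediction settles at or after layer ceil(rho * L)."""
    if not settling_layers:
        return 0.0
    cutoff = math.ceil(rho * num_layers)
    deep_count = sum(1 for layer in settling_layers if layer >= cutoff)
    return deep_count / len(settling_layers)


# Toy sequence of four tokens in a 36-layer model (cutoff = layer 31).
print(deep_thinking_ratio([5, 12, 33, 36], num_layers=36))
```

With 36 layers and $\rho = 0.85$ the cutoff is layer 31, so tokens settling at layers 5, 12, 33, and 36 give a DTR of 0.5.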

Think@n: Better Accuracy at Half the Cost

The research team used this approach to build Think@n, a new way to scale AI performance at inference time.

A common approach is Self-Consistency (Cons@n), where you sample n different answers (48, for example) and use majority voting to pick the most frequent one. This is very expensive because every token of every answer has to be generated.

Think@n avoids much of that cost with ‘early halting’:
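A sketch of the Cons@n baseline, representing each sample as a hypothetical `(final_answer, tokens_generated)` pair. It makes the cost problem visible: every sample is generated to completion, so the cost is the sum of all full lengths.

```python
from collections import Counter


def cons_at_n(samples: list[tuple[str, int]]) -> tuple[str, int]:
    """Self-Consistency (Cons@n) over fully generated samples.

    Each sample is (final_answer, tokens_generated). Returns the most frequent
    answer (ties go to the answer seen first) and the total tokens spent.
    """
    votes = Counter(answer for answer, _ in samples)
    winner = votes.most_common(1)[0][0]
    # Every sample ran to completion, so all of its tokens count toward the cost.
    cost = sum(tokens for _, tokens in samples)
    return winner, cost


samples = [("42", 6000), ("7", 9000), ("42", 5000), ("7", 8000), ("42", 7000)]
print(cons_at_n(samples))
```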

- The model starts generating multiple candidate answers.

- After just 50 prefix tokens, the system computes the DTR for each candidate.

- It immediately stops generating the ‘unpromising’ candidates with low DTR.

- It finishes only the candidates with high deep-thinking scores.
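The early-halting steps above can be simulated as below. This is a sketch under assumptions, not the paper’s implementation: each candidate’s prefix DTR, final answer, and full length are supplied up front (in a real system they come from running the model), the kept share `keep_fraction` is a hypothetical parameter, and the finished candidates are combined by majority vote.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Candidate:
    prefix_dtr: float   # DTR measured on the first prefix_len tokens
    answer: str         # final answer if the sample is run to completion
    full_length: int    # total tokens if the sample is run to completion


def think_at_n(candidates: list[Candidate], prefix_len: int = 50,
               keep_fraction: float = 0.5) -> tuple[str, int]:
    """Simulated Think@n: rank by prefix DTR, finish the top candidates, vote.

    Returns (answer, total tokens generated).
    """
    ranked = sorted(candidates, key=lambda c: c.prefix_dtr, reverse=True)
    k = max(1, math.ceil(len(ranked) * keep_fraction))
    kept, halted = ranked[:k], ranked[k:]
    # Halted candidates only cost their prefix; kept ones run to completion.
    cost = sum(min(prefix_len, c.full_length) for c in halted)
    cost += sum(c.full_length for c in kept)
    answer = Counter(c.answer for c in kept).most_common(1)[0][0]
    return answer, cost


candidates = [
    Candidate(prefix_dtr=0.30, answer="42", full_length=6000),
    Candidate(prefix_dtr=0.10, answer="7", full_length=9000),
    Candidate(prefix_dtr=0.25, answer="42", full_length=5000),
    Candidate(prefix_dtr=0.05, answer="7", full_length=8000),
]
print(think_at_n(candidates))
```

Here the two low-DTR candidates are halted after 50 tokens each, costing 100 tokens instead of the 17,000 they would have used if run to completion.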

Results on AIME 2025

| Method | Accuracy | Avg. Cost (k tokens) |
|---|---|---|
| Cons@n (Majority Vote) | 92.7% | 307.6 |
| Think@n (DTR-based Selection) | 94.7% | 155.4 |

On the AIME 2025 math benchmark, Think@n achieved higher accuracy than standard majority voting while reducing inference cost by 49%.
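The headline figures can be checked directly against the table: cost drops by just under half, and accuracy rises by two percentage points.

```latex
\begin{aligned}
\text{cost reduction} &= 1 - \frac{155.4}{307.6} \approx 1 - 0.505 = 0.495 \\
\Delta\,\text{accuracy} &= 94.7\% - 92.7\% = 2.0 \text{ percentage points}
\end{aligned}
```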

Key Takeaways

- Token count is a poor predictor of accuracy: Raw output length has an average negative correlation (r = -0.59) with performance, meaning longer reasoning traces often signal ‘overthinking’ rather than higher quality.

- Deep-thinking tokens define true effort: Unlike simple tokens that stabilize in early layers, deep-thinking tokens are those whose internal predictions undergo significant revision in deeper model layers before converging.

- The Deep-Thinking Ratio (DTR) is a superior metric: DTR measures the proportion of deep-thinking tokens in a sequence and shows a robust positive correlation with accuracy (average r = 0.683), consistently outperforming length-based or confidence-based baselines.

- Think@n enables efficient test-time scaling: By prioritizing and finishing only the samples with high deep-thinking ratios, the Think@n strategy matches or exceeds the performance of standard majority voting (Cons@n).

- Large cost reduction via early halting: Because DTR can be estimated from a short prefix of just 50 tokens, unpromising generations can be rejected early, reducing total inference cost by roughly 50%.
