Anthropic recently published a guide on effective context engineering for AI agents. It is a reminder that context is a critical but finite resource. The quality of an agent often depends less on the model itself and more on how its context is structured and managed. Even a weaker LLM can perform well with the right context, but no state-of-the-art model can compensate for a poor one.

Production-grade AI systems need more than good prompts. They need structure: a complete ecosystem of context that shapes reasoning, memory, and decision-making. Modern agent architectures now treat context not as a line in a prompt but as a core design layer.

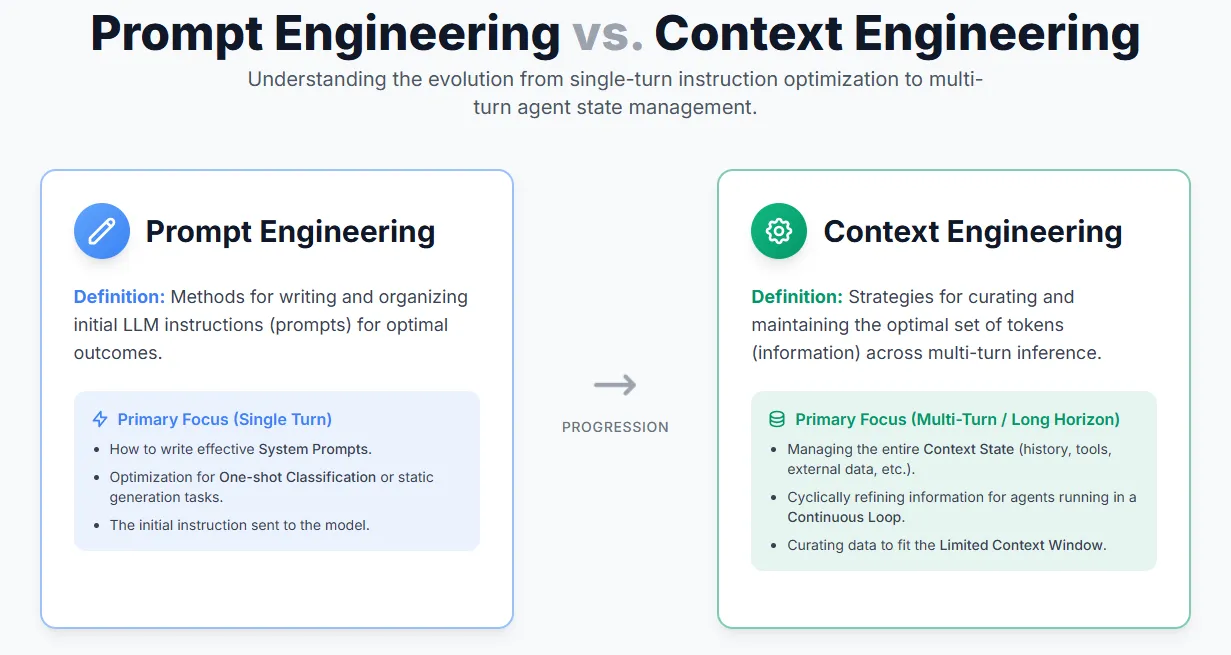

Difference between Context Engineering & Prompt Engineering

Prompt engineering focuses on crafting effective instructions to guide an LLM’s behavior: essentially, how to write and structure prompts to get the best output.

Context engineering, on the other hand, goes beyond prompts. It is about managing the entire set of information the model sees during inference, including system messages, tool outputs, memory, external data, and message history. As AI agents evolve to handle multi-turn reasoning and longer tasks, context engineering becomes the key discipline for curating and maintaining what actually matters within the model’s limited context window.

Why is Context Engineering Important?

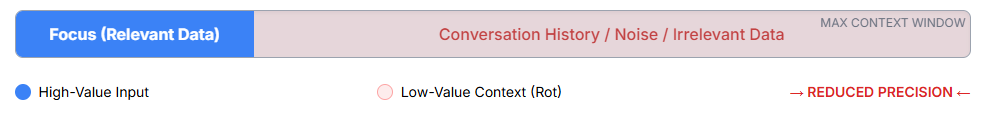

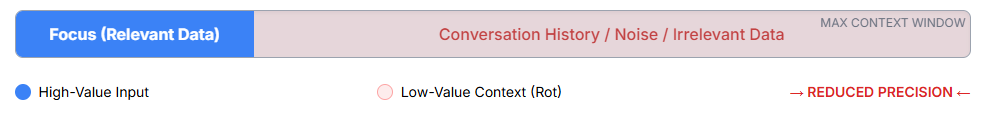

LLMs, like humans, have limited attention: the more information they are given, the harder it becomes for them to stay focused and recall details accurately. This phenomenon, known as context rot, means that simply enlarging the context window does not guarantee better performance.

Because LLMs are built on the transformer architecture, every token attends to the other tokens in its context, which produces on the order of n² pairwise relationships for n tokens. The model’s attention is stretched thinner as context grows, so long contexts can reduce precision in information retrieval and weaken long-range reasoning.
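The quadratic growth is easy to see with a little arithmetic. The sketch below counts query-key scores per attention head per layer, both for full attention and for decoder-style causal masking (where each token sees only itself and earlier tokens). It ignores real-world optimizations and is only meant to show the scaling, not how any particular model is implemented.

```python
def full_attention_pairs(n_tokens: int) -> int:
    """Query-key scores per head per layer when every token attends to every token."""
    return n_tokens * n_tokens


def causal_attention_pairs(n_tokens: int) -> int:
    """Scores when each token attends only to itself and earlier tokens (causal masking)."""
    return n_tokens * (n_tokens + 1) // 2


for n in (1_000, 10_000, 100_000):
    print(f"{n:>7} tokens: full={full_attention_pairs(n):,} causal={causal_attention_pairs(n):,}")
```

Either way the count grows quadratically: ten times more tokens means roughly a hundred times more pairwise scores competing for the same attention.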

That is why context engineering matters: it ensures that only the most relevant and useful information enters an agent’s limited context, allowing it to reason effectively and stay focused even in complex, multi-turn tasks.

What Makes Context Effective?

Good context engineering means fitting the right information, not the most information, into the model’s limited attention window. The goal is to maximize useful signal while minimizing noise.
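One way to picture "the right information, not the most" is as a budgeting problem. The sketch below assumes each candidate piece of context already has a relevance score and a token count (both hypothetical inputs; in practice they might come from a retriever and a tokenizer). It drops low-relevance noise, then greedily keeps the items with the best relevance per token until the budget is spent. Greedy packing is a simple heuristic, not an optimal solution.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    text: str
    relevance: float  # assumed score in [0, 1], e.g. from a retriever or a heuristic
    tokens: int  # assumed precomputed token count


def pack_context(items: list[ContextItem], budget: int, min_relevance: float = 0.1) -> list[ContextItem]:
    """Greedily keep high-signal items (best relevance per token) that fit in the token budget."""
    candidates = [it for it in items if it.relevance >= min_relevance]  # drop obvious noise
    candidates.sort(key=lambda it: it.relevance / max(it.tokens, 1), reverse=True)
    chosen: list[ContextItem] = []
    used = 0
    for item in candidates:
        if used + item.tokens <= budget:
            chosen.append(item)
            used += item.tokens
    return chosen


items = [
    ContextItem("User's current question", 1.0, 50),
    ContextItem("Full API reference", 0.4, 4000),
    ContextItem("Relevant API section", 0.9, 300),
    ContextItem("Small talk from 20 turns ago", 0.05, 200),
]
packed = [it.text for it in pack_context(items, budget=1000)]
print(packed)
```

The focused API section wins over the full reference: it carries most of the same signal at a fraction of the cost.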

Here’s how to design effective context across its key components:

System Prompts

- Keep them clear, specific, and minimal: enough to define the desired behavior, but not so rigid that they break easily.

- Avoid two extremes:

  - Overly complex, hardcoded logic (too brittle)

  - Vague, high-level instructions (too broad)

- Use structured sections (for example, XML-style tags or Markdown headers such as ## Output format) to improve readability and modularity.

- Start with a minimal version and iterate based on test results.
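A minimal sketch of a sectioned system prompt follows. The section names (`<role>`, `<instructions>`, `## Output format`) and the store-support scenario are illustrative assumptions; the point is that each section is short, clearly delimited, and can be edited independently as you iterate.

```python
def build_system_prompt(role: str, instructions: list[str], output_format: str) -> str:
    """Assemble a system prompt from clearly delimited, independently editable sections."""
    instruction_lines = "\n".join(f"- {line}" for line in instructions)
    sections = [
        f"<role>\n{role}\n</role>",
        f"<instructions>\n{instruction_lines}\n</instructions>",
        f"## Output format\n{output_format}",
    ]
    return "\n\n".join(sections)


prompt = build_system_prompt(
    role="You are a support agent for an online store.",
    instructions=["Answer only questions about orders.", "Ask for the order ID if it is missing."],
    output_format="A short answer in plain text, at most three sentences.",
)
print(prompt)
```

Starting this small and adding instructions only when tests reveal a failure keeps the prompt minimal without leaving it vague.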

Tools

- Tools act as the agent’s interface to its environment.

- Build small, distinct, and efficient tools; avoid bloated or overlapping functionality.

- Make input parameters clear, descriptive, and unambiguous.

- Fewer, well-designed tools lead to more reliable agent behavior and easier maintenance.
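Below is a sketch of one well-scoped tool definition, written as a Python dict shaped like an Anthropic Messages API tool (`name`, `description`, `input_schema` as JSON Schema). The `search_orders` tool itself is hypothetical. The small `lint_tools` helper, also hypothetical, flags the problems listed above: overlapping (duplicate) tools, missing descriptions, and undocumented parameters.

```python
# Shaped like an Anthropic Messages API tool definition; the tool itself is hypothetical.
search_orders_tool = {
    "name": "search_orders",
    "description": "Look up a customer's orders by email. Returns at most `limit` orders, newest first.",
    "input_schema": {
        "type": "object",
        "properties": {
            "customer_email": {"type": "string", "description": "Exact email address of the customer."},
            "limit": {"type": "integer", "description": "Maximum number of orders to return (1-20)."},
        },
        "required": ["customer_email"],
    },
}


def lint_tools(tools: list[dict]) -> list[str]:
    """Flag common problems: duplicate names, missing descriptions, undocumented parameters."""
    problems: list[str] = []
    seen: set[str] = set()
    for tool in tools:
        name = tool.get("name", "<unnamed>")
        if name in seen:
            problems.append(f"{name}: duplicate tool name")
        seen.add(name)
        if not tool.get("description"):
            problems.append(f"{name}: missing description")
        props = tool.get("input_schema", {}).get("properties", {})
        for param, spec in props.items():
            if not spec.get("description"):
                problems.append(f"{name}.{param}: parameter has no description")
    return problems


vague_tool = {"name": "search", "description": "", "input_schema": {"type": "object", "properties": {"q": {"type": "string"}}}}
print(lint_tools([search_orders_tool, vague_tool]))
```

A check like this could run in CI so that every tool the agent sees stays documented and distinct.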

Examples (Few-Shot Prompts)

- Use diverse, representative examples rather than exhaustive lists.

- Focus on showing patterns rather than explaining every rule.

- Include both good and bad examples to clarify behavioral boundaries.
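As a sketch, assuming examples are stored with a category label and a good/bad flag (the `Example` structure and the categories are hypothetical), a selector can take a few good examples per category for diversity plus a limited number of clearly labeled counterexamples, instead of dumping the whole pool into the prompt.

```python
from dataclasses import dataclass


@dataclass
class Example:
    category: str  # hypothetical labels such as "refund" or "shipping"
    user: str
    reply: str
    good: bool  # True = desired behavior, False = behavior to avoid


def select_examples(pool: list[Example], per_category: int = 1, max_bad: int = 1) -> list[Example]:
    """Keep a few good examples per category plus a small number of counterexamples."""
    good_counts: dict[str, int] = {}
    bad_used = 0
    picked: list[Example] = []
    for ex in pool:
        if ex.good:
            if good_counts.get(ex.category, 0) < per_category:
                picked.append(ex)
                good_counts[ex.category] = good_counts.get(ex.category, 0) + 1
        elif bad_used < max_bad:
            picked.append(ex)
            bad_used += 1
    return picked


def format_examples(examples: list[Example]) -> str:
    """Render examples with explicit labels so the boundary between good and bad is visible."""
    blocks = []
    for ex in examples:
        label = "GOOD" if ex.good else "BAD (do not imitate)"
        blocks.append(f"[{label}]\nUser: {ex.user}\nAssistant: {ex.reply}")
    return "\n\n".join(blocks)


pool = [
    Example("refund", "Can I get my money back?", "Yes. Share your order ID and I will start the refund.", True),
    Example("refund", "I want a refund", "Sure, what is your order ID?", True),
    Example("shipping", "Where is my parcel?", "Let me check. What is your order ID?", True),
    Example("refund", "Refund now!", "Refunds are impossible.", False),
    Example("shipping", "Is it shipped?", "Probably.", False),
]
picked = select_examples(pool)
print(format_examples(picked))
```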

Knowledge

- Supply domain-specific knowledge: APIs, workflows, data models, and so on.

- This helps the model move from text prediction to decision-making.

Memory

- Gives the agent continuity and awareness of past actions.

- Short-term memory: reasoning steps, chat history

- Long-term memory: company data, user preferences, learned facts
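A minimal sketch of the two memory tiers, assuming a hypothetical `AgentMemory` class: short-term memory is a bounded window of recent turns (older turns fall off automatically via `collections.deque(maxlen=...)`), and long-term memory is a key-value store from which only relevant facts are rendered into the context.

```python
from collections import deque


class AgentMemory:
    """Hypothetical two-tier memory: bounded short-term history plus a long-term fact store."""

    def __init__(self, short_term_limit: int = 6):
        self.short_term = deque(maxlen=short_term_limit)  # oldest turns are evicted automatically
        self.long_term: dict[str, str] = {}

    def remember_turn(self, turn: str) -> None:
        self.short_term.append(turn)

    def learn_fact(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def render(self, relevant_keys: list[str]) -> str:
        """Build the memory part of the context: only requested facts plus recent turns."""
        facts = [f"- {k}: {self.long_term[k]}" for k in relevant_keys if k in self.long_term]
        return "Known facts:\n" + "\n".join(facts) + "\n\nRecent turns:\n" + "\n".join(self.short_term)


mem = AgentMemory(short_term_limit=2)
for turn in ["User asked about refunds", "Agent checked order 42", "User confirmed the address"]:
    mem.remember_turn(turn)
mem.learn_fact("preferred_language", "Python")
mem.learn_fact("timezone", "UTC+5:30")
rendered = mem.render(["preferred_language"])
print(rendered)
```

In a real system the long-term store would live in a database or file, and deciding which keys are "relevant" is itself a retrieval problem.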

Tool Results

- Feed tool outputs back into the model for self-correction and dynamic reasoning.
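The loop below sketches this feedback cycle. A stub function stands in for the LLM and a toy `divide` tool stands in for a real one (both hypothetical). The tool reports errors as data instead of raising, the error is appended to the context, and the "model" uses it to correct its next call.

```python
def run_tool(name: str, args: dict) -> dict:
    """Hypothetical tool: divides two numbers and reports errors instead of raising."""
    if name != "divide":
        return {"error": f"unknown tool {name!r}"}
    if args.get("b") == 0:
        return {"error": "b must be non-zero"}
    return {"result": args["a"] / args["b"]}


def stub_model(context: list[dict]) -> dict:
    """Stand-in for an LLM: reads the latest tool result and decides the next action."""
    last = context[-1]
    if last["role"] == "tool" and "error" in last["content"]:
        return {"tool": "divide", "args": {"a": 10, "b": 2}}  # self-correct after the error
    if last["role"] == "tool":
        return {"answer": last["content"]["result"]}
    return {"tool": "divide", "args": {"a": 10, "b": 0}}  # first attempt contains a mistake


def agent_loop(task: str, max_steps: int = 5) -> tuple:
    context = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = stub_model(context)
        if "answer" in action:
            return action["answer"], context
        output = run_tool(action["tool"], action["args"])
        context.append({"role": "tool", "content": output})  # the tool result becomes new context
    return None, context


answer, ctx = agent_loop("Split 10 items into 2 equal groups.")
print(answer)
```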

Context Engineering Agent Workflow

Dynamic Context Retrieval (The “Just-in-Time” Shift)

- JIT Strategy: Agents move from static, pre-loaded data (traditional RAG) to autonomous, dynamic context management.

- Runtime Fetching: Agents keep lightweight references (e.g., file paths, stored queries, API endpoints) and use tools to retrieve only the most relevant data at the moment it is needed for reasoning.

- Efficiency and Cognition: This approach greatly improves memory efficiency and flexibility, mirroring how humans rely on external organization systems (like file systems and bookmarks).

- Hybrid Retrieval: Sophisticated systems such as Claude Code use a hybrid strategy, combining just-in-time retrieval with some data pre-loaded up front to balance speed and flexibility.

- Engineering Challenge: This requires careful tool design and thoughtful engineering to keep agents from misusing tools, chasing dead ends, or wasting context.
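A sketch of the hybrid pattern, assuming an in-memory dict as a stand-in for a real file system and two hypothetical tools, `list_files` and `grep`. The agent starts with only a short pre-loaded note and lightweight references (paths); file contents enter the context only when a search actually matches. This illustrates the idea and is not how Claude Code is implemented.

```python
# Hypothetical workspace; in a real agent these would be files on disk or API resources.
WORKSPACE = {
    "docs/billing.md": "Refunds are issued within 5 business days.\nInvoices are emailed monthly.",
    "docs/shipping.md": "Standard shipping takes 3-7 days.\nExpress shipping takes 1-2 days.",
    "src/app.py": "def main():\n    print('hello')",
}

# Pre-loaded static context (the "hybrid" part): a short note, no file contents.
STATIC_CONTEXT = "Project notes: customer-support bot. Docs live under docs/."


def list_files(prefix: str = "") -> list[str]:
    """Tool: return lightweight references (paths), not contents."""
    return sorted(p for p in WORKSPACE if p.startswith(prefix))


def grep(path: str, keyword: str) -> list[str]:
    """Tool: load only the matching lines of one file, at the moment they are needed."""
    return [line for line in WORKSPACE[path].splitlines() if keyword.lower() in line.lower()]


def build_context(question: str, keyword: str) -> str:
    refs = list_files("docs/")
    hits = [f"{p}: {line}" for p in refs for line in grep(p, keyword)]
    return "\n".join([STATIC_CONTEXT, f"Question: {question}", *hits])


ctx = build_context("How long do refunds take?", "refund")
print(ctx)
```

Narrow tools like these also limit the damage when an agent picks a poor search term: it wastes one small call rather than a large chunk of context.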

Long-Horizon Context Maintenance

These techniques are essential for maintaining coherence and goal-directed behavior in tasks that span long periods and exceed the LLM’s limited context window.

Compaction (The Distiller):

- Preserves conversational flow and critical details when the context window is nearly full.

- Summarizes older message history and starts a fresh context from that summary, often discarding redundant data such as old raw tool results.
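A minimal compaction sketch follows. Two pieces are explicit stand-ins: `count_tokens` uses a crude word count instead of a real tokenizer, and `summarize` just concatenates non-tool messages where a real agent would ask the LLM to write the summary. The structure is the part that matters: when the history exceeds the budget, older messages are replaced by one summary, raw tool output is dropped, and the most recent turns are kept verbatim.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def summarize(messages: list[dict]) -> str:
    """Placeholder summarizer; a real agent would ask the LLM to write this summary."""
    kept = [m["content"] for m in messages if m["role"] != "tool"]  # drop old raw tool output
    return "Summary of earlier conversation: " + " | ".join(kept)


def compact(messages: list[dict], budget: int, keep_recent: int = 2) -> list[dict]:
    """If the history exceeds the budget, replace older messages with a single summary."""
    total = sum(count_tokens(m["content"]) for m in messages)
    if total <= budget or len(messages) <= keep_recent:
        return messages
    old, recent = messages[:-keep_recent], messages[-keep_recent:]
    return [{"role": "system", "content": summarize(old)}, *recent]


messages = [
    {"role": "user", "content": "Find the failing tests"},
    {"role": "tool", "content": "RAW_LOG " * 50},
    {"role": "assistant", "content": "Two tests fail: test_a and test_b"},
    {"role": "user", "content": "Fix test_a"},
    {"role": "assistant", "content": "Patched test_a"},
]
compacted = compact(messages, budget=20)
print(compacted[0]["content"])
```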

Structured Note-Taking (External Memory):

- Provides persistent memory with minimal context overhead.

- The agent autonomously writes notes that persist outside the context window (e.g., to a NOTES.md file or a dedicated memory tool) to track progress, dependencies, and strategic plans.
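A sketch of NOTES.md-style note-taking using only the standard library. The one-line-per-note format (`- [topic] text`) and the helper names are assumptions. Notes are appended to a file that outlives any single context window, and a fresh context can reload just the topic it needs.

```python
import tempfile
from pathlib import Path
from typing import Optional


def write_note(notes_path: Path, topic: str, text: str) -> None:
    """Append one line to the notes file; the file persists beyond any single context window."""
    with notes_path.open("a", encoding="utf-8") as f:
        f.write(f"- [{topic}] {text}\n")


def read_notes(notes_path: Path, topic: Optional[str] = None) -> list[str]:
    """Reload notes into a fresh context, optionally only for one topic."""
    if not notes_path.exists():
        return []
    lines = notes_path.read_text(encoding="utf-8").splitlines()
    if topic is None:
        return lines
    return [line for line in lines if line.startswith(f"- [{topic}]")]


with tempfile.TemporaryDirectory() as tmp:
    notes = Path(tmp) / "NOTES.md"
    write_note(notes, "plan", "Migrate auth module, then update tests")
    write_note(notes, "progress", "Auth module migrated")
    write_note(notes, "plan", "Tests still pending")
    plan_notes = read_notes(notes, "plan")
    all_notes = read_notes(notes)
    missing = read_notes(Path(tmp) / "absent.md")

print(plan_notes)
```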

Sub-Agent Architectures (The Specialized Team):

- Handles complex, deep exploration tasks without polluting the main agent’s working memory.

- Specialized sub-agents do deep work in isolated context windows, then return only a condensed, distilled summary (e.g., 1-2k tokens) to the main coordinating agent.
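The structure of this pattern can be sketched with two hypothetical classes. Each `SubAgent` keeps its own context list, so all detailed findings stay there; the `Coordinator` receives only a capped summary. Truncating to 30 words is a crude stand-in for an LLM-written summary and for the 1-2k-token limit mentioned above.

```python
from dataclasses import dataclass, field


@dataclass
class SubAgent:
    """Hypothetical worker with its own isolated context."""
    name: str
    context: list[str] = field(default_factory=list)

    def explore(self, task: str, findings: list[str], max_summary_words: int = 30) -> str:
        # All detailed exploration stays in this sub-agent's own context.
        self.context.append(f"Task: {task}")
        self.context.extend(findings)
        # Crude stand-in for an LLM-written summary: keep only the first few words.
        words = " ".join(findings).split()
        return f"{self.name}: " + " ".join(words[:max_summary_words])


@dataclass
class Coordinator:
    context: list[str] = field(default_factory=list)

    def delegate(self, agent: SubAgent, task: str, findings: list[str]) -> None:
        summary = agent.explore(task, findings)
        self.context.append(summary)  # only the distilled result enters the lead agent's context


lead = Coordinator()
worker = SubAgent("db-explorer")
lead.delegate(worker, "Map the database schema", [f"table_{i}: 12 columns" for i in range(100)])
print(lead.context[0])
```

The coordinator's context grows by one short entry per delegated task, while each worker can use its full window for exploration.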

I am a Civil Engineering graduate (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in data science, especially neural networks and their applications in various areas.

Elevate your perspective with NextTech News, where innovation meets insight.

Discover the latest breakthroughs, get exclusive updates, and connect with a global network of future-focused thinkers.

Unlock tomorrow’s trends today: read more, subscribe to our newsletter, and become part of the NextTech community at NextTech-news.com