Qwen3-Max-Thinking is Alibaba’s new flagship reasoning model. It does not only scale parameters; it also changes how inference is done, with explicit control over thinking depth and built-in tools for search, memory, and code execution.

Model scale, data, and deployment

Qwen3-Max-Thinking is a trillion-parameter MoE flagship LLM pretrained on 36T tokens and built on the Qwen3 family as the top-tier reasoning model. The model targets long-horizon reasoning and code, not only casual chat. It runs with a context window of about 260k tokens, which supports repository-scale code, long technical reports, and multi-document analysis within a single prompt.

Qwen3-Max-Thinking is a closed model served through Qwen-Chat and Alibaba Cloud Model Studio with an OpenAI-compatible HTTP API. The same endpoint can be called with a Claude-style tool schema, so existing Anthropic or Claude Code flows can swap in Qwen3-Max-Thinking with minimal changes. There are no public weights, so usage is API based, which matches its positioning.
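As a rough illustration of what "OpenAI-compatible" means in practice, the sketch below builds (but does not send) a chat completions request using only the Python standard library. The base URL, the model id, and the helper name `build_chat_request` are assumptions made for this example and are not taken from the article; check the Model Studio documentation for the actual values.

```python
import json
import urllib.request

# Assumptions for illustration only (not stated in the article):
BASE_URL = "https://dashscope.aliyuncs.com/compatible-mode/v1"  # assumed endpoint
MODEL_ID = "qwen3-max-thinking"  # hypothetical model id


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build, without sending, an OpenAI-style chat completions request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would perform the call. Because the request shape follows the OpenAI convention, an existing OpenAI client should in principle only need a different base URL and key.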

Smart test-time scaling and experience-cumulative reasoning

Most large language models improve reasoning through simple test-time scaling, for example best-of-N sampling with multiple parallel chains of thought. That approach increases quality, but cost grows almost linearly with the number of samples. Qwen3-Max-Thinking introduces an experience-cumulative, multi-round test-time scaling strategy.

Instead of only sampling more in parallel, the model iterates within a single conversation, reusing intermediate reasoning traces as structured experience. After each round, it extracts useful partial conclusions, then focuses subsequent computation on unresolved parts of the question. This process is controlled by an explicit thinking budget that developers can adjust via API parameters such as enable_thinking and additional configuration fields.
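The multi-round idea can be sketched as a small loop. This is a toy illustration under stated assumptions, not Qwen's implementation: `solve_round` stands in for one reasoning pass of the model, `max_rounds` stands in for the thinking budget, and every name here is hypothetical.

```python
from typing import Callable, Dict, List

# Toy illustration only: `Solver` stands in for one model reasoning pass.
# None of these names come from Qwen's API.
Solver = Callable[[List[str], Dict[str, str]], Dict[str, str]]


def cumulative_reasoning(
    sub_questions: List[str],
    solve_round: Solver,
    max_rounds: int,
) -> Dict[str, str]:
    """Run up to `max_rounds` passes, reusing earlier conclusions each time."""
    experience: Dict[str, str] = {}  # partial conclusions kept across rounds
    for _ in range(max_rounds):
        unresolved = [q for q in sub_questions if q not in experience]
        if not unresolved:
            break  # everything is answered, so stop spending budget
        # Only unresolved parts are attempted; prior conclusions are context.
        experience.update(solve_round(unresolved, experience))
    return experience


def demo_solver(unresolved: List[str], experience: Dict[str, str]) -> Dict[str, str]:
    """Stub solver that settles one open sub-question per round."""
    first = unresolved[0]
    return {first: f"answer to {first} (given {len(experience)} prior facts)"}


result = cumulative_reasoning(["a", "b", "c"], demo_solver, max_rounds=2)
```

With a budget of two rounds the stub resolves only `a` and `b`. Raising `max_rounds` lets the loop finish `c` without redoing earlier work, which is the contrast with best-of-N sampling, where each extra sample starts from scratch.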

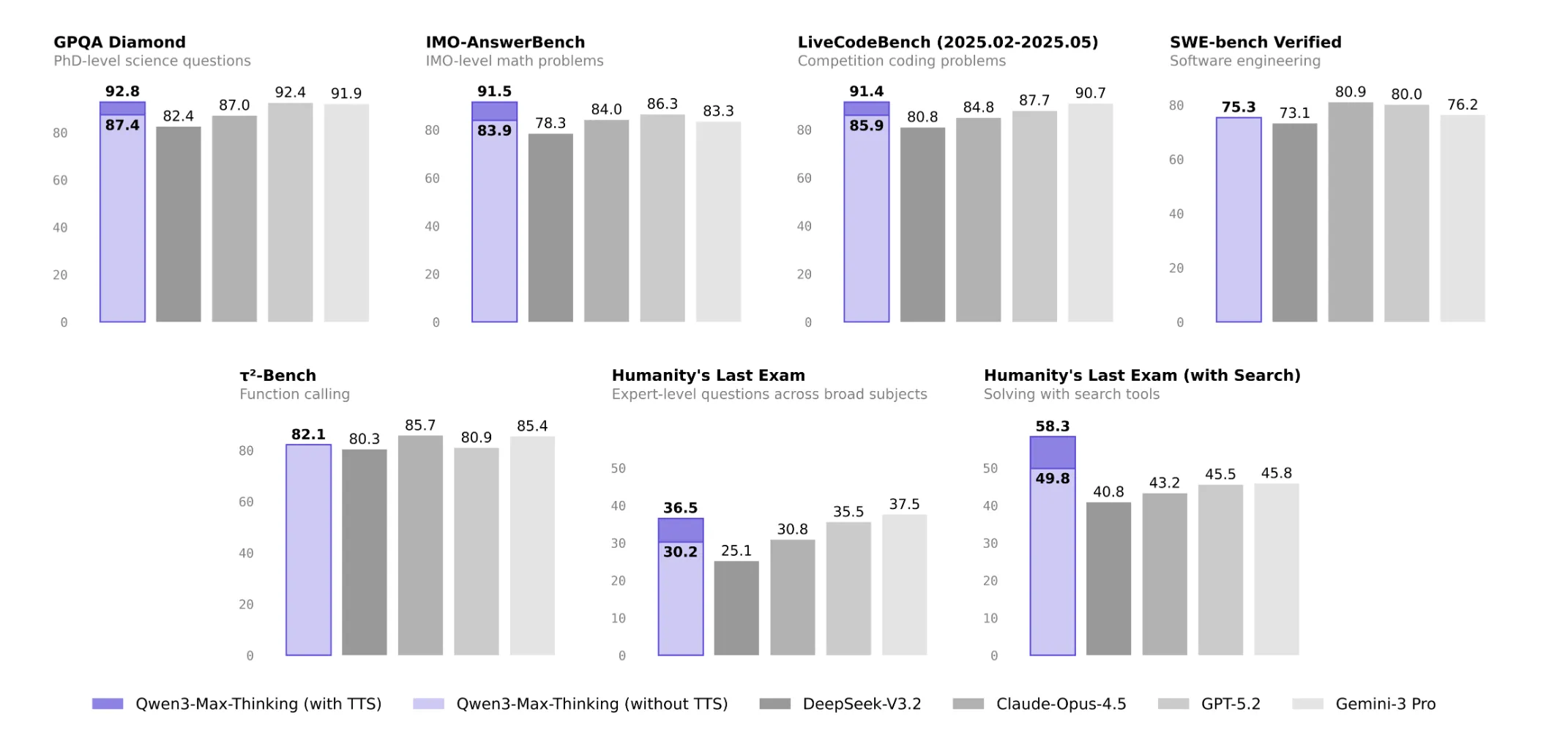

The reported effect is that accuracy rises without a proportional increase in token count. For example, Qwen’s own ablations show GPQA Diamond rising from around 90 to about 92.8, and LiveCodeBench v6 rising from about 88.0 to 91.4 under the experience-cumulative strategy at similar token budgets. This matters because it means higher reasoning quality can be driven by more efficient scheduling of compute, not only by more samples.

Native agent stack with Adaptive Tool Use

Qwen3-Max-Thinking integrates three tools as first-class capabilities: Search, Memory, and a Code Interpreter. Search connects to web retrieval so the model can fetch fresh pages, extract content, and ground its answers. Memory stores user- or session-specific state, which supports personalized reasoning over longer workflows. The Code Interpreter executes Python, which enables numeric verification, data transforms, and program synthesis with runtime checks.

The model uses Adaptive Tool Use to decide when to invoke these tools during a conversation. Tool calls are interleaved with internal thinking segments, rather than being orchestrated by an external agent. This design reduces the need for separate routers or planners and tends to reduce hallucinations, because the model can explicitly fetch missing information or verify calculations instead of guessing.
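To make the interleaving concrete, here is a minimal sketch in which a scripted policy plays the role of the model and chooses each next step itself: think, call a tool, or answer. The tool registry, the step format, and all function names are hypothetical; the real tools run on the provider side and are not exposed this way.

```python
import math
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-ins for Search, Memory, and the Code Interpreter.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": lambda query: f"[stub page about {query}]",
    "memory": lambda key: f"[stub stored value for {key}]",
    # Stub "interpreter": multiplies integers written as "a * b * ...".
    "code": lambda expr: str(math.prod(int(x) for x in expr.split("*"))),
}

# (kind, payload): kind is "think", "answer", "observation", or a tool name.
Step = Tuple[str, str]


def run_interleaved(policy: Callable[[List[Step]], Step], max_steps: int = 8) -> List[Step]:
    """Let `policy` (a stand-in for the model) pick every next step."""
    trace: List[Step] = []
    for _ in range(max_steps):
        kind, payload = policy(trace)
        trace.append((kind, payload))
        if kind == "answer":
            break
        if kind in TOOLS:
            # The tool output joins the trace so the next step can use it.
            trace.append(("observation", TOOLS[kind](payload)))
    return trace


def demo_policy(trace: List[Step]) -> Step:
    """Scripted stub: think, verify arithmetic with the code tool, then answer."""
    if not trace:
        return ("think", "I should verify 17 * 23 instead of guessing.")
    if trace[-1][0] == "think":
        return ("code", "17 * 23")
    return ("answer", f"17 * 23 = {trace[-1][1]}")


trace = run_interleaved(demo_policy)
```

There is no separate router in this loop: the same policy that reasons also decides when a tool is needed, and the final answer is built from the tool's observation.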

Tool ability is also benchmarked. On Tau² Bench, which measures function calling and tool orchestration, Qwen3-Max-Thinking reports a score of 82.1, comparable with other frontier models in this category.

Benchmark profile across knowledge, reasoning, and search

On 19 public benchmarks, Qwen3-Max-Thinking is positioned at or near the same level as GPT 5.2 Thinking, Claude Opus 4.5, and Gemini 3 Pro. For knowledge tasks, reported scores include 85.7 on MMLU-Pro, 92.8 on MMLU-Redux, and 93.7 on C-Eval, where Qwen leads the group on Chinese language evaluation.

For hard reasoning, it records 87.4 on GPQA, 98.0 on HMMT Feb 25, 94.7 on HMMT Nov 25, and 83.9 on IMOAnswerBench, which puts it in the top tier of current math and science models. On coding and software engineering it reaches 85.9 on LiveCodeBench v6 and 75.3 on SWE-Bench Verified.

In the base HLE configuration Qwen3-Max-Thinking scores 30.2, below Gemini 3 Pro at 37.5 and GPT 5.2 Thinking at 35.5. In a tool-enabled HLE setup, the official comparison table that includes web search integration shows Qwen3-Max-Thinking at 49.8, ahead of GPT 5.2 Thinking at 45.5 and Gemini 3 Pro at 45.8. With its most aggressive experience-cumulative test-time scaling configuration on HLE with tools, Qwen3-Max-Thinking reaches 58.3 while GPT 5.2 Thinking stays at 45.5, although that higher number is for a heavier inference mode than the standard comparison table.
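A quick way to read the HLE figures is to compute how much each model gains when tools are enabled. The snippet below only does arithmetic on the scores quoted in the paragraph above; the dictionary layout and the function name are choices made for this example.

```python
# Scores copied from the paragraph above; nothing here is newly measured.
HLE_SCORES = {
    "base": {
        "Qwen3-Max-Thinking": 30.2,
        "Gemini 3 Pro": 37.5,
        "GPT 5.2 Thinking": 35.5,
    },
    "with_tools": {
        "Qwen3-Max-Thinking": 49.8,
        "Gemini 3 Pro": 45.8,
        "GPT 5.2 Thinking": 45.5,
    },
}


def gain_from_tools(model: str) -> float:
    """Points gained on HLE when moving from the base to the tool-enabled setup."""
    delta = HLE_SCORES["with_tools"][model] - HLE_SCORES["base"][model]
    return round(delta, 1)


gains = {model: gain_from_tools(model) for model in HLE_SCORES["base"]}
largest_gain = max(gains, key=gains.get)
```

On these numbers Qwen3-Max-Thinking gains the most from tool access, which is how it moves from last to first among the three models in this comparison.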

Key Takeaways

- Qwen3-Max-Thinking is a closed, API-only flagship reasoning model from Alibaba, built on a backbone of more than 1 trillion parameters trained on about 36 trillion tokens, with a 262144-token context window.

- The model introduces experience-cumulative test-time scaling, where it reuses intermediate reasoning across multiple rounds, improving benchmarks such as GPQA Diamond and LiveCodeBench v6 at similar token budgets.

- Qwen3-Max-Thinking integrates Search, Memory, and a Code Interpreter as native tools and uses Adaptive Tool Use so the model itself decides when to browse, recall state, or execute Python during a conversation.

- On public benchmarks it reports scores competitive with GPT 5.2 Thinking, Claude Opus 4.5, and Gemini 3 Pro, including strong results on MMLU-Pro, GPQA, HMMT, IMOAnswerBench, LiveCodeBench v6, SWE-Bench Verified, and Tau² Bench.

Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.