Do you actually need a giant VLM when dense Qwen3-VL 4B/8B (Instruct/Thinking) with FP8 runs in low VRAM yet retains 256K→1M context and the full capability surface? Alibaba’s Qwen team has expanded its multimodal lineup with dense Qwen3-VL models at 4B and 8B scales, each shipping in two task profiles (Instruct and Thinking), plus FP8-quantized checkpoints for low-VRAM deployment. The drop arrives as a smaller, edge-friendly complement to the previously released 30B (MoE) and 235B (MoE) tiers and keeps the same capability surface: image/video understanding, OCR, spatial grounding, and GUI/agent control.

What’s in the release?

SKUs and variants: The new additions comprise four dense models, Qwen3-VL-4B and Qwen3-VL-8B, each in Instruct and Thinking editions, alongside FP8 versions of the 4B/8B Instruct and Thinking checkpoints. The official announcement explicitly frames these as “compact, dense” models with lower VRAM usage and full Qwen3-VL capabilities retained.

Context length and capability surface: The model cards list native 256K context with expandability to 1M, and document the full feature set: long-document and video comprehension, 32-language OCR, 2D/3D spatial grounding, visual coding, and agentic GUI control on desktop and mobile. These attributes carry over to the new 4B/8B SKUs.

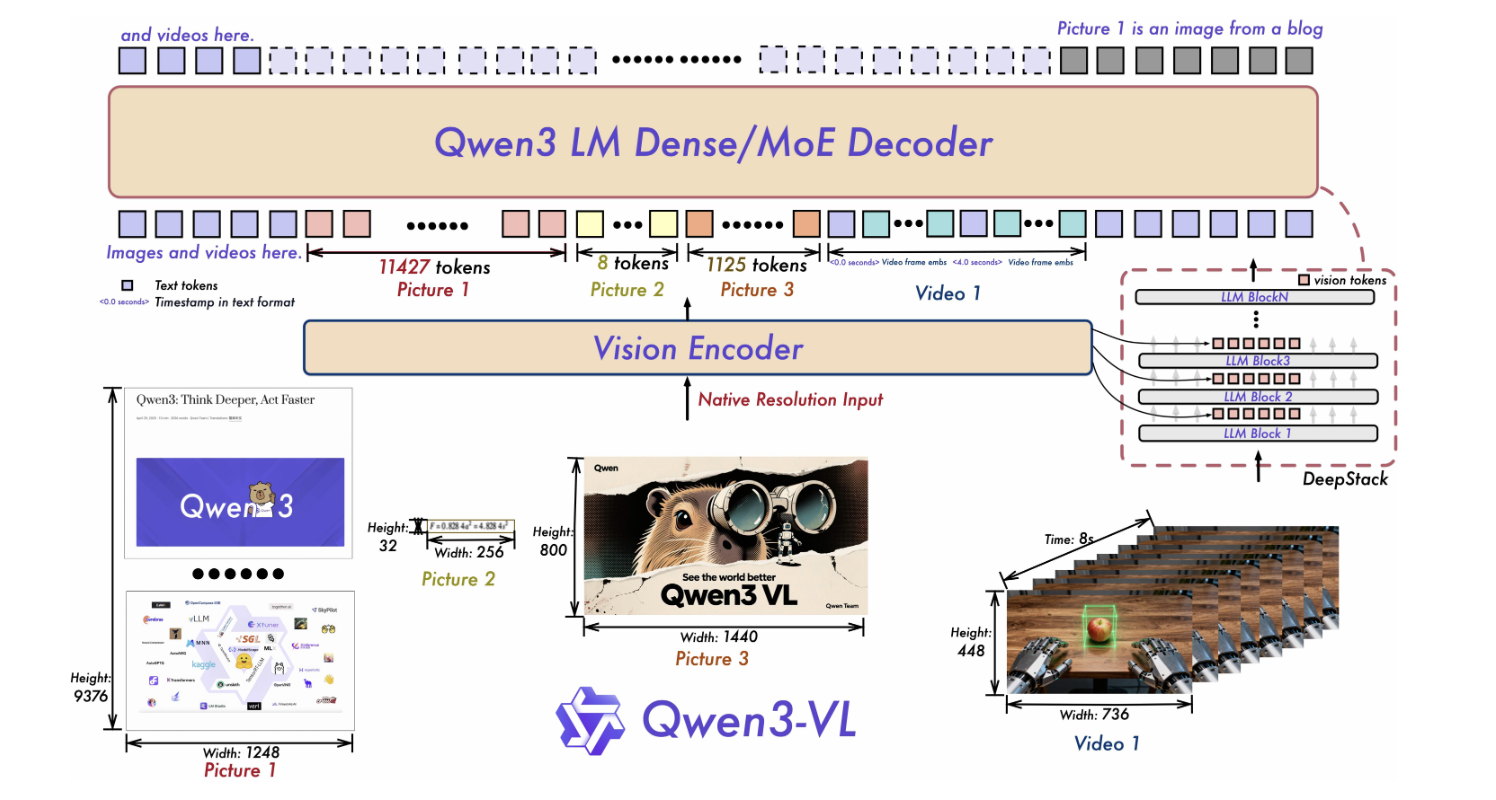

Architecture notes: Qwen3-VL highlights three core updates: Interleaved-MRoPE for robust positional encoding over time/width/height (long-horizon video), DeepStack for fusing multi-level ViT features and sharpening image–text alignment, and Text–Timestamp Alignment beyond T-RoPE for event localization in video. These design details appear in the new cards as well, signaling architectural continuity across sizes.

Project timeline: The Qwen3-VL GitHub “News” section records the publication of Qwen3-VL-4B (Instruct/Thinking) and Qwen3-VL-8B (Instruct/Thinking) on Oct 15, 2025, following earlier releases of the 30B MoE tier and organization-wide FP8 availability.

FP8: deployment-relevant details

Numerics and parity claim: The FP8 repositories state fine-grained FP8 quantization with block size 128, with performance metrics nearly identical to the original BF16 checkpoints. For teams evaluating precision trade-offs on multimodal stacks (vision encoders, cross-modal fusion, long-context attention), having vendor-produced FP8 weights reduces the re-quantization and re-validation burden.
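To make “fine-grained FP8 with block size 128” concrete, here is a minimal NumPy sketch of block-wise scaling. It is not Qwen’s quantization code. It assumes the E4M3 format (maximum finite value 448), assumes one scale per 128 contiguous values along the last axis, and replaces a real FP8 cast with a crude rounding step that ignores subnormals. The function names are hypothetical.

```python
import numpy as np

FP8_E4M3_MAX = 448.0  # largest finite E4M3 value (assumed target format)
BLOCK = 128           # block size quoted on the FP8 cards


def round_to_e4m3_grid(x):
    """Crude E4M3 stand-in: keep 4 significant bits and clip to +/-448.
    Subnormals and NaN handling are ignored in this sketch."""
    x = np.clip(x, -FP8_E4M3_MAX, FP8_E4M3_MAX)
    mant, exp = np.frexp(x)           # x = mant * 2**exp, 0.5 <= |mant| < 1
    mant = np.round(mant * 16) / 16   # 1 implicit + 3 stored mantissa bits
    return np.ldexp(mant, exp)


def quantize_blockwise(w, block=BLOCK):
    """Return (q, scales): q on the simulated FP8 grid, one scale per block."""
    rows, cols = w.shape
    assert cols % block == 0, "sketch assumes the last axis divides evenly"
    blocks = w.reshape(rows, cols // block, block)
    amax = np.abs(blocks).max(axis=-1, keepdims=True)
    # Map each block's largest magnitude onto the top of the FP8 range.
    scales = np.where(amax > 0, amax / FP8_E4M3_MAX, 1.0)
    q = round_to_e4m3_grid(blocks / scales)
    return q, scales


def dequantize_blockwise(q, scales):
    """Undo the per-block scaling and restore the original 2D shape."""
    return (q * scales).reshape(q.shape[0], -1)


rng = np.random.default_rng(0)
w = rng.normal(size=(4, 256)).astype(np.float32)
w[0, 3] = 50.0  # an outlier only inflates the scale of its own block
q, scales = quantize_blockwise(w)
w_hat = dequantize_blockwise(q, scales)
print("scales shape:", scales.shape)
print("max abs error:", float(np.abs(w_hat - w).max()))
```

The point of per-block scales is visible in the outlier: with a single scale for the whole tensor, one large value would coarsen the grid for every other weight, whereas here it only affects the 128 values that share its block.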

Tooling status: The 4B-Instruct-FP8 card notes that Transformers does not yet load these FP8 weights directly, and recommends vLLM or SGLang for serving; the card includes working launch snippets. Separately, the vLLM recipes guide recommends FP8 checkpoints for H100 memory efficiency. Together, these point to immediate, supported paths for low-VRAM inference.
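Once a checkpoint is being served, a client talks to it over HTTP. The sketch below builds an image-plus-text chat request in the OpenAI-compatible format that vLLM and SGLang servers expose. It assumes a server is already running (launch commands are on the model card and are not reproduced here), assumes the repository ID `Qwen/Qwen3-VL-4B-Instruct-FP8`, and uses a placeholder image URL. `build_chat_request` is a hypothetical helper, not part of any library.

```python
import json


def build_chat_request(image_url, question,
                       model="Qwen/Qwen3-VL-4B-Instruct-FP8",  # assumed repo ID
                       max_tokens=256):
    """Build an OpenAI-style chat completion body with one image and one prompt."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": image_url}},
                {"type": "text", "text": question},
            ],
        }],
    }


payload = build_chat_request(
    "https://example.com/receipt.png",  # placeholder image, not a real asset
    "Transcribe the text in this image.",
)
body = json.dumps(payload, indent=2)
print(body)
```

The resulting JSON would be sent as a POST to the server’s `/v1/chat/completions` route; host and port depend on how the server was started.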

Key Takeaways

- Qwen released dense Qwen3-VL 4B and 8B models, each in Instruct and Thinking variants, with FP8 checkpoints.

- The FP8 checkpoints use fine-grained FP8 (block size 128) with near-BF16 metrics; Transformers loading is not yet supported, so use vLLM or SGLang.

- The capability surface is preserved: 256K→1M context, 32-language OCR, spatial grounding, video reasoning, and GUI/agent control.

- Model card-reported sizes: Qwen3-VL-4B ≈ 4.83B params; Qwen3-VL-8B-Instruct ≈ 8.77B params.
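For a rough sense of what the reported parameter counts mean for VRAM, the back-of-the-envelope sketch below converts them into weight footprints at 2 bytes per parameter (BF16) and 1 byte per parameter (FP8). It is an estimate only: it assumes every parameter is stored at the stated precision and ignores FP8 scale tensors, any layers kept in higher precision, the KV cache, and activations, all of which add to real usage.

```python
GIB = 2 ** 30

# Parameter counts as reported on the model cards.
PARAMS = {
    "Qwen3-VL-4B": 4.83e9,
    "Qwen3-VL-8B-Instruct": 8.77e9,
}

# Storage cost per parameter for each precision.
BYTES_PER_PARAM = {"BF16": 2, "FP8": 1}


def weight_gib(n_params, precision):
    """Approximate weight-only footprint in GiB."""
    return n_params * BYTES_PER_PARAM[precision] / GIB


for name, n in PARAMS.items():
    bf16 = weight_gib(n, "BF16")
    fp8 = weight_gib(n, "FP8")
    print(f"{name}: BF16 ~{bf16:.1f} GiB, FP8 ~{fp8:.1f} GiB")
```

Under these assumptions FP8 halves the weight footprint, which is the main reason it matters for single-GPU budgets; long contexts can still dominate memory through the KV cache.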

Qwen’s decision to ship dense Qwen3-VL 4B/8B in both Instruct and Thinking forms with FP8 checkpoints is the practical part of the story: lower-VRAM, deployment-ready weights (fine-grained FP8, block size 128) and explicit serving guidance (vLLM/SGLang) make the models straightforward to deploy. The capability surface (256K context expandable to 1M, 32-language OCR, spatial grounding, video understanding, and agent control) remains intact at these smaller scales, which matters more than leaderboard rhetoric for teams targeting single-GPU or edge budgets.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.
