How do you build a single vision language action model that can control many different dual arm robots in the real world? LingBot-VLA is Ant Group Robbyant's new Vision Language Action (VLA) foundation model that targets practical robot manipulation in the real world. It is trained on about 20,000 hours of teleoperated bimanual data collected from 9 dual arm robot embodiments and is evaluated on the large scale GM-100 benchmark across 3 platforms. The model is designed for cross morphology generalization, data efficient post-training, and high training throughput on commodity GPU clusters.

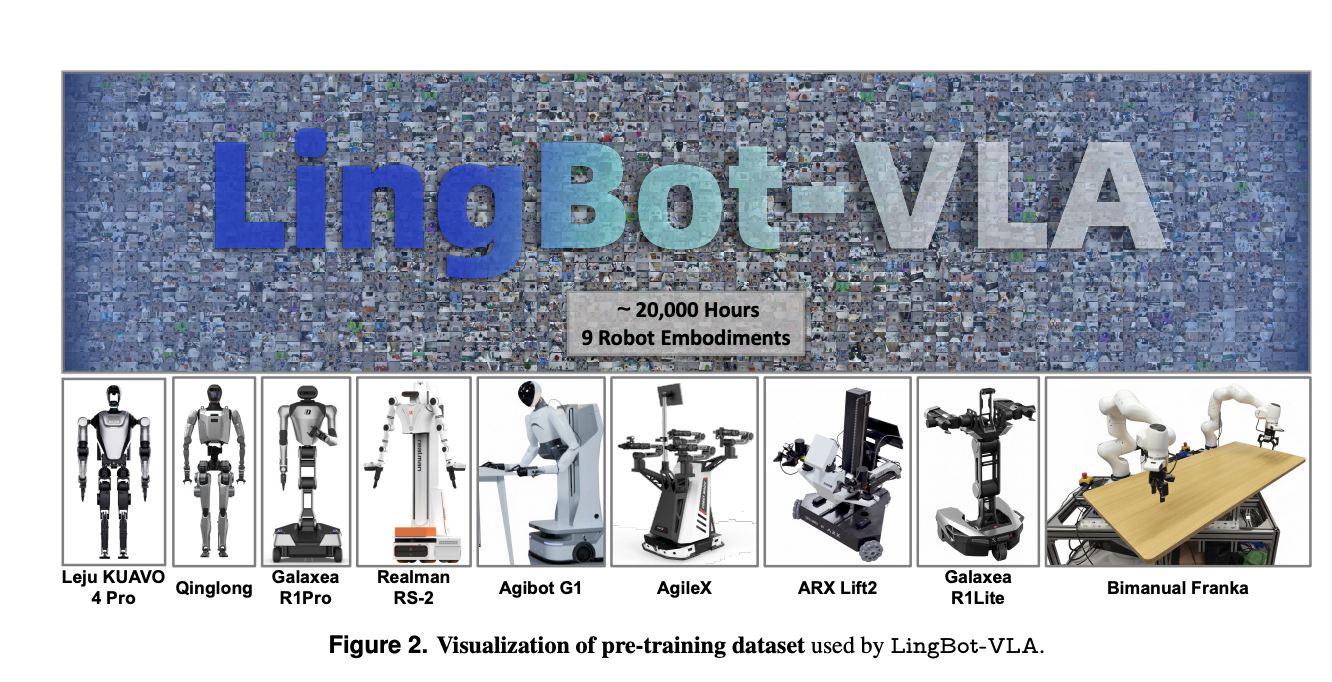

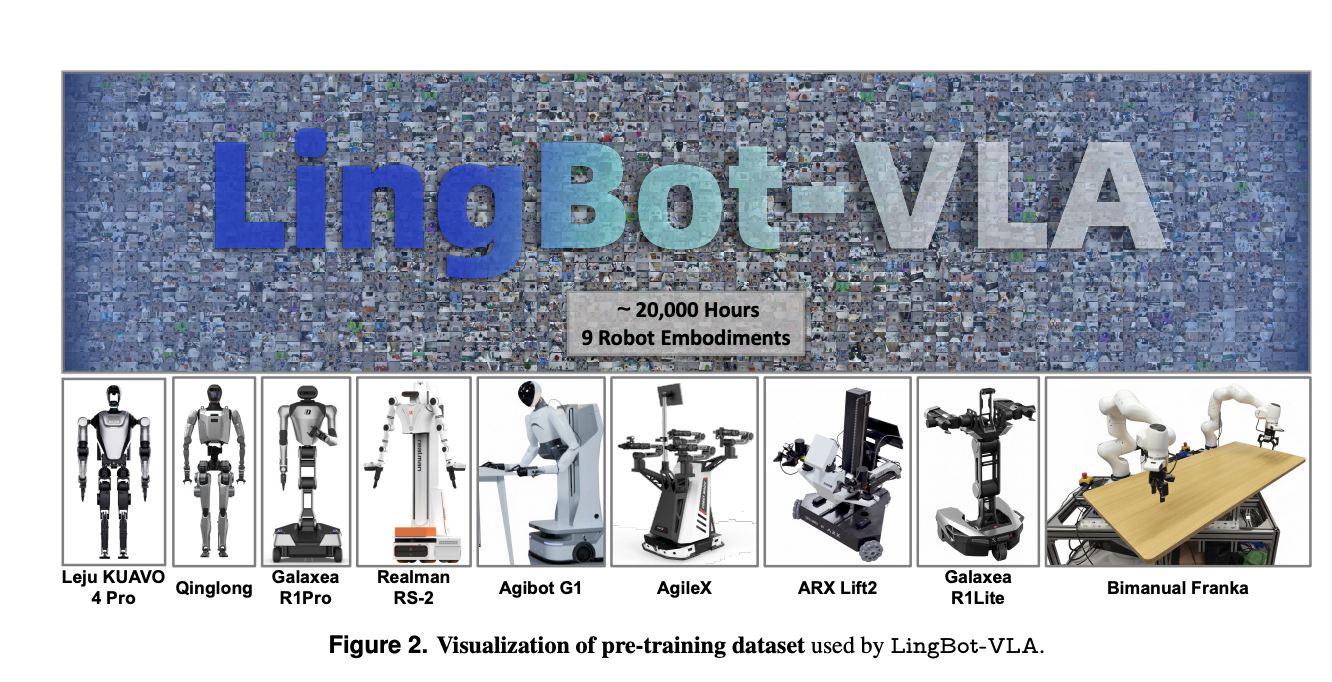

Large scale dual arm dataset across 9 robot embodiments

The pre-training dataset is built from real world teleoperation on 9 popular dual arm configurations: AgiBot G1, AgileX, Galaxea R1Lite, Galaxea R1Pro, Realman Rs 02, Leju KUAVO 4 Pro, Qinglong humanoid, ARX Lift2, and a Bimanual Franka setup. All systems have dual 6 or 7 degree of freedom arms with parallel grippers and multiple RGB-D cameras that provide multi view observations.

Teleoperation uses VR control for AgiBot G1 and isomorphic arm control for AgileX. For each scene, human annotators segment the recorded videos from all views into clips that correspond to atomic actions. Static frames at the start and end of each clip are removed to reduce redundancy. Task level and sub-task level language instructions are then generated with Qwen3-VL-235B-A22B. This pipeline yields synchronized sequences of images, instructions, and action trajectories for pre-training.
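
The static-frame trimming step can be sketched as follows. This is a minimal illustration, not the team's pipeline: it assumes a clip is a list of per-frame joint-state vectors and that a frame counts as "static" when no joint moves more than a hypothetical threshold `eps` from the previous frame. The article does not say how static frames are actually detected.

```python
from typing import List, Sequence

Frame = Sequence[float]  # joint/gripper state for one timestep (assumed layout)


def _moving(prev: Frame, cur: Frame, eps: float) -> bool:
    # A transition "moves" if any joint changes by more than eps.
    return any(abs(c - p) > eps for p, c in zip(prev, cur))


def trim_static_frames(clip: List[Frame], eps: float = 1e-3) -> List[Frame]:
    """Drop leading and trailing frames where the robot is (nearly) still.

    Hypothetical helper: keeps the last rest frame before motion starts and
    the frame reached by the final motion, so the clip starts and ends at rest.
    """
    if len(clip) < 2:
        return list(clip)
    # moving[k] describes the transition from frame k to frame k + 1.
    moving = [_moving(clip[i - 1], clip[i], eps) for i in range(1, len(clip))]
    if not any(moving):
        return []  # entirely static clip: nothing worth keeping
    first = moving.index(True)
    last = len(moving) - 1 - moving[::-1].index(True)
    return list(clip[first : last + 2])
```

For example, a clip whose joint values are `0, 0, 0, 1, 2, 2, 2` is trimmed to `0, 1, 2`.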

To characterize action diversity, the research team visualizes the most frequent atomic actions in the training and test sets as word clouds. About 50 percent of atomic actions in the test set do not appear among the 100 most frequent actions in the training set. This gap means that evaluation stresses cross task generalization rather than frequency based memorization.
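
The 50 percent figure is a simple set computation. Here is a sketch, assuming atomic actions are available as plain string labels. The helper name is hypothetical, and because the article does not say whether unique test actions or all occurrences are counted, this version counts unique labels.

```python
from collections import Counter
from typing import Iterable


def fraction_outside_top_k(train_actions: Iterable[str],
                           test_actions: Iterable[str],
                           k: int = 100) -> float:
    """Share of distinct test atomic actions not among the k most frequent training actions."""
    top_k = {action for action, _ in Counter(train_actions).most_common(k)}
    test_unique = set(test_actions)
    if not test_unique:
        return 0.0
    outside = test_unique - top_k
    return len(outside) / len(test_unique)
```

With `k=100` and the real label lists, a result near `0.5` would correspond to the statistic quoted above.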

Architecture, Mixture of Transformers, and Flow Matching actions

LingBot-VLA combines a strong multimodal backbone with an action expert through a Mixture of Transformers architecture. The vision language backbone is Qwen2.5-VL, which encodes multi-view operational images and the natural language instruction into a sequence of multimodal tokens. In parallel, the action expert receives the robot's proprioceptive state and chunks of past actions. Both branches share a self-attention module that performs layer-wise joint sequence modeling over observation and action tokens.
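
A Mixture of Transformers layer keeps separate weights per branch but lets all tokens meet in a single attention operation. The single-head NumPy sketch below illustrates that idea under simplifying assumptions: both branches share one hidden size `d`, and layer norms, feed-forward blocks, and multi-head splitting are omitted. Real implementations may use different widths per branch. The function and weight names are hypothetical, not LingBot-VLA's code.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def mot_shared_attention(obs: np.ndarray, act: np.ndarray,
                         w_obs: dict, w_act: dict,
                         mask: np.ndarray) -> tuple:
    """One single-head Mixture-of-Transformers attention layer (simplified sketch).

    obs: (n_obs, d) tokens from the vision-language backbone
    act: (n_act, d) tokens from the action expert
    w_obs / w_act: branch-specific {"q", "k", "v", "o"} (d, d) weight matrices
    mask: (n_obs + n_act, n_obs + n_act) boolean, True = may attend
    """
    # Each branch projects its own tokens with its own weights...
    q = np.concatenate([obs @ w_obs["q"], act @ w_act["q"]])
    k = np.concatenate([obs @ w_obs["k"], act @ w_act["k"]])
    v = np.concatenate([obs @ w_obs["v"], act @ w_act["v"]])
    # ...but attention runs over the joint observation + action sequence.
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(mask, scores, -np.inf)
    mixed = softmax(scores) @ v
    n_obs = obs.shape[0]
    # Outputs are routed back through branch-specific output projections.
    return mixed[:n_obs] @ w_obs["o"], mixed[n_obs:] @ w_act["o"]
```

The `mask` argument is where an attention pattern such as the blockwise causal mask described for LingBot-VLA would plug in; every row must allow at least one key, or the softmax is undefined.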

At each time step the model forms an observation sequence that concatenates tokens from 3 camera views, the task instruction, and the robot state. The action sequence is a future action chunk with a temporal horizon of 50 during pre-training. The training objective is conditional Flow Matching: the model learns a vector field that transports Gaussian noise to the ground truth action trajectory along a linear probability path. This gives a continuous action representation and produces smooth, temporally coherent control suited to precise dual arm manipulation.
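
The training objective and the matching inference loop can be sketched in NumPy. This assumes the common rectified-flow convention: `t = 0` is pure noise and `t = 1` is the ground truth chunk. Some π0-style codebases run time in the opposite direction. It also assumes a hypothetical action dimension of 14 and a `velocity_fn(x_t, t, obs)` callable that stands in for the action expert. Only the horizon of 50 comes from the article.

```python
import numpy as np

HORIZON = 50      # action chunk length used in pre-training (from the article)
ACTION_DIM = 14   # hypothetical: e.g. 2 arms x (6 joints + 1 gripper)


def flow_matching_loss(velocity_fn, actions, obs, rng):
    """Conditional flow matching loss on one action chunk.

    Linear path (assumed convention): x_t = (1 - t) * noise + t * actions,
    so the target velocity dx_t/dt is simply actions - noise.
    """
    noise = rng.standard_normal(actions.shape)
    t = rng.uniform()
    x_t = (1.0 - t) * noise + t * actions
    target = actions - noise
    pred = velocity_fn(x_t, t, obs)
    return float(np.mean((pred - target) ** 2))


def sample_actions(velocity_fn, obs, rng, steps=10):
    """Integrate the learned field from Gaussian noise (t=0) to an action chunk (t=1) with Euler steps."""
    x = rng.standard_normal((HORIZON, ACTION_DIM))
    dt = 1.0 / steps
    for i in range(steps):
        x = x + dt * velocity_fn(x, i * dt, obs)
    return x
```

During training, `velocity_fn` would be the action expert conditioned on the observation tokens. At inference time, `sample_actions` turns fresh noise into a 50-step chunk. The choice of 10 Euler steps is arbitrary here.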

LingBot-VLA uses blockwise causal attention over the joint sequence. Observation tokens attend to each other bidirectionally. Action tokens attend to all observation tokens but only to past action tokens. This mask prevents information from future actions from leaking into the current observations, while still letting the action expert exploit the full multimodal context at each decision step.
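
The attention pattern described above can be written as a boolean mask over the token sequence `[observation tokens | action tokens]`. This NumPy sketch assumes that "past action tokens" includes the current action token itself, which is the usual causal convention. The function name is hypothetical.

```python
import numpy as np


def blockwise_causal_mask(n_obs: int, n_act: int) -> np.ndarray:
    """Boolean attention mask for [observation tokens | action tokens].

    mask[i, j] is True when query token i may attend to key token j.
    Assumption: "past action tokens" includes the current action token itself.
    """
    n = n_obs + n_act
    mask = np.zeros((n, n), dtype=bool)
    # Observation block: full bidirectional attention among observations only.
    mask[:n_obs, :n_obs] = True
    # Action rows: see every observation token...
    mask[n_obs:, :n_obs] = True
    # ...and only themselves and earlier action tokens (lower-triangular).
    mask[n_obs:, n_obs:] = np.tril(np.ones((n_act, n_act), dtype=bool))
    return mask
```

For 2 observation tokens and 3 action tokens, the observation rows read `[1, 1, 0, 0, 0]`, and the action rows grow from `[1, 1, 1, 0, 0]` to `[1, 1, 1, 1, 1]`. The zero upper-right block is what keeps action information out of the observations.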

Spatial perception via LingBot-Depth distillation

Many VLA models struggle with depth reasoning when depth sensors fail or return sparse measurements. LingBot-VLA addresses this by integrating LingBot-Depth, a separate spatial perception model based on Masked Depth Modeling. LingBot-Depth is trained in a self-supervised manner on a large RGB-D corpus. It learns to reconstruct dense metric depth when parts of the depth map are masked, typically in regions where physical sensors tend to fail.
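
The masked-depth objective can be illustrated with a small NumPy sketch. It assumes an L1 loss computed only on hidden pixels and a random square-patch masking scheme. Both choices are assumptions, since the article does not give LingBot-Depth's exact loss or masking strategy, and the helper names are hypothetical.

```python
import numpy as np


def masked_depth_loss(pred_depth, gt_depth, mask):
    """L1 reconstruction error on masked pixels only (simplified MDM-style objective).

    pred_depth, gt_depth: (H, W) metric depth in meters
    mask: (H, W) boolean, True where the input depth was hidden from the model
    """
    if not mask.any():
        return 0.0
    return float(np.abs(pred_depth[mask] - gt_depth[mask]).mean())


def make_masked_input(depth, rng, patch=4, ratio=0.5):
    """Hide random square patches of a depth map; returns (masked_depth, mask).

    Hypothetical masking scheme: in practice, sensor failure regions
    (glass, dark or shiny surfaces) could shape the mask instead.
    """
    h, w = depth.shape
    mask = np.zeros((h, w), dtype=bool)
    for y in range(0, h, patch):
        for x in range(0, w, patch):
            if rng.uniform() < ratio:
                mask[y:y + patch, x:x + patch] = True
    # 0 marks "no reading", as RGB-D sensors commonly report missing depth.
    masked = np.where(mask, 0.0, depth)
    return masked, mask
```

Because the loss is computed only where the input was hidden, the model cannot score well by copying visible depth values through. It has to infer the missing geometry from context.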

In LingBot-VLA, the visual queries from each camera view are aligned with LingBot-Depth tokens through a projection layer and a distillation loss. Cross attention maps the VLM queries into the depth latent space, and training minimizes their difference from the LingBot-Depth features. This injects geometry aware information into the policy and improves performance on tasks that require accurate 3D spatial reasoning, such as insertion, stacking, and folding under clutter and occlusion.
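
The distillation term can be sketched as a feature-matching loss. This simplified NumPy version makes three assumptions. It replaces the cross-attention step with a single linear projection. It assumes a one-to-one correspondence between VLM query tokens and depth tokens. It also uses mean squared error and averages over views. The article does not specify any of these details, and the projection shape is hypothetical.

```python
import numpy as np


def depth_distillation_loss(vlm_queries, depth_tokens, w_proj):
    """MSE between projected VLM visual queries and frozen depth-teacher tokens.

    vlm_queries: (n_tokens, d_vlm) visual query features for one camera view
    depth_tokens: (n_tokens, d_depth) LingBot-Depth features (teacher, no gradient)
    w_proj: (d_vlm, d_depth) learnable projection (hypothetical shape)
    """
    student = vlm_queries @ w_proj  # map into the depth latent space
    return float(np.mean((student - depth_tokens) ** 2))


def multi_view_distillation(views, w_proj):
    """Average the per-view loss over (queries, depth_tokens) pairs, one per camera (assumed weighting)."""
    return float(np.mean([depth_distillation_loss(q, d, w_proj) for q, d in views]))
```

In a real training loop, the teacher features would be computed without gradients, so only the policy side and the projection are updated.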

GM-100 real world benchmark across 3 platforms

The main evaluation uses GM-100, a real world benchmark with 100 manipulation tasks and 130 filtered teleoperated trajectories per task on each of 3 hardware platforms. Experiments compare LingBot-VLA with π0.5, GR00T N1.6, and WALL-OSS under a shared post-training protocol: all methods fine-tune from public checkpoints on the same dataset, with batch size 256, for 20 epochs. Success Rate measures completion of all subtasks within 3 minutes, and Progress Score tracks partial completion.
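
The two metrics can be sketched as follows. The 3-minute limit comes from the article. Defining Progress Score as the fraction of subtasks finished within that limit is an assumption, since the benchmark may weight stages differently. The `Trial` structure is hypothetical.

```python
from dataclasses import dataclass
from typing import List

TIME_LIMIT_S = 180.0  # 3-minute budget per trial (from the article)


@dataclass
class Trial:
    n_subtasks: int
    # Completion times (seconds since start) of the subtasks that were finished.
    completion_times: List[float]


def success(trial: Trial) -> bool:
    """A trial succeeds only if every subtask is done within the time limit."""
    done = [t for t in trial.completion_times if t <= TIME_LIMIT_S]
    return len(done) == trial.n_subtasks


def progress(trial: Trial) -> float:
    """Fraction of subtasks completed within the time limit (assumed definition)."""
    done = [t for t in trial.completion_times if t <= TIME_LIMIT_S]
    return len(done) / trial.n_subtasks


def summarize(trials: List[Trial]) -> tuple:
    """Return (Success Rate %, Progress Score %) averaged over trials."""
    sr = 100.0 * sum(success(t) for t in trials) / len(trials)
    ps = 100.0 * sum(progress(t) for t in trials) / len(trials)
    return sr, ps
```

For scale, 100 tasks with 130 trajectories each gives 13,000 post-training trajectories per platform, or 39,000 across the 3 platforms.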

On GM-100, LingBot-VLA with depth achieves state-of-the-art averages across the three platforms: 17.30 percent average Success Rate (SR) and 35.41 percent average Progress Score (PS). π0.5 reaches 13.02 percent SR and 27.65 percent PS. GR00T N1.6 and WALL-OSS trail at 7.59 percent SR / 15.99 percent PS and 4.05 percent SR / 10.35 percent PS, respectively. LingBot-VLA without depth already outperforms GR00T N1.6 and WALL-OSS, and the depth variant adds further gains.

In RoboTwin 2.0 simulation with 50 tasks, models are trained on 50 demonstrations per task in clean scenes and 500 per task in randomized scenes. LingBot-VLA with depth reaches 88.56 percent average Success Rate in clean scenes and 86.68 percent in randomized scenes, versus 82.74 percent and 76.76 percent for π0.5. The margin grows from 5.82 points in clean scenes to 9.92 points in randomized ones. The gains from the architecture and depth integration therefore hold, and widen, under strong domain randomization.
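
The margins over π0.5 can be computed directly from the reported averages. The numbers below are copied from the results above. Expressing them as relative gains is just one way to frame them.

```python
# Reported GM-100 averages in percent: (Success Rate, Progress Score).
GM100 = {
    "LingBot-VLA (depth)": (17.30, 35.41),
    "pi0.5": (13.02, 27.65),
    "GR00T N1.6": (7.59, 15.99),
    "WALL-OSS": (4.05, 10.35),
}
# Reported RoboTwin 2.0 average Success Rate in percent: (clean, randomized).
ROBOTWIN = {
    "LingBot-VLA (depth)": (88.56, 86.68),
    "pi0.5": (82.74, 76.76),
}


def gaps(table, ours, baseline):
    """Per column: (absolute gap in percentage points, relative gain in percent)."""
    return [(round(a - b, 2), round(100.0 * (a - b) / b, 1))
            for a, b in zip(table[ours], table[baseline])]


ours = "LingBot-VLA (depth)"
print(gaps(GM100, ours, "pi0.5"))     # SR and PS margins over pi0.5
print(gaps(ROBOTWIN, ours, "pi0.5"))  # clean and randomized margins
```

Relative gains look larger on GM-100 mainly because the absolute success rates there are much lower than in simulation.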

Scaling behavior and data efficient post-training

The research team analyzes scaling laws by varying pre-training data from 3,000 to 20,000 hours on a subset of 25 tasks. Both Success Rate and Progress Score improve monotonically with data volume, with no saturation at the largest scale studied. The team presents this as the first empirical evidence that VLA models maintain favorable scaling on real robot data at this size.
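
A scaling check like this could be run with two small helpers. The first tests monotonicity. The second fits a trend of score against the logarithm of data hours. The log-linear form is a common choice for data-scaling plots, but it is an assumption here, not the paper's stated fit. No per-budget scores from the paper are reproduced here, so the inputs would have to be read off its scaling curves.

```python
import math
from typing import Sequence, Tuple


def is_monotonic_increasing(scores: Sequence[float]) -> bool:
    """True if each score is at least the previous one."""
    return all(b >= a for a, b in zip(scores, scores[1:]))


def fit_log_linear(hours: Sequence[float], scores: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of score = a + b * ln(hours); returns (a, b).

    Assumed functional form for illustration only; the paper may fit another law.
    """
    xs = [math.log(h) for h in hours]
    n = len(xs)
    mx, my = sum(xs) / n, sum(scores) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, scores))
    b = sxy / sxx
    return my - b * mx, b
```

A positive slope `b` together with monotonic scores is consistent with "no saturation", but only within the 3,000 to 20,000 hour range that was measured.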

They also study the data efficiency of post-training on AgiBot G1 using 8 representative GM-100 tasks. With only 80 demonstrations per task, LingBot-VLA already surpasses π0.5 trained on the full 130-demonstration set in both Success Rate and Progress Score, and the gap widens as more trajectories are added. This indicates that the pre-trained policy transfers with only dozens to around 100 task specific trajectories, which directly reduces adaptation cost for new robots or tasks.
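
A data-efficiency sweep needs reproducible per-task subsets at each budget. The following is a minimal sketch of one reasonable way to build them. It is a hypothetical helper, since the article does not describe how the subsets were sampled. A fixed per-task shuffle keeps the budgets nested, so a larger budget only ever adds trajectories.

```python
import random
from typing import Dict, List, Sequence


def subsample_demos(demos_by_task: Dict[str, Sequence], k: int, seed: int = 0) -> Dict[str, List]:
    """Keep the first k demos of a fixed per-task shuffle (all of them if fewer than k).

    Hypothetical helper. Because the shuffle depends only on (seed, task), the
    80-demo subset is contained in the 100-demo subset, and so on.
    """
    out = {}
    for task, demos in demos_by_task.items():
        order = list(demos)
        # String seeds are hashed deterministically by random.Random.
        random.Random(f"{seed}:{task}").shuffle(order)
        out[task] = order[:k]
    return out
```

With 8 tasks, 80 demonstrations per task is 640 trajectories, compared with 1,040 for the full 130-demonstration set. That is about 62 percent of the data.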

Training throughput and open source toolkit

LingBot-VLA comes with a training stack optimized for multi-node efficiency. The codebase uses an FSDP style strategy that shards parameters and optimizer states, hybrid sharding for the action expert, mixed precision with float32 reductions and bfloat16 storage, and operator level acceleration with fused attention kernels and torch.compile.
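
The described recipe maps naturally onto PyTorch's FSDP options. The sketch below is an assumption about how one might express it, not code from the LingBot-VLA repository. The branch names `"action_expert"` and `"vlm"` are hypothetical. `MixedPrecision` and `ShardingStrategy` are real classes in `torch.distributed.fsdp`. `param_dtype` is the closest FSDP setting to the article's "bfloat16 storage", but FSDP keeps its sharded master copy in the original dtype, so the toolkit may handle storage differently.

```python
import torch
from torch.distributed.fsdp import MixedPrecision, ShardingStrategy

# Forward/backward in bfloat16; gradient reductions in float32.
MIXED_PRECISION = MixedPrecision(
    param_dtype=torch.bfloat16,
    reduce_dtype=torch.float32,
    buffer_dtype=torch.bfloat16,
)


def sharding_for(branch: str) -> ShardingStrategy:
    """Choose an FSDP sharding strategy per model branch (hypothetical branch names)."""
    if branch == "action_expert":
        # Shard within each node, replicate across nodes.
        return ShardingStrategy.HYBRID_SHARD
    # Shard parameters, gradients and optimizer states across all ranks.
    return ShardingStrategy.FULL_SHARD


def fsdp_kwargs(branch: str) -> dict:
    """Keyword arguments one might pass to FullyShardedDataParallel for a branch."""
    return {
        "sharding_strategy": sharding_for(branch),
        "mixed_precision": MIXED_PRECISION,
        # Commonly recommended when combining FSDP with torch.compile.
        "use_orig_params": True,
    }
```

These kwargs would be passed to `FullyShardedDataParallel` after `torch.distributed.init_process_group`. Fused attention could come from kernels such as `torch.nn.functional.scaled_dot_product_attention`, with `torch.compile` applied to the model. The article does not describe how the toolkit actually nests its wrappers.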

On an 8 GPU setup, the research team reports a throughput of 261 samples per second per GPU for the Qwen2.5-VL-3B and PaliGemma-3B-pt-224 model configurations. This corresponds to a 1.5 to 2.8 times speedup over existing VLA oriented codebases such as StarVLA, Dexbotic, and OpenPI, evaluated on the same Libero based benchmark. Throughput scales near linearly from 8 to 256 GPUs. The full post-training toolkit is released as open source.
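
The throughput figures translate into a few simple quantities. The sketch below computes the aggregate throughput on 8 GPUs and the ideal linear ceiling at 256 GPUs. The article only says scaling is "near linear" and gives no exact 256-GPU number. The sketch also includes a scaling-efficiency ratio. The `implied_baseline` helper assumes the 1.5 to 2.8 times speedups refer to the same per-GPU samples-per-second metric, which the article does not state explicitly.

```python
PER_GPU_SAMPLES_PER_S = 261  # reported on the 8-GPU setup


def aggregate_throughput(per_gpu: float, n_gpus: int) -> float:
    """Total samples per second across all GPUs."""
    return per_gpu * n_gpus


def scaling_efficiency(base_throughput: float, base_gpus: int,
                       throughput: float, gpus: int) -> float:
    """Measured speedup divided by ideal (linear) speedup; 1.0 means perfectly linear."""
    return (throughput / base_throughput) / (gpus / base_gpus)


def implied_baseline(per_gpu: float, speedup: float) -> float:
    """Per-GPU throughput of a baseline that is `speedup` times slower (assumed same metric)."""
    return per_gpu / speedup
```

At 261 samples per second per GPU, 8 GPUs give 2,088 samples per second, and perfectly linear scaling to 256 GPUs would give 66,816. Under the stated assumption, the baselines would run at roughly 93 to 174 samples per second per GPU.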

Key Takeaways

- LingBot-VLA is a Qwen2.5-VL based vision language action foundation model trained on about 20,000 hours of real world dual arm teleoperation across 9 robot embodiments, which enables strong cross morphology and cross task generalization.

- The model integrates LingBot-Depth through feature distillation so that vision tokens are aligned with a depth completion expert, which significantly improves 3D spatial understanding for insertion, stacking, folding, and other geometry sensitive tasks.

- On the GM-100 real world benchmark, LingBot-VLA with depth achieves 17.30 percent average Success Rate and 35.41 percent average Progress Score, higher than π0.5, GR00T N1.6, and WALL-OSS under the same post-training protocol.

- LingBot-VLA is data efficient in post-training: on AgiBot G1 it surpasses π0.5 trained on 130 demonstrations per task while using only 80 demonstrations per task, and performance continues to improve as more trajectories are added.

