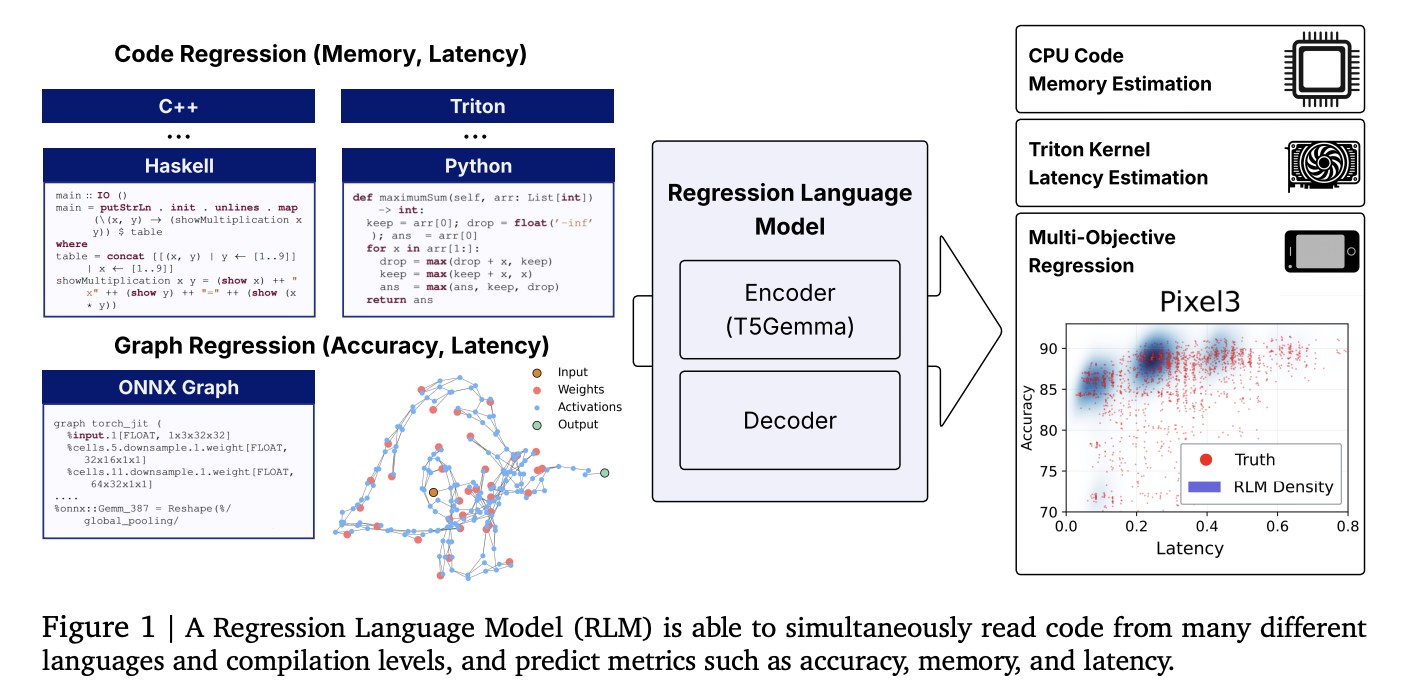

Researchers from Cornell and Google introduce a unified Regression Language Model (RLM) that predicts numeric outcomes directly from code strings, covering GPU kernel latency, program memory usage, and even neural network accuracy and latency, without hand-engineered features. A 300M-parameter encoder–decoder initialized from T5-Gemma achieves strong rank correlations across heterogeneous tasks and languages, using a single text-to-number decoder that emits digits with constrained decoding.

What exactly is new?

- Unified code-to-metric regression: One RLM predicts (i) peak memory from high-level code (Python/C/C++ and more), (ii) latency for Triton GPU kernels, and (iii) accuracy and hardware-specific latency from ONNX graphs, by reading raw text representations and decoding numeric outputs. No feature engineering, graph encoders, or zero-cost proxies are required.

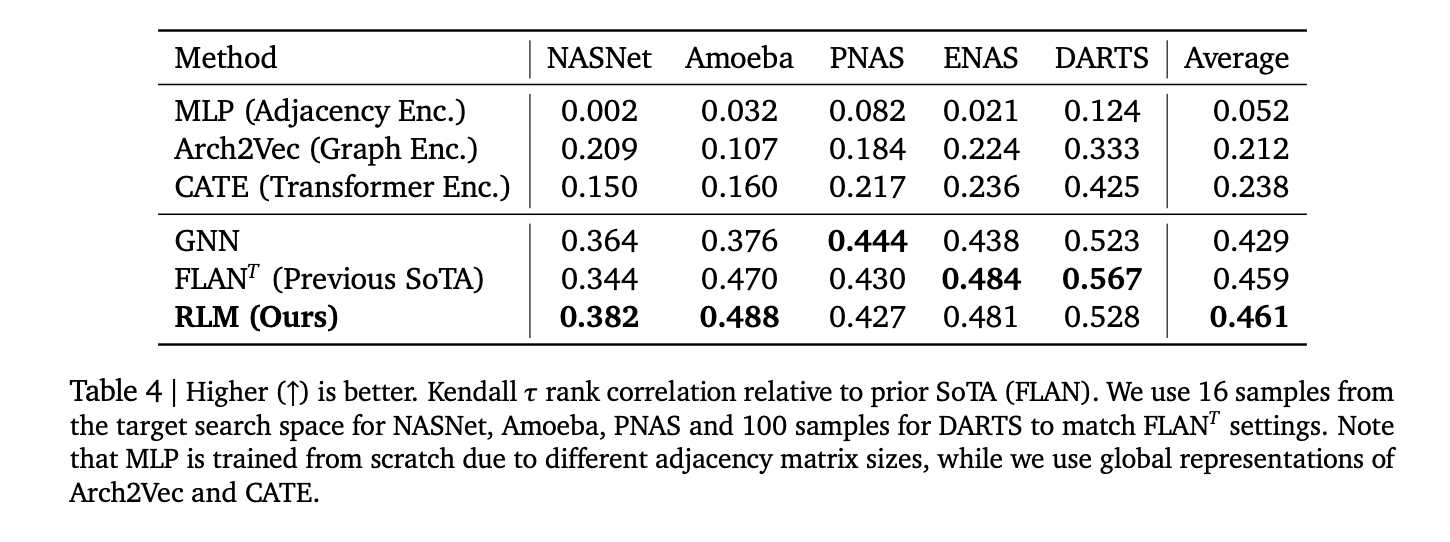

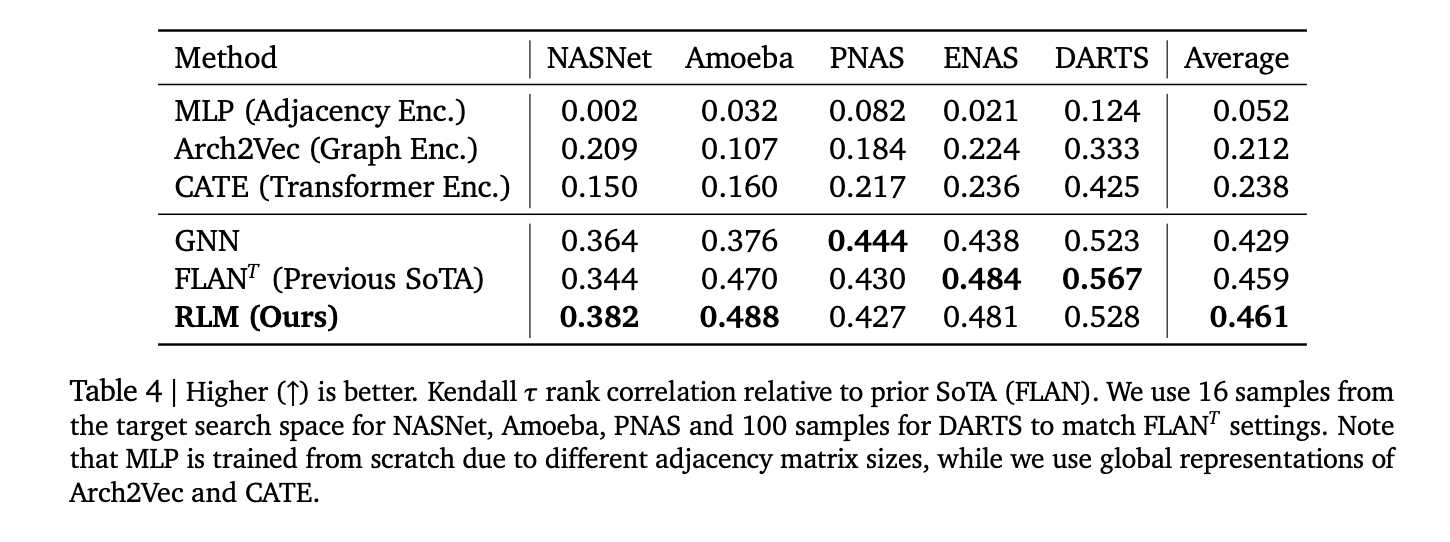

- Concrete results: Reported correlations include Spearman ρ ≈ 0.93 on APPS LeetCode memory, ρ ≈ 0.52 for Triton kernel latency, ρ > 0.5 on average across 17 CodeNet languages, and Kendall τ ≈ 0.46 across five classic NAS spaces, competitive with and in some cases surpassing graph-based predictors.

- Multi-objective decoding: Because the decoder is autoregressive, the model conditions later metrics on earlier ones (e.g., accuracy → per-device latencies), capturing realistic trade-offs along Pareto fronts.

Why is this important?

Performance prediction pipelines in compilers, GPU kernel selection, and NAS typically rely on bespoke features, syntax trees, or GNN encoders that are brittle to new ops and languages. Treating regression as next-token prediction over numbers standardizes the stack: tokenize inputs as plain text (source code, Triton IR, ONNX), then decode calibrated numeric strings digit by digit with constrained sampling. This reduces maintenance cost and improves transfer to new tasks via fine-tuning.
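To make the idea of constrained sampling concrete, here is a minimal sketch in plain Python. It is not the RLM's decoder: the vocabulary, the fixed-length numeral grammar, and the `toy_logits` stand-in (random scores instead of a trained model) are all assumptions made for illustration. The point is only that masking illegal tokens at each step guarantees a well-formed numeral, and that repeated sampling yields a spread of predictions.

```python
import math
import random

# Hypothetical toy vocabulary: numeric tokens mixed with non-numeric ones.
VOCAB = ["+", "-"] + [str(d) for d in range(10)] + ["foo", "bar", "<eos>"]


def allowed_tokens(position, num_digits):
    """Toy grammar: one sign, then num_digits digits, then <eos>."""
    if position == 0:
        return {"+", "-"}
    if position <= num_digits:
        return {str(d) for d in range(10)}
    return {"<eos>"}


def toy_logits(prefix, rng):
    """Stand-in for a trained decoder: a random score per vocabulary token."""
    return [rng.gauss(0.0, 1.0) for _ in VOCAB]


def constrained_sample(rng, num_digits=4):
    """Sample one numeral, giving zero weight to tokens the grammar forbids."""
    prefix = []
    for position in range(num_digits + 2):
        logits = toy_logits(prefix, rng)
        legal = allowed_tokens(position, num_digits)
        # Unnormalized softmax weights restricted to the legal tokens.
        weights = [
            math.exp(score) if token in legal else 0.0
            for token, score in zip(VOCAB, logits)
        ]
        token = rng.choices(VOCAB, weights=weights, k=1)[0]
        if token == "<eos>":
            break
        prefix.append(token)
    return "".join(prefix)


rng = random.Random(0)
samples = [constrained_sample(rng) for _ in range(5)]
print(samples)  # five strings, each a sign followed by four digits
```

Even though `foo` and `bar` sit in the vocabulary, they can never be emitted, so every sample parses as a number. With a real model, the spread across samples would serve as a rough uncertainty estimate.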

Data and benchmarks

- Code-Regression dataset (HF): Curated to support code-to-metric tasks spanning APPS/LeetCode runs, Triton kernel latencies (KernelBook-derived), and CodeNet memory footprints.

- NAS/ONNX suite: Architectures from NASBench-101/201, FBNet, Once-for-All (MB/PN/RN), Twopath, Hiaml, Inception, and NDS are exported to ONNX text to predict accuracy and device-specific latency.

How does it work?

- Backbone: Encoder–decoder with a T5-Gemma encoder initialization (~300M params). Inputs are raw strings (code or ONNX). Outputs are numbers emitted as sign/exponent/mantissa digit tokens; constrained decoding enforces valid numerals and supports uncertainty estimates via sampling.

- Ablations: (i) Language pretraining accelerates convergence and improves Triton latency prediction; (ii) decoder-only numeric emission outperforms MSE regression heads even with y-normalization; (iii) learned tokenizers specialized for ONNX operators increase effective context; (iv) longer contexts help; (v) scaling to a larger Gemma encoder further improves correlation with adequate tuning.

- Training code: The regress-lm library provides text-to-text regression utilities, constrained decoding, and multi-task pretraining/fine-tuning recipes.
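The sign/exponent/mantissa output format described above can be illustrated with a small round-trip sketch. The token spelling (`<+>`, `<E+3>`, `<1>`), the four-digit mantissa, and the function names `encode_number` and `decode_number` are hypothetical choices made for this example and are not taken from the regress-lm library.

```python
def encode_number(value, mantissa_digits=4):
    """Serialize a float as sign, exponent, and mantissa digit tokens.

    The token format is a hypothetical illustration, not the library's own.
    """
    # Scientific notation does the rounding, e.g. 1536.0 -> '1.536e+03'.
    text = f"{value:.{mantissa_digits - 1}e}"
    mantissa_text, exponent_text = text.split("e")
    sign = "-" if mantissa_text.startswith("-") else "+"
    digits = mantissa_text.lstrip("-").replace(".", "")
    exponent = int(exponent_text)
    return [f"<{sign}>", f"<E{exponent:+d}>"] + [f"<{d}>" for d in digits]


def decode_number(tokens):
    """Invert encode_number: rebuild the float from its tokens."""
    sign = "-" if tokens[0] == "<->" else ""
    exponent = int(tokens[1][2:-1])  # '<E+3>' -> '+3' -> 3
    digits = "".join(token[1:-1] for token in tokens[2:])
    fraction = digits[1:] or "0"
    return float(f"{sign}{digits[0]}.{fraction}e{exponent}")


tokens = encode_number(1536.0)
print(tokens)                 # ['<+>', '<E+3>', '<1>', '<5>', '<3>', '<6>']
print(decode_number(tokens))  # 1536.0
print(encode_number(-42.0))   # ['<->', '<E+1>', '<4>', '<2>', '<0>', '<0>']
```

A fixed-length token sequence like this keeps very large and very small targets on the same footing, which is one plausible reason a digit decoder needs no y-normalization. Values are rounded to the chosen number of mantissa digits, so the round trip is exact only for numbers that fit in that precision.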

Stats that matter

- APPS (Python) memory: Spearman ρ > 0.9.

- CodeNet (17 languages) memory: average ρ > 0.5; strongest languages include C/C++ (~0.74–0.75).

- Triton kernels (A6000) latency: ρ ≈ 0.52.

- NAS ranking: average Kendall τ ≈ 0.46 across NASNet, Amoeba, PNAS, ENAS, DARTS; competitive with FLAN and GNN baselines.
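All of the figures above are rank correlations: they measure whether predictions order the programs or architectures correctly, not whether the predicted values are numerically close. The sketch below computes both metrics in plain Python on made-up data; the measurements and predictions are invented for illustration, and the Kendall variant shown is the simple tau-a, which assumes no ties.

```python
def ranks(values):
    """Return 1-based ranks, assigning tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman_rho(x, y):
    """Spearman rho is the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def kendall_tau(x, y):
    """Kendall tau-a: (concordant - discordant) / total pairs, no ties assumed."""
    n = len(x)
    score = 0
    for i in range(n):
        for j in range(i + 1, n):
            product = (x[i] - x[j]) * (y[i] - y[j])
            score += (product > 0) - (product < 0)
    return score / (n * (n - 1) / 2)


# Invented example: measured latencies vs. model predictions.
measured = [10.0, 20.0, 30.0, 40.0, 50.0]
predicted = [12.0, 18.0, 45.0, 33.0, 60.0]
print(spearman_rho(measured, predicted))  # 0.9
print(kendall_tau(measured, predicted))   # 0.8
```

In this toy case a single swapped pair out of ten drops τ to 0.8, while ρ stays at 0.9. That gap is typical and worth remembering when comparing the Kendall τ ≈ 0.46 NAS figure with the Spearman numbers for the code tasks: the two metrics are not on the same scale.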

Key Takeaways

- Unified code-to-metric regression works. A single ~300M-parameter T5Gemma-initialized model (“RLM”) predicts: (a) memory from high-level code, (b) Triton GPU kernel latency, and (c) model accuracy plus device latency from ONNX, all directly from text with no hand-engineered features.

- The research shows Spearman ρ > 0.9 on APPS memory, ≈0.52 on Triton latency, >0.5 on average across 17 CodeNet languages, and Kendall τ ≈ 0.46 on five NAS spaces.

- Numbers are decoded as text with constraints. Instead of a regression head, RLM emits numeric tokens with constrained decoding, enabling multi-metric, autoregressive outputs (e.g., accuracy followed by multi-device latencies) and uncertainty estimates via sampling.

- The Code-Regression dataset unifies APPS/LeetCode memory, Triton kernel latency, and CodeNet memory; the regress-lm library provides the training/decoding stack.

This work reframes performance prediction as text-to-number generation: a compact T5Gemma-initialized RLM reads source code (Python/C++), Triton kernels, or ONNX graphs and emits calibrated numerics via constrained decoding. The reported correlations, namely APPS memory (ρ > 0.9), Triton latency on RTX A6000 (ρ ≈ 0.52), and NAS Kendall τ ≈ 0.46, are strong enough to matter for compiler heuristics, kernel pruning, and multi-objective NAS triage without bespoke features or GNNs. The open dataset and library make replication straightforward and lower the barrier to fine-tuning on new hardware or languages.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
