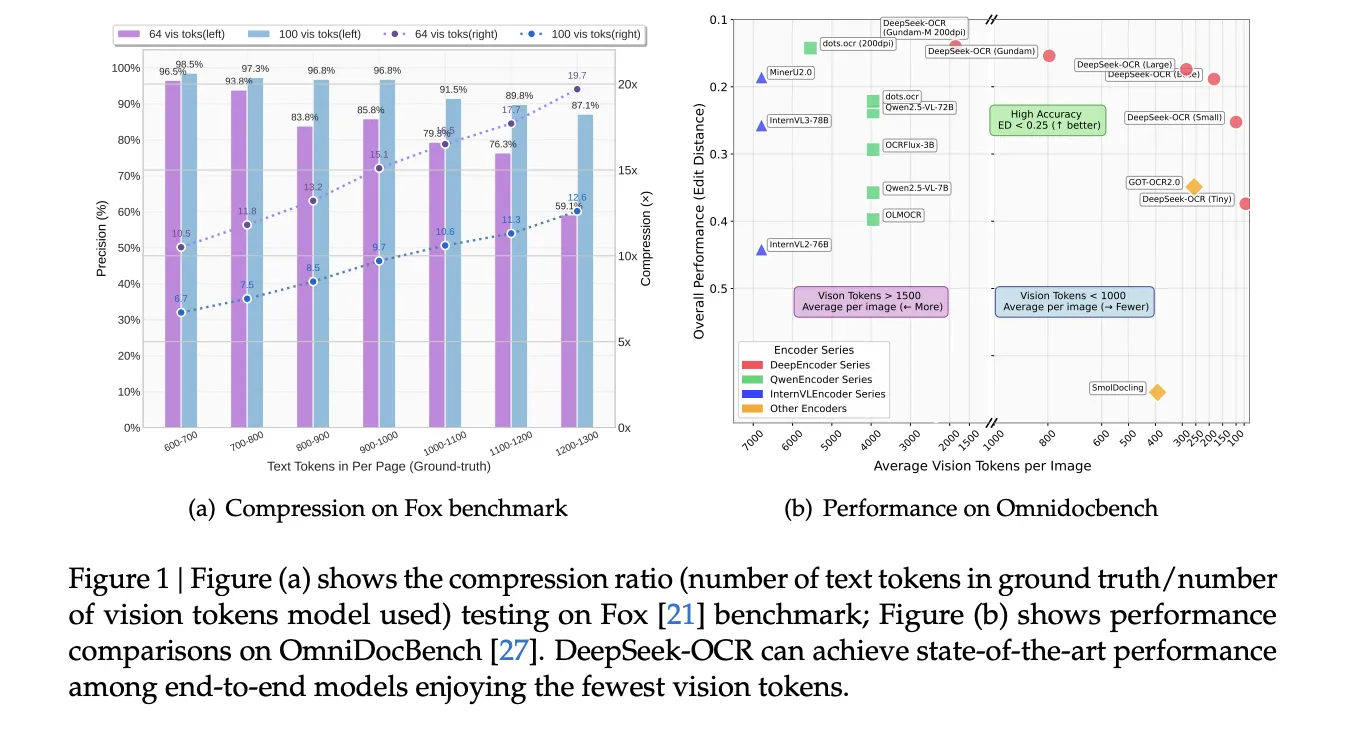

DeepSeek-AI released 3B DeepSeek-OCR, an end-to-end OCR and document parsing Vision-Language Model (VLM) system that compresses long text into a small set of vision tokens, then decodes those tokens with a language model. The method is simple: images carry compact representations of text, which reduces sequence length for the decoder. The research team reports 97% decoding precision when text tokens are within 10 times the vision tokens on the Fox benchmark, and useful behavior even at 20 times compression. It also reports competitive results on OmniDocBench with far fewer tokens than common baselines.

Architecture, what is actually new?

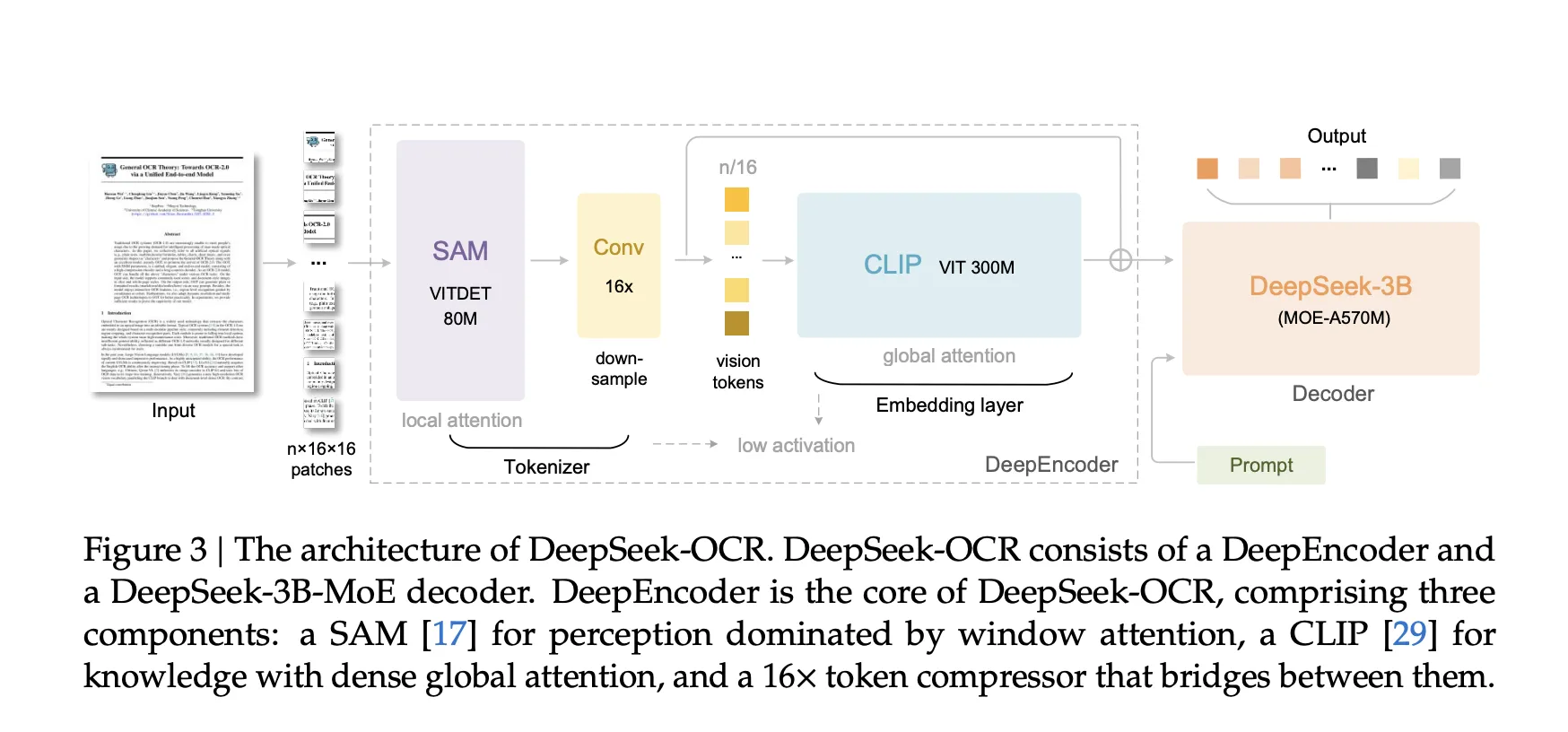

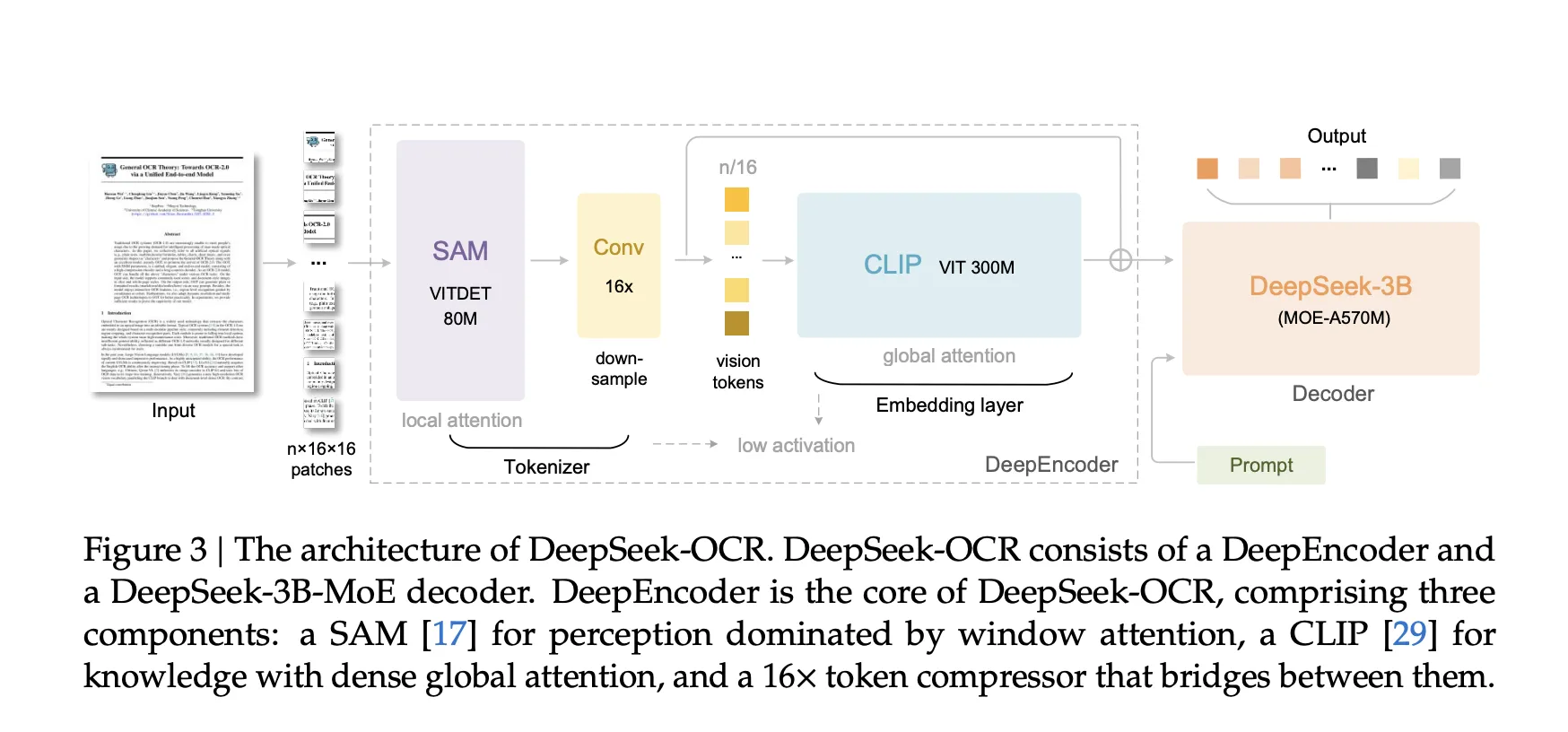

DeepSeek-OCR-3B has two components, a vision encoder named DeepEncoder and a Mixture of Experts decoder named DeepSeek3B-MoE-A570M. The encoder is designed for high resolution inputs with low activation cost and with few output tokens. It uses a window attention stage based on SAM for local perception, a 2 layer convolutional compressor for 16× token downsampling, and a dense global attention stage based on CLIP for visual knowledge aggregation. This design keeps activation memory controlled at high resolution, and keeps the vision token count low. The decoder is a 3B parameter MoE model with about 570M active parameters per token.
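The token arithmetic implied by this encoder design can be sketched in a few lines. This is a minimal illustration, not the model's code: the function name is hypothetical, and the 16-pixel patch size is an assumption that the article does not state. It is chosen here because, combined with the 16× downsampling described above, it reproduces the per-resolution token counts the article quotes for the native modes.

```python
def vision_tokens(side_px: int, patch_px: int = 16, downsample: int = 16) -> int:
    """Estimate vision tokens for a square input of side_px pixels.

    patch_px=16 is an assumption (not stated in the article).
    downsample=16 is the convolutional compressor's token reduction factor.
    """
    if side_px % patch_px != 0:
        raise ValueError("side_px must be a multiple of patch_px")
    patches = (side_px // patch_px) ** 2  # tokens seen by the window attention stage
    return patches // downsample          # tokens passed to the global attention stage


if __name__ == "__main__":
    for side in (512, 640, 1024, 1280):
        print(f"{side} x {side} px -> {vision_tokens(side)} vision tokens")
```

Under these assumptions a 1024 by 1024 page produces 4096 patch tokens before compression and 256 after it, which is why the expensive global attention stage stays affordable at high resolution.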

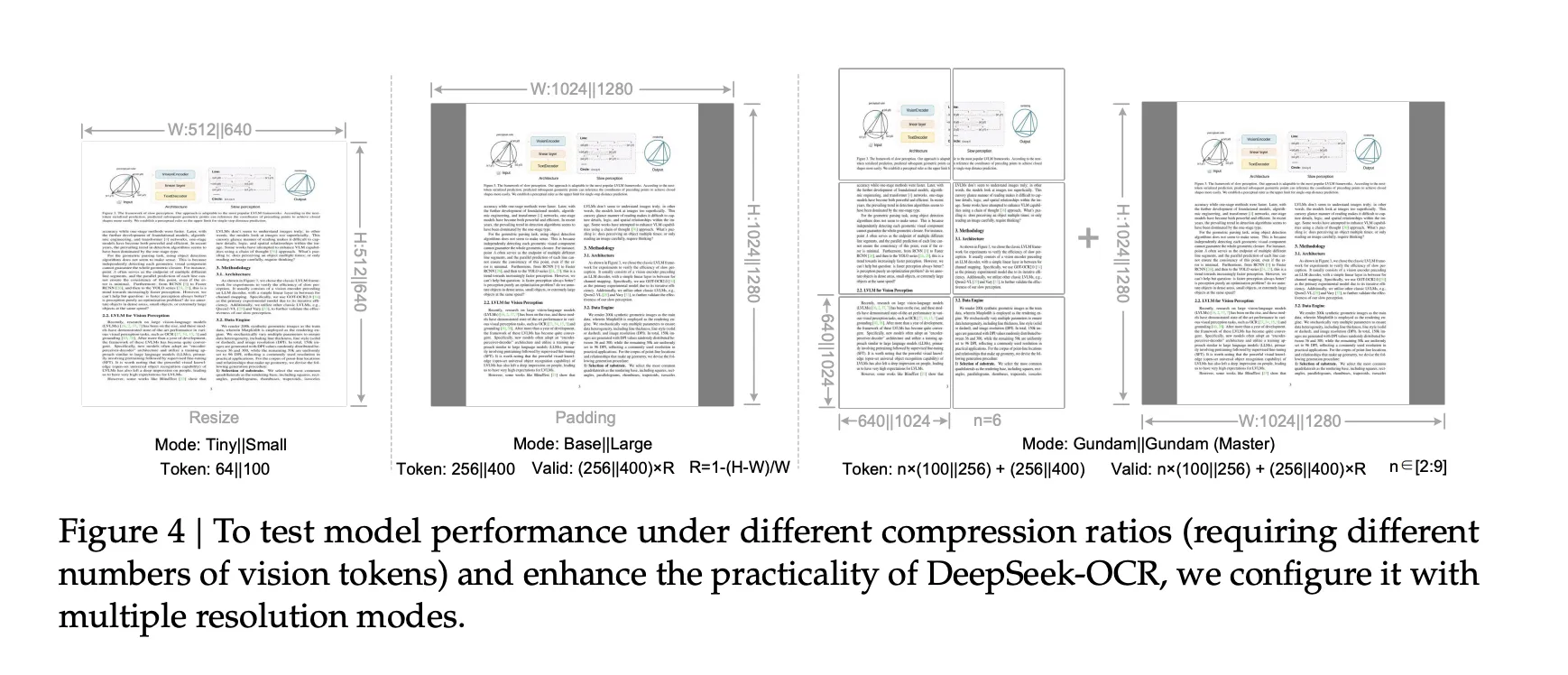

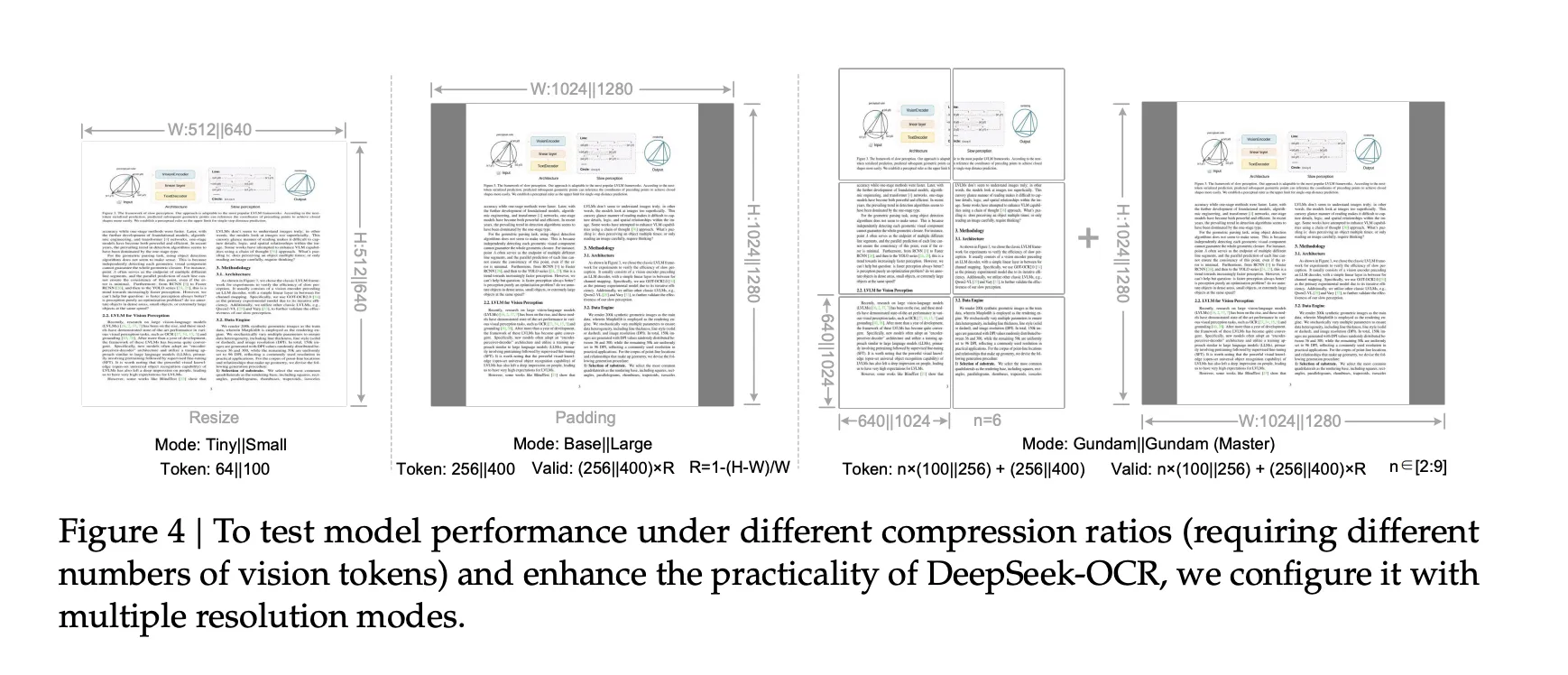

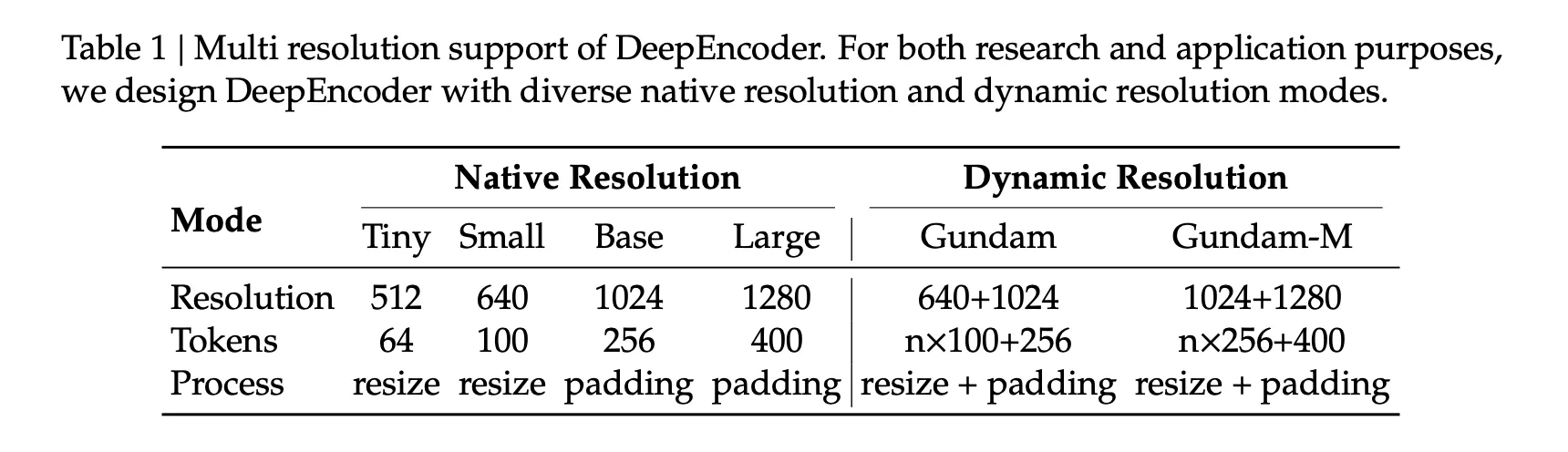

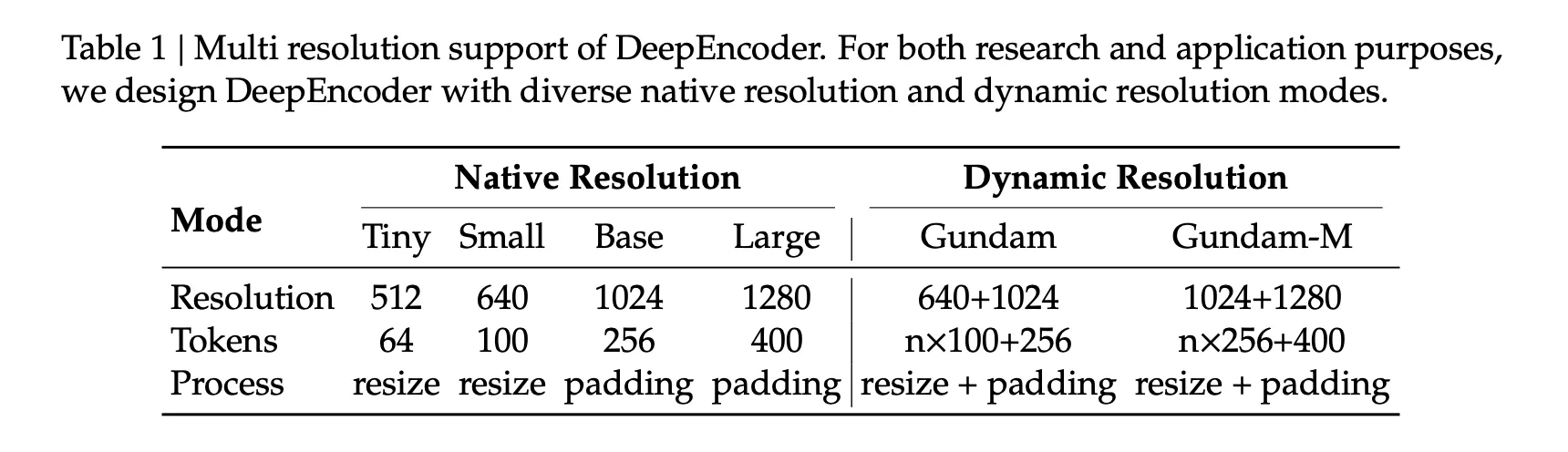

Multi resolution modes, engineered for token budgets

DeepEncoder supports native modes and dynamic modes. Native modes are Tiny with 64 tokens at 512 by 512 pixels, Small with 100 tokens at 640 by 640, Base with 256 tokens at 1024 by 1024, and Large with 400 tokens at 1280 by 1280. Dynamic modes named Gundam and Gundam-Master mix tiled local views with a global view. Gundam yields n×100 plus 256 tokens, or n×256 plus 400 tokens, with n in the range 2 to 9. For padded modes, the research team gives a formula for valid tokens, which is lower than the raw token count, and depends on the aspect ratio. These modes let AI developers and researchers align token budgets with page complexity.
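To make the budgets concrete, the sketch below tabulates the native modes and computes the dynamic-mode totals from the n×tile plus global formula quoted above. The names `NATIVE_MODES` and `dynamic_mode_tokens` are hypothetical helpers for illustration, not part of the released code, and the padded-mode valid-token formula is not reproduced because the article does not give it.

```python
# Native modes as listed in the article: name -> (square side in pixels, vision tokens).
NATIVE_MODES = {
    "Tiny": (512, 64),
    "Small": (640, 100),
    "Base": (1024, 256),
    "Large": (1280, 400),
}


def dynamic_mode_tokens(n_tiles: int, tile_tokens: int = 100, global_tokens: int = 256) -> int:
    """Raw token budget for a tiled mode: n local tiles plus one global view."""
    if not 2 <= n_tiles <= 9:
        raise ValueError("the article gives n in the range 2 to 9")
    return n_tiles * tile_tokens + global_tokens


if __name__ == "__main__":
    for n in (2, 9):
        small_tiles = dynamic_mode_tokens(n)           # n x 100 + 256
        large_tiles = dynamic_mode_tokens(n, 256, 400)  # n x 256 + 400
        print(f"n={n}: {small_tiles} tokens or {large_tiles} tokens")
```

Even the smallest tiled configuration costs more tokens than any native mode, so it makes sense to reserve the dynamic modes for pages that a single view cannot resolve.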

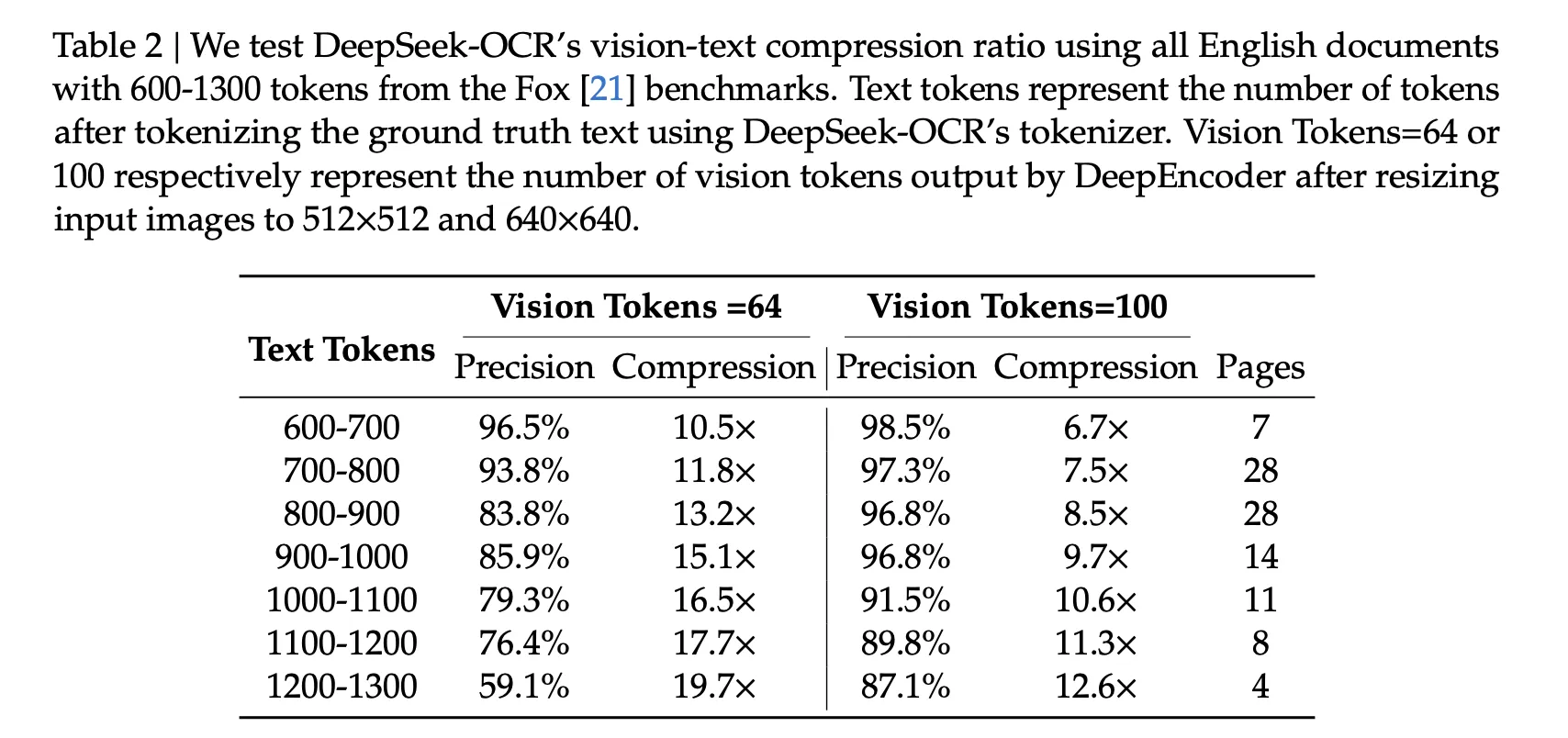

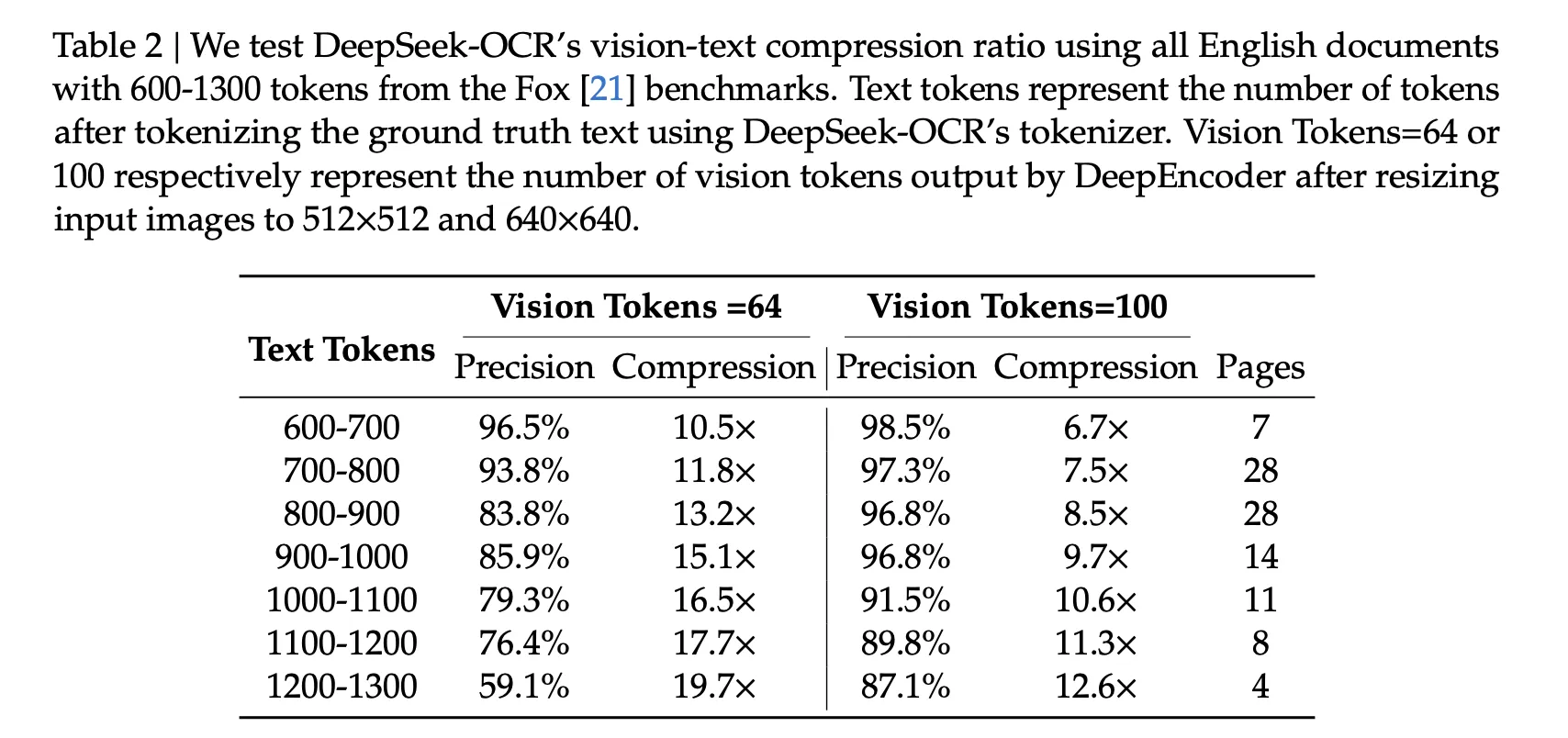

Compression results, what the numbers say

The Fox benchmark study measures precision as exact text match after decoding. With 100 vision tokens, pages with 600 to 700 text tokens reach 98.5% precision at 6.7× compression. Pages with 900 to 1000 text tokens reach 96.8% precision at 9.7× compression. With 64 vision tokens, precision decreases as compression increases, for example 59.1% at about 19.7× for 1200 to 1300 text tokens. These values come directly from Table 2.
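The compression ratio here is simply text tokens divided by vision tokens. The sketch below, with a hypothetical helper name, computes the ratio at both ends of each text-token bucket mentioned above. It only bounds the ratio: the reported figures (6.7×, 9.7×, 19.7×) are averages over the actual pages in each bucket, so they fall inside these bounds rather than at the midpoint.

```python
def compression_bounds(text_lo: int, text_hi: int, vision_tokens: int) -> tuple[float, float]:
    """Lowest and highest compression ratio for a bucket of text-token counts."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_lo / vision_tokens, text_hi / vision_tokens


if __name__ == "__main__":
    # (text-token bucket, vision tokens) pairs taken from the paragraph above.
    cases = [((600, 700), 100), ((900, 1000), 100), ((1200, 1300), 64)]
    for (lo, hi), vt in cases:
        low, high = compression_bounds(lo, hi, vt)
        print(f"{lo}-{hi} text tokens at {vt} vision tokens: {low:.2f}x to {high:.2f}x")
```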

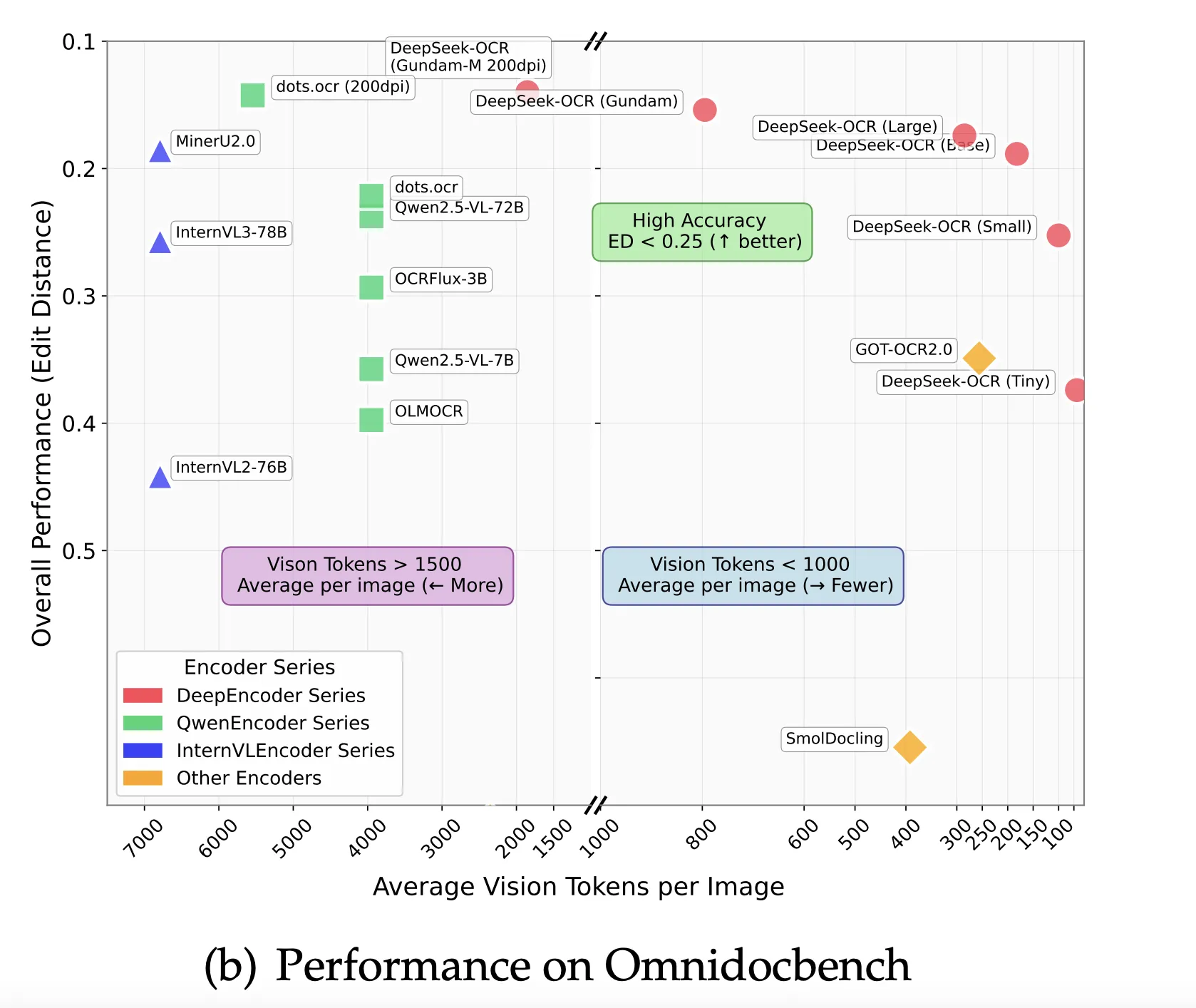

On OmniDocBench, the abstract reports that DeepSeek-OCR surpasses GOT-OCR 2.0 when using only 100 vision tokens per page, and that under 800 vision tokens it outperforms MinerU 2.0, which uses over 6000 tokens per page on average. The benchmark section presents overall performance in terms of edit distance.
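For readers who want to score their own pages the same way, a character-level edit distance can be sketched as below. This is a generic Levenshtein implementation, not the benchmark's official scorer, and normalizing by the longer string's length is an assumption: the benchmark's exact normalization and text preprocessing may differ.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string on the inner loop to save memory
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def normalized_edit_distance(prediction: str, reference: str) -> float:
    """Edit distance scaled to [0, 1]; 0.0 means an exact match (assumed normalization)."""
    longest = max(len(prediction), len(reference))
    return 0.0 if longest == 0 else levenshtein(prediction, reference) / longest


if __name__ == "__main__":
    print(normalized_edit_distance("DeepSeek-0CR", "DeepSeek-OCR"))
```

Lower is better, so a mode that uses fewer vision tokens is only a win if this score stays within the tolerance your downstream task accepts.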

Training details that matter

The research team describes a two phase training pipeline. It first trains DeepEncoder with next token prediction on OCR 1.0 and OCR 2.0 data and 100M LAION samples, then trains the full system with pipeline parallelism across 4 partitions. For hardware, the run used 20 nodes, each with 8 A100 40G GPUs, and used AdamW. The team reports a training speed of 90B tokens per day on text only data, and 70B tokens per day on multimodal data. In production, it reports the ability to generate over 200k pages per day on a single A100 40G node.
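A quick back-of-the-envelope conversion puts these daily figures into per-second terms. The sketch below assumes that the training throughput numbers refer to the whole 20-node cluster; the article does not say so explicitly, so treat the per-GPU values as rough estimates rather than reported results.

```python
SECONDS_PER_DAY = 24 * 60 * 60

NODES = 20
GPUS_PER_NODE = 8
TOTAL_GPUS = NODES * GPUS_PER_NODE  # 160 A100 40G GPUs in the training run


def per_second(per_day: float) -> float:
    """Convert a per-day rate into a per-second rate."""
    return per_day / SECONDS_PER_DAY


if __name__ == "__main__":
    # Assumption: the daily token rates are cluster-wide totals.
    for label, tokens_per_day in (("text only", 90e9), ("multimodal", 70e9)):
        cluster_rate = per_second(tokens_per_day)
        print(f"{label}: {cluster_rate:,.0f} tokens/s cluster-wide, "
              f"{cluster_rate / TOTAL_GPUS:,.0f} tokens/s per GPU")
    # Production claim: at least 200k pages per day.
    print(f"production: {per_second(200_000):.2f} pages/s")
```

The production figure works out to a little over two pages per second, which is a convenient number to compare against when you measure your own pipeline.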

How to evaluate it in a practical stack

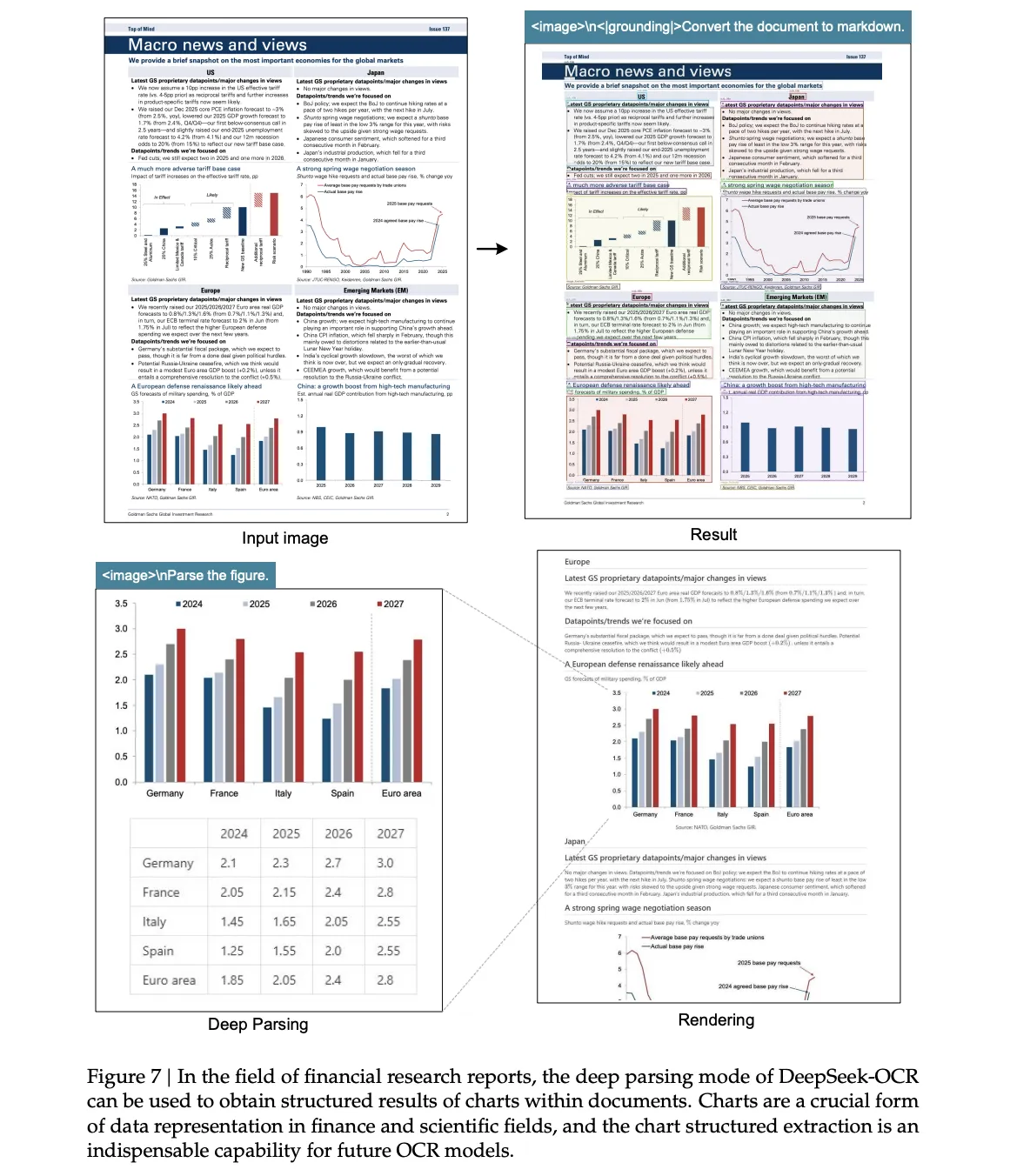

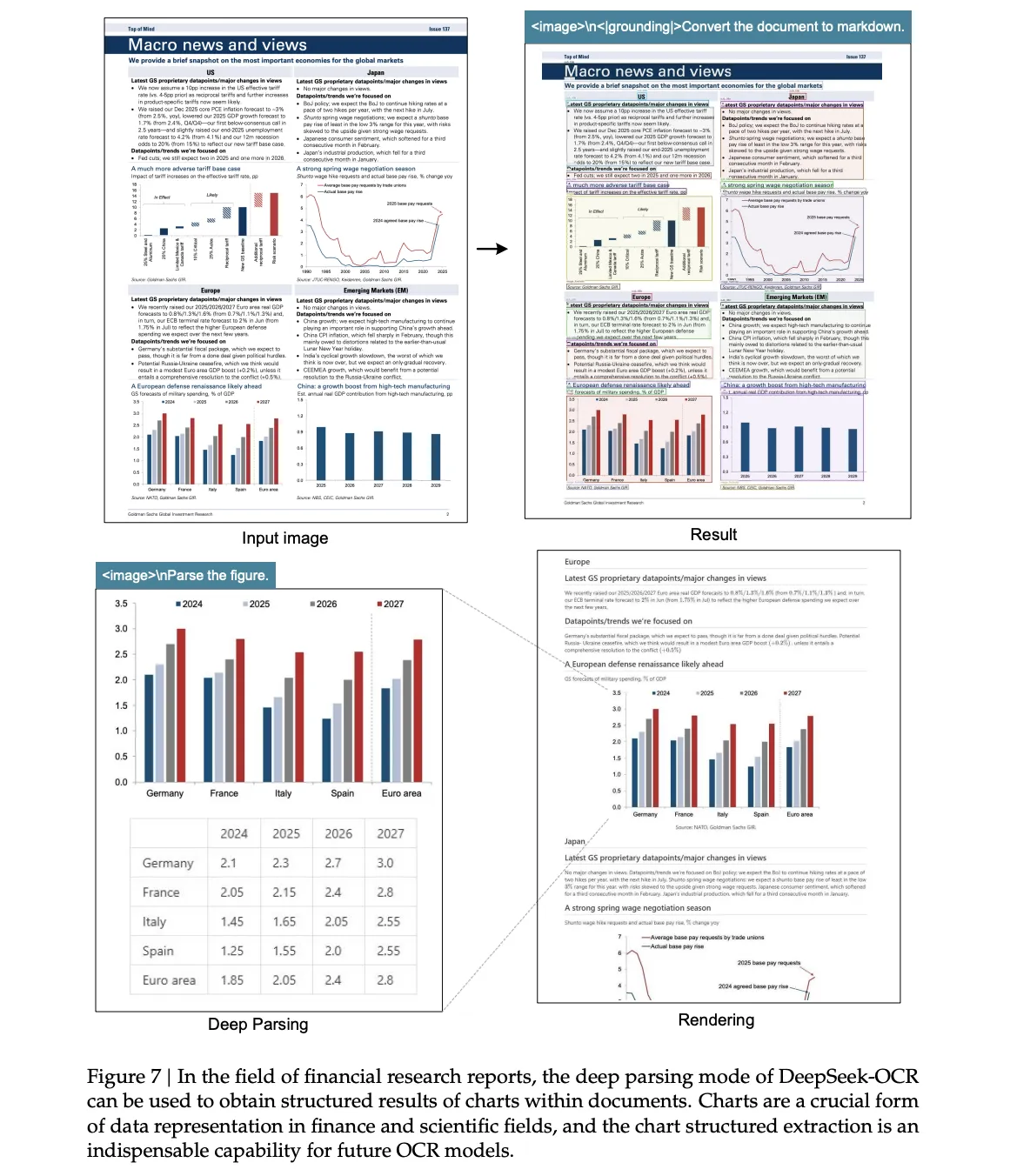

If your target documents are typical reports or books, start with Small mode at 100 tokens, then adjust upward only if the edit distance is unacceptable. If your pages contain dense small fonts or very high token counts, use a Gundam mode, since it combines global and local fields of view with explicit token budgeting. If your workload includes charts, tables, or chemical structures, review the “Deep parsing” qualitative section, which shows conversions to HTML tables and SMILES and structured geometry, then design outputs that are easy to validate.
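The "start small, escalate only when needed" advice can be expressed as a simple search over the native modes. The sketch below is one possible way to organize that evaluation, not a procedure from the technical report: `pick_mode` and `score_fn` are hypothetical names, `score_fn` stands in for whatever routine runs OCR on your validation pages and returns an average edit distance, and the scores in the usage example are made-up placeholders rather than benchmark results.

```python
from typing import Callable, Optional

# Native modes ordered by token budget, as listed in the article.
MODE_LADDER = [("Tiny", 64), ("Small", 100), ("Base", 256), ("Large", 400)]


def pick_mode(score_fn: Callable[[str], float],
              max_edit_distance: float,
              start: str = "Small") -> Optional[str]:
    """Return the cheapest mode, from `start` upward, whose score is acceptable.

    score_fn(mode_name) is a caller-supplied evaluation (hypothetical here).
    Returns None when no native mode qualifies, which suggests trying a Gundam mode.
    """
    names = [name for name, _ in MODE_LADDER]
    for name in names[names.index(start):]:
        if score_fn(name) <= max_edit_distance:
            return name
    return None


if __name__ == "__main__":
    # Placeholder scores for illustration only; measure these on your own pages.
    fake_scores = {"Tiny": 0.30, "Small": 0.20, "Base": 0.08, "Large": 0.05}
    print(pick_mode(fake_scores.__getitem__, max_edit_distance=0.10))
```

Returning `None` rather than silently picking the largest mode keeps the fallback to a tiled mode an explicit decision.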

Key Takeaways

- DeepSeek OCR targets token efficiency using optical context compression, with near lossless decoding at about 10 times compression and around 60 percent precision at about 20 times compression.

- The HF release exposes explicit token budgets: Tiny uses 64 tokens at 512 by 512, Small uses 100 tokens at 640 by 640, Base uses 256 tokens at 1024 by 1024, Large uses 400 tokens at 1280 by 1280, and Gundam composes n views at 640 by 640 plus one global view at 1024 by 1024.

- The system structure is a DeepEncoder that compresses pages into vision tokens and a DeepSeek3B MoE decoder with about 570M active parameters, as described by the research team in the technical report.

- The Hugging Face model card documents a tested setup for quick use: Python 3.12.9, CUDA 11.8, PyTorch 2.6.0, Transformers 4.46.3, Tokenizers 0.20.3, and Flash Attention 2.7.3.
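Before running the model, it can help to compare the local environment against the tested versions listed above. The sketch below uses only the standard library. The helper name is hypothetical, and the mapping from library names to PyPI distribution names (`torch` for PyTorch, `flash-attn` for Flash Attention) is an assumption about how the packages were installed. It does not check the Python or CUDA versions.

```python
from importlib import metadata

# Tested versions from the model card, keyed by assumed PyPI distribution name.
TESTED_VERSIONS = {
    "torch": "2.6.0",
    "transformers": "4.46.3",
    "tokenizers": "0.20.3",
    "flash-attn": "2.7.3",
}


def find_mismatches(get_version=metadata.version):
    """Return {package: (expected, installed)} for packages that differ or are missing."""
    mismatches = {}
    for package, expected in TESTED_VERSIONS.items():
        try:
            installed = get_version(package)
        except metadata.PackageNotFoundError:
            installed = None
        # A local build tag such as "2.6.0+cu118" still counts as the tested release.
        if installed is None or installed.split("+")[0] != expected:
            mismatches[package] = (expected, installed)
    return mismatches


if __name__ == "__main__":
    for package, (expected, installed) in find_mismatches().items():
        print(f"{package}: tested with {expected}, found {installed or 'not installed'}")
```

A mismatch is not necessarily a problem, but it is the first thing to rule out if loading or inference fails.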

DeepSeek OCR is a practical step for document AI. It treats pages as compact optical carriers that reduce decoder sequence length without discarding most information. The model card and technical report describe 97 percent decoding precision at about 10 times compression on the Fox benchmark, which is the key claim to test in real workloads. The released model is a 3B MoE decoder with a DeepEncoder front end, packaged for Transformers, with tested versions for PyTorch 2.6.0, CUDA 11.8, and Flash Attention 2.7.3, which lowers setup cost for engineers. The repository shows a single 6.67 GB safetensors shard, which suits common GPUs. Overall, DeepSeek OCR operationalizes optical context compression with a 3B MoE decoder, reports about 97% decoding precision at 10x compression on Fox, provides explicit token budget modes, and includes a tested Transformers setup. Validate the throughput claim in your own pipeline.

Check out the Technical Paper, Model on HF and GitHub Repo.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts of over 2 million monthly views, illustrating its popularity among audiences.
