What if, instead of re-sampling one agent, you could push Gemini-2.5 Pro to 34.1% on HLE by mixing 12–15 tool-using agents that share notes and stop early? Google Cloud AI Research, with collaborators from MIT, Harvard, and Google DeepMind, introduced TUMIX (Tool-Use Mixture), a test-time framework that ensembles heterogeneous agent styles (text-only, code, search, guided variants) and lets them share intermediate answers over a few refinement rounds, then stops early via an LLM-based judge. The result: higher accuracy at lower cost on hard reasoning benchmarks such as HLE, GPQA-Diamond, and AIME (2024/2025).

So, what exactly is new?

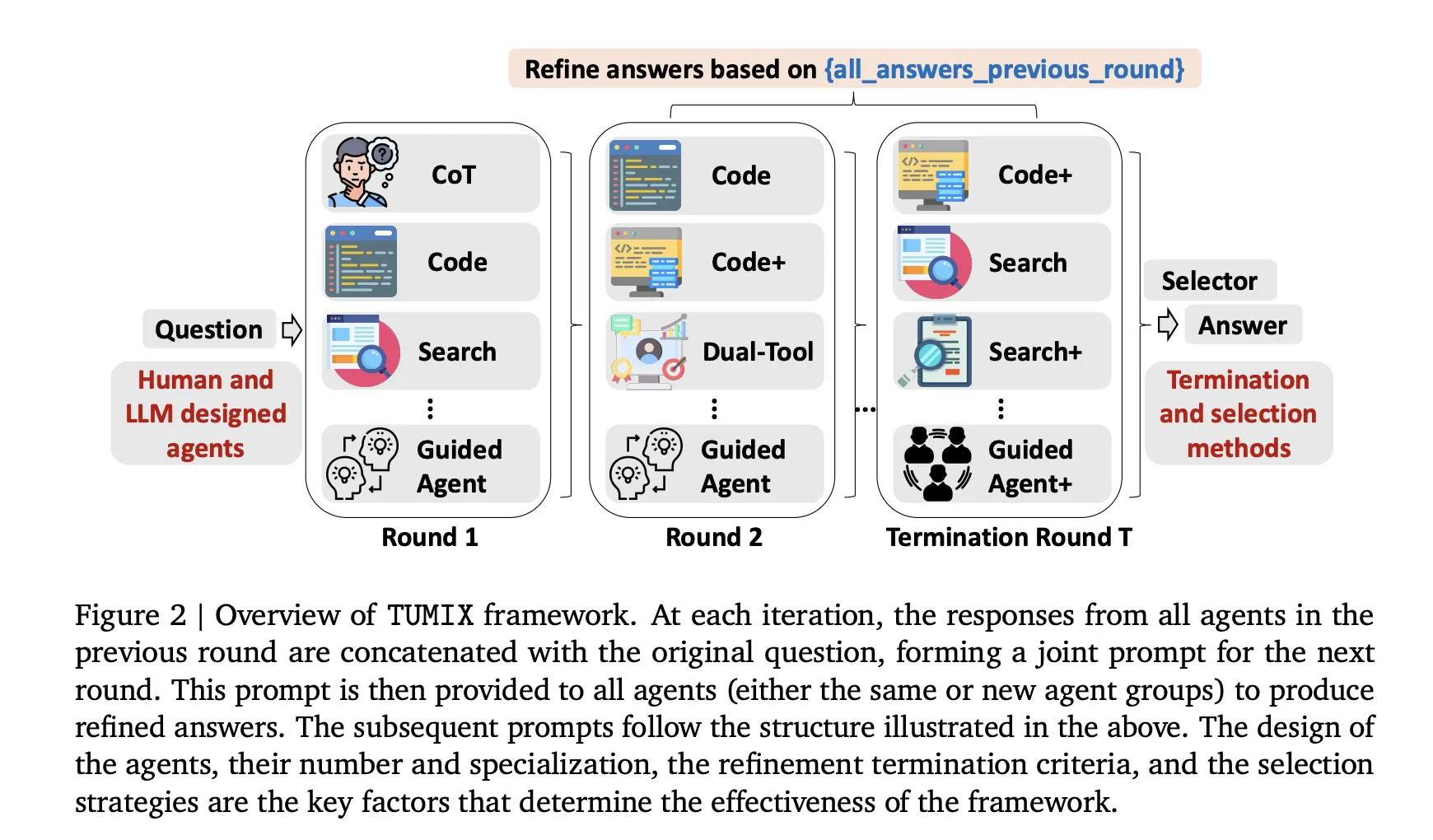

- Mixture over modality, not just more samples: TUMIX runs ~15 agent styles spanning Chain-of-Thought (CoT), code execution, web search, dual-tool agents, and guided variants. Each round, every agent sees (a) the original question and (b) the other agents’ previous answers, then proposes a refined answer. This message-passing raises average accuracy early while diversity gradually collapses, so stopping matters.

- Adaptive early-termination: An LLM-as-Judge halts refinement once answers exhibit strong consensus (subject to a minimum round threshold). This preserves accuracy at ~49% of the inference cost vs. fixed-round refinement; token cost drops to ~46% because late rounds are token-heavier.

- Auto-designed agents: Beyond human-crafted agents, TUMIX prompts the base LLM to generate new agent types; mixing these with the manual set yields an additional ~+1.2% average lift without extra cost. The empirical “sweet spot” is ~12–15 agent styles.

How does it work?

TUMIX runs a group of heterogeneous agents (text-only Chain-of-Thought, code-executing, web-searching, and guided variants) in parallel, then iterates a small number of refinement rounds in which each agent conditions on the original question plus the other agents’ prior rationales and answers (structured note-sharing). After each round, an LLM-based judge evaluates consensus/consistency to decide on early termination; if confidence is insufficient, another round is triggered, otherwise the system finalizes via simple aggregation (e.g., majority vote or selector). This mixture-of-tool-use design trades brute-force re-sampling for diverse reasoning paths, improving coverage of correct candidates while controlling token/tool budgets; empirically, benefits saturate around 12–15 agent styles, and stopping early preserves diversity and lowers cost without sacrificing accuracy.
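The round structure described above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: the names `run_mixture`, `consensus_judge`, `stubborn`, and `follower` are hypothetical, the agents are toy functions rather than LLM calls, and the LLM-based judge is replaced by a simple agreement threshold (`min_rounds` and `threshold` are assumed values, not figures from the paper).

```python
from collections import Counter
from typing import Callable, List, Tuple

# Hypothetical agent interface: (question, other agents' previous answers) -> answer.
Agent = Callable[[str, List[str]], str]


def majority(answers: List[str]) -> Tuple[str, float]:
    """Return the most common answer and the fraction of agents that gave it."""
    top, count = Counter(answers).most_common(1)[0]
    return top, count / len(answers)


def consensus_judge(answers: List[str], round_idx: int,
                    min_rounds: int = 2, threshold: float = 0.8) -> bool:
    """Stand-in for the LLM judge (assumption): stop once enough agents agree,
    but never before `min_rounds` rounds have run."""
    if round_idx < min_rounds:
        return False
    _, share = majority(answers)
    return share >= threshold


def run_mixture(question: str, agents: List[Agent],
                max_rounds: int = 5) -> Tuple[str, int]:
    """Run refinement rounds with note-sharing and early stopping.
    Returns (final answer by majority vote, number of rounds used)."""
    answers: List[str] = []
    rounds_used = 0
    for round_idx in range(1, max_rounds + 1):
        prev = answers
        # Each agent sees the question plus every *other* agent's previous answer.
        answers = [agent(question, prev[:i] + prev[i + 1:])
                   for i, agent in enumerate(agents)]
        rounds_used = round_idx
        if consensus_judge(answers, round_idx):
            break
    final, _ = majority(answers)
    return final, rounds_used


def stubborn(value: str) -> Agent:
    """Toy agent that always returns the same answer."""
    def agent(question: str, notes: List[str]) -> str:
        return value
    return agent


def follower(initial: str) -> Agent:
    """Toy agent that adopts the most common answer among the shared notes."""
    def agent(question: str, notes: List[str]) -> str:
        if not notes:
            return initial
        return Counter(notes).most_common(1)[0][0]
    return agent


agents = [stubborn("42"), stubborn("42"), stubborn("42"),
          follower("41"), follower("40")]
print(run_mixture("toy question", agents))  # ('42', 2)
```

In this toy run the two `follower` agents switch to the majority answer after seeing the shared notes, so the stand-in judge stops at round 2 instead of running all five rounds. In a real system each agent would be an LLM call with its own tools, and the stopping decision would come from a judge model rather than a fixed threshold.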

Let’s discuss the results

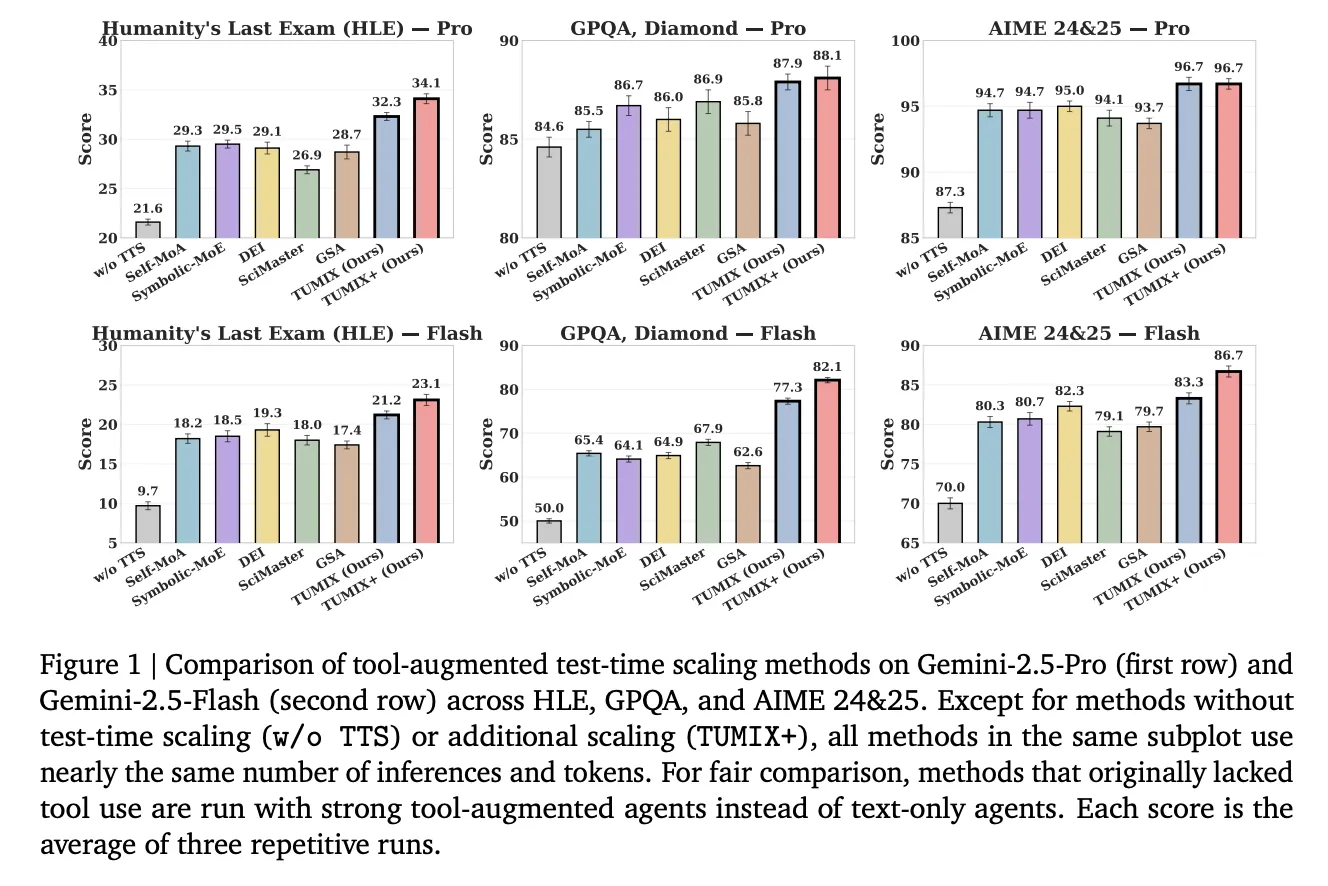

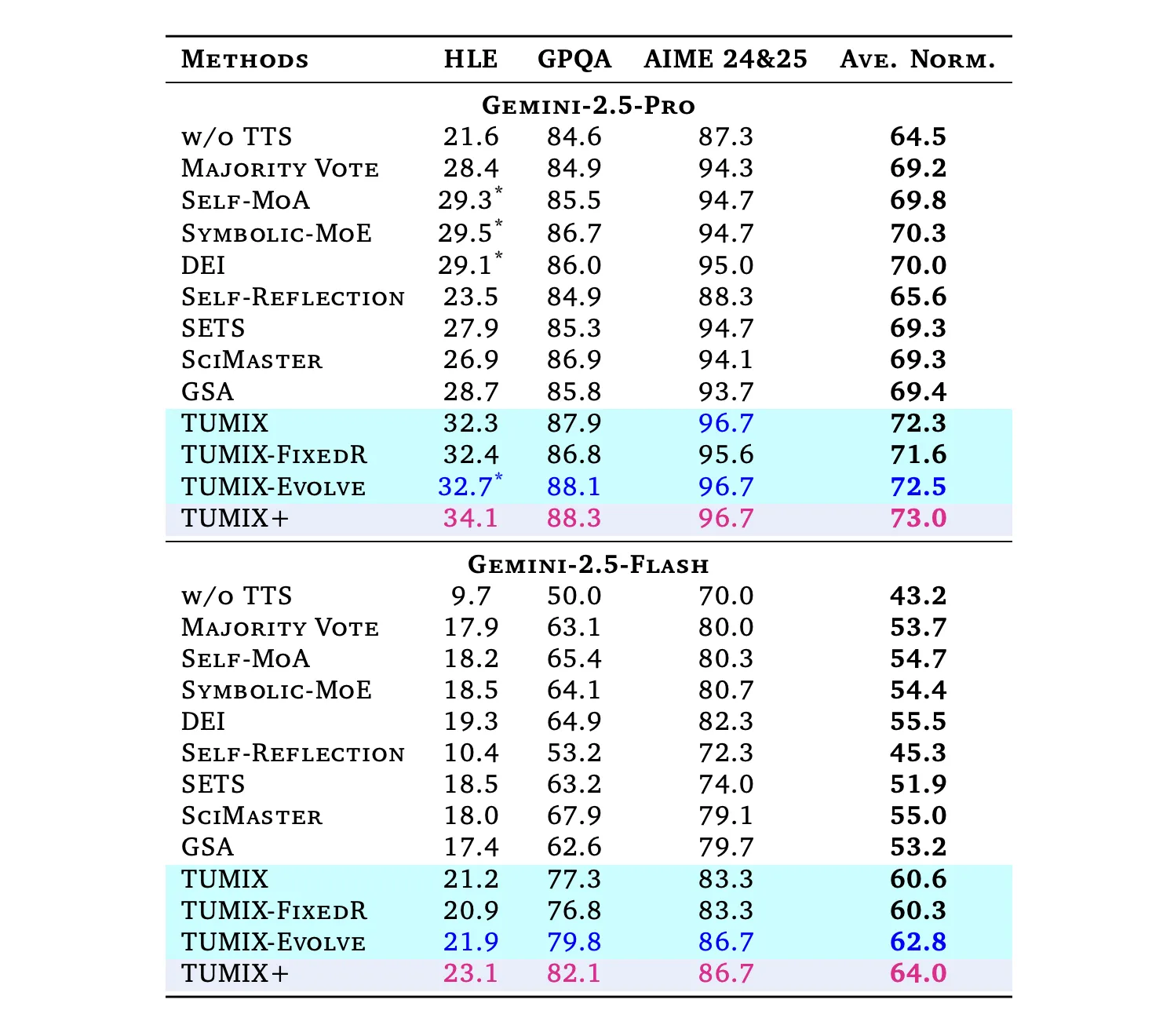

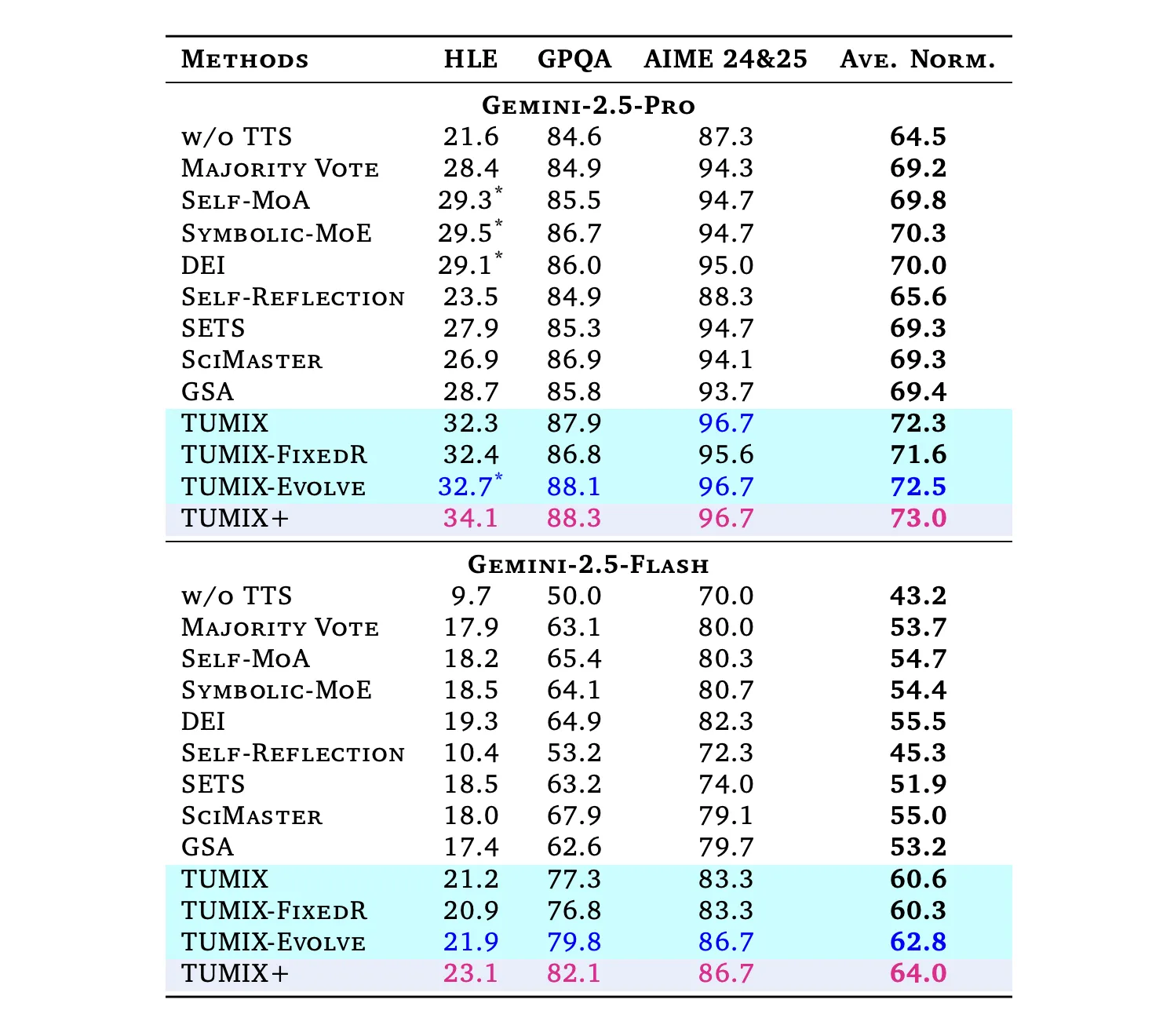

Under inference budgets comparable to those of strong tool-augmented baselines (Self-MoA, Symbolic-MoE, DEI, SciMaster, GSA), TUMIX yields the best average accuracy; a scaled variant (TUMIX+) pushes further with more compute:

- HLE (Humanity’s Last Exam): Pro: 21.6% → 34.1% (TUMIX+); Flash: 9.7% → 23.1%. (HLE is a 2,500-question, difficult, multi-domain benchmark finalized in 2025.)

- GPQA-Diamond: Pro: up to 88.3%; Flash: up to 82.1%. (GPQA-Diamond is the hardest 198-question subset authored by domain experts.)

- AIME 2024/25: Pro: 96.7%; Flash: 86.7% with TUMIX(+) at test time.

Across tasks, TUMIX averages +3.55% over the best prior tool-augmented test-time scaling baseline at comparable cost, and +7.8% / +17.4% over no-scaling for Pro/Flash, respectively.

TUMIX is a great approach from Google because it frames test-time scaling as a search problem over heterogeneous tool policies rather than brute-force sampling. The parallel committee (text, code, search) improves candidate coverage, while the LLM judge enables early stopping that preserves diversity and reduces token/tool spend, which is useful under latency budgets. The HLE gains (34.1% with Gemini-2.5 Pro) align with the benchmark’s finalized 2,500-question design, and the ~12–15 agent styles “sweet spot” indicates that selection, not generation, is the limiting factor.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
