How much compression ratio and throughput would you recover by training a format-aware graph compressor and shipping only a self-describing graph to a universal decoder? Meta AI released OpenZL, an open-source framework that builds specialized, format-aware compressors from high-level data descriptions and emits a self-describing wire format that a universal decoder can read, decoupling compressor evolution from reader rollouts. The approach is grounded in a graph model of compression that represents pipelines as directed acyclic graphs (DAGs) of modular codecs.

So, what’s new?

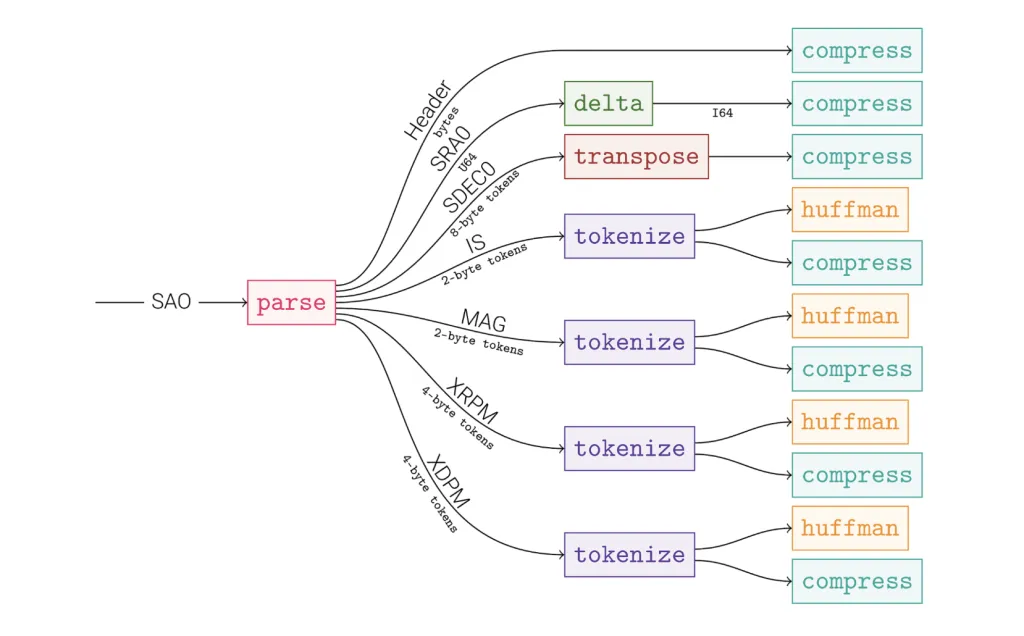

OpenZL formalizes compression as a computational graph: nodes are codecs/graphs, edges are typed message streams, and the finalized graph is serialized with the payload. Any frame produced by any OpenZL compressor can be decompressed by the universal decoder, because the graph specification travels with the data. This design aims to combine the ratio/throughput benefits of domain-specific codecs with the operational simplicity of a single, stable decoder binary.
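To make the self-describing idea concrete, here is a minimal Python sketch of a toy frame that carries its own codec list. It is not OpenZL's wire format or API: the frame layout, the `CODECS` registry, and the function names are all assumptions made for illustration, and the "graph" is reduced to a linear chain of codecs.

```python
import json
import struct
import zlib


def delta_encode(data: bytes) -> bytes:
    """Replace each byte with its difference from the previous byte (mod 256)."""
    prev = 0
    out = bytearray()
    for b in data:
        out.append((b - prev) % 256)
        prev = b
    return bytes(out)


def delta_decode(data: bytes) -> bytes:
    """Invert delta_encode by accumulating the differences (mod 256)."""
    prev = 0
    out = bytearray()
    for d in data:
        prev = (prev + d) % 256
        out.append(prev)
    return bytes(out)


# Hypothetical codec registry: name -> (encode, decode).
CODECS = {
    "delta": (delta_encode, delta_decode),
    "zlib": (zlib.compress, zlib.decompress),
}


def compress(data: bytes, graph: list) -> bytes:
    """Apply the codecs named in `graph` in order, then prepend the graph spec."""
    payload = data
    for name in graph:
        payload = CODECS[name][0](payload)
    spec = json.dumps(graph).encode("utf-8")
    # Assumed frame layout: 4-byte big-endian spec length, spec, payload.
    return struct.pack(">I", len(spec)) + spec + payload


def universal_decompress(frame: bytes) -> bytes:
    """Read the embedded spec and undo its codecs in reverse order."""
    (spec_len,) = struct.unpack(">I", frame[:4])
    graph = json.loads(frame[4:4 + spec_len].decode("utf-8"))
    payload = frame[4 + spec_len:]
    for name in reversed(graph):
        payload = CODECS[name][1](payload)
    return payload


data = bytes(range(200)) * 5
frame_a = compress(data, ["zlib"])            # one "compressor"
frame_b = compress(data, ["delta", "zlib"])   # a different "compressor"
assert universal_decompress(frame_a) == data
assert universal_decompress(frame_b) == data
```

Both frames go through the same `universal_decompress` function even though they were produced by different pipelines, which is the property the article attributes to carrying the graph specification with the data.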

How does it work?

- Describe data → build a graph. Developers supply a data description; OpenZL composes parse/group/transform/entropy stages into a DAG tailored to that structure. The result is a self-describing frame: compressed bytes plus the graph spec.

- Universal decode path. Decoding procedurally follows the embedded graph, removing the need to ship new readers when compressors evolve.
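The "describe data, then build stages" step can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not OpenZL or SDDL code: `DESCRIPTION` is a made-up description format for fixed-size records, zlib stands in for the entropy stage, and the transform stage is omitted for brevity.

```python
import struct
import zlib

# Hypothetical data description: ordered (field name, struct format) pairs
# describing fixed-size little-endian records. This is not SDDL syntax.
DESCRIPTION = [("timestamp", "I"), ("sensor_id", "H"), ("reading", "h")]


def record_struct(description):
    """Build a struct.Struct for one record from the description."""
    return struct.Struct("<" + "".join(fmt for _, fmt in description))


def parse_to_streams(description, data):
    """Parse records and group values by field: one typed stream per field."""
    rec = record_struct(description)
    columns = {name: [] for name, _ in description}
    for values in rec.iter_unpack(data):
        for (name, _), value in zip(description, values):
            columns[name].append(value)
    return columns


def encode(description, data):
    """Parse -> group -> compress each field stream separately."""
    columns = parse_to_streams(description, data)
    encoded = {}
    for name, fmt in description:
        values = columns[name]
        raw = struct.pack(f"<{len(values)}{fmt}", *values)
        encoded[name] = zlib.compress(raw)
    return encoded


def decode(description, encoded):
    """Decompress each stream and interleave the fields back into records."""
    columns = []
    for name, fmt in description:
        raw = zlib.decompress(encoded[name])
        count = len(raw) // struct.calcsize("<" + fmt)
        columns.append(struct.unpack(f"<{count}{fmt}", raw))
    rec = record_struct(description)
    return b"".join(rec.pack(*row) for row in zip(*columns))


# Synthetic records for the round-trip check (not real sensor data).
records = b"".join(
    record_struct(DESCRIPTION).pack(1_700_000_000 + i, i % 4, (i * 7) % 100 - 50)
    for i in range(1000)
)
encoded = encode(DESCRIPTION, records)
assert decode(DESCRIPTION, encoded) == records
```

Grouping values by field puts similar values next to each other, which is the usual motivation for format-aware pipelines. To see the effect on a given input, compare `sum(len(v) for v in encoded.values())` with `len(zlib.compress(records))`; the outcome depends on the data.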

Tooling and APIs

- SDDL (Simple Data Description Language): Built-in components and APIs let you decompose inputs into typed streams from a pre-compiled data description; available in C and Python surfaces under `openzl.ext.graphs.SDDL`.

- Language bindings: The core library and bindings are open source; the repo documents C/C++ and Python usage, and the ecosystem is already adding community bindings (e.g., Rust `openzl-sys`).

How does it perform?

The research team reports that OpenZL achieves superior compression ratios and speeds versus state-of-the-art general-purpose codecs across a variety of real-world datasets. It also notes internal deployments at Meta with consistent size and/or speed improvements and shorter compressor development timelines. The public materials do not assign a single universal numeric factor; results are presented as Pareto improvements that depend on data and pipeline configuration.
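For readers unfamiliar with the term, a configuration is Pareto-optimal when no other configuration is at least as good on both ratio and speed and strictly better on one. The following Python sketch shows that check; the function name and the numbers are invented for illustration and are not measurements of OpenZL or any other codec.

```python
def pareto_front(points):
    """Return names of points not dominated on (ratio, speed); higher is better."""
    front = []
    for name, ratio, speed in points:
        dominated = any(
            r >= ratio and s >= speed and (r > ratio or s > speed)
            for _, r, s in points
        )
        if not dominated:
            front.append(name)
    return front


# Made-up (name, compression ratio, speed) triples; illustration only.
candidates = [
    ("config_a", 2.0, 500.0),
    ("config_b", 3.0, 300.0),
    ("config_c", 2.5, 250.0),  # worse than config_b on both axes
    ("config_d", 4.0, 100.0),
]
print(pareto_front(candidates))  # ['config_a', 'config_b', 'config_d']
```

A "Pareto improvement" in this sense means a new compressor's points sit beyond the existing front on at least one axis without giving ground on the other, which is why no single speedup or ratio figure summarizes the result.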

OpenZL makes format-aware compression operationally practical: compressors are expressed as DAGs, embedded as a self-describing graph in each frame, and decoded by a universal decoder, eliminating reader rollouts. Meta reports Pareto gains over zstd/xz on real datasets.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
