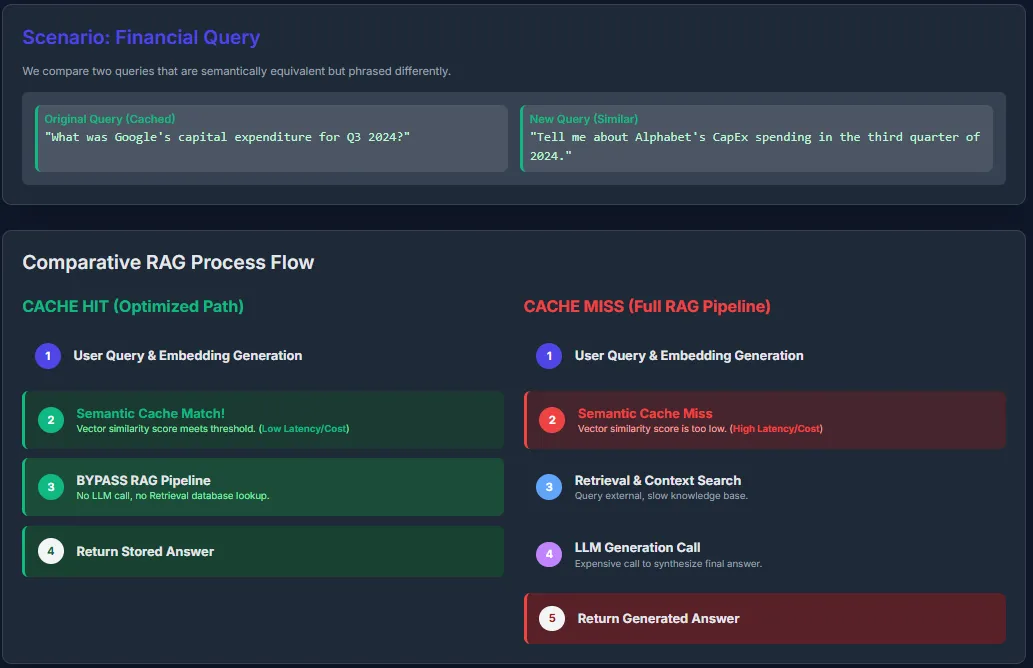

Semantic caching in LLM (Large Language Model) applications optimizes performance by storing and reusing responses based on semantic similarity rather than exact text matches. When a new query arrives, it is converted into an embedding and compared with cached ones using similarity search. If a close match is found (above a similarity threshold), the cached response is returned instantly, skipping the expensive retrieval and generation process. Otherwise, the full RAG pipeline runs, and the new query-response pair is added to the cache for future use.

In a RAG setup, semantic caching typically saves responses only for questions that have actually been asked, not every possible query. This helps reduce latency and API costs for repeated or slightly reworded questions. In this article, we'll look at a short example demonstrating how caching can significantly lower both cost and response time in LLM-based applications.

Semantic caching works by storing and retrieving responses based on the meaning of user queries rather than their exact wording. Each incoming query is converted into a vector embedding that represents its semantic content. The system then performs a similarity search, often using Approximate Nearest Neighbor (ANN) techniques, to compare this embedding with those already stored in the cache.

If a sufficiently similar query-response pair exists (i.e., its similarity score exceeds a defined threshold), the cached response is returned immediately, bypassing expensive retrieval or generation steps. Otherwise, the full RAG pipeline executes, retrieving documents and generating a new answer, which is then stored in the cache for future use.
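The lookup-or-generate flow described above can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the article's implementation: `SemanticCacheSketch`, `embed_fn`, `generate_fn`, `toy_embed`, and `fake_pipeline` are all hypothetical names, the search is exact brute force (which ANN indexes approximate at scale), and the letter-count "embedding" is not semantic at all; it exists only so the example runs offline.

```python
import math
from typing import Callable, List, Optional, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class SemanticCacheSketch:
    """Hypothetical semantic cache: reuse a stored answer when a past query is similar enough."""

    def __init__(self, embed_fn: Callable[[str], Vector],
                 generate_fn: Callable[[str], str], threshold: float = 0.85):
        self.embed_fn = embed_fn        # stand-in for an embedding model
        self.generate_fn = generate_fn  # stand-in for the full RAG pipeline
        self.threshold = threshold
        self.entries: List[Tuple[Vector, str]] = []

    def ask(self, query: str) -> Tuple[str, bool]:
        """Return (answer, cache_hit)."""
        q = self.embed_fn(query)
        # Exact (brute-force) nearest-neighbor search over all cached embeddings
        best: Optional[Tuple[float, str]] = None
        for emb, cached_answer in self.entries:
            sim = cosine(q, emb)
            if best is None or sim > best[0]:
                best = (sim, cached_answer)
        if best is not None and best[0] > self.threshold:
            return best[1], True
        # Cache miss: run the expensive pipeline, then store the new pair
        answer = self.generate_fn(query)
        self.entries.append((q, answer))
        return answer, False


def toy_embed(text: str) -> Vector:
    """Hypothetical embedding: 26 letter counts. Not semantic; it only makes the demo run offline."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1
    return counts


def fake_pipeline(query: str) -> str:
    """Hypothetical stand-in for retrieval plus generation."""
    return f"answer to: {query}"


cache = SemanticCacheSketch(toy_embed, fake_pipeline, threshold=0.95)
print(cache.ask("What is semantic caching?"))  # ('answer to: What is semantic caching?', False)
print(cache.ask("what is semantic caching"))   # ('answer to: What is semantic caching?', True)
```

The second call is a hit because its letter counts match the first query exactly; with a real embedding model, the same code would also catch rephrasings whose similarity clears the threshold.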

In a RAG application, semantic caching only stores responses for queries that have actually been processed by the system; there is no pre-caching of all possible questions. Each query that reaches the LLM and produces an answer can create a cache entry containing the query's embedding and the corresponding response.

Depending on the system's design, the cache may store just the final LLM outputs, the retrieved documents, or both. To maintain efficiency, cache entries are managed through policies like time-to-live (TTL) expiration or Least Recently Used (LRU) eviction, so that stale entries expire and the least recently used ones are evicted first when the cache is full.
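TTL expiry and LRU eviction can be combined in one small structure. The sketch below is a hypothetical illustration, not part of the article's code: `TTLLRUCache` and `CacheEntry` are invented names, entries are keyed by an id (for example, the index of the entry a similarity search matched), and the similarity search itself is not shown. The optional `documents` field mirrors the "outputs, documents, or both" choice above.

```python
import time
from collections import OrderedDict
from dataclasses import dataclass, field
from typing import Callable, Hashable, List, Optional


@dataclass
class CacheEntry:
    """One cached answer, optionally with the documents retrieved for it."""
    response: str
    documents: List[str] = field(default_factory=list)
    created_at: float = 0.0


class TTLLRUCache:
    """Hypothetical bounded cache: entries expire after ttl_seconds; LRU entries go first when full."""

    def __init__(self, max_entries: int = 1000, ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_entries = max_entries
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._data: "OrderedDict[Hashable, CacheEntry]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[CacheEntry]:
        entry = self._data.get(key)
        if entry is None:
            return None
        if self.clock() - entry.created_at > self.ttl_seconds:
            # TTL expired: drop the stale entry and report a miss
            del self._data[key]
            return None
        # Mark the entry as most recently used
        self._data.move_to_end(key)
        return entry

    def put(self, key: Hashable, response: str,
            documents: Optional[List[str]] = None) -> None:
        self._data[key] = CacheEntry(response, list(documents or []), self.clock())
        self._data.move_to_end(key)
        # Evict least recently used entries until we are back within capacity
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)

    def __len__(self) -> int:
        return len(self._data)
```

TTL bounds how stale an answer can get, while the LRU limit bounds memory; `time.monotonic` is used so wall-clock adjustments do not shorten or extend lifetimes.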

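Installing dependencies

The code in this tutorial uses the `openai` and `numpy` Python packages (for example, `pip install openai numpy`).

Setting up the dependencies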

```python
import os
from getpass import getpass

# Prompt for the key so it is not hard-coded in the notebook
os.environ['OPENAI_API_KEY'] = getpass('Enter OpenAI API Key: ')
```

For this tutorial, we will be using OpenAI, but you can use any LLM provider.

```python
from openai import OpenAI

# The client reads OPENAI_API_KEY from the environment
client = OpenAI()
```

Running Repeated Queries Without Caching

In this section, we run the same query 10 times directly through the GPT-4.1 model to observe how long it takes when no caching mechanism is applied. Each call triggers a full LLM computation and response generation, leading to repetitive processing for identical inputs.

This establishes a baseline for total time and cost before we implement semantic caching in the next part.

```python
import time

def ask_gpt(query):
    """Send a query straight to GPT-4.1 and return (answer_text, elapsed_seconds)."""
    start = time.perf_counter()
    response = client.responses.create(
        model="gpt-4.1",
        input=query
    )
    end = time.perf_counter()
    return response.output[0].content[0].text, end - start

query = "Explain the concept of semantic caching in just 2 lines."
total_time = 0

# Baseline: send the identical query 10 times with no cache
for i in range(10):
    _, duration = ask_gpt(query)
    total_time += duration
    print(f"Run {i+1} took {duration:.2f} seconds")

print(f"\nTotal time for 10 runs: {total_time:.2f} seconds")
```

Even though the query stays the same, every call still takes between 1 and 3 seconds, resulting in a total of ~22 seconds for 10 runs. This inefficiency highlights why semantic caching can be so valuable: it allows us to reuse previous responses for semantically similar queries and save both time and API cost.

Implementing Semantic Caching for Faster Responses

In this section, we enhance the previous setup by introducing semantic caching, which allows our application to reuse responses for semantically similar queries instead of repeatedly calling the GPT-4.1 API.

Here's how it works: each incoming query is converted into a vector embedding using the text-embedding-3-small model. This embedding captures the semantic meaning of the text. When a new query arrives, we calculate its cosine similarity with the embeddings already stored in our cache. If a match is found with a similarity score above the defined threshold (e.g., 0.85), the system instantly returns the cached response, skipping another GPT-4.1 call (the query itself is still embedded, so one embedding request is made per query).

If no sufficiently similar query exists in the cache, the model generates a fresh response, which is then stored along with its embedding for future use. Over time, this approach can substantially reduce both response time and API costs, especially for frequently asked or rephrased queries.
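The threshold lookup just described can also be done in a single vectorized step. The article's `ask_gpt_with_cache` loops over cached entries and returns the *first* one above the threshold; the hypothetical `VectorizedSemanticCache` below instead keeps unit-normalized embeddings as rows of one NumPy matrix, scores every row with a single matrix-vector product (a dot product of unit vectors equals their cosine similarity), and returns the *best* match. This is a sketch under assumptions, not the article's method: the class name and its methods are invented, embeddings are assumed to be non-zero, and the search is still exact brute force rather than ANN. OpenAI's documentation states its embeddings are already normalized to unit length, so the normalization here is mainly a safeguard for other models.

```python
from typing import List, Optional, Tuple

import numpy as np


class VectorizedSemanticCache:
    """Hypothetical cache lookup: one matrix product instead of a Python loop."""

    def __init__(self, dim: int, threshold: float = 0.85):
        self.threshold = threshold
        self._embeddings = np.empty((0, dim), dtype=np.float32)  # unit-normalized rows
        self._responses: List[str] = []

    @staticmethod
    def _normalize(v) -> np.ndarray:
        v = np.asarray(v, dtype=np.float32)
        return v / np.linalg.norm(v)  # assumes a non-zero vector

    def lookup(self, query_embedding) -> Optional[Tuple[str, float]]:
        """Return (response, similarity) for the best match above threshold, else None."""
        if not self._responses:
            return None
        q = self._normalize(query_embedding)
        sims = self._embeddings @ q  # cosine similarity with every cached row at once
        best = int(np.argmax(sims))
        if sims[best] > self.threshold:
            return self._responses[best], float(sims[best])
        return None

    def add(self, query_embedding, response: str) -> None:
        row = self._normalize(query_embedding)[np.newaxis, :]
        self._embeddings = np.vstack([self._embeddings, row])
        self._responses.append(response)


cache = VectorizedSemanticCache(dim=3, threshold=0.85)
cache.add([1.0, 0.0, 0.0], "answer A")
cache.add([0.0, 1.0, 0.0], "answer B")
print(cache.lookup([0.9, 0.1, 0.0]))  # best match is "answer A"
print(cache.lookup([0.0, 0.0, 1.0]))  # None
```

Re-stacking the matrix on every `add` copies it, which is fine for a small demo; a large cache would typically use a preallocated buffer or an ANN index instead.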

```python
import time

import numpy as np
from numpy.linalg import norm

# Each entry is (query_text, query_embedding, response_text)
semantic_cache = []

def get_embedding(text):
    """Embed text with OpenAI's text-embedding-3-small model as a NumPy vector."""
    emb = client.embeddings.create(model="text-embedding-3-small", input=text)
    return np.array(emb.data[0].embedding)

def cosine_similarity(a, b):
    return np.dot(a, b) / (norm(a) * norm(b))

def ask_gpt_with_cache(query, threshold=0.85):
    query_embedding = get_embedding(query)

    # Check similarity with the existing cache; the first entry above the threshold wins
    for cached_query, cached_emb, cached_resp in semantic_cache:
        sim = cosine_similarity(query_embedding, cached_emb)
        if sim > threshold:
            print(f"🔁 Using cached response (similarity: {sim:.2f})")
            return cached_resp, 0.0  # cache hit: no GPT call (embedding time is not measured)

    # Otherwise, call GPT and time only the generation request
    start = time.perf_counter()
    response = client.responses.create(
        model="gpt-4.1",
        input=query
    )
    end = time.perf_counter()
    text = response.output[0].content[0].text

    # Store the new query, its embedding, and the answer in the cache
    semantic_cache.append((query, query_embedding, text))
    return text, end - start
```

```python
queries = [
    "Explain semantic caching in simple terms.",
    "What is semantic caching and how does it work?",
    "How does caching work in LLMs?",
    "Tell me about semantic caching for LLMs.",
    "Explain semantic caching simply.",
]

total_time = 0
for q in queries:
    resp, t = ask_gpt_with_cache(q)
    total_time += t
    print(f"⏱️ Query took {t:.2f} seconds\n")

print(f"\nTotal time with caching: {total_time:.2f} seconds")
```

In the output, the first query took around 8 seconds because there was no cache and the model had to generate a fresh response. When a similar question was asked next, the system identified a high semantic similarity (0.86, just above the 0.85 threshold) and instantly reused the cached answer, saving time. Some queries, like "How does caching work in LLMs?" and "Tell me about semantic caching for LLMs," were sufficiently different, so the model generated new responses, each taking over 10 seconds. The final query was nearly identical to the first one (similarity 0.97) and was served from the cache instantly.


I am a Civil Engineering Graduate (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in Data Science, especially Neural Networks and their application in various areas.
