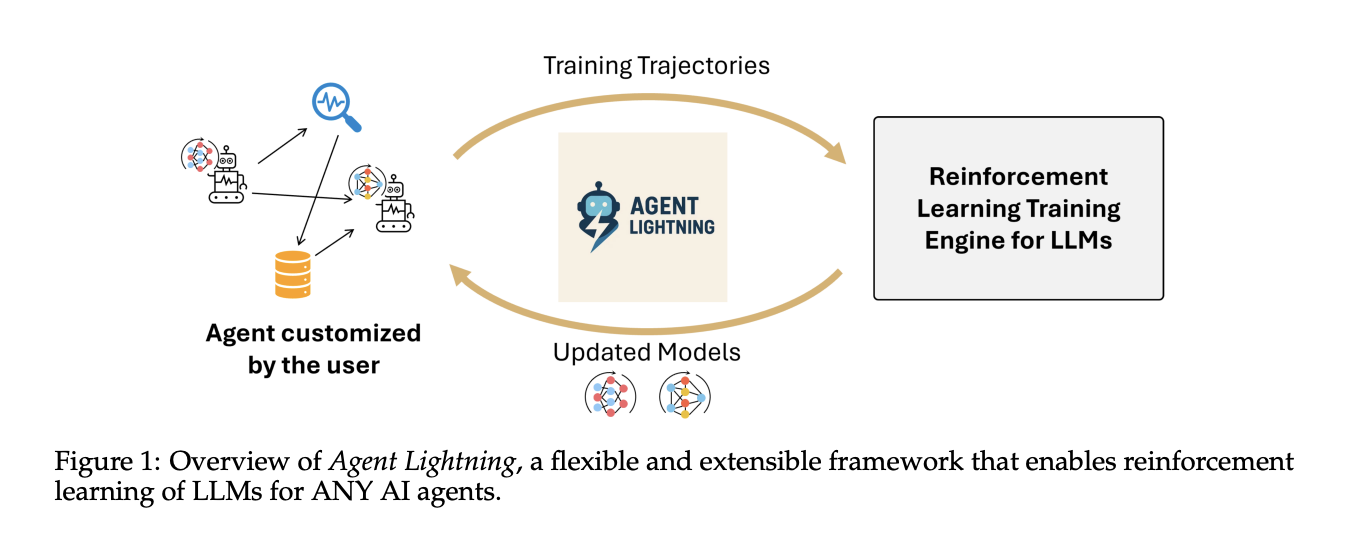

How do you convert real agent traces into reinforcement learning (RL) transitions that improve policy LLMs without changing your existing agent stack? Microsoft's AI team has released Agent Lightning to help optimize multi-agent systems. Agent Lightning is an open-source framework that makes reinforcement learning work for any AI agent without rewrites. It separates training from execution, defines a unified trace format, and introduces LightningRL, a hierarchical method that converts complex agent runs into transitions that standard single-turn RL trainers can optimize.

What does Agent Lightning do?

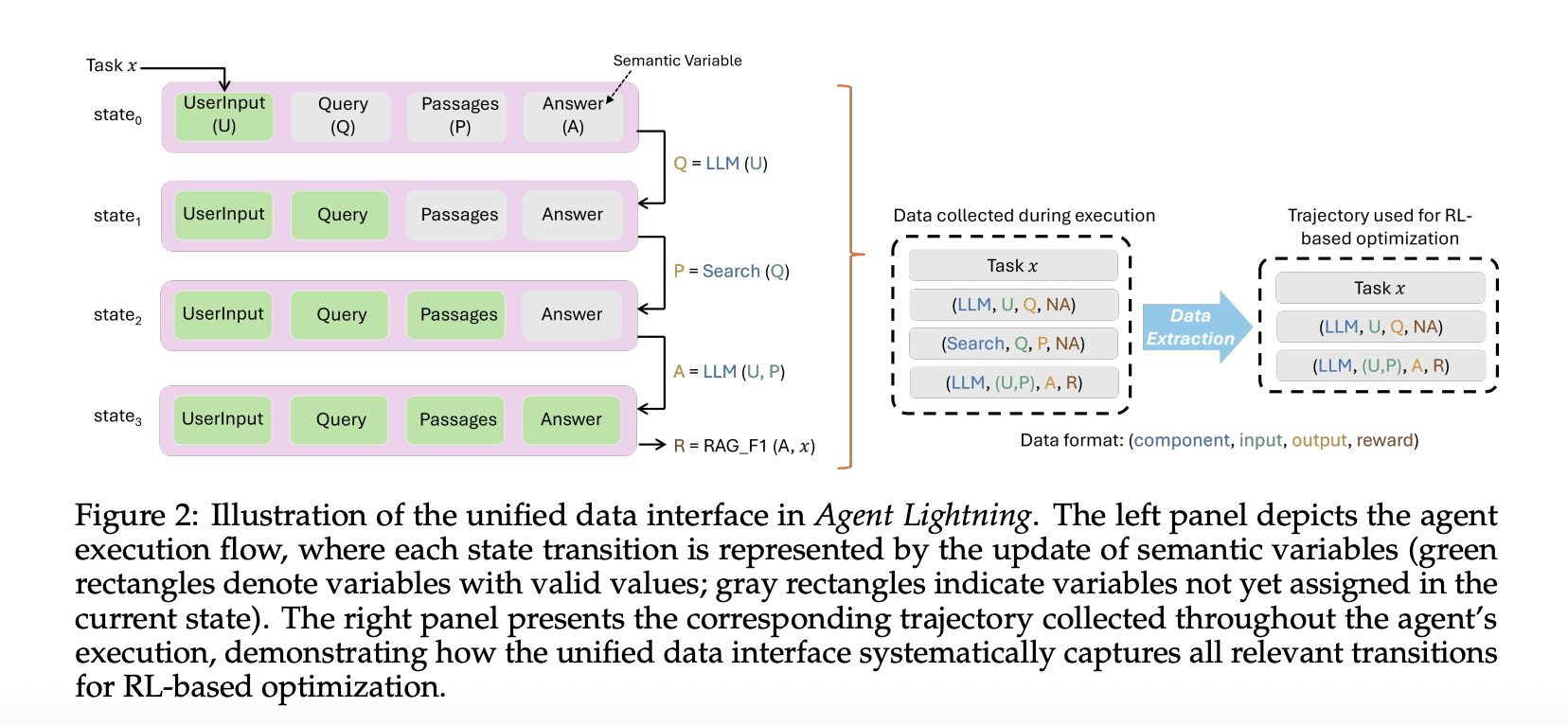

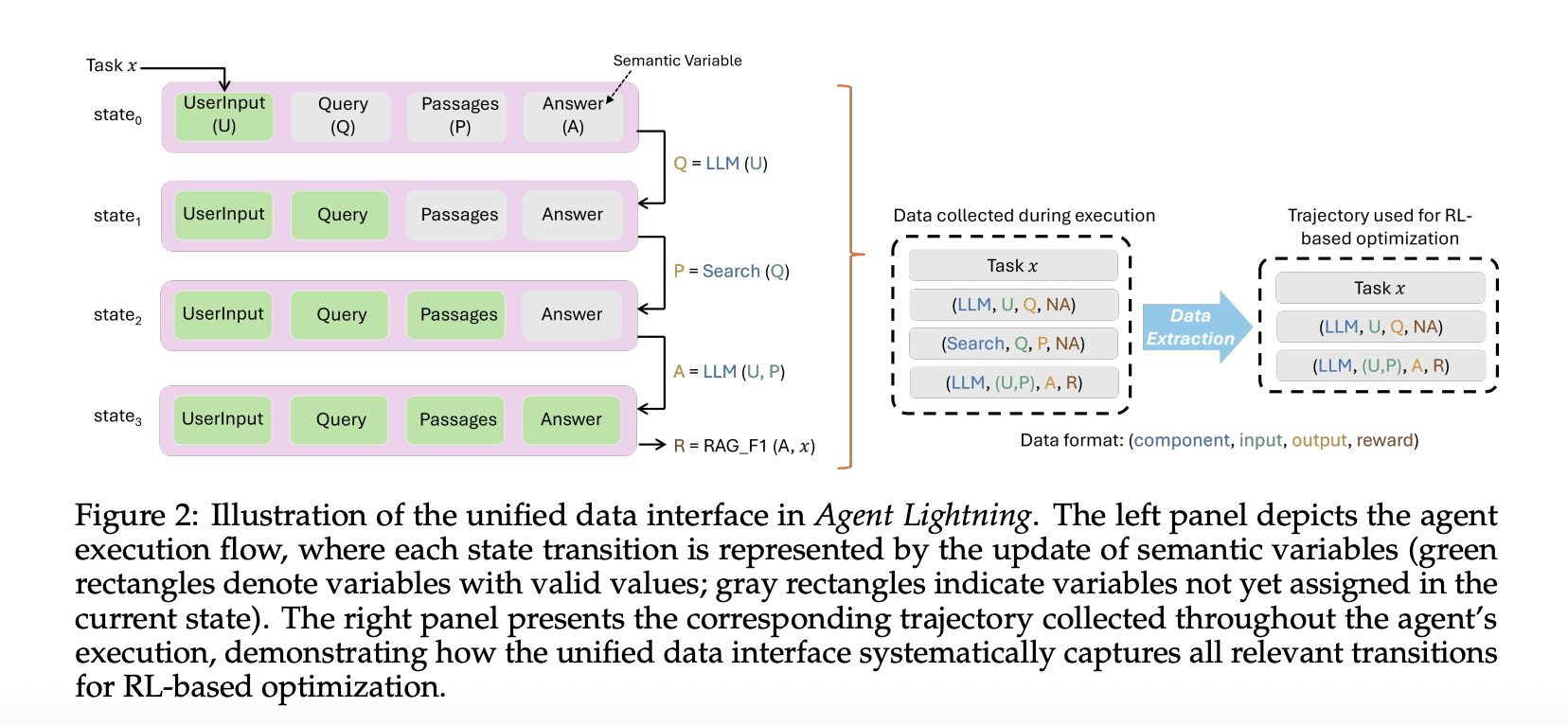

The framework models an agent as a decision process. Specifically, it formalizes the agent as a partially observable Markov decision process (POMDP):

- the observation is the current input to the policy LLM;
- the action is the model call;
- the reward can be terminal or intermediate.

From each run, it extracts only the calls made by the policy model, together with their inputs, outputs, and rewards. This strips away other framework noise and yields clean transitions for training.
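The formalization can be written compactly. The notation here is our own, not the paper's exact formulation. A run becomes the ordered sequence of policy calls. Each call contributes an (observation, action, reward) tuple, and training aims to maximize the expected total reward.

$$
\tau = \big((o_1, a_1, r_1), \dots, (o_T, a_T, r_T)\big), \qquad a_t \sim \pi_\theta(\cdot \mid o_t), \qquad J(\theta) = \mathbb{E}_{\tau \sim \pi_\theta}\Big[\textstyle\sum_{t=1}^{T} r_t\Big]
$$

The symbols are as follows:

- $o_t$ is the input to the policy LLM $\pi_\theta$ at its $t$-th call.
- $a_t$ is the text it generates.
- $r_t$ is the reward attached to that call. If only a terminal reward exists, $r_t = 0$ for $t < T$.

Tool calls and other framework events are not steps of $\tau$; they only shape what appears in later observations.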

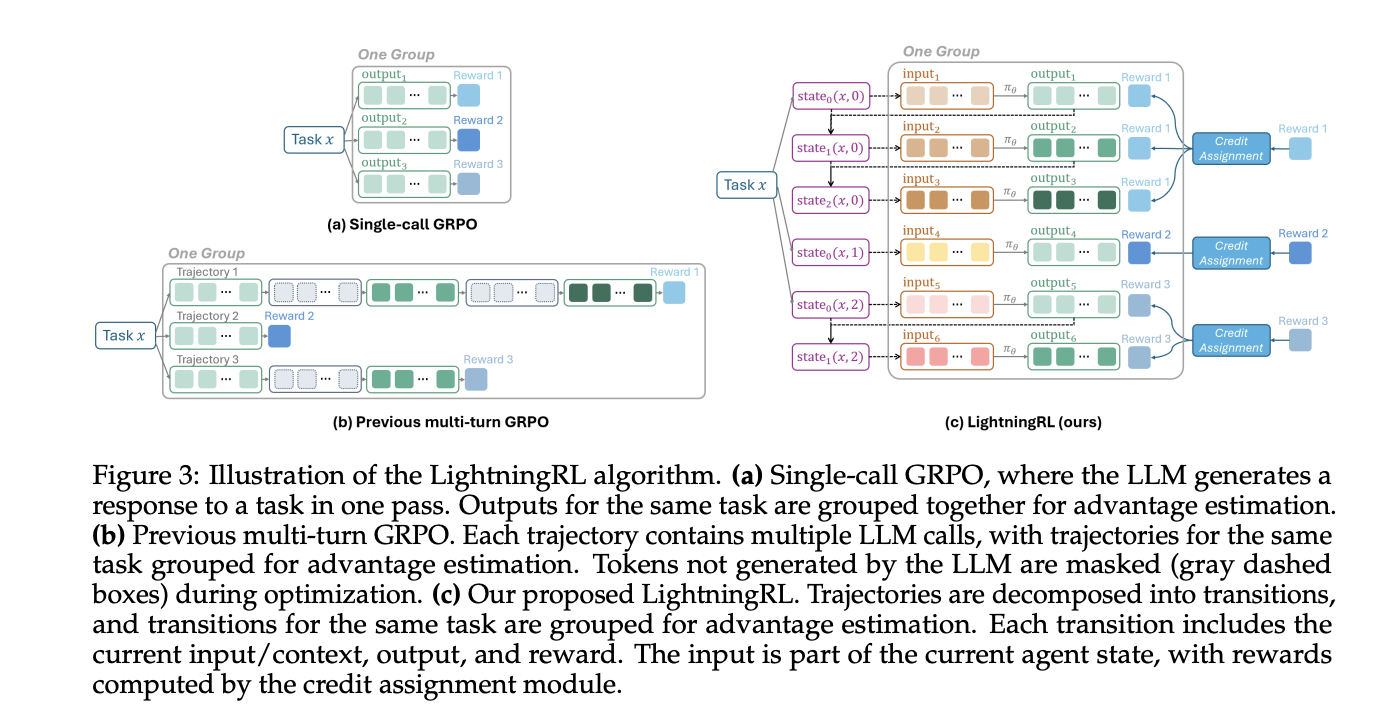

LightningRL performs credit assignment across multi-step episodes, then optimizes the policy with a single-turn RL objective. The research team describes it as compatible with single-turn RL methods. In practice, teams often use trainers that implement PPO or GRPO, such as VeRL, which fits this interface.
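The credit-assignment step can be sketched as follows. This is an illustration under stated assumptions, not LightningRL's implementation. We assume the simplest scheme, in which every policy call in an episode receives the episode's final reward. We also compute GRPO-style group-relative advantages across several episodes of the same task. All function names are hypothetical.

```python
from statistics import mean, pstdev


def assign_episode_return(num_calls, final_reward):
    # Simplest credit assignment (an assumption): every LLM call in the
    # episode inherits the episode's terminal reward.
    return [final_reward] * num_calls


def group_relative_advantages(returns, eps=1e-6):
    # GRPO-style baseline: compare each episode's return with the mean and
    # standard deviation of all episodes sampled for the same task.
    mu, sigma = mean(returns), pstdev(returns)
    return [(r - mu) / (sigma + eps) for r in returns]


def build_single_turn_samples(episodes):
    """episodes: list of (calls, final_reward) for ONE task, where calls is
    an ordered list of (prompt, response) pairs from the policy LLM.
    Returns flat single-turn samples a standard RL trainer could consume."""
    advantages = group_relative_advantages([final for _, final in episodes])
    samples = []
    for (calls, final), adv in zip(episodes, advantages):
        per_call_returns = assign_episode_return(len(calls), final)
        for (prompt, response), ret in zip(calls, per_call_returns):
            samples.append({"prompt": prompt, "response": response,
                            "return": ret, "advantage": adv})
    return samples
```

Each sample is an independent single-turn (prompt, response, advantage) example. A trainer that already handles PPO- or GRPO-style single-turn updates therefore needs no notion of multi-step episodes.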

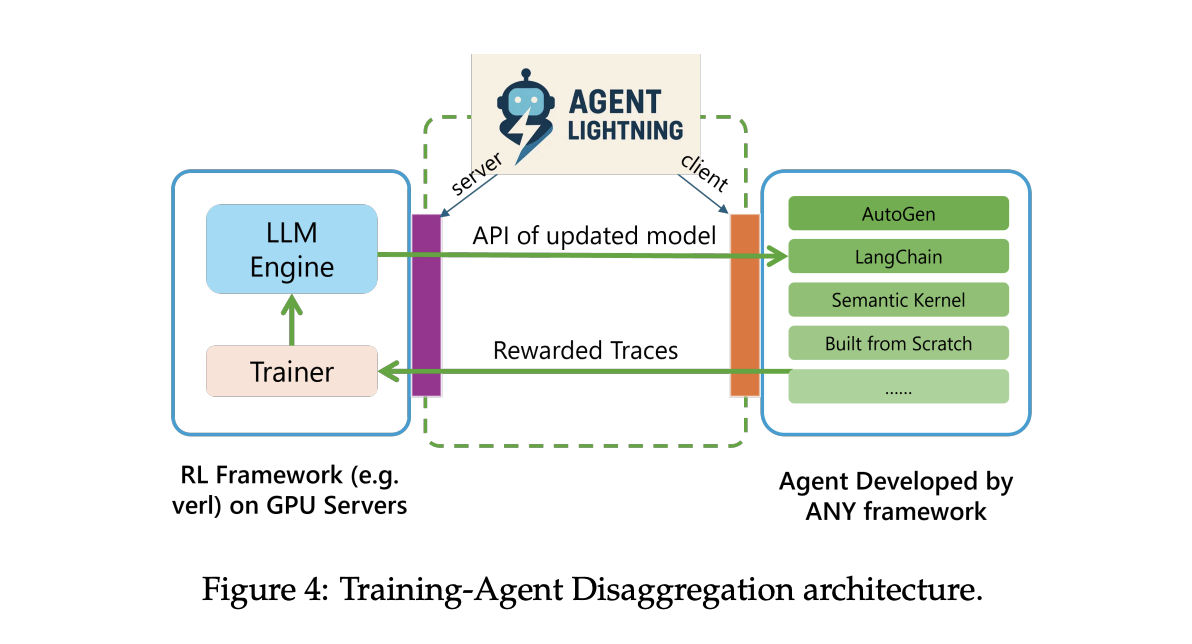

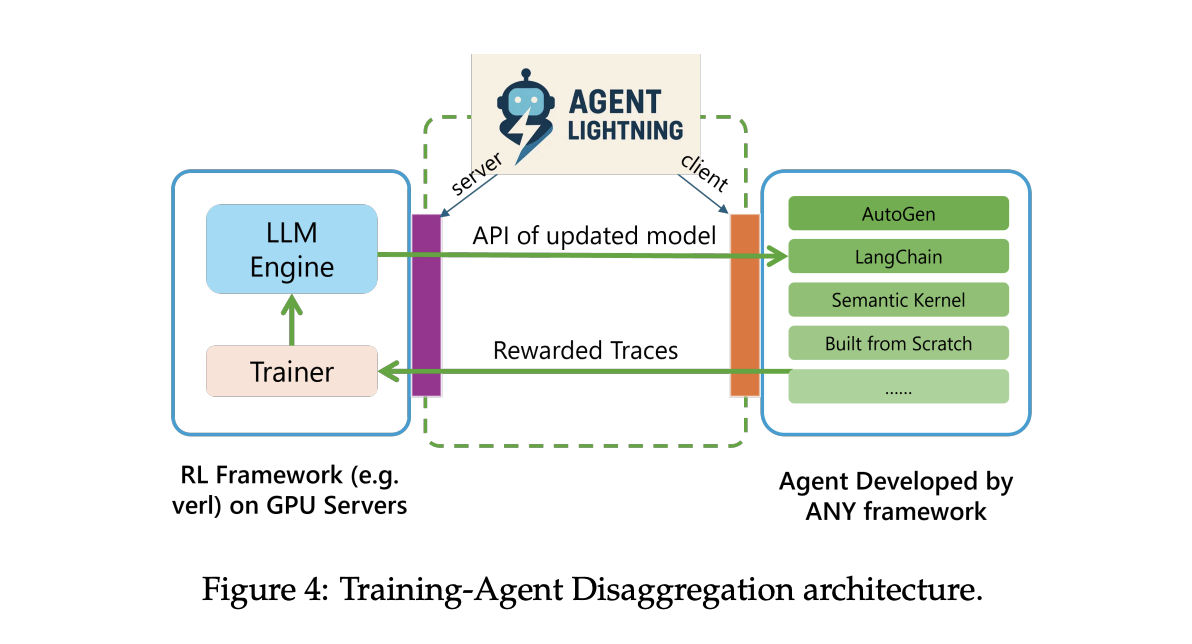

System architecture

Agent Lightning uses Training-Agent Disaggregation, which splits the system into two parts:

- A Lightning Server runs training and serving, and exposes an OpenAI-like API for the updated model.
- A Lightning Client runs the agent runtime where it already lives. It captures traces of prompts, tool calls, and rewards, and streams them back to the server.

This keeps tools, browsers, shells, and other dependencies close to production, while GPU training stays in the server tier.
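To make the split concrete, here is a toy, in-process sketch. Everything in it is hypothetical:

- `ToyServer` and `ToyClient` are not Agent Lightning classes.
- The "model" is a string transform.
- A Python queue stands in for the network stream.

The sketch only illustrates the direction of data flow. The client calls the server for generations and pushes traces back, and the server periodically consumes them to update the model.

```python
import queue
from dataclasses import dataclass


@dataclass
class Trace:
    prompt: str
    response: str
    reward: float


class ToyServer:
    """Hypothetical stand-in for the training/serving tier: it serves the
    current model version and collects traces for the trainer."""

    def __init__(self):
        self.model_version = 0
        self.inbox: "queue.Queue[Trace]" = queue.Queue()

    def generate(self, prompt: str) -> str:
        # Placeholder for an OpenAI-like endpoint backed by the policy model.
        return f"v{self.model_version}:{prompt.upper()}"

    def train_step(self) -> int:
        # Drain collected traces; a real trainer would run an RL update here.
        batch = []
        while not self.inbox.empty():
            batch.append(self.inbox.get())
        if batch:
            self.model_version += 1  # pretend the update produced a new model
        return len(batch)


class ToyClient:
    """Hypothetical agent-side runtime: runs the agent next to its tools
    and streams traces back to the server."""

    def __init__(self, server: ToyServer):
        self.server = server

    def run_episode(self, task: str, expected: str) -> None:
        response = self.server.generate(task)           # model call via server
        reward = 1.0 if expected in response else 0.0   # local environment check
        self.server.inbox.put(Trace(task, response, reward))
```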

The runtime supports two tracing paths. The default path uses OpenTelemetry spans, so you can pipe agent telemetry through standard collectors. There is also a lightweight embedded tracer for teams that do not want to deploy OpenTelemetry. Both paths end up in the same store for training.
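As an illustration of what an embedded tracer does, here is a minimal sketch. The `EmbeddedTracer` class is hypothetical, not Agent Lightning's tracer. It records nested spans with parent links and attributes into an in-memory list, which plays the role of the shared trace store.

```python
import time
import uuid
from contextlib import contextmanager


class EmbeddedTracer:
    """Hypothetical minimal tracer that appends finished spans to a list."""

    def __init__(self):
        self.store: list[dict] = []   # stands in for the shared trace store
        self._stack: list[str] = []   # ids of currently open spans

    @contextmanager
    def span(self, name: str, **attributes):
        span_id = uuid.uuid4().hex
        record = {
            "id": span_id,
            "name": name,
            "parent": self._stack[-1] if self._stack else None,
            "attributes": dict(attributes),
            "start": time.time(),
        }
        self._stack.append(span_id)
        try:
            yield record  # caller may attach outputs to record["attributes"]
        finally:
            self._stack.pop()
            record["end"] = time.time()
            self.store.append(record)  # spans are stored when they close
```

With OpenTelemetry, the equivalent nesting comes from `tracer.start_as_current_span(...)` on a tracer obtained via `opentelemetry.trace.get_tracer(...)`. In that setup, an exporter ships spans to a collector instead of a Python list.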

Unified data interface

Agent Lightning records each model call and each tool call as a span with inputs, outputs, and metadata. The algorithm layer adapts spans into ordered triplets of prompt, response, and reward. This selective extraction lets you optimize one agent in a multi-agent workflow, or several agents at once, without touching orchestration code. The same traces can also drive automatic prompt optimization or supervised fine-tuning.
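The span-to-triplet adaptation, including selecting which agents to train, might look like the sketch below. The `Span` fields, the `"llm"`/`"tool"` labels, and the fallback to the episode's final reward are assumptions for illustration, not Agent Lightning's data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    kind: str                        # "llm" or "tool" (hypothetical labels)
    agent: str                       # which agent emitted the span
    start: float                     # start timestamp, used for ordering
    inputs: str = ""
    outputs: str = ""
    reward: Optional[float] = None   # explicit per-call reward, if any


def spans_to_triplets(spans, agents_to_train, final_reward):
    """Keep only LLM calls from the selected agents, order them by start
    time, and emit (prompt, response, reward) triplets. Calls without an
    explicit reward fall back to the episode's final reward."""
    selected = sorted(
        (s for s in spans if s.kind == "llm" and s.agent in agents_to_train),
        key=lambda s: s.start,
    )
    return [
        (s.inputs, s.outputs, s.reward if s.reward is not None else final_reward)
        for s in selected
    ]
```

Passing `{"writer", "rewriter"}` as `agents_to_train` mirrors the text-to-SQL setup described next. There, two agents are trained while a checker's calls are simply filtered out.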

Experiments and datasets

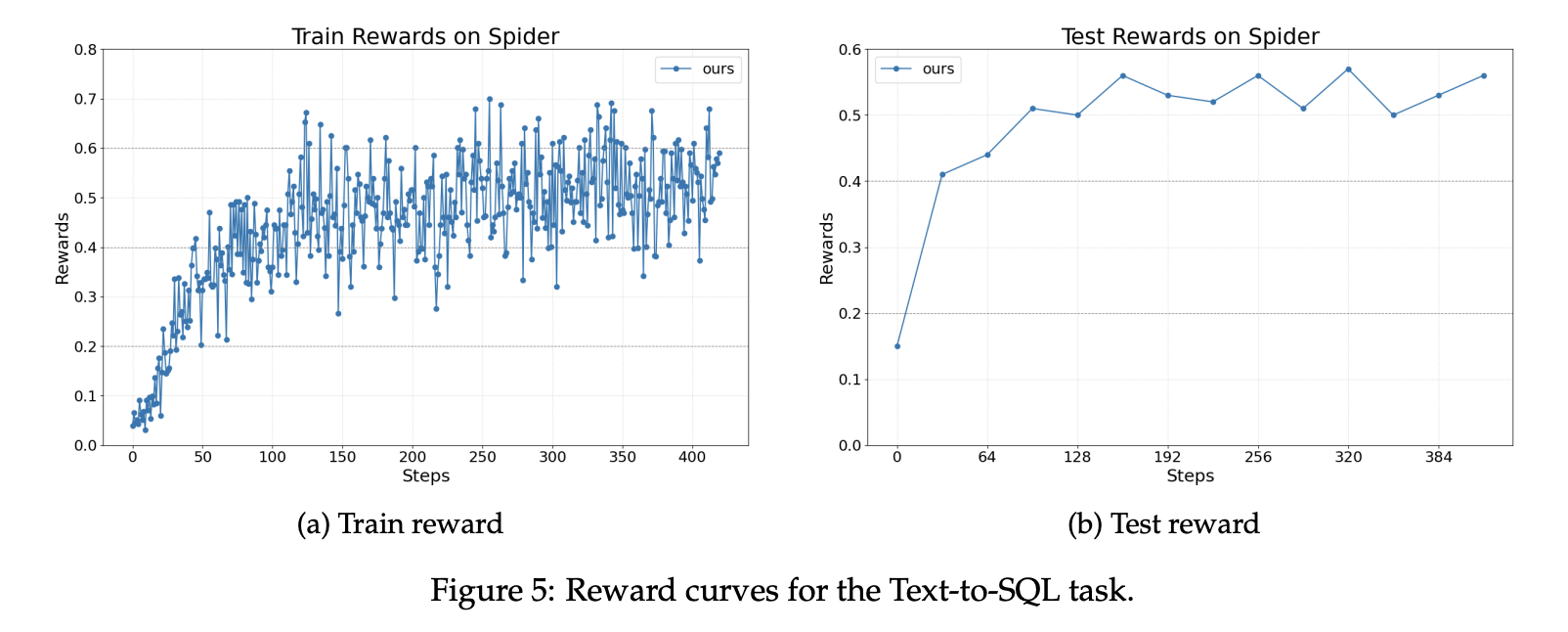

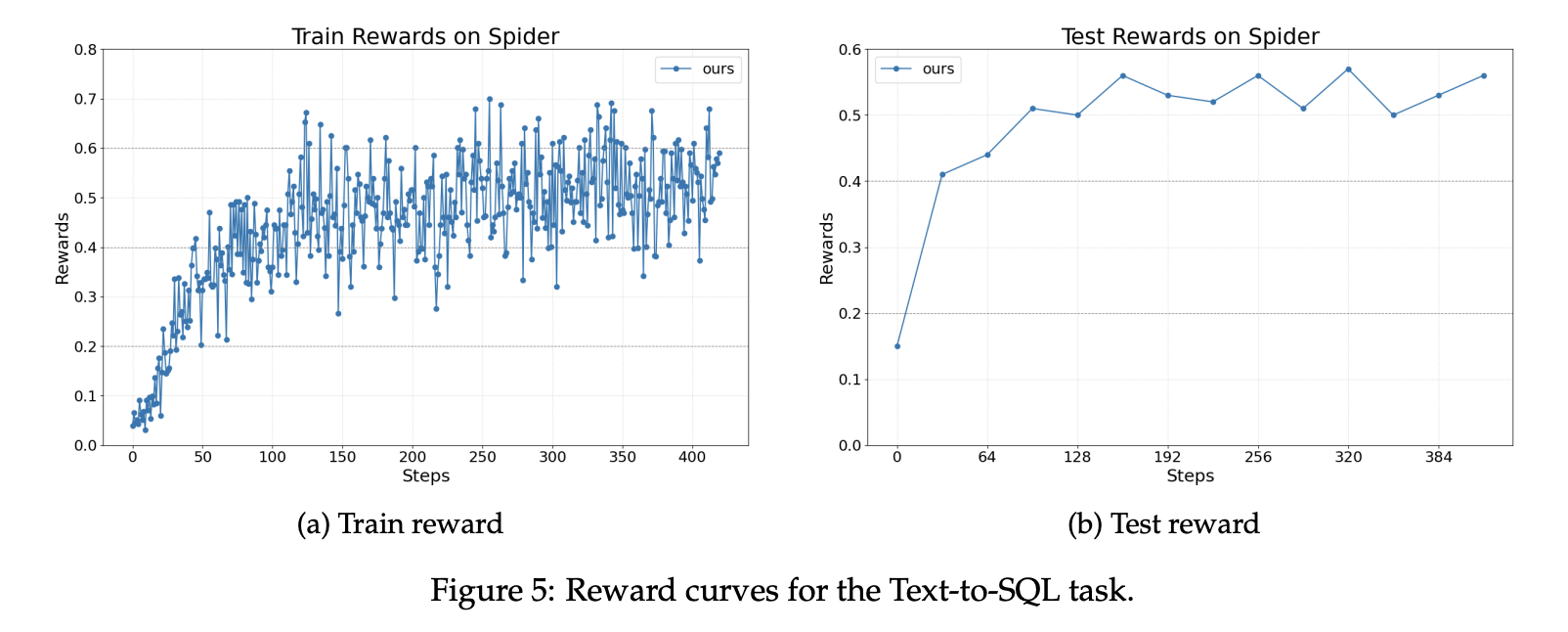

The research team reports results on three tasks. For text-to-SQL, the team uses the Spider benchmark, which contains more than 10,000 questions across 200 databases spanning 138 domains. The policy model is Llama 3.2 3B Instruct. The implementation uses LangChain with a writer agent, a rewriter agent, and a checker. The writer and the rewriter are optimized, while the checker is left fixed. Rewards improve steadily during training and at test time.
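The article does not say how the text-to-SQL reward is computed. A common choice for Spider-style tasks is execution match: run the predicted and gold queries and compare their results. The sketch assumes that definition. `execution_reward` is a hypothetical helper that uses only Python's built-in `sqlite3` module.

```python
import sqlite3
from collections import Counter


def execution_reward(db: sqlite3.Connection, predicted_sql: str, gold_sql: str) -> float:
    """Hypothetical reward: 1.0 if the predicted query returns the same rows
    as the gold query (order-insensitive, duplicates counted), else 0.0.
    Queries that fail to execute score 0.0."""
    try:
        predicted = db.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return 0.0
    gold = db.execute(gold_sql).fetchall()
    # Rows are tuples, so a Counter compares result multisets without sorting.
    return 1.0 if Counter(predicted) == Counter(gold) else 0.0
```

Comparing results rather than SQL strings accepts semantically equivalent queries, for example `age > 26` versus `age >= 30` on a table where both select the same rows.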

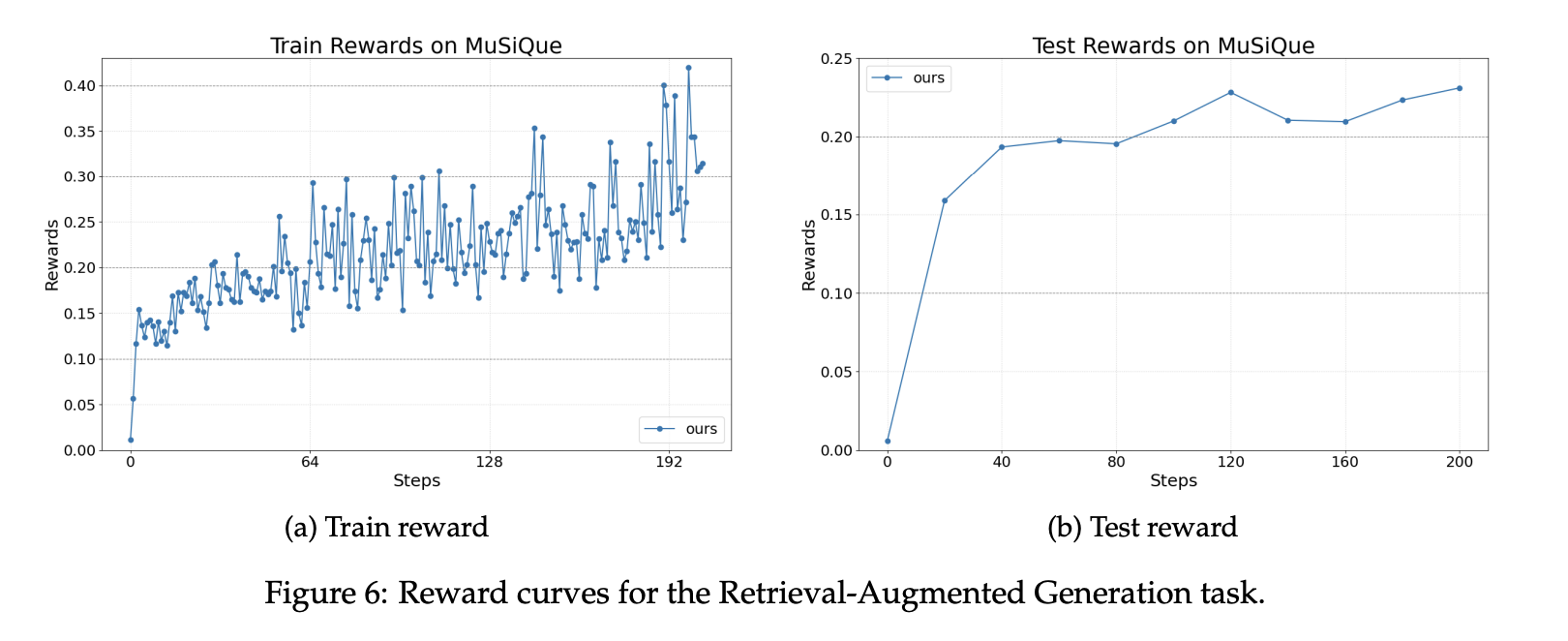

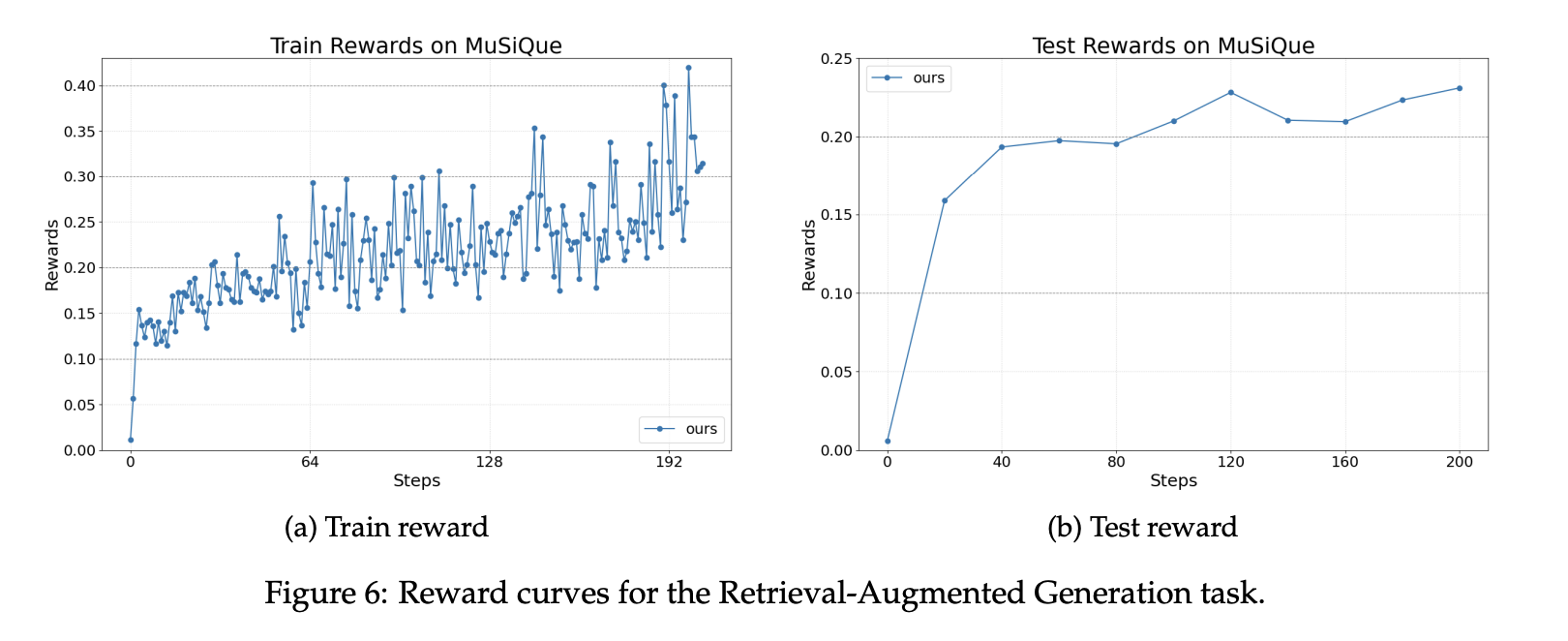

For retrieval-augmented generation (RAG), the setup uses the MuSiQue benchmark and a Wikipedia-scale index with about 21 million documents. The retriever uses BGE embeddings with cosine similarity. The agent is built with the OpenAI Agents SDK. The reward is a weighted sum of a format score and an F1 correctness score. Reward curves show steady gains during training and evaluation with the same base model.
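The RAG reward can be sketched as a weighted sum. The `<answer>` tag convention, the format check, and the weights 0.1 and 0.9 are placeholders: the article does not state the actual format rule or weights. The F1 here is a simplified token-level F1 in the SQuAD style, without article removal.

```python
import re
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Simplified token-level F1 between prediction and reference."""
    pred = re.findall(r"\w+", prediction.lower())
    ref = re.findall(r"\w+", reference.lower())
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def format_score(output: str) -> float:
    """Hypothetical format check: 1.0 if the answer is wrapped in
    <answer>...</answer> tags, else 0.0."""
    return 1.0 if re.search(r"<answer>.*?</answer>", output, re.DOTALL) else 0.0


def rag_reward(output: str, reference: str,
               w_format: float = 0.1, w_f1: float = 0.9) -> float:
    """Weighted sum of format and correctness scores (placeholder weights)."""
    match = re.search(r"<answer>(.*?)</answer>", output, re.DOTALL)
    answer = match.group(1) if match else output
    return w_format * format_score(output) + w_f1 * token_f1(answer, reference)
```

The small format term rewards well-formed outputs early in training, while the F1 term carries most of the signal about correctness.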

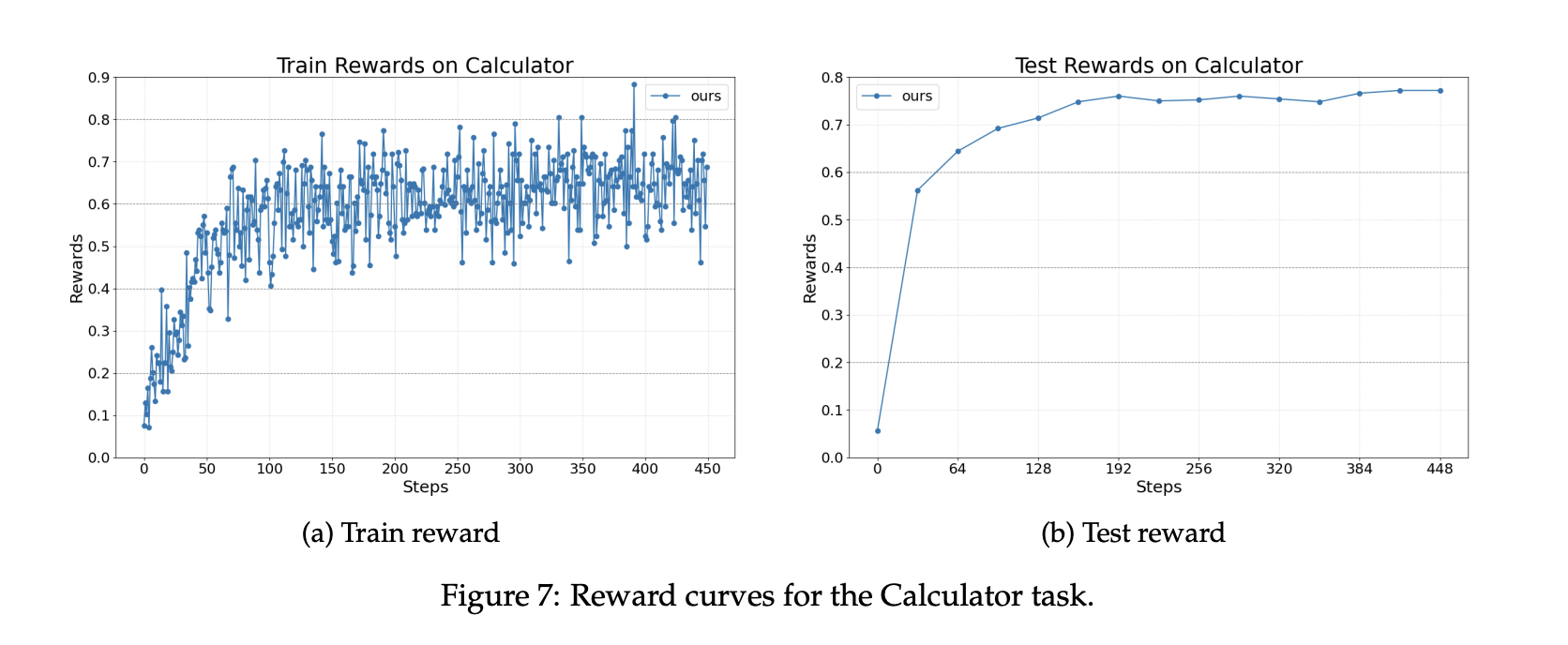

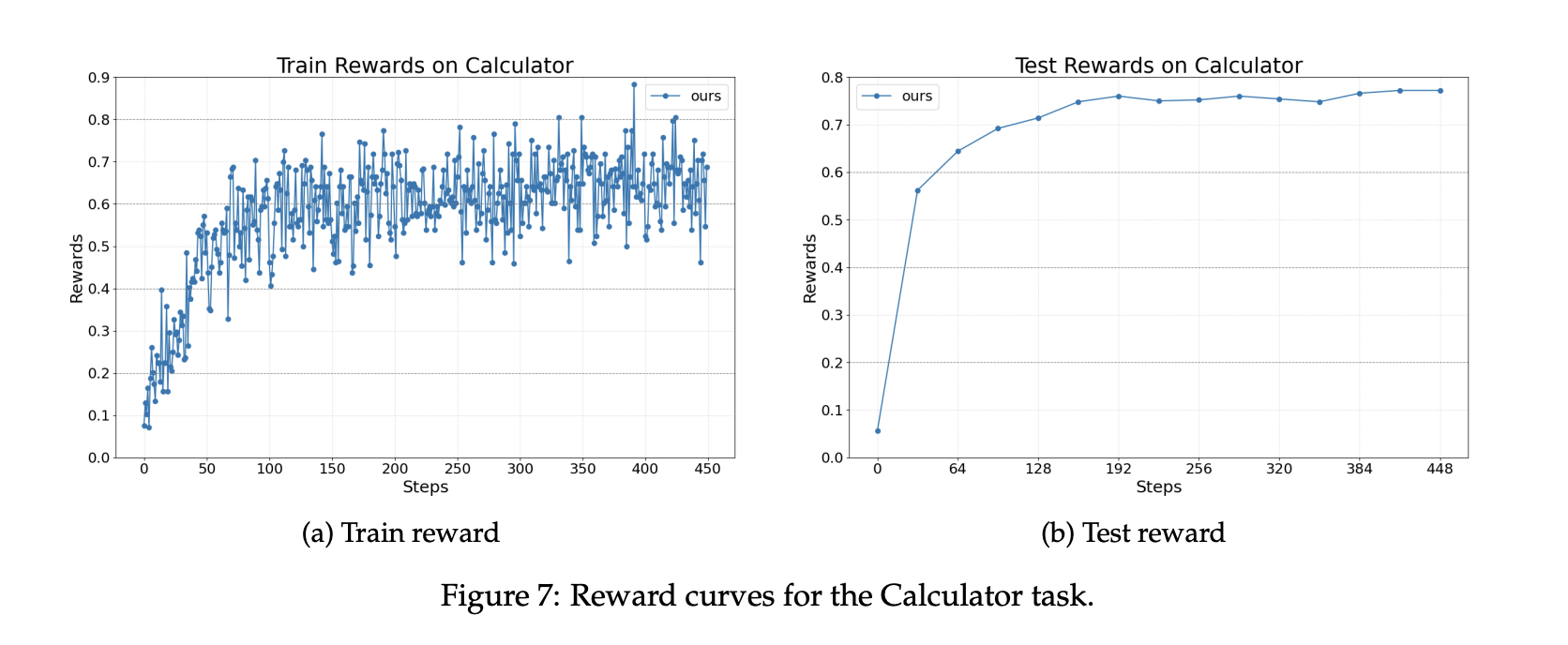

For math question answering with tool use, the agent is implemented with AutoGen and calls a calculator tool. The dataset is Calc-X, and the base model is again Llama 3.2 3B Instruct. Training improves the agent's ability to invoke tools correctly and integrate their results into final answers.
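The calculator tool itself can be very small. The version below is hypothetical, not the one used in the paper. It evaluates arithmetic by walking Python's `ast` instead of calling `eval`, so a model-generated string cannot run arbitrary code.

```python
import ast
import operator

# Allowed operators; anything else in the expression is rejected.
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub,
    ast.Mult: operator.mul, ast.Div: operator.truediv,
    ast.Pow: operator.pow, ast.USub: operator.neg,
}


def calculator(expression: str) -> float:
    """Hypothetical calculator tool: evaluates +, -, *, /, ** and unary minus
    on numeric literals. Names, calls, and attributes raise ValueError.
    Note: this sketch places no limit on exponent size."""

    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    return _eval(ast.parse(expression, mode="eval"))
```

In an AutoGen agent, a function like this would be registered as a tool the model can call. The registration code is omitted because it depends on the AutoGen version.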

Key Takeaways

- Agent Lightning uses Training-Agent Disaggregation and a unified trace interface, so existing agents built with LangChain, the OpenAI Agents SDK, AutoGen, or CrewAI connect with near-zero code changes.

- LightningRL converts trajectories into transitions. It applies credit assignment to multi-step runs, then optimizes the policy with single-turn RL methods such as PPO or GRPO in standard trainers.

- Automatic Intermediate Rewarding (AIR) adds dense feedback. AIR turns system signals, such as tool return status, into intermediate rewards to reduce sparse-reward problems in long workflows.

- The research team evaluates text-to-SQL on Spider, RAG on MuSiQue with a Wikipedia-scale index using BGE embeddings and cosine similarity, and math tool use on Calc-X, all with Llama 3.2 3B Instruct as the base model.

- The runtime records traces through OpenTelemetry and streams them to the training server, which exposes an OpenAI-compatible endpoint for updated models, enabling scalable rollouts without moving tools.
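The AIR idea from the takeaways can be sketched as a reward-shaping pass over an ordered trace. Several details are our assumptions, not Agent Lightning's API:

- the event format;
- the bonus and penalty values;
- the rule that a tool outcome is credited to the most recent LLM call.

```python
def air_rewards(events, final_reward, success_bonus=0.1, failure_penalty=-0.1):
    """Hypothetical AIR-style shaping: each LLM call gets an intermediate
    reward derived from the status of the tool call that follows it, and
    the last LLM call also receives the terminal task reward.

    events: ordered list of dicts such as
        {"kind": "llm"} or {"kind": "tool", "status": "ok" | "error"}
    Returns one reward per LLM call, in order.
    """
    rewards = []
    for event in events:
        if event["kind"] == "llm":
            rewards.append(0.0)
        elif event["kind"] == "tool" and rewards:
            # Credit the tool outcome to the most recent LLM call.
            rewards[-1] += success_bonus if event["status"] == "ok" else failure_penalty
    if rewards:
        rewards[-1] += final_reward
    return rewards
```

Without the shaping terms, only the last call would carry a nonzero reward. With them, an early malformed tool call is penalized directly, which is the sparse-reward problem AIR targets.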

Agent Lightning is a practical bridge between agent execution and reinforcement learning, not another framework rewrite. It formalizes agent runs as a Markov decision process (MDP), introduces LightningRL for credit assignment, and extracts transitions that slot into single-turn RL trainers. The Training-Agent Disaggregation design separates a client that runs the agent from a server that trains the model and serves an OpenAI-compatible endpoint, so teams keep their existing stacks. Automatic Intermediate Rewarding converts runtime signals into dense feedback, mitigating sparse rewards in long workflows. Overall, Agent Lightning offers a clean, minimal-integration path for agents to learn from their own traces.


Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.

Elevate your perspective with NextTech News, where innovation meets insight.

Discover the latest breakthroughs, get exclusive updates, and connect with a global network of future-focused thinkers.

Unlock tomorrow's trends today: read more, subscribe to our newsletter, and become part of the NextTech community at NextTech-news.com