Can an open-source MoE really power agentic coding workflows at a fraction of flagship model costs while sustaining long-horizon tool use across MCP, shell, browser, retrieval, and code? The MiniMax team has just released MiniMax-M2, a mixture-of-experts (MoE) model optimized for coding and agent workflows. The weights are published on Hugging Face under the MIT license, and the model is positioned for end-to-end tool use, multi-file editing, and long-horizon plans. It lists 229B total parameters with about 10B active per token, which keeps memory and latency in check during agent loops.

Architecture and why activation size matters

MiniMax-M2 is a compact MoE that routes to about 10B active parameters per token. The smaller activations reduce memory pressure and tail latency in plan, act, and verify loops, and allow more concurrent runs in CI, browsing, and retrieval chains. This is the performance budget that makes the speed and cost claims possible relative to dense models of similar quality.
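As a rough illustration of that activation budget, the sketch below compares the share of weights touched per token and an approximate forward-pass cost for M1 and M2, using the parameter counts quoted in this article. It assumes the common rule of thumb of about 2 FLOPs per active parameter per generated token, which ignores attention and routing overhead, so treat the results as order-of-magnitude estimates, not measured figures. The `MoESpec` class is a hypothetical helper for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoESpec:
    """Parameter counts for a mixture-of-experts model, in billions (hypothetical helper)."""
    name: str
    total_b: float
    active_b: float

    def active_fraction(self) -> float:
        # Share of all weights that participate in a single token's forward pass.
        return self.active_b / self.total_b

    def approx_gflops_per_token(self) -> float:
        # Rule-of-thumb assumption: ~2 FLOPs (one multiply, one add) per active
        # parameter per token; attention and router costs are ignored.
        return 2.0 * self.active_b


M1 = MoESpec("MiniMax-M1", total_b=456.0, active_b=45.9)
M2 = MoESpec("MiniMax-M2", total_b=229.0, active_b=10.0)

if __name__ == "__main__":
    for spec in (M1, M2):
        print(f"{spec.name}: {spec.active_fraction():.1%} active, "
              f"~{spec.approx_gflops_per_token():.0f} GFLOPs/token")
    ratio = M1.approx_gflops_per_token() / M2.approx_gflops_per_token()
    print(f"M2 needs roughly 1/{ratio:.1f} of M1's per-token compute")
```

With these inputs, M2 activates about 4.4% of its weights per token versus about 10.1% for M1, which is the arithmetic behind the "compact MoE" framing.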

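MiniMax-M2 is an interleaved thinking model. The research team wraps internal reasoning in `<think>...</think>` blocks, and the model card asks users to keep these blocks in the conversation history across turns, since removing them degrades performance.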
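Because reasoning is interleaved with actions across turns, a client should append the assistant's raw output, `<think>` block included, back into the message history instead of storing only the visible answer. The minimal sketch below shows that bookkeeping in plain Python. The helper names `split_thinking` and `append_assistant_turn` are hypothetical and not part of any MiniMax SDK, and the regex assumes a single well-formed `<think>...</think>` block per reply.

```python
import re

# Matches one <think>...</think> block, including newlines inside it.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thinking(raw: str) -> tuple[str, str]:
    """Separate reasoning from the user-visible answer, for display only."""
    match = THINK_RE.search(raw)
    if match is None:
        return "", raw.strip()
    reasoning = match.group(1).strip()
    visible = (raw[:match.start()] + raw[match.end():]).strip()
    return reasoning, visible


def append_assistant_turn(history: list[dict], raw: str) -> list[dict]:
    """Store the raw reply, <think> block included, so the next turn sees it."""
    return history + [{"role": "assistant", "content": raw}]


history = [{"role": "user", "content": "List the failing tests."}]
raw_reply = "<think>Run pytest first, then parse the summary.</think>I'll run the test suite."
reasoning, visible = split_thinking(raw_reply)
history = append_assistant_turn(history, raw_reply)  # keep the raw text, not `visible`
```

The split is only for rendering; the history keeps the untouched reply so the model's earlier reasoning stays in context on the next request.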

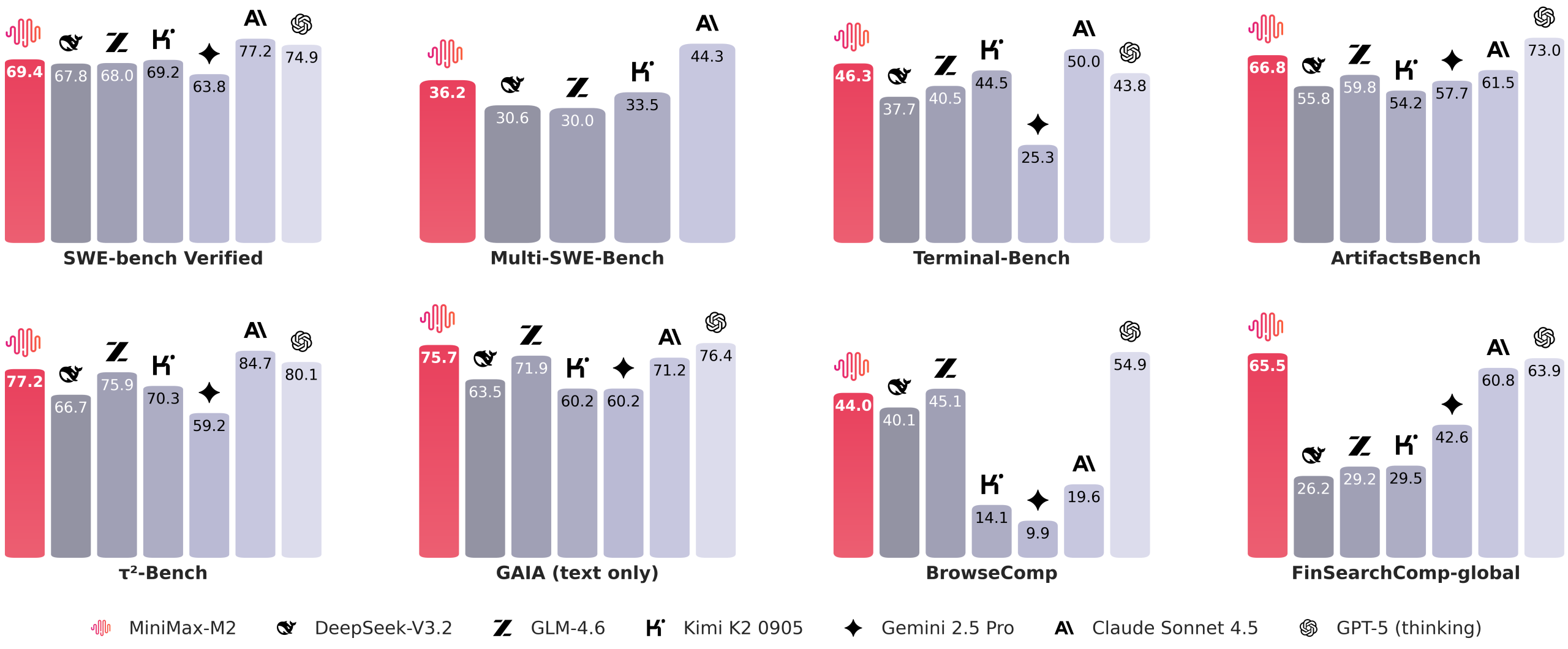

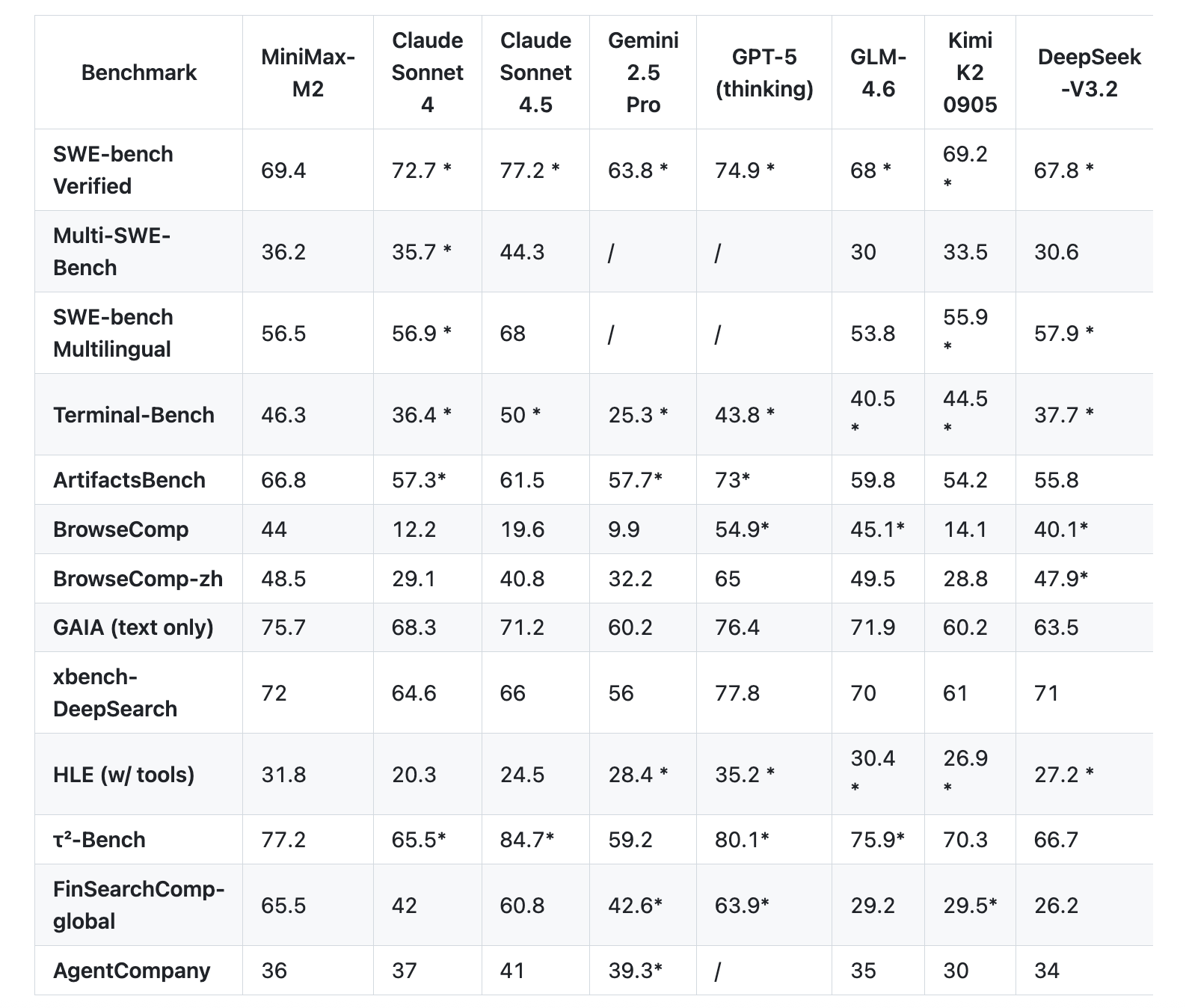

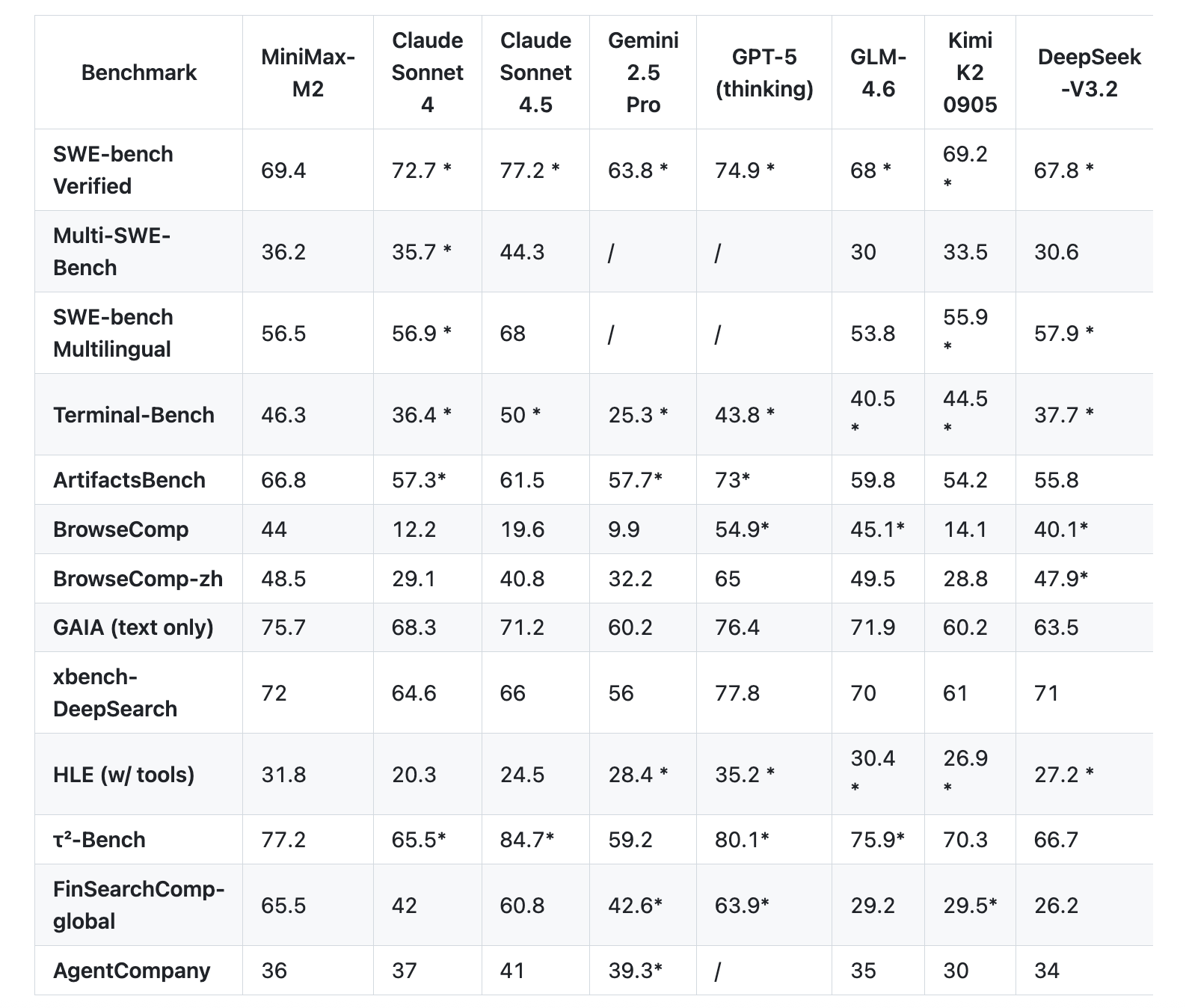

Benchmarks that focus on coding and agents

The MiniMax team reports a set of agent and code evaluations that are closer to developer workflows than static QA. On Terminal-Bench, the table shows 46.3. On Multi-SWE-Bench, it shows 36.2. On BrowseComp, it shows 44.0. SWE-bench Verified is listed at 69.4, with the scaffold detail given as OpenHands with 128k context and 100 steps.

MiniMax’s official announcement highlights pricing at 8% of Claude Sonnet’s and nearly 2x the speed, plus a free access window. The same note gives the exact token prices and the trial deadline.

Comparison: M1 vs M2

| Aspect | MiniMax M1 | MiniMax M2 |
|---|---|---|
| Total parameters | 456B total | 229B in model card metadata; model card text says 230B total |
| Active parameters per token | 45.9B active | 10B active |
| Core design | Hybrid Mixture of Experts with Lightning Attention | Sparse Mixture of Experts targeting coding and agent workflows |
| Thinking format | Thinking budget variants of 40k and 80k in RL training; no think-tag protocol required | Interleaved thinking with `<think>...</think>` segments |
| Benchmarks highlighted | AIME, LiveCodeBench, SWE-bench Verified, TAU-bench, long-context MRCR, MMLU-Pro | Terminal-Bench, Multi-SWE-Bench, SWE-bench Verified, BrowseComp, GAIA (text only), Artificial Analysis intelligence suite |
| Inference defaults | temperature 1.0, top-p 0.95 | Model card shows temperature 1.0, top-p 0.95, top-k 40; launch page shows top-k 20 |
| Serving guidance | vLLM recommended; Transformers path also documented | vLLM and SGLang recommended; tool-calling guide provided |
| Primary focus | Long-context reasoning, efficient scaling of test-time compute, CISPO reinforcement learning | Agent- and code-native workflows across shell, browser, retrieval, and code runners |
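The M2 inference defaults in the table can be carried into the request body for an OpenAI-compatible chat endpoint, such as the servers vLLM and SGLang expose. The sketch below only builds the JSON payload and sends nothing. The model id `MiniMaxAI/MiniMax-M2`, the helper name `build_chat_payload`, and the assumption that the server accepts `top_k` as an extra body field are ours, so check your server's documentation. It also keeps the table's discrepancy visible: top-k 40 on the model card versus 20 on the launch page.

```python
import json

# Sampling defaults as listed for M2 in the comparison table.
M2_MODEL_CARD_DEFAULTS = {"temperature": 1.0, "top_p": 0.95, "top_k": 40}
M2_LAUNCH_PAGE_TOP_K = 20  # the launch page lists a different top-k


def build_chat_payload(messages, model="MiniMaxAI/MiniMax-M2", use_launch_top_k=False):
    """Assemble a chat-completions request body (assumed OpenAI-compatible schema)."""
    sampling = dict(M2_MODEL_CARD_DEFAULTS)  # copy so the defaults are never mutated
    if use_launch_top_k:
        sampling["top_k"] = M2_LAUNCH_PAGE_TOP_K
    return {"model": model, "messages": messages, **sampling}


payload = build_chat_payload([{"role": "user", "content": "Fix the failing unit test."}])
print(json.dumps(payload, indent=2))
```

Keeping the two top-k values as named constants makes it easy to A/B them against your own agent harness rather than silently picking one.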

Key Takeaways

- M2 ships as open weights on Hugging Face under MIT, with safetensors in F32, BF16, and FP8 (F8_E4M3).

- The model is a compact MoE with 229B total parameters and ~10B active per token, which the card ties to lower memory use and steadier tail latency in the plan, act, verify loops typical of agents.

- Outputs wrap internal reasoning in `<think>...</think>` blocks.

- Reported results cover Terminal-Bench, (Multi-)SWE-Bench, BrowseComp, and others, with scaffold notes for reproducibility, and day-0 serving is documented for SGLang and vLLM with concrete deployment guides.
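The ~10B active figure governs per-token compute, but a server still has to hold every expert's weights in memory. A back-of-the-envelope estimate for the 229B total parameters in each published safetensors precision is sketched below. It counts weights only (no KV cache, activations, or framework overhead), uses decimal gigabytes, and assumes every tensor is stored in the listed dtype; FP8 checkpoints often keep a few tensors in higher precision, so treat the FP8 figure as a lower bound.

```python
# Bytes per parameter for each published safetensors precision.
BYTES_PER_PARAM = {"F32": 4, "BF16": 2, "F8_E4M3": 1}


def weight_memory_gb(total_params: float, dtype: str) -> float:
    """Weights-only footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return total_params * BYTES_PER_PARAM[dtype] / 1e9


TOTAL_PARAMS = 229e9  # total parameters per the model card metadata

for dtype in BYTES_PER_PARAM:
    print(f"{dtype:>8}: ~{weight_memory_gb(TOTAL_PARAMS, dtype):,.0f} GB")
```

Even at FP8 this is roughly 229 GB of weights alone, which is why the multi-GPU vLLM and SGLang deployment guides matter despite the small active-parameter count.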

Editorial Notes

MiniMax M2 lands with open weights under MIT and a mixture-of-experts design with 229B total parameters and about 10B activated per token, targeting agent loops and coding tasks with lower memory use and steadier latency. It ships on Hugging Face as safetensors in FP32, BF16, and FP8 formats, and provides deployment notes plus a chat template. The API documentation describes Anthropic-compatible endpoints and lists pricing with a limited free window for evaluation. vLLM and SGLang recipes are available for local serving and benchmarking. Overall, MiniMax M2 is a very solid open release.


Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.

Elevate your perspective with NextTech News, where innovation meets insight.

Discover the latest breakthroughs, get exclusive updates, and connect with a global network of future-focused thinkers.

Unlock tomorrow’s trends today: read more, subscribe to our newsletter, and become part of the NextTech community at NextTech-news.com