How far can we push large language model speed by reusing “free” GPU compute, without giving up autoregressive-level output quality? NVIDIA researchers propose TiDAR, a sequence-level hybrid language model that drafts tokens with diffusion and samples them autoregressively in a single forward pass. The main goal of this research is to reach autoregressive quality while significantly increasing throughput by exploiting free token slots on modern GPUs.

Systems motivation, free token slots and the quality problem

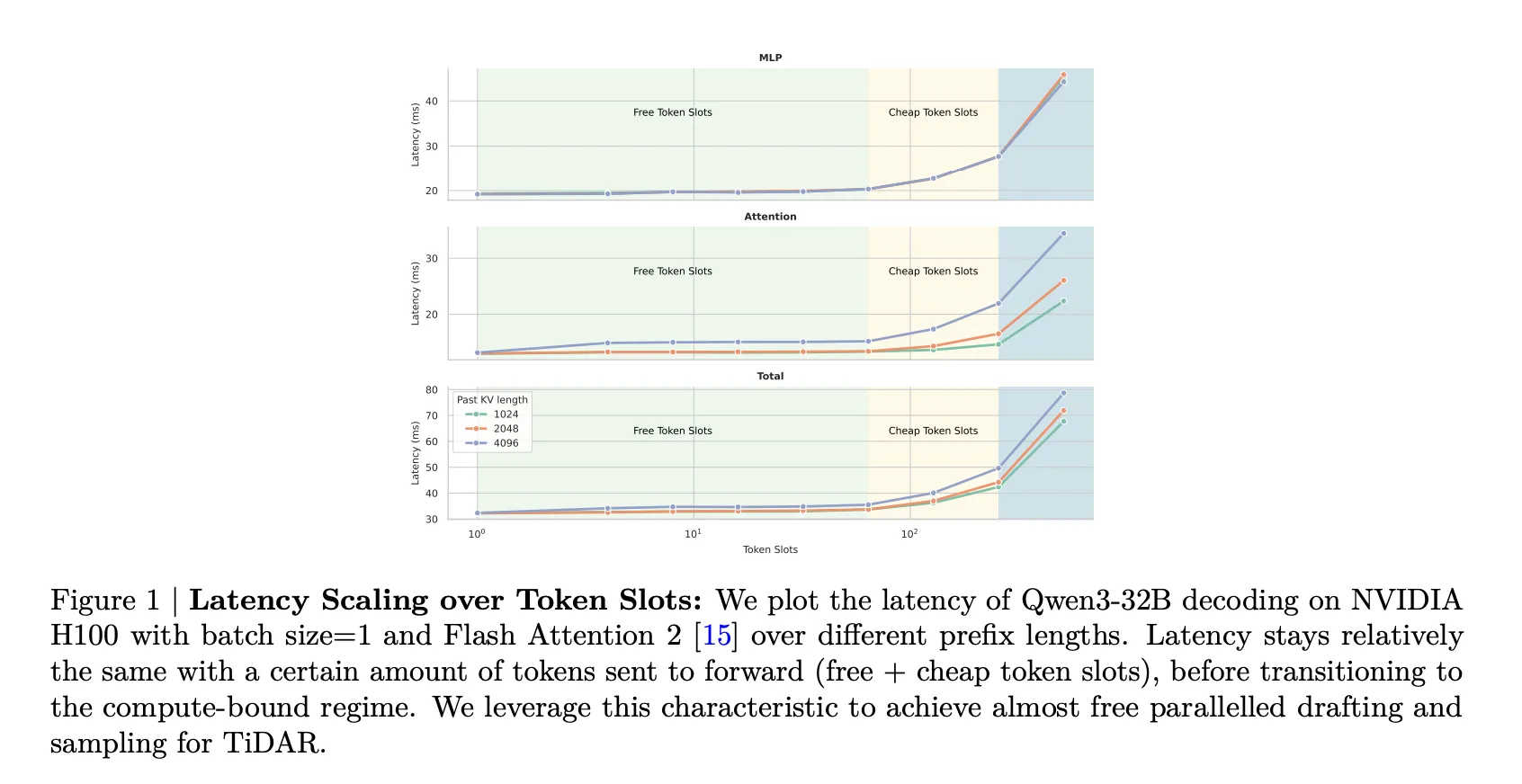

Autoregressive transformers decode one token per step. At realistic batch sizes, decoding is usually memory bound, because latency is dominated by loading weights and KV cache, not by floating point operations. Increasing the number of tokens in the input sequence within the memory-bound region does not change latency much, since the same parameters and cache are reused.
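
The memory-bound argument can be illustrated with a roofline-style estimate. The sketch below is only a toy model: the bandwidth and peak-FLOP figures are hypothetical round numbers rather than the specification of any real GPU, the function name is made up for this example, and KV cache traffic is ignored.

```python
def decode_step_latency_s(n_tokens, n_params, bytes_per_param=2,
                          mem_bw_bytes_s=3.0e12, peak_flops=8.0e14):
    """Toy roofline estimate for one forward pass.

    The step takes as long as the slower of:
      (a) streaming every weight from memory once, and
      (b) roughly 2 FLOPs per parameter per token of compute.
    All hardware numbers are illustrative assumptions.
    """
    memory_time = n_params * bytes_per_param / mem_bw_bytes_s
    compute_time = 2 * n_params * n_tokens / peak_flops
    return max(memory_time, compute_time)


base = decode_step_latency_s(1, 8e9)
for n in (1, 8, 32, 128, 512):
    ratio = decode_step_latency_s(n, 8e9) / base
    print(f"{n:4d} tokens per forward -> {ratio:.2f}x the 1-token latency")
```

Under these assumed numbers the estimated latency stays flat until the compute term overtakes the weight-loading term, which is the region where extra token positions are effectively free.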

Masked diffusion language models already exploit this. Given a prefix, they can append multiple masked positions and predict multiple tokens in parallel in one denoising step. The research team calls these additional positions free token slots, because profiling shows that sending more tokens in this regime barely changes the forward time.

However, diffusion LLMs like Dream and LLaDA still underperform strong autoregressive baselines on quality. When these models decode multiple tokens in the same step, they sample each token independently from a marginal distribution, given a noised context. This intra-step token independence hurts sequence-level coherence and factual correctness, and the best quality is usually obtained when decoding only 1 token per step. In practice, this removes much of the theoretical speed advantage of diffusion decoding.
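
A toy example makes the independence problem concrete. The two-token distribution below is invented purely for illustration: sampling each position from its own marginal puts probability mass on pairs that the joint distribution never produces.

```python
from itertools import product

# Hypothetical joint distribution over two adjacent tokens.
joint = {("New", "York"): 0.5, ("Los", "Angeles"): 0.5}


def marginals(joint_dist):
    """Per-position marginal distributions of a two-token joint."""
    first, second = {}, {}
    for (a, b), p in joint_dist.items():
        first[a] = first.get(a, 0.0) + p
        second[b] = second.get(b, 0.0) + p
    return first, second


first, second = marginals(joint)

# Sampling both positions independently multiplies the marginals.
independent = {(a, b): first[a] * second[b] for a, b in product(first, second)}

# Mass assigned to pairs such as ("New", "Angeles") that the joint excludes.
incoherent_mass = sum(p for pair, p in independent.items() if pair not in joint)
print(incoherent_mass)
```

In this toy case half of the independently sampled pairs are incoherent, even though each individual token is perfectly plausible on its own.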

TiDAR is designed to preserve the compute efficiency of diffusion while recovering autoregressive quality, using a single backbone and standard transformer infrastructure.

Architecture, dual-mode backbone and attention masks

At a high level, TiDAR partitions the sequence at each generation step into three sections:

- A prefix of accepted tokens.

- Tokens drafted in the previous step.

- Mask tokens that will hold pre-drafted candidates for the next step.

The model applies a structured attention mask across this sequence. Prefix tokens attend causally, which supports chain-factorized next-token prediction, as in a standard autoregressive transformer. Tokens in the drafting region and mask region attend bidirectionally within a block, which enables diffusion-style marginal predictions over many positions in parallel. This layout is a modification of the Block Diffusion mask, where only the decoding block is bidirectional and the rest of the sequence stays causal.
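
One reading of this layout can be sketched as a boolean mask. This is a simplified illustration, not the paper's exact mask: the function name, the equal block sizes, and the choice to let later blocks see earlier ones causally are all assumptions, and the conditioning of mask positions on acceptance outcomes is not modelled.

```python
def build_hybrid_mask(prefix_len, block_len, n_blocks=2):
    """Return mask[q][k] == True when query position q may attend to key k.

    Positions [0, prefix_len) are the causal prefix. The remaining positions
    are split into n_blocks blocks of block_len tokens (for example one block
    of drafted tokens and one block of mask tokens). Every position attends
    causally, and positions inside the same block also attend to each other
    in both directions.
    """
    total = prefix_len + block_len * n_blocks

    def block_id(i):
        return -1 if i < prefix_len else (i - prefix_len) // block_len

    mask = [[False] * total for _ in range(total)]
    for q in range(total):
        for k in range(total):
            causal = k <= q
            same_block = block_id(q) >= 0 and block_id(q) == block_id(k)
            mask[q][k] = causal or same_block
    return mask


# Print a small example: 3 prefix tokens followed by two blocks of 2.
for row in build_hybrid_mask(prefix_len=3, block_len=2):
    print("".join("x" if allowed else "." for allowed in row))
```

The printed grid is lower triangular over the prefix and has small fully filled squares on the diagonal for each block, which is the causal-plus-bidirectional pattern described in the text.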

To enable both modes in the same backbone, TiDAR doubles the sequence length at training time. The original input occupies the causal section, and a corrupted copy occupies the diffusion section. In the causal section, labels are shifted by 1 token to match the next-token prediction objective. In the diffusion section, labels are aligned with the input positions.

Crucially, TiDAR uses a full mask strategy. All tokens in the diffusion section are replaced by a special mask token, rather than sampling a sparse corruption pattern. This makes the diffusion loss dense, keeps the number of loss terms in the diffusion and autoregressive parts equal to the sequence length, and simplifies balancing the two losses with a single weighting factor. The research team set this weighting factor to 1 in most experiments.
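
The doubled training sequence and its labels can be sketched as follows. The mask symbol, the `IGNORE` marker and the function name are hypothetical, and how the final causal position is labelled is an assumption of this sketch rather than something stated in the article.

```python
MASK = "<mask>"   # hypothetical mask-token symbol
IGNORE = None     # marks a position that contributes no loss term here


def build_training_example(tokens):
    """Build inputs and labels for one doubled training sequence.

    Causal half:    the original tokens, labels shifted left by one.
    Diffusion half: every token replaced by MASK (full mask strategy),
                    labels aligned with the original positions.
    """
    causal_inputs = list(tokens)
    diffusion_inputs = [MASK] * len(tokens)
    causal_labels = list(tokens[1:]) + [IGNORE]   # next-token targets
    diffusion_labels = list(tokens)               # same-position targets
    return causal_inputs + diffusion_inputs, causal_labels + diffusion_labels


inputs, labels = build_training_example(["The", "cat", "sat"])
print(inputs)
print(labels)
```

Because every diffusion position is masked, each one carries a loss term, so the two halves contribute a comparable number of terms and a single weight is enough to balance them.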

Self-speculative generation in a single forward pass

Generation is formulated as a self-speculative process that runs in a single network function evaluation per step.

Step 1, given the prompt, TiDAR encodes the prefix causally and performs one-step diffusion over the mask positions, producing a block of drafted tokens.

Step 2 and later steps, each forward pass performs two operations at once:

- Verification of drafted tokens using autoregressive logits over the extended prefix with a rejection sampling rule, similar in spirit to speculative decoding.

- Pre-drafting of the next block using diffusion, conditioned on all possible acceptance outcomes of the current step.

Accepted tokens are added to the prefix, and their KV cache entries are retained. Rejected tokens are discarded, and their cache entries are evicted. Drafting and verification share the same backbone and attention mask, so diffusion computation uses the free token slots in the same forward pass.
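
The accept-or-reject bookkeeping can be sketched with a greedy prefix-match rule. This is a deliberate simplification: the article describes rejection sampling, whereas the hypothetical `verify_draft` below only compares each drafted token with the autoregressive head's top choice, and it does not model the parallel pre-drafting of the next block.

```python
def verify_draft(draft_tokens, ar_top_tokens):
    """Greedy prefix-match verification (a simplification of rejection sampling).

    ar_top_tokens[i] is assumed to be the autoregressive head's top token at
    draft position i, computed with draft_tokens[:i] in its context. Drafted
    tokens are accepted while they agree with the autoregressive choice. At
    the first disagreement the autoregressive token is emitted instead and
    the rest of the draft is discarded.

    Returns (emitted_tokens, n_draft_accepted). Only the first
    n_draft_accepted draft positions would keep their KV cache entries.
    """
    emitted = []
    n_accepted = 0
    for drafted, ar_choice in zip(draft_tokens, ar_top_tokens):
        if drafted == ar_choice:
            emitted.append(drafted)
            n_accepted += 1
        else:
            emitted.append(ar_choice)
            break
    return emitted, n_accepted


emitted, kept = verify_draft(["the", "cat", "sat", "on"],
                             ["the", "cat", "lay", "on"])
print(emitted, kept)
```

In this example two drafted tokens are accepted, the third is replaced by the autoregressive choice, and the fourth is dropped because its context is no longer valid.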

The model supports two sampling modes, trusting autoregressive predictions or trusting diffusion predictions, which control how strongly the final sample follows each head. Experiments show that for the 8B model, trusting diffusion predictions is often beneficial, especially on math benchmarks, while retaining autoregressive quality through rejection sampling.

On the systems side, the attention layout and number of tokens per step are fixed. TiDAR pre-initialises a block attention mask and reuses slices of this mask across decoding steps using Flex Attention. The architecture supports exact KV cache, like Block Diffusion. The implementation never recomputes KV entries for accepted tokens and introduces no extra inference-time hyperparameters.

Training recipe and model sizes

TiDAR is instantiated by continual pretraining from Qwen2.5 1.5B and Qwen3 4B and 8B base models. The 1.5B variant is trained on 50B tokens with block sizes 4, 8 and 16. The 8B variant is trained on 150B tokens with block size 16. Both use maximum sequence length 4096, cosine learning rate schedule, distributed Adam, BF16, and a modified Megatron LM framework with Torchtitan on NVIDIA H100 GPUs.

Evaluation covers coding tasks HumanEval, HumanEval Plus, MBPP, MBPP Plus, math tasks GSM8K and Minerva Math, factual and commonsense tasks MMLU, ARC, Hellaswag, PIQA, and Winogrande, all implemented via lm_eval_harness.

Quality and throughput results

On generative coding and math tasks, TiDAR 1.5B is highly competitive with its autoregressive counterpart, while generating an average of 7.45 tokens per model forward. TiDAR 8B incurs only minimal quality loss relative to Qwen3 8B while increasing generation efficiency to 8.25 tokens per forward pass.

On knowledge and reasoning benchmarks evaluated by likelihood, TiDAR 1.5B and 8B match the overall behaviour of comparable autoregressive models, because likelihoods are computed with a pure causal mask. Diffusion baselines such as Dream, LLaDA and Block Diffusion require Monte Carlo based likelihood estimators, which are more expensive and less directly comparable.

In wall-clock benchmarks on a single H100 GPU with batch size 1, TiDAR 1.5B reaches an average 4.71 times speedup in decoding throughput relative to Qwen2.5 1.5B, measured in tokens per second. TiDAR 8B reaches a 5.91 times speedup over Qwen3 8B, again while maintaining comparable quality.
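
The gap between tokens per forward and wall-clock speedup can be turned into a rough per-forward cost. The calculation below is a back-of-the-envelope sketch that assumes the autoregressive baseline emits exactly 1 token per forward pass and that the reported averages come from comparable workloads; the function name is made up for this example.

```python
def implied_forward_cost(tokens_per_forward, speedup):
    """Relative wall-clock cost of one TiDAR forward versus one baseline forward.

    If the baseline emits 1 token per forward and TiDAR emits
    tokens_per_forward, then speedup = tokens_per_forward / relative_cost.
    """
    return tokens_per_forward / speedup


for name, tpf, speedup in [("TiDAR 1.5B", 7.45, 4.71), ("TiDAR 8B", 8.25, 5.91)]:
    cost = implied_forward_cost(tpf, speedup)
    print(f"{name}: each forward costs about {cost:.2f}x a baseline forward")
```

Under those assumptions each TiDAR forward costs roughly 1.58 times (1.5B) and 1.40 times (8B) a baseline forward, so the extra token slots are cheap but not entirely free.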

Compared with diffusion LLMs, TiDAR consistently outperforms Dream and LLaDA in both efficiency and accuracy, under the constraint that diffusion models decode 1 token per forward pass for best quality. Compared with speculative frameworks such as EAGLE-3 and training-matched Block Diffusion, TiDAR dominates the efficiency-quality frontier by converting more tokens per forward into real tokens per second, thanks to the unified backbone and parallel drafting and verification.

Key Takeaways

- TiDAR is a sequence-level hybrid architecture that drafts tokens with diffusion and samples them autoregressively in a single model pass, using a structured attention mask that combines causal and bidirectional regions.

- The design explicitly exploits free token slots on GPUs. It appends diffusion-drafted and masked tokens to the prefix so that many positions are processed in one forward pass with almost unchanged latency, improving compute density during decoding.

- TiDAR implements self-speculative generation. The same backbone both drafts candidate tokens with one-step diffusion and verifies them with autoregressive logits and rejection sampling, which avoids the separate draft model overhead of classic speculative decoding.

- Continual pretraining from Qwen2.5 1.5B and Qwen3 4B and 8B with a full mask diffusion objective allows TiDAR to reach autoregressive-level quality on coding, math and knowledge benchmarks, while keeping exact likelihood evaluation through pure causal masking when needed.

- In single GPU, batch size 1 settings, TiDAR delivers about 4.71 times more tokens per second for the 1.5B model and 5.91 times for the 8B model than their autoregressive baselines, while outperforming diffusion LLMs like Dream and LLaDA and closing the quality gap with strong autoregressive models.

Comparison

| Aspect | Standard autoregressive transformer | Diffusion LLMs (Dream, LLaDA class) | Speculative decoding (EAGLE-3 class) | TiDAR |
|---|---|---|---|---|
| Core idea | Predicts exactly 1 next token per forward pass using causal attention | Iteratively denoises masked or corrupted sequences and predicts many tokens in parallel per step | Uses a draft path to propose multiple tokens, target model verifies and accepts a subset | Single backbone drafts with diffusion and verifies with autoregression in the same forward pass |
| Drafting mechanism | None, every token is produced only by the main model | Diffusion denoising over masked positions, often with block or random masking | Lightweight or truncated transformer produces draft tokens from the current state | One-step diffusion in a bidirectional block over mask tokens appended after the prefix |
| Verification mechanism | Not separate, sampling uses logits from the same causal forward | Usually none, sampling trusts diffusion marginals within each step, which can reduce sequence-level coherence | Target model recomputes logits for candidate tokens and performs rejection sampling against the draft distribution | Same backbone produces autoregressive logits on the prefix that verify diffusion drafts through rejection sampling |
| Number of models at inference | Single model | Single model | At least one draft model plus one target model in the classic setup | Single model, no extra networks or heads beyond AR and diffusion output projections |
| Token parallelism per forward | 1 new decoded token per network function evaluation | Many masked tokens updated in parallel, effective window depends on schedule and remasking policy | Multiple draft tokens per step, final accepted tokens usually fewer than drafted ones | Around 7.45 tokens per forward for 1.5B and around 8.25 tokens per forward for 8B under the reported setup |
| Typical single GPU decoding speedup vs AR (batch size 1) | Baseline reference, defined as 1 times | Best tuned variants can reach around 3 times throughput versus strong AR baselines, often with quality trade-offs on math and coding tasks | Empirical reports show around 2 to 2.5 times throughput versus native autoregressive decoding | Reported 4.71 times speedup for 1.5B and 5.91 times for 8B compared to matched autoregressive Qwen baselines on a single H100 with batch size 1 |
| Quality versus strong AR baseline | Reference quality on coding, math and knowledge benchmarks | Competitive in some regimes but sensitive to decoding schedule, quality can drop when step count is reduced to chase speed | Usually close to target model quality when acceptance rate is high, can degrade when draft model is weak or misaligned | Matches or closely tracks autoregressive Qwen baselines on coding, math and knowledge tasks while achieving much higher throughput |
| Likelihood evaluation support | Exact log likelihood under causal factorisation, standard lm eval harness compatible | Often needs Monte Carlo style estimators or approximations for sequence-level likelihood | Uses the original autoregressive model for log likelihood, so evaluation is exact but does not use the speed tricks | Uses pure causal mask during evaluation, so likelihoods are computed exactly like an autoregressive transformer |
| KV cache behaviour | Standard cache, reused for all previous tokens, one token added per step | Cache use depends on specific diffusion design, some methods repeatedly rewrite long segments, which increases cache churn | Needs KV cache for both draft and target models, plus extra bookkeeping for verified and rejected tokens | Exact KV cache sharing across diffusion and autoregressive parts, accepted tokens are cached once and never recomputed, rejected tokens are evicted |

TiDAR is a useful step toward bridging autoregressive decoding and diffusion language models using one unified backbone. By exploiting free token slots and self-speculative generation, it raises tokens per network function evaluation without materially degrading GSM8K, HumanEval, or MMLU performance relative to Qwen baselines. The full mask diffusion objective and exact KV cache support also make it practical for production-style serving on H100 GPUs. Overall, TiDAR shows that diffusion drafting and autoregressive verification can coexist in a single efficient LLM architecture.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
