NVIDIA introduced immediately a major enlargement of its strategic collaboration with Mistral AI. This partnership coincides with the discharge of the brand new Mistral 3 frontier open mannequin household, marking a pivotal second the place {hardware} acceleration and open-source mannequin structure have converged to redefine efficiency benchmarks.

This collaboration is a large leap in inference velocity: the brand new fashions now run as much as 10x quicker on NVIDIA GB200 NVL72 programs in comparison with the earlier era H200 programs. This breakthrough unlocks unprecedented effectivity for enterprise-grade AI, promising to unravel the latency and value bottlenecks which have traditionally plagued the large-scale deployment of reasoning fashions.

A Generational Leap: 10x Quicker on Blackwell

As enterprise demand shifts from easy chatbots to high-reasoning, long-context brokers, inference effectivity has grow to be the essential bottleneck. The collaboration between NVIDIA and Mistral AI addresses this head-on by optimizing the Mistral 3 household particularly for the NVIDIA Blackwell structure.

The place manufacturing AI programs should ship each sturdy consumer expertise (UX) and cost-efficient scale, the NVIDIA GB200 NVL72 supplies as much as 10x increased efficiency than the previous-generation H200. This isn’t merely a achieve in uncooked velocity; it interprets to considerably increased power effectivity. The system exceeds 5,000,000 tokens per second per megawatt (MW) at consumer interactivity charges of 40 tokens per second.

For information facilities grappling with energy constraints, this effectivity achieve is as essential because the efficiency increase itself. This generational leap ensures a decrease per-token value whereas sustaining the excessive throughput required for real-time functions.

A New Mistral 3 Household

The engine driving this efficiency is the newly launched Mistral 3 household. This suite of fashions delivers industry-leading accuracy, effectivity, and customization capabilities, masking the spectrum from large information heart workloads to edge system inference.

Mistral Massive 3: The Flagship MoE

On the high of the hierarchy sits Mistral Massive 3, a state-of-the-art sparse Multimodal and Multilingual Combination-of-Specialists (MoE) mannequin.

- Complete Parameters: 675 Billion

- Lively Parameters: 41 Billion

- Context Window: 256K tokens

Skilled on NVIDIA Hopper GPUs, Mistral Massive 3 is designed to deal with advanced reasoning duties, providing parity with top-tier closed fashions whereas retaining the flexibleness of open weights.

Ministral 3: Dense Energy on the Edge

Complementing the big mannequin is the Ministral 3 collection, a set of small, dense, high-performance fashions designed for velocity and flexibility.

- Sizes: 3B, 8B, and 14B parameters.

- Variants: Base, Instruct, and Reasoning for every dimension (9 fashions complete).

- Context Window: 256K tokens throughout the board.

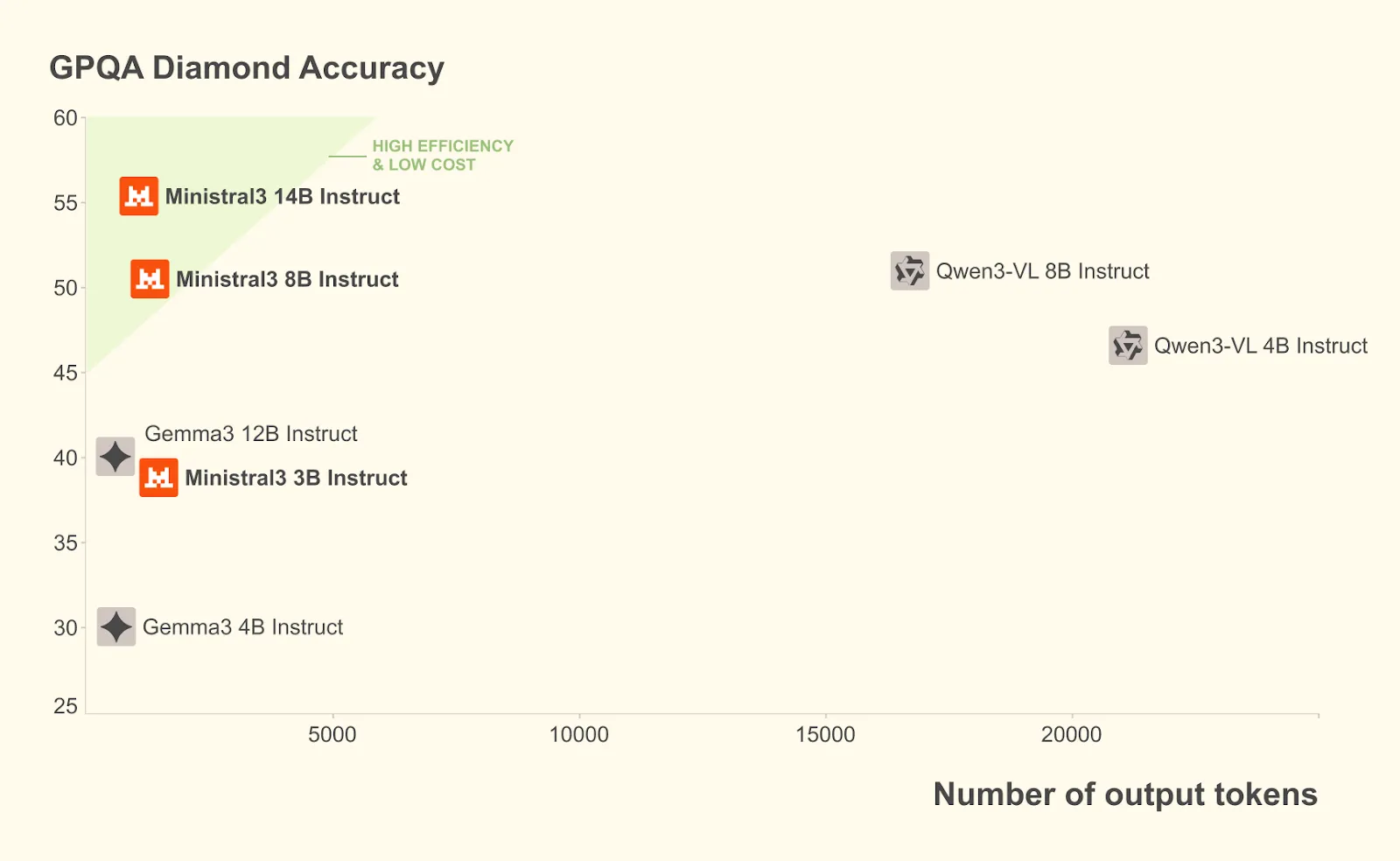

The Ministral 3 collection excel at GPQA Diamond Accuracy benchmark by using 100 much less tokens whereas supply increased accuracy :

Important Engineering Behind the Pace: A Complete Optimization Stack

The “10x” efficiency declare is pushed by a complete stack of optimizations co-developed by Mistral and NVIDIA engineers. The groups adopted an “excessive co-design” strategy, merging {hardware} capabilities with mannequin structure changes.

TensorRT-LLM Huge Knowledgeable Parallelism (Huge-EP)

To totally exploit the large scale of the GB200 NVL72, NVIDIA employed Huge Knowledgeable Parallelism inside TensorRT-LLM. This expertise supplies optimized MoE GroupGEMM kernels, skilled distribution, and cargo balancing.

Crucially, Huge-EP exploits the NVL72’s coherent reminiscence area and NVLink cloth. It’s extremely resilient to architectural variations throughout giant MoEs. As an illustration, Mistral Massive 3 makes use of roughly 128 specialists per layer, about half as many as comparable fashions like DeepSeek-R1. Regardless of this distinction, Huge-EP allows the mannequin to comprehend the high-bandwidth, low-latency, non-blocking advantages of the NVLink cloth, making certain that the mannequin’s large dimension doesn’t lead to communication bottlenecks.

Native NVFP4 Quantization

One of the vital technical developments on this launch is the help for NVFP4, a quantization format native to the Blackwell structure.

For Mistral Massive 3, builders can deploy a compute-optimized NVFP4 checkpoint quantized offline utilizing the open-source llm-compressor library.

This strategy reduces compute and reminiscence prices whereas strictly sustaining accuracy. It leverages NVFP4’s higher-precision FP8 scaling components and finer-grained block scaling to regulate quantization error. The recipe particularly targets the MoE weights whereas protecting different elements at unique precision, permitting the mannequin to deploy seamlessly on the GB200 NVL72 with minimal accuracy loss.

Disaggregated Serving with NVIDIA Dynamo

Mistral Massive 3 makes use of NVIDIA Dynamo, a low-latency distributed inference framework, to disaggregate the prefill and decode phases of inference.

In conventional setups, the prefill part (processing the enter immediate) and the decode part (producing the output) compete for sources. By rate-matching and disaggregating these phases, Dynamo considerably boosts efficiency for long-context workloads, akin to 8K enter/1K output configurations. This ensures excessive throughput even when using the mannequin’s large 256K context window.

From Cloud to Edge: Ministral 3 Efficiency

The optimization efforts lengthen past the large information facilities. Recognizing the rising want for native AI, the Ministral 3 collection is engineered for edge deployment, providing flexibility for a wide range of wants.

RTX and Jetson Acceleration

The dense Ministral fashions are optimized for platforms just like the NVIDIA GeForce RTX AI PC and NVIDIA Jetson robotics modules.

- RTX 5090: The Ministral-3B variants can attain blistering inference speeds of 385 tokens per second on the NVIDIA RTX 5090 GPU. This brings workstation-class AI efficiency to native PCs, enabling quick iteration and better information privateness.

- Jetson Thor: For robotics and edge AI, builders can use the vLLM container on NVIDIA Jetson Thor. The Ministral-3-3B-Instruct mannequin achieves 52 tokens per second for single concurrency, scaling as much as 273 tokens per second with a concurrency of 8.

Broad Framework Assist

NVIDIA has collaborated with the open-source group to make sure these fashions are usable in every single place.

- Llama.cpp & Ollama: NVIDIA collaborated with these well-liked frameworks to make sure quicker iteration and decrease latency for native improvement.

- SGLang: NVIDIA collaborated with SGLang to create an implementation of Mistral Massive 3 that helps each disaggregation and speculative decoding.

- vLLM: NVIDIA labored with vLLM to develop help for kernel integrations, together with speculative decoding (EAGLE), Blackwell help, and expanded parallelism.

Manufacturing-Prepared with NVIDIA NIM

To streamline enterprise adoption, the brand new fashions shall be out there by NVIDIA NIM microservices.

Mistral Massive 3 and Ministral-14B-Instruct are presently out there by the NVIDIA API catalog and preview API. Quickly, enterprise builders will be capable of use downloadable NVIDIA NIM microservices. This supplies a containerized, production-ready resolution that permits enterprises to deploy the Mistral 3 household with minimal setup on any GPU-accelerated infrastructure.

This availability ensures that the particular “10x” efficiency benefit of the GB200 NVL72 could be realized in manufacturing environments with out advanced customized engineering, democratizing entry to frontier-class intelligence.

Conclusion: A New Commonplace for Open Intelligence

The discharge of the NVIDIA-accelerated Mistral 3 open mannequin household represents a serious leap for AI within the open-source group. By providing frontier-level efficiency beneath an open supply license, and backing it with a strong {hardware} optimization stack, Mistral and NVIDIA are assembly builders the place they’re.

From the large scale of the GB200 NVL72 using Huge-EP and NVFP4, to the edge-friendly density of Ministral on an RTX 5090, this partnership delivers a scalable, environment friendly path for synthetic intelligence. With upcoming optimizations akin to speculative decoding with multitoken prediction (MTP) and EAGLE-3 anticipated to push efficiency even additional, the Mistral 3 household is poised to grow to be a foundational aspect of the subsequent era of AI functions.

Obtainable to check!

In case you are a developer trying to benchmark these efficiency beneficial properties, you may obtain the Mistral 3 fashions instantly from Hugging Face or check the deployment-free hosted variations on construct.nvidia.com/mistralai to guage the latency and throughput on your particular use case.

Try the Fashions on Hugging Face. Yow will discover particulars on Company Weblog and Technical/Developer Weblog.

Due to the NVIDIA AI group for the thought management/ Sources for this text. NVIDIA AI group has supported this content material/article.

Jean-marc is a profitable AI enterprise govt .He leads and accelerates progress for AI powered options and began a pc imaginative and prescient firm in 2006. He’s a acknowledged speaker at AI conferences and has an MBA from Stanford.

Elevate your perspective with NextTech Information, the place innovation meets perception.

Uncover the most recent breakthroughs, get unique updates, and join with a world community of future-focused thinkers.

Unlock tomorrow’s tendencies immediately: learn extra, subscribe to our publication, and grow to be a part of the NextTech group at NextTech-news.com