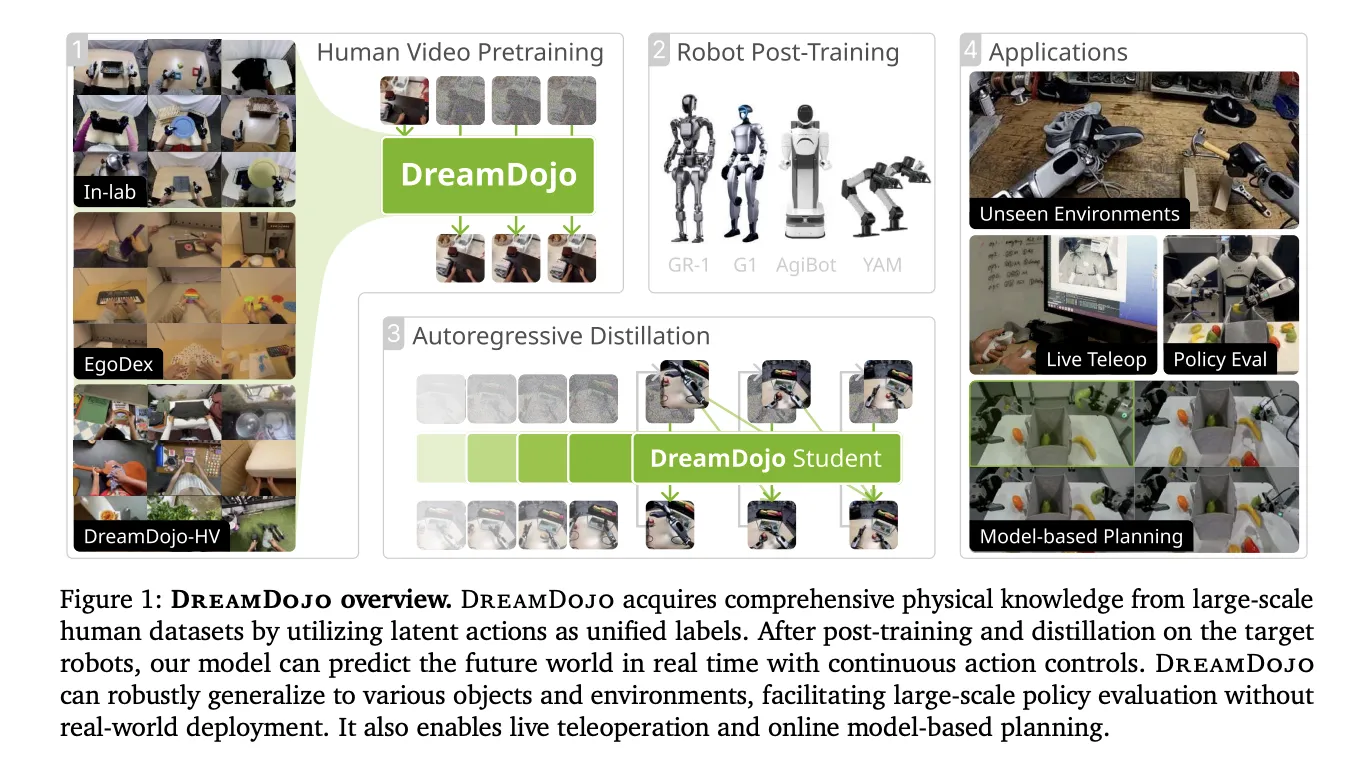

Building simulators for robots has been a long-standing challenge. Traditional engines require manual coding of physics and perfect 3D models. NVIDIA is changing this with DreamDojo, a fully open-source, generalizable robot world model. Instead of using a physics engine, DreamDojo ‘dreams’ the results of robot actions directly in pixels.

Scaling Robotics with 44k+ Hours of Human Experience

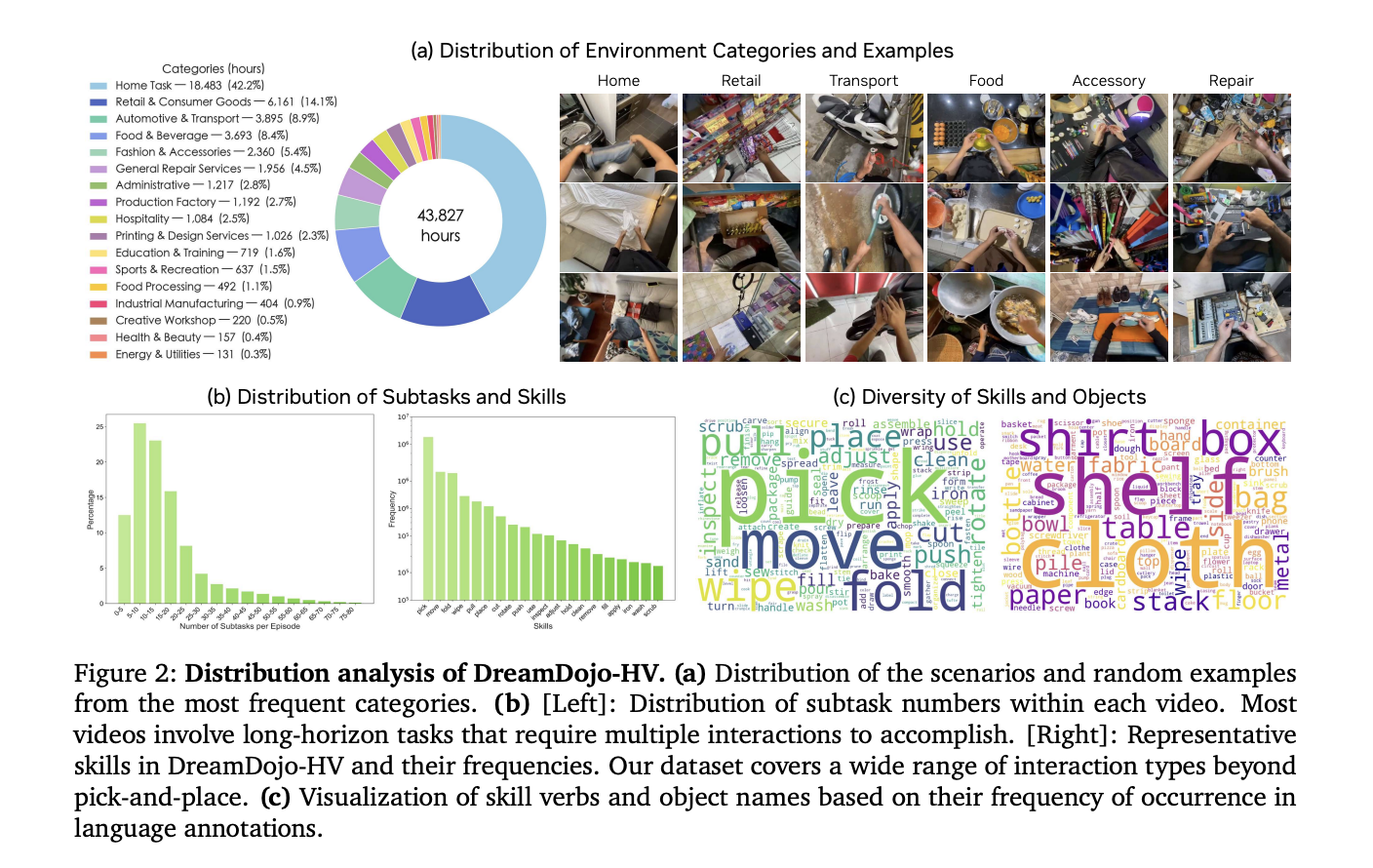

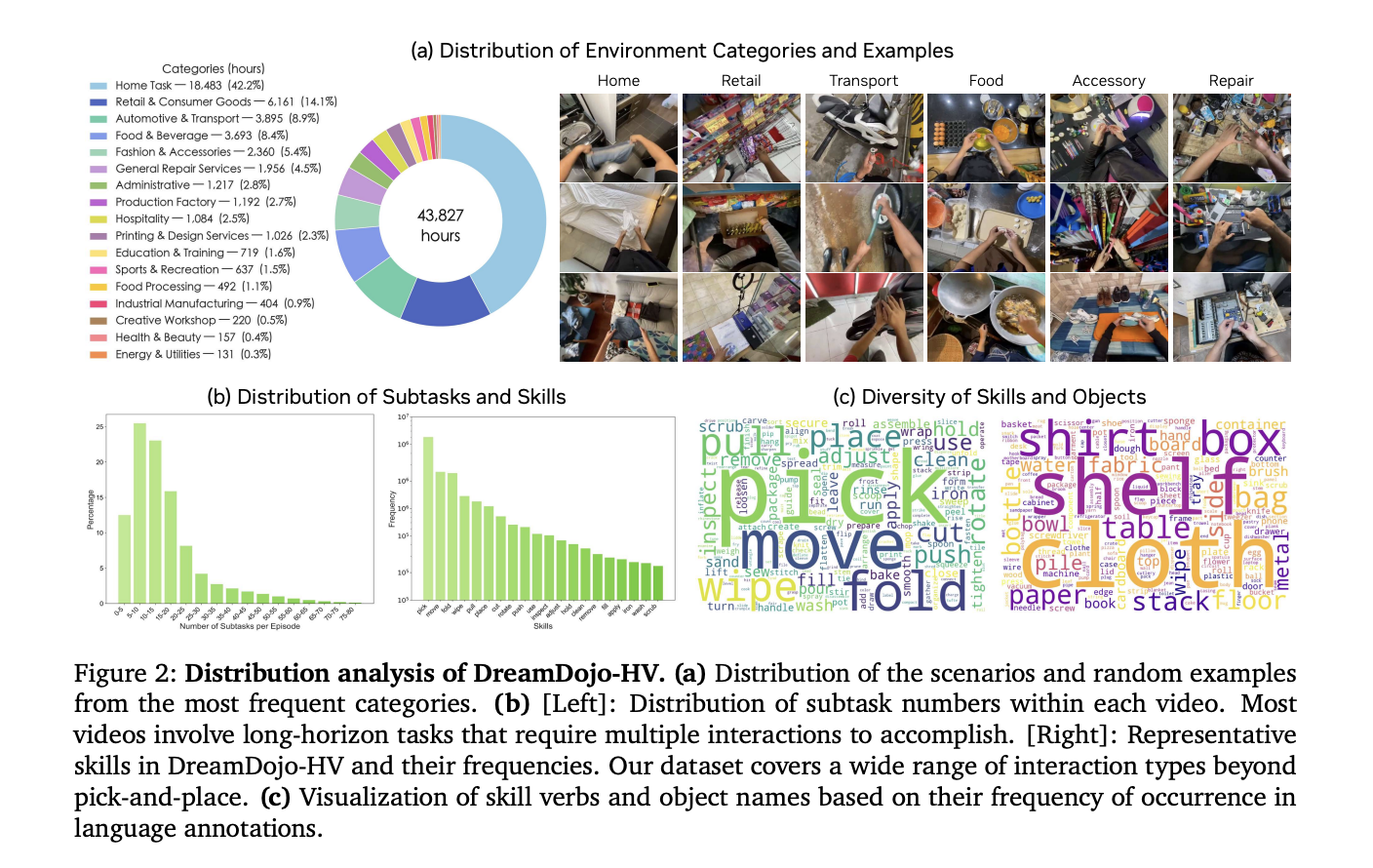

The biggest hurdle for AI in robotics is data. Collecting robot-specific data is expensive and slow. DreamDojo addresses this by learning from 44k+ hours of egocentric human videos. This dataset, called DreamDojo-HV, is the largest of its kind for world model pretraining.

- It features 6,015 unique tasks across 1M+ trajectories.

- The data covers 9,869 unique scenes and 43,237 unique objects.

- Pretraining used 100,000 NVIDIA H100 GPU hours to build 2B and 14B model variants.

Humans have already mastered complex physics, such as pouring liquids or folding clothes. DreamDojo uses this human data to give robots a ‘common sense’ understanding of how the world works.

Bridging the Gap with Latent Actions

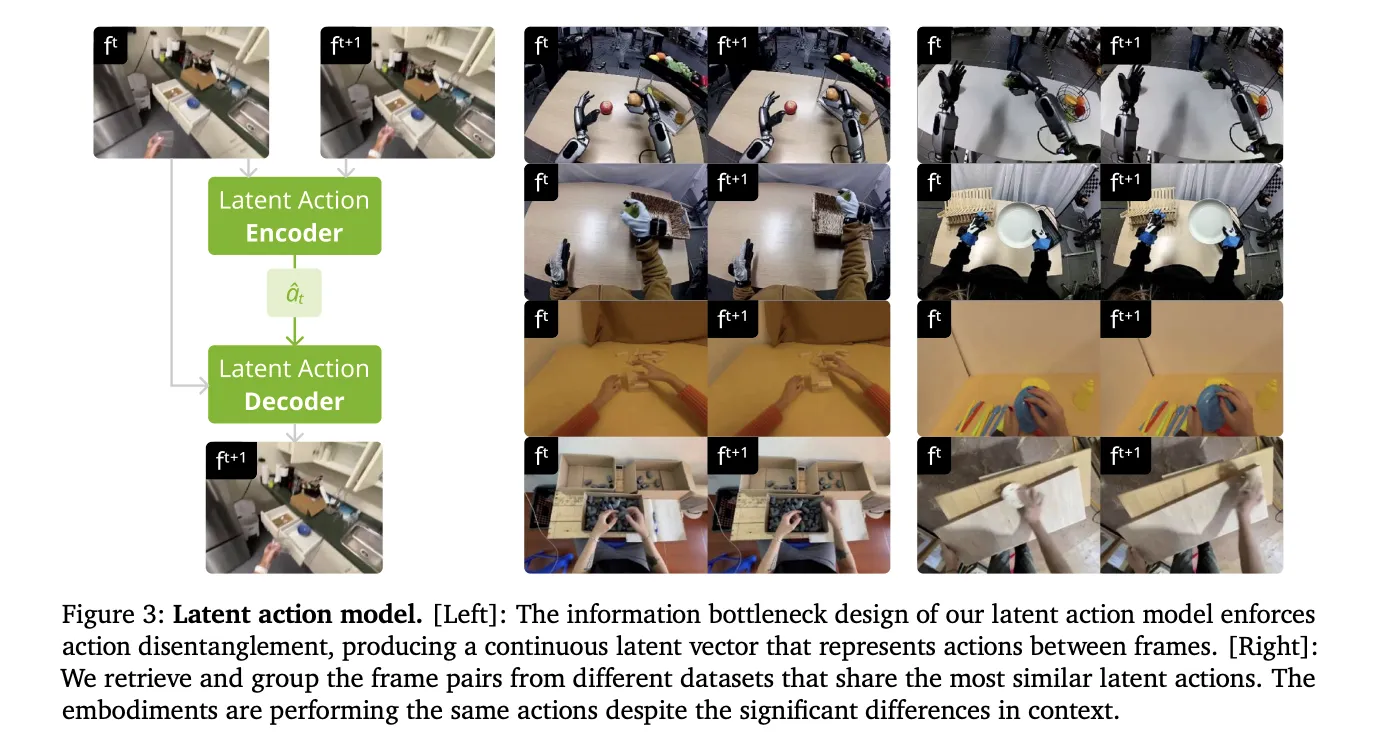

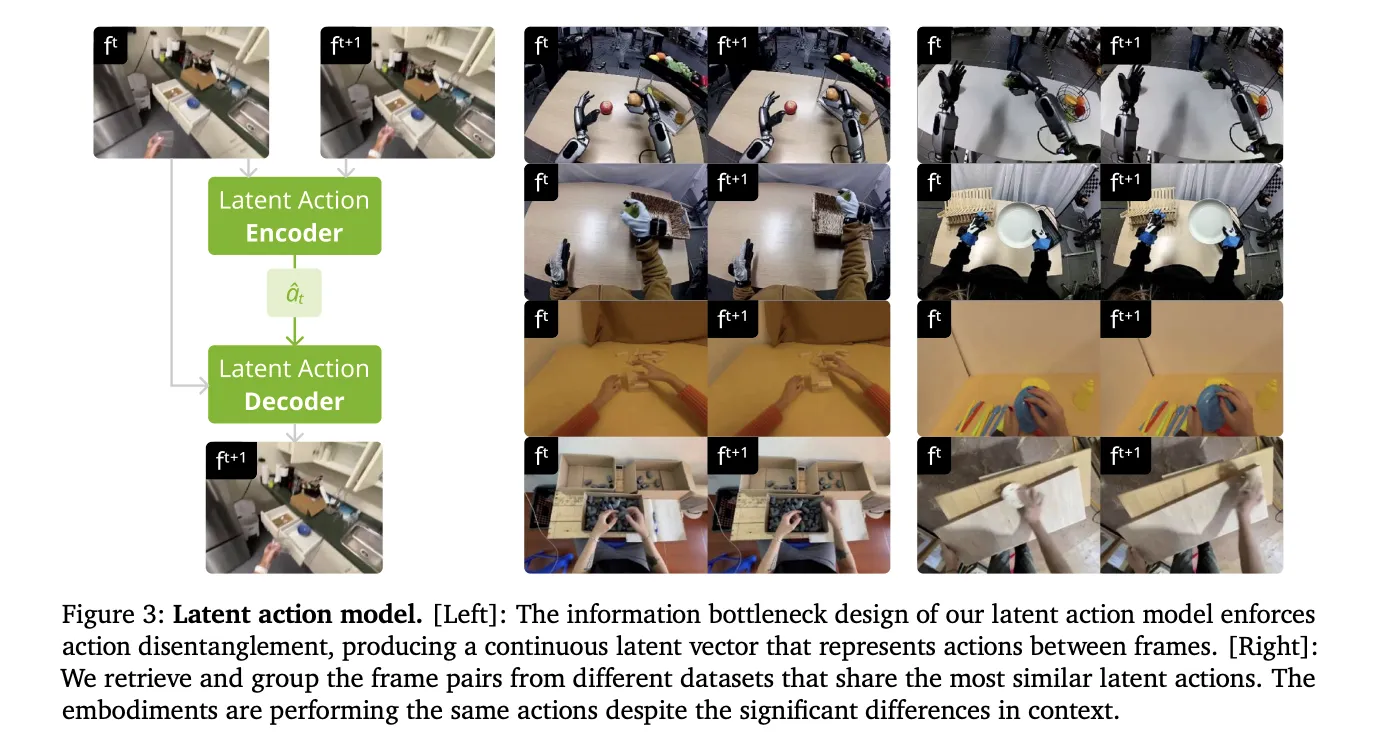

Human videos do not come with robot motor commands. To make these videos ‘robot-readable,’ NVIDIA’s research team introduced continuous latent actions. This system uses a spatiotemporal Transformer VAE to extract actions directly from pixels.

- The VAE encoder takes 2 consecutive frames and outputs a 32-dimensional latent vector.

- This vector represents the most critical motion between frames.

- The design creates an information bottleneck that disentangles action from visual context.

- This allows the model to learn physics from humans and apply it to different robot bodies.
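The encoder interface described above (two frames in, one 32-dimensional latent action out) can be sketched in plain Python. This is only a toy stand-in: a single random linear layer replaces the spatiotemporal Transformer, and the class name `ToyLatentActionEncoder`, the 4x4 frames, and the KL helper are all assumptions made for illustration, not DreamDojo's code.

```python
import math
import random

LATENT_DIM = 32  # latent action size stated in the article


class ToyLatentActionEncoder:
    """Hypothetical stand-in for the VAE encoder: one random linear layer.

    It only illustrates the interface (2 frames in, 32-dim latent out),
    not the spatiotemporal Transformer used by the real model.
    """

    def __init__(self, num_pixels, seed=0):
        self.rng = random.Random(seed)
        in_dim = 2 * num_pixels  # two consecutive frames, flattened
        scale = 1.0 / math.sqrt(in_dim)
        # First 32 rows produce the mean, the next 32 the log-variance.
        self.weights = [
            [self.rng.gauss(0.0, scale) for _ in range(in_dim)]
            for _ in range(2 * LATENT_DIM)
        ]

    def encode(self, frame_t, frame_next):
        x = list(frame_t) + list(frame_next)
        out = [sum(w * v for w, v in zip(row, x)) for row in self.weights]
        return out[:LATENT_DIM], out[LATENT_DIM:]

    def sample(self, frame_t, frame_next):
        # Reparameterization trick: z = mean + std * eps, eps ~ N(0, 1).
        mean, log_var = self.encode(frame_t, frame_next)
        return [
            m + math.exp(0.5 * lv) * self.rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)
        ]


def kl_to_standard_normal(mean, log_var):
    # KL(N(mean, var) || N(0, I)), the usual VAE regularizer.
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mean, log_var))


frame_a = [0.0] * 16           # hypothetical 4x4 grayscale frame, flattened
frame_b = [0.0] * 15 + [1.0]   # same frame with one pixel changed
encoder = ToyLatentActionEncoder(num_pixels=16)
latent_action = encoder.sample(frame_a, frame_b)
```

In a standard VAE, the small latent size together with the KL term limits how much information the latent can carry, which is one common way to obtain the kind of bottleneck the article describes.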

Better Physics through Architecture

DreamDojo is based on the Cosmos-Predict2.5 latent video diffusion model. It uses the WAN2.2 tokenizer, which has a temporal compression ratio of 4. The team improved the architecture with 3 key features:

- Relative Actions: The model uses joint deltas instead of absolute poses. This makes it easier for the model to generalize across different trajectories.

- Chunked Action Injection: It injects 4 consecutive actions into each latent frame. This aligns the actions with the tokenizer’s compression ratio and fixes causality confusion.

- Temporal Consistency Loss: A new loss function matches predicted frame velocities to ground-truth transitions. This reduces visual artifacts and keeps objects physically consistent.
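The three ideas in this list can be illustrated with a minimal sketch. The function names are hypothetical, and the loss is written as a mean squared error on frame-to-frame differences, which is an assumption: the article does not give the exact formula DreamDojo uses.

```python
CHUNK = 4  # temporal compression ratio of the tokenizer, per the article


def to_relative(joint_poses):
    """Convert absolute joint poses into per-step joint deltas."""
    return [
        [b - a for a, b in zip(prev, curr)]
        for prev, curr in zip(joint_poses, joint_poses[1:])
    ]


def chunk_actions(actions, chunk=CHUNK):
    """Group consecutive actions so each latent frame receives `chunk` of them."""
    if len(actions) % chunk != 0:
        raise ValueError("number of actions must be a multiple of the chunk size")
    return [actions[i:i + chunk] for i in range(0, len(actions), chunk)]


def temporal_consistency_loss(pred_frames, true_frames):
    """Assumed form: MSE between predicted and ground-truth frame differences."""
    if len(pred_frames) < 2 or len(pred_frames) != len(true_frames):
        raise ValueError("need two or more frames and matching sequence lengths")
    total, count = 0.0, 0
    for t in range(len(pred_frames) - 1):
        for p0, p1, g0, g1 in zip(pred_frames[t], pred_frames[t + 1],
                                  true_frames[t], true_frames[t + 1]):
            total += ((p1 - p0) - (g1 - g0)) ** 2
            count += 1
    return total / count


# Hypothetical 2-joint trajectory: 9 poses give 8 deltas, i.e. 2 chunks of 4.
poses = [[0.5 * t, 1.0 * t] for t in range(9)]
deltas = to_relative(poses)
chunks = chunk_actions(deltas)

# Toy 2-pixel "frames": a constant brightness offset leaves velocities intact,
# while a frozen prediction does not.
true_frames = [[0.0, 1.0], [1.0, 2.0]]
offset_pred = [[0.5, 1.5], [1.5, 2.5]]
frozen_pred = [[0.0, 1.0], [0.0, 1.0]]
loss_offset = temporal_consistency_loss(offset_pred, true_frames)
loss_frozen = temporal_consistency_loss(frozen_pred, true_frames)
```

In this toy case the offset prediction incurs no velocity penalty, while the frozen one is penalized for missing the motion, which is the behavior a velocity-matching loss is meant to encourage.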

Distillation for 10.81 FPS Real-Time Interaction

A simulator is only useful if it is fast. Standard diffusion models require too many denoising steps for real-time use. The NVIDIA team used a Self Forcing distillation pipeline to solve this.

- The distillation training was conducted on 64 NVIDIA H100 GPUs.

- The ‘student’ model reduces denoising from 35 steps down to 4 steps.

- The final model achieves a real-time speed of 10.81 FPS.

- It is stable for continuous rollouts of 60 seconds (600 frames).
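The figures in this list can be related with a little arithmetic. The sketch below assumes that the 60-second, 600-frame pairing implies a 10 FPS playback rate; that rate is inferred from the two numbers and is not stated explicitly in the article.

```python
TEACHER_STEPS = 35     # denoising steps before distillation
STUDENT_STEPS = 4      # denoising steps after distillation
GENERATION_FPS = 10.81
ROLLOUT_FRAMES = 600
ROLLOUT_SECONDS = 60

# Reduction in denoising steps per frame (not necessarily the wall-clock speedup).
step_reduction = TEACHER_STEPS / STUDENT_STEPS

# Time budget the distilled model spends on each frame, in milliseconds.
per_frame_ms = 1000.0 / GENERATION_FPS

# Playback rate implied by 600 frames over 60 seconds (an inference).
playback_fps = ROLLOUT_FRAMES / ROLLOUT_SECONDS

# Wall-clock time needed to generate the full rollout.
generation_seconds = ROLLOUT_FRAMES / GENERATION_FPS

print(f"{step_reduction:.2f}x fewer steps, {per_frame_ms:.1f} ms per frame")
print(f"{generation_seconds:.1f} s to generate {ROLLOUT_SECONDS} s of video")
```

Under that assumption, generation at 10.81 FPS stays slightly ahead of 10 FPS playback, which is consistent with the article's description of the rollout as real-time.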

Unlocking Downstream Applications

DreamDojo’s speed and accuracy enable several advanced applications for AI engineers.

1. Reliable Policy Evaluation

Testing robots in the real world is risky. DreamDojo acts as a high-fidelity simulator for benchmarking.

- Its simulated success rates show a Pearson correlation of r = 0.995 with real-world results.

- The Mean Maximum Rank Violation (MMRV) is only 0.003.
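Both metrics compare per-policy success rates measured in simulation against those measured on real hardware. The sketch below shows how they could be computed. The MMRV formula follows the definition used in sim-to-real evaluation work such as SIMPLER as I understand it, which is an assumption since the article does not define the metric, and the success rates are made-up illustrative numbers, not DreamDojo results.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def mmrv(sim, real):
    """Mean Maximum Rank Violation (assumed definition).

    For each policy i, take the largest real-world gap |real_i - real_j|
    over all policies j that the simulator ranks in the wrong order
    relative to i, then average those maxima over all policies.
    """
    n = len(sim)
    worst = []
    for i in range(n):
        violations = [
            abs(real[i] - real[j])
            for j in range(n)
            if (sim[i] < sim[j]) != (real[i] < real[j])
        ]
        worst.append(max(violations, default=0.0))
    return sum(worst) / n


# Illustrative success rates for three hypothetical policies.
real_rates = [0.1, 0.4, 0.9]
sim_consistent = [0.2, 0.5, 0.8]   # same ranking as the real world
sim_swapped = [0.5, 0.2, 0.8]      # first two policies ranked the wrong way

r_consistent = pearson_r(sim_consistent, real_rates)
mmrv_consistent = mmrv(sim_consistent, real_rates)
mmrv_swapped = mmrv(sim_swapped, real_rates)
```

A low MMRV means that when the simulator does misrank two policies, those policies were close in real-world performance anyway, which complements the correlation figure.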

2. Model-Based Planning

Robots can use DreamDojo to ‘look ahead.’ A robot can simulate multiple action sequences and pick the best one.

- In a fruit-packing task, this improved real-world success rates by 17%.

- Compared to random sampling, it provided a 2x increase in success.
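The ‘simulate several candidates, keep the best’ loop can be sketched as follows. The world model and scoring function here are hypothetical one-dimensional toys; in the real system the world model would predict video frames and some learned or hand-written criterion would score them, details the article does not specify.

```python
import random


def plan_with_world_model(world_model, score, state, candidates):
    """Roll out every candidate action sequence and return the best-scoring one."""
    best_actions, best_score = None, float("-inf")
    for actions in candidates:
        predicted = state
        for action in actions:
            predicted = world_model(predicted, action)  # imagined next state
        value = score(predicted)
        if value > best_score:
            best_actions, best_score = actions, value
    return best_actions, best_score


# Hypothetical 1-D toy: the state is a position and the goal is position 3.0.
def toy_world_model(position, action):
    return position + action


def toy_score(position):
    return -abs(position - 3.0)


rng = random.Random(0)
candidates = [[rng.choice([-1.0, 0.0, 1.0]) for _ in range(3)] for _ in range(20)]
candidates.append([1.0, 1.0, 1.0])  # ensure one sequence reaches the goal

best_actions, best_score = plan_with_world_model(
    toy_world_model, toy_score, 0.0, candidates
)
```

Only the selected sequence would then be executed on the real robot, so poor candidates are discarded in imagination rather than on hardware.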

3. Live Teleoperation

Developers can teleoperate virtual robots in real time. The NVIDIA team demonstrated this using a PICO VR controller and a local desktop with an NVIDIA RTX 5090. This allows for safe and rapid data collection.

Summary of Model Performance

| Metric | DREAMDOJO-2B | DREAMDOJO-14B |
| --- | --- | --- |
| Physics Correctness | 62.50% | 73.50% |
| Action Following | 63.45% | 72.55% |
| FPS (Distilled) | 10.81 | N/A |

NVIDIA has released all weights, training code, and evaluation benchmarks. This open-source release allows you to post-train DreamDojo on your own robot data today.

Key Takeaways

- Massive Scale and Diversity: DreamDojo is pretrained on DreamDojo-HV, the largest egocentric human video dataset to date, featuring 44,711 hours of footage across 6,015 unique tasks and 9,869 scenes.

- Unified Latent Action Proxy: To overcome the lack of action labels in human videos, the model uses continuous latent actions extracted via a spatiotemporal Transformer VAE, which serves as a hardware-agnostic control interface.

- Optimized Training and Architecture: The model achieves high-fidelity physics and precise controllability by using relative action transformations, chunked action injection, and a specialized temporal consistency loss.

- Real-Time Performance via Distillation: Through a Self Forcing distillation pipeline, the model is accelerated to 10.81 FPS, enabling interactive applications like live teleoperation and stable, long-horizon simulations for over 1 minute.

- Reliable for Downstream Tasks: DreamDojo functions as an accurate simulator for policy evaluation, showing a 0.995 Pearson correlation with real-world success rates, and can improve real-world performance by 17% when used for model-based planning.

