Most AI applications still present the model as a chat box. That interface is simple, but it hides what agents are actually doing, such as planning steps, calling tools, and updating state. Generative UI is about letting the agent drive real interface elements, for example tables, charts, forms, and progress indicators, so the experience feels like a product, not a log of tokens.

What is Generative UI?

The CopilotKit team describes Generative UI as any user interface that is partially or fully produced by an AI agent. Instead of only returning text, the agent can drive:

- stateful components such as forms and filters

- visualizations such as charts and tables

- multistep flows such as wizards

- status surfaces such as progress and intermediate results

The key idea is that the UI is still implemented by the application. The agent describes what should change, and the UI layer chooses how to render it and how to keep state consistent.

Three main patterns of Generative UI:

- Static generative UI: the agent selects from a set catalog of elements and fills props

- Declarative generative UI: the agent returns a structured schema {that a} renderer maps to elements

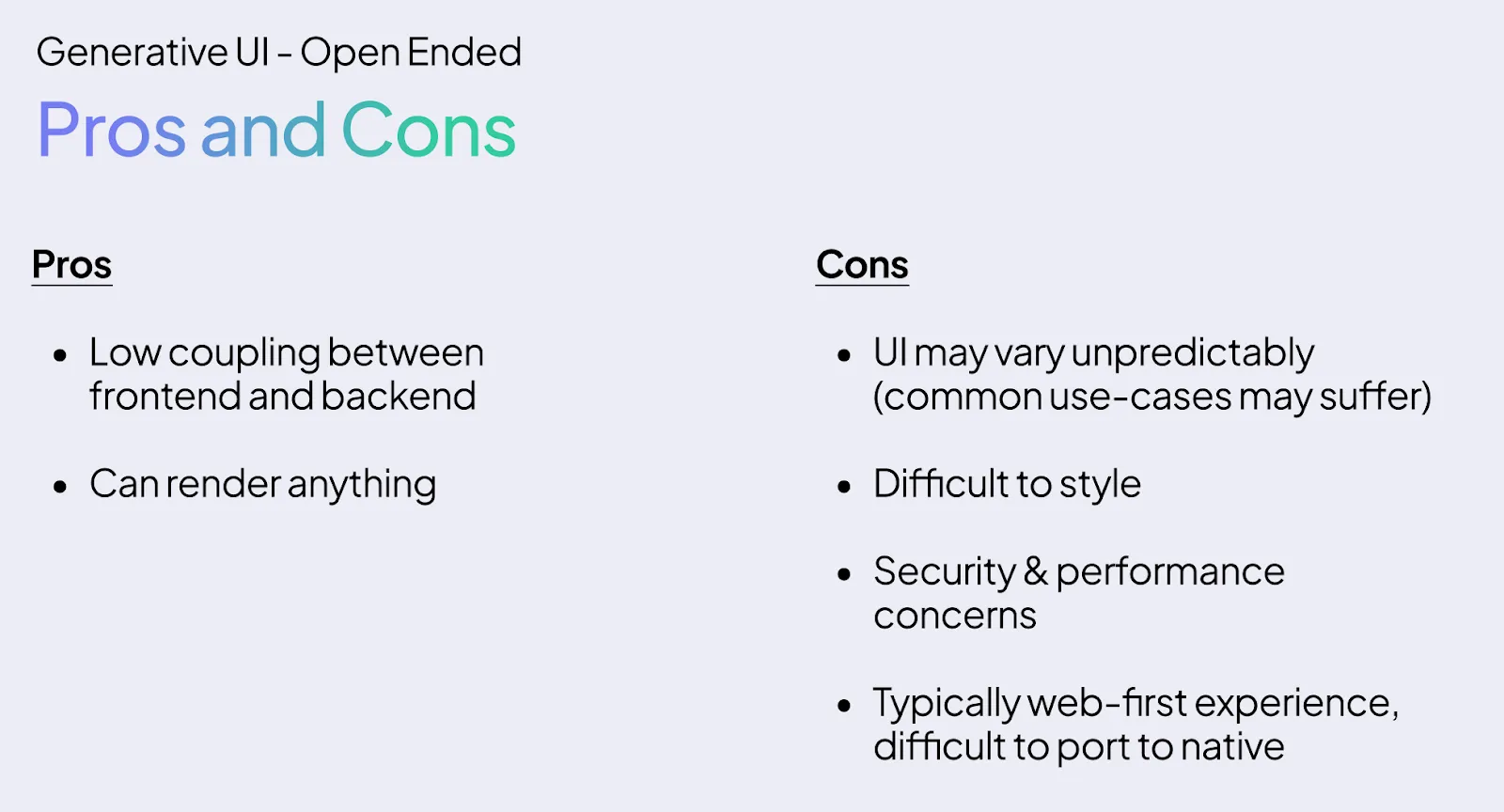

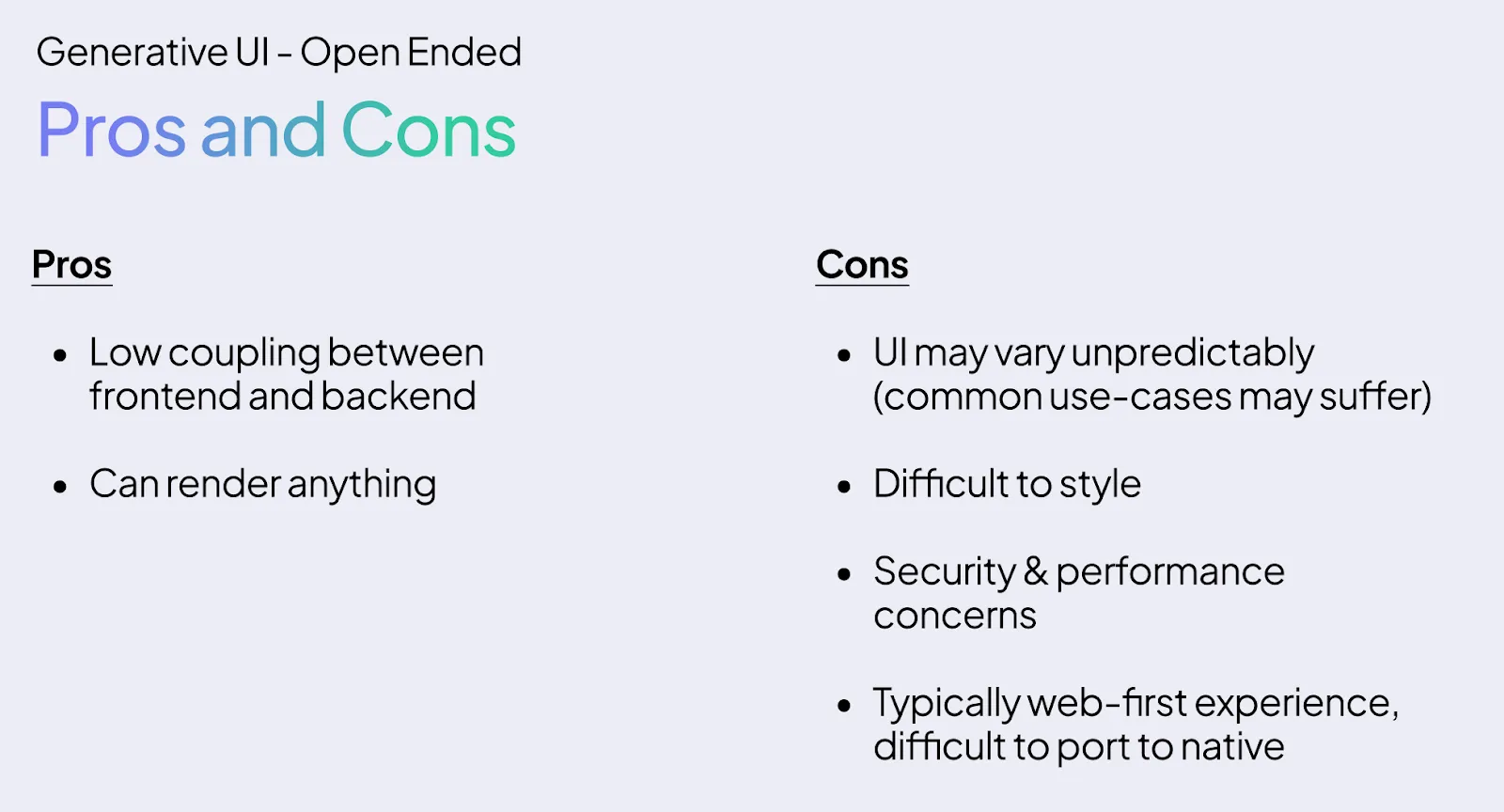

- Totally generated UI: the mannequin emits uncooked markup equivalent to HTML or JSX

Most production systems today use the static or declarative patterns, because they are easier to secure and test.
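To make the difference between the first two patterns concrete, here is a minimal sketch that renders to HTML strings instead of React so it runs on its own. The component names (`progress`, `table`, `card`, `text`, `button`) and the functions `renderStatic`, `parseNode`, and `renderNode` are hypothetical illustrations, not CopilotKit's API or any published spec. The static path accepts only a closed union of catalog entries; the declarative path validates an agent-supplied JSON tree against a small schema before mapping each node to markup.

```typescript
// Escape agent-supplied strings so they can never inject markup into the page.
function esc(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Static generative UI (hypothetical catalog): the agent only picks a component and fills props.
type StaticIntent =
  | { component: "progress"; props: { label: string; percent: number } }
  | { component: "table"; props: { columns: string[]; rows: string[][] } };

function renderStatic(intent: StaticIntent): string {
  switch (intent.component) {
    case "progress": {
      // The app, not the agent, owns presentation details such as clamping.
      const pct = Math.max(0, Math.min(100, intent.props.percent));
      return `<progress value="${pct}" max="100">${esc(intent.props.label)}</progress>`;
    }
    case "table": {
      const head = intent.props.columns.map((c) => `<th>${esc(c)}</th>`).join("");
      const body = intent.props.rows
        .map((row) => `<tr>${row.map((cell) => `<td>${esc(cell)}</td>`).join("")}</tr>`)
        .join("");
      return `<table><tr>${head}</tr>${body}</table>`;
    }
  }
}

// Declarative generative UI (hypothetical schema): a tree the renderer maps to components.
type UINode =
  | { type: "card"; title: string; children: UINode[] }
  | { type: "text"; value: string }
  | { type: "button"; label: string; actionId: string };

// Validate untrusted JSON from the model; anything outside the schema is rejected.
function parseNode(raw: unknown): UINode {
  if (typeof raw !== "object" || raw === null) throw new Error("node must be an object");
  const node = raw as Record<string, unknown>;
  switch (node.type) {
    case "card": {
      const { title, children } = node;
      if (typeof title !== "string" || !Array.isArray(children)) throw new Error("invalid card");
      return { type: "card", title, children: children.map(parseNode) };
    }
    case "text": {
      const { value } = node;
      if (typeof value !== "string") throw new Error("invalid text");
      return { type: "text", value };
    }
    case "button": {
      const { label, actionId } = node;
      if (typeof label !== "string" || typeof actionId !== "string") throw new Error("invalid button");
      return { type: "button", label, actionId };
    }
    default:
      throw new Error(`unknown node type: ${String(node.type)}`);
  }
}

function renderNode(node: UINode): string {
  switch (node.type) {
    case "card":
      return `<section><h3>${esc(node.title)}</h3>${node.children.map(renderNode).join("")}</section>`;
    case "text":
      return `<p>${esc(node.value)}</p>`;
    case "button":
      return `<button data-action="${esc(node.actionId)}">${esc(node.label)}</button>`;
  }
}
```

A payload such as `{"type":"script"}` never reaches the page because `parseNode` throws on it. That closed-world check is what the fully generated pattern lacks, and it is why the first two patterns are easier to secure and test.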

You can also download the Generative UI Guide here.

But why do developers need it?

The main pain point in agent applications is the connection between the model and the product. Without a standard approach, every team builds custom WebSockets, ad-hoc event formats, and one-off ways to stream tool calls and state.

Generative UI, together with a protocol like AG-UI, provides a consistent mental model:

- the agent backend exposes state, tool activity, and UI intent as structured events

- the frontend consumes these events and updates components

- user interactions are converted back into structured signals that the agent can reason over
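The three steps above can be sketched as a small event loop. The event kinds (`tool_started`, `tool_finished`, `ui_intent`), the `user_action` signal, and the functions `consume` and `onRetryClick` are hypothetical and deliberately simpler than AG-UI's real event types; the sketch shows the shape of the loop, not a wire format. It uses an operations-style retry button as the UI intent.

```typescript
// Hypothetical, simplified event shapes; NOT the AG-UI wire format.
type AgentEvent =
  | { kind: "tool_started"; toolCallId: string; name: string }
  | { kind: "tool_finished"; toolCallId: string }
  | { kind: "ui_intent"; component: "retry_button"; jobId: string };

// Structured signal sent back to the agent when the user acts.
type UserSignal = { kind: "user_action"; action: "retry_job"; jobId: string };

interface ViewState {
  runningTools: Record<string, string>; // toolCallId -> tool name (drives a spinner)
  retryableJobs: string[]; // job ids that currently show a retry button
}

// Step 1 happens on the backend: it emits AgentEvents instead of free text.
// Step 2: the frontend folds each event into view state without mutating the old state.
function consume(view: ViewState, ev: AgentEvent): ViewState {
  switch (ev.kind) {
    case "tool_started":
      return { ...view, runningTools: { ...view.runningTools, [ev.toolCallId]: ev.name } };
    case "tool_finished": {
      const runningTools = { ...view.runningTools };
      delete runningTools[ev.toolCallId];
      return { ...view, runningTools };
    }
    case "ui_intent":
      // The app decides how a retry affordance looks; the agent only asks for one.
      return view.retryableJobs.includes(ev.jobId)
        ? view
        : { ...view, retryableJobs: [...view.retryableJobs, ev.jobId] };
  }
}

// Step 3: a click becomes a structured signal the agent can reason over.
function onRetryClick(view: ViewState, jobId: string): { view: ViewState; signal: UserSignal } {
  return {
    view: { ...view, retryableJobs: view.retryableJobs.filter((id) => id !== jobId) },
    signal: { kind: "user_action", action: "retry_job", jobId },
  };
}

// Usage: replay a short event stream, then simulate the user clicking "retry".
let view: ViewState = { runningTools: {}, retryableJobs: [] };
const events: AgentEvent[] = [
  { kind: "tool_started", toolCallId: "c1", name: "fetch_jobs" },
  { kind: "tool_finished", toolCallId: "c1" },
  { kind: "ui_intent", component: "retry_button", jobId: "job-42" },
];
for (const ev of events) view = consume(view, ev);
const afterFeed = view;
const result = onRetryClick(view, "job-42");
view = result.view;
console.log(JSON.stringify(result.signal)); // {"kind":"user_action","action":"retry_job","jobId":"job-42"}
```

Keeping `consume` a pure function makes each step easy to test in isolation: feed it a recorded event stream and compare the resulting view state.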

CopilotKit packages this in its SDKs with hooks, shared state, typed actions, and Generative UI helpers for React and other frontends. This lets you focus on the agent logic and domain-specific UI instead of inventing a protocol.
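CopilotKit exposes typed actions through its own React hooks, whose exact signatures are in the CopilotKit docs and are not reproduced here. As a framework-neutral sketch of the idea only, the hypothetical `registerAction` and `dispatchToolCall` below let the frontend declare an action with a typed parameter list and a description for the model. When the agent emits a tool call, its JSON arguments are checked before the typed handler runs.

```typescript
// Hypothetical typed-action registry; NOT CopilotKit's actual API.
type ParamType = "string" | "number" | "boolean";
type ParamSpec = Record<string, ParamType>;

// Map each declared parameter type name to the TypeScript type the handler receives.
type ArgsOf<P extends ParamSpec> = {
  [K in keyof P]: P[K] extends "string" ? string : P[K] extends "number" ? number : boolean;
};

interface ActionDef<P extends ParamSpec> {
  name: string;
  description: string; // surfaced to the model so it knows when to call the action
  parameters: P;
  handler: (args: ArgsOf<P>) => string;
}

// Type-erased dispatchers, keyed by action name.
const registry = new Map<string, (raw: Record<string, unknown>) => string>();

function registerAction<P extends ParamSpec>(def: ActionDef<P>): void {
  registry.set(def.name, (raw) => {
    // Check every declared parameter at runtime, since model output is untrusted.
    for (const [key, type] of Object.entries(def.parameters)) {
      if (typeof raw[key] !== type) throw new Error(`${def.name}: "${key}" must be a ${type}`);
    }
    return def.handler(raw as unknown as ArgsOf<P>);
  });
}

// Called when the agent emits a tool call with JSON-encoded arguments.
function dispatchToolCall(name: string, argsJson: string): string {
  const run = registry.get(name);
  if (!run) throw new Error(`unknown action: ${name}`);
  const parsed: unknown = JSON.parse(argsJson);
  if (typeof parsed !== "object" || parsed === null || Array.isArray(parsed)) {
    throw new Error(`${name}: arguments must be a JSON object`);
  }
  return run(parsed as Record<string, unknown>);
}

// Usage: the frontend exposes a date-range filter as a typed action.
registerAction({
  name: "setDateRange",
  description: "Set the dashboard date range filter",
  parameters: { from: "string", to: "string" },
  handler: ({ from, to }) => `date range set to ${from}..${to}`,
});

console.log(dispatchToolCall("setDateRange", '{"from":"2024-01-01","to":"2024-03-31"}'));
```

The generic parameter gives the handler compile-time types. The loop inside `registerAction` provides the runtime check, which you still need because the arguments come from a model.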

How does it affect end users?

For end users, the difference becomes visible as soon as the workflow is non-trivial.

A data analysis copilot can show filters, metric pickers, and live charts instead of describing plots in text. A support agent can surface record editing forms and status timelines instead of long explanations of what it did. An operations agent can show job queues, error badges, and retry buttons that the user can act on.

This is what CopilotKit and the AG-UI ecosystem call agentic UI: user interfaces where the agent is embedded in the product and updates the UI in real time, while users stay in control through direct interaction.

The Protocol Stack: AG-UI, MCP Apps, A2UI, Open-JSON-UI

A number of specs outline how brokers specific UI intent. CopilotKit’s documentation and the AG-UI docs summarize three most important generative UI specs:

- A2UI from Google, a declarative, JSON primarily based Generative UI spec designed for streaming and platform agnostic rendering

- Open-JSON-UI from OpenAI, an open standardization of OpenAI’s inner declarative Generative UI schema for structured interfaces

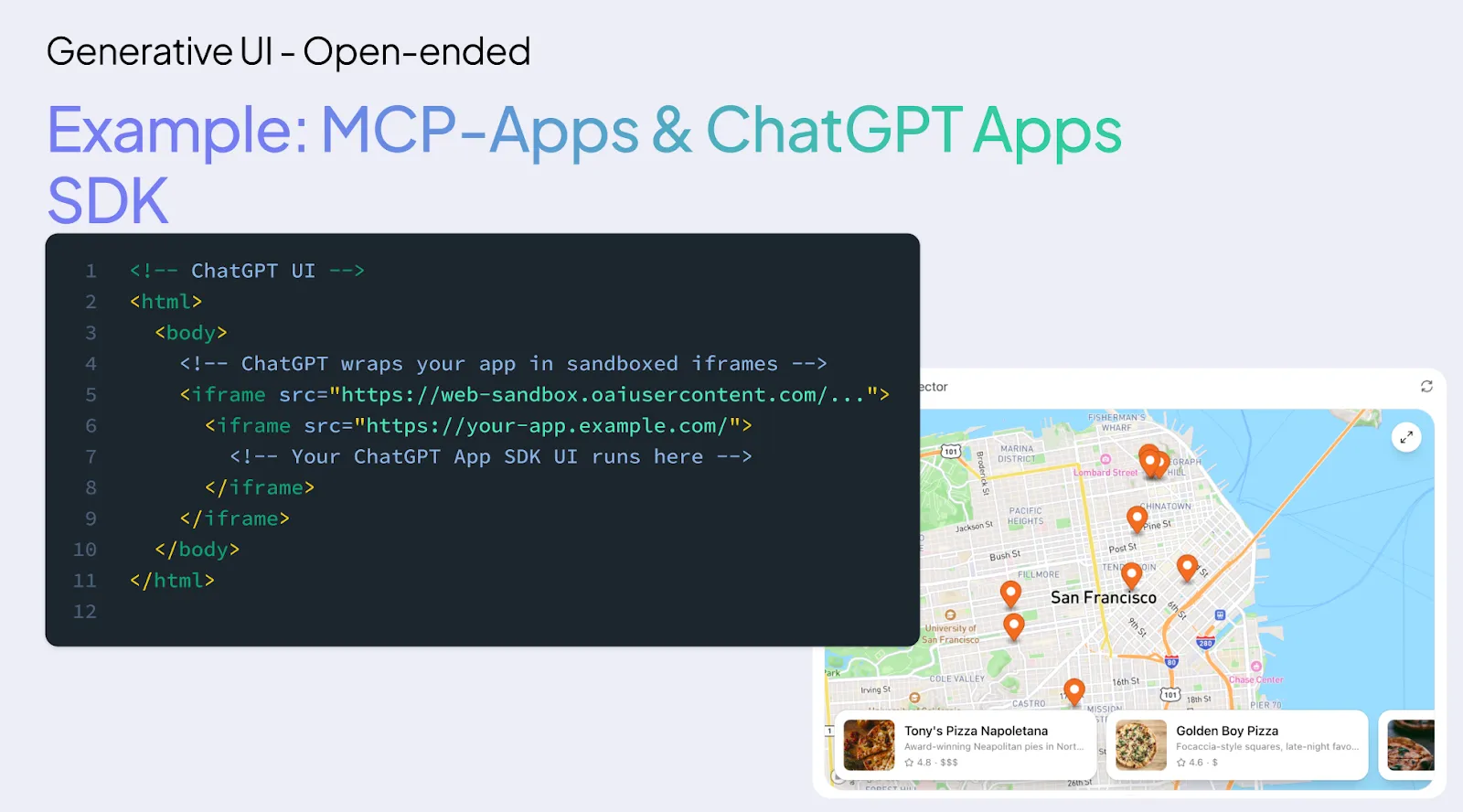

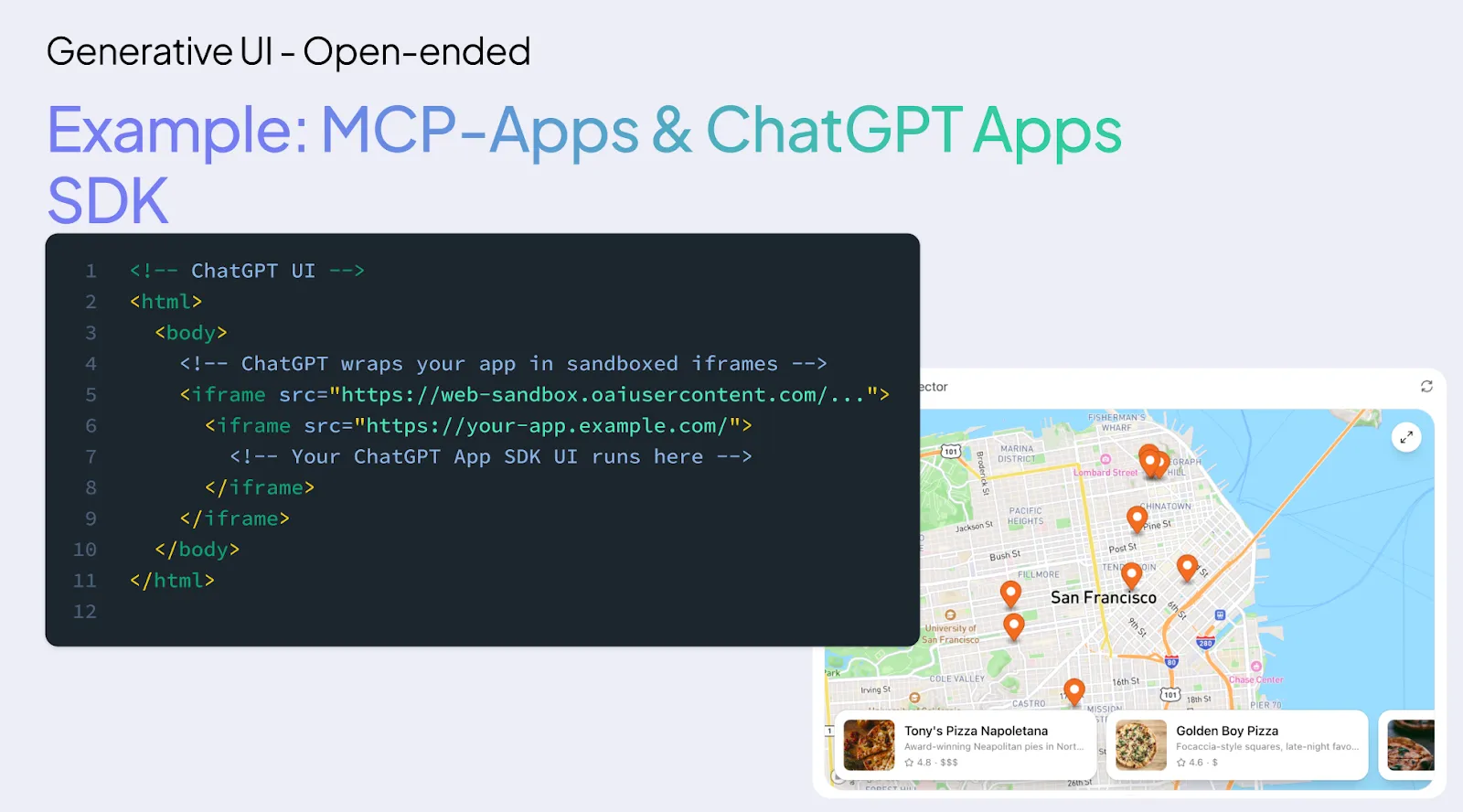

- MCP Apps from Anthropic and OpenAI, a Generative UI layer on prime of MCP the place instruments can return iframe primarily based interactive surfaces

These are payload formats. They describe what UI to render, for example a card, table, or form, and the associated data.

AG-UI sits at a different layer. It is the Agent User Interaction protocol, an event-driven, bi-directional runtime that connects any agent backend to any frontend over transports such as server-sent events or WebSockets. AG-UI carries:

- lifecycle and message events

- state snapshots and deltas

- tool activity

- user actions

- generative UI payloads such as A2UI, Open-JSON-UI, or MCP Apps
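State snapshots and deltas are the part teams most often reinvent. The following is a minimal sketch of how a frontend might apply them. It assumes that a snapshot carries the full state and that a delta carries JSON Patch (RFC 6902) operations, which is how the AG-UI docs describe state deltas. The event and field names are simplified assumptions, and `applyPatch` is a hypothetical helper that implements only `add`, `replace`, and `remove` on object members, not the full RFC.

```typescript
type JsonValue = null | boolean | number | string | JsonValue[] | { [key: string]: JsonValue };
type JsonObject = { [key: string]: JsonValue };

// Subset of JSON Patch (RFC 6902): add/replace/remove on object members only.
type PatchOp =
  | { op: "add" | "replace"; path: string; value: JsonValue }
  | { op: "remove"; path: string };

// Simplified state events; names are assumptions modeled on the AG-UI docs.
type StateEvent =
  | { type: "STATE_SNAPSHOT"; snapshot: JsonObject }
  | { type: "STATE_DELTA"; delta: PatchOp[] };

// Split a JSON Pointer (RFC 6901) such as "/filters/region" into unescaped keys.
function parsePointer(path: string): string[] {
  if (!path.startsWith("/")) throw new Error(`unsupported pointer: "${path}"`);
  return path.slice(1).split("/").map((k) => k.replace(/~1/g, "/").replace(/~0/g, "~"));
}

// Own-property check, so inherited names like "toString" are not treated as present.
const hasOwn = (o: object, k: string): boolean => Object.prototype.hasOwnProperty.call(o, k);

function applyPatch(state: JsonObject, ops: PatchOp[]): JsonObject {
  // Deep copy so the previous state object stays untouched (safe for reference-equality diffing).
  const next = JSON.parse(JSON.stringify(state)) as JsonObject;
  for (const op of ops) {
    const keys = parsePointer(op.path);
    const last = keys.pop() as string;
    let parent: JsonObject = next;
    for (const key of keys) {
      const child = parent[key];
      if (typeof child !== "object" || child === null || Array.isArray(child)) {
        throw new Error(`cannot traverse "${key}" in ${op.path}`);
      }
      parent = child;
    }
    if (op.op === "remove") {
      if (!hasOwn(parent, last)) throw new Error(`nothing to remove at ${op.path}`);
      delete parent[last];
    } else {
      if (op.op === "replace" && !hasOwn(parent, last)) throw new Error(`nothing to replace at ${op.path}`);
      parent[last] = op.value;
    }
  }
  return next;
}

// A snapshot replaces state wholesale; a delta patches the current state.
function reduceState(state: JsonObject, ev: StateEvent): JsonObject {
  if (ev.type === "STATE_SNAPSHOT") return JSON.parse(JSON.stringify(ev.snapshot)) as JsonObject;
  return applyPatch(state, ev.delta);
}

// Usage: one snapshot followed by two deltas.
let uiState: JsonObject = {};
const stream: StateEvent[] = [
  { type: "STATE_SNAPSHOT", snapshot: { progress: 0, filters: { region: "EU" } } },
  { type: "STATE_DELTA", delta: [{ op: "replace", path: "/progress", value: 40 }] },
  {
    type: "STATE_DELTA",
    delta: [
      { op: "add", path: "/filters/year", value: 2024 },
      { op: "remove", path: "/filters/region" },
    ],
  },
];
for (const ev of stream) uiState = reduceState(uiState, ev);
console.log(JSON.stringify(uiState)); // {"progress":40,"filters":{"year":2024}}
```

Deltas keep streaming cheap when the state is large. Snapshots give the frontend a way to resynchronize if it ever misses or misapplies a delta.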

MCP connects agents to tools and data, A2A connects agents to each other, A2UI or Open-JSON-UI define declarative UI payloads, MCP Apps defines iframe-based UI payloads, and AG-UI moves all of these between agent and UI.
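This layering can be sketched as a frontend that receives one transport event type and chooses a renderer from the payload format inside it. The `ui_payload` event, the `UIPayload` envelope, and `planRender` are hypothetical. Real A2UI, Open-JSON-UI, and MCP Apps payloads each have their own schemas, which the sketch treats as opaque `document` or `resourceUri` fields. The iframe sandbox value is an illustrative choice, not something the specs prescribe here.

```typescript
// Hypothetical envelope; the real payload schemas are defined by each spec.
type UIPayload =
  | { spec: "a2ui"; document: unknown }
  | { spec: "open-json-ui"; document: unknown }
  | { spec: "mcp-apps"; resourceUri: string };

// Hypothetical transport event: the runtime layer carries the payload but does not interpret it.
interface TransportEvent {
  type: "ui_payload";
  runId: string;
  payload: UIPayload;
}

type RenderPlan =
  | { target: "declarative-renderer"; renderer: string; document: unknown }
  | { target: "sandboxed-iframe"; src: string; sandbox: string };

// The frontend picks a rendering strategy per payload format.
function planRender(ev: TransportEvent): RenderPlan {
  const p = ev.payload;
  switch (p.spec) {
    case "a2ui":
    case "open-json-ui":
      // Declarative specs are mapped onto the host app's own components.
      return { target: "declarative-renderer", renderer: p.spec, document: p.document };
    case "mcp-apps":
      // Iframe-based payloads are isolated from the host page.
      return { target: "sandboxed-iframe", src: p.resourceUri, sandbox: "allow-scripts" };
  }
}

// Usage: the same transport event type carries both kinds of payload.
const iframePlan = planRender({
  type: "ui_payload",
  runId: "r1",
  payload: { spec: "mcp-apps", resourceUri: "https://example.com/apps/revenue-chart" },
});
const cardPlan = planRender({
  type: "ui_payload",
  runId: "r1",
  payload: { spec: "a2ui", document: { component: "card" } },
});
console.log(iframePlan.target, cardPlan.target);
```

Adding support for another payload format means adding one case to `planRender`. The transport layer does not change, which is the point of keeping AG-UI separate from the payload specs.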

Key Takeaways

- Generative UI is structured UI, not just chat: Agents emit structured UI intent, such as forms, tables, charts, and progress, which the app renders as real components, so the model controls stateful views, not only text streams.

- AG-UI is the runtime pipe; A2UI, Open-JSON-UI, and MCP Apps are payloads: AG-UI carries events between agent and frontend, while A2UI, Open-JSON-UI, and MCP Apps define how UI is described as JSON or iframe-based payloads that the UI layer renders.

- CopilotKit standardizes agent-to-UI wiring: CopilotKit provides SDKs, shared state, typed actions, and Generative UI helpers so developers don’t build custom protocols for streaming state, tool activity, and UI updates.

- Static and declarative Generative UI are production-friendly: Most real apps use static catalogs of components or declarative specs such as A2UI or Open-JSON-UI, which keep security, testing, and layout control in the host application.

- User interactions become first-class events for the agent: Clicks, edits, and submissions are converted into structured AG-UI events, and the agent consumes them as inputs for planning and tool calls, which closes the human-in-the-loop control cycle.

Generative UI sounds abstract until you see it running.

If you’re curious how these ideas translate into real applications, CopilotKit is open source and actively used to build agent-native interfaces, from simple workflows to more complex systems. Dive into the repo and explore the patterns on GitHub. It’s all built in the open.

You can find more reading material on Generative UI here.


Elevate your perspective with NextTech News, where innovation meets insight.

Discover the latest breakthroughs, get exclusive updates, and connect with a global network of future-focused thinkers.

Unlock tomorrow’s trends today: read more, subscribe to our newsletter, and become part of the NextTech community at NextTech-news.com