Pokee AI has open-sourced PokeeResearch-7B, a 7B-parameter deep research agent that executes full research loops: it decomposes a query, issues search and browse calls, verifies candidate answers, then synthesizes multiple research threads into a final response.

The agent runs a research and verification loop. In research, it calls external tools for web search and page reading, or proposes an interim answer. In verification, it checks the answer against retrieved evidence, and either accepts it or restarts research. This structure reduces brittle trajectories and catches obvious errors before finalization. The research team formalizes this loop and adds a test-time synthesis stage that merges multiple independent research threads.
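The loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the released implementation: `ThreadState`, `run_thread`, `research_step`, and `verify` are hypothetical names, and the scripted tool and substring verifier stand in for the model's real search, page reading, and self-verification.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class ThreadState:
    """Hypothetical container for one research thread (not from the source)."""
    question: str
    evidence: List[str] = field(default_factory=list)
    answer: Optional[str] = None


def run_thread(
    question: str,
    research_step: Callable[[ThreadState], Optional[str]],
    verify: Callable[[str, str, List[str]], bool],
    max_turns: int = 100,  # illustrative default for the turn cap
) -> ThreadState:
    state = ThreadState(question)
    for _ in range(max_turns):
        # Research mode: the step either gathers evidence (returns None)
        # or proposes an interim answer (returns a string).
        proposal = research_step(state)
        if proposal is None:
            continue
        # Verification mode: accept the answer or fall back to research.
        if verify(question, proposal, state.evidence):
            state.answer = proposal
            return state
    return state  # turn budget exhausted without an accepted answer


# Toy stand-ins: a scripted "agent" and a substring-based verifier.
script = iter([
    ("search", "Paris is the capital of France."),
    ("answer", "Lyon"),   # rejected by verification
    ("answer", "Paris"),  # accepted
])


def scripted_research(state: ThreadState) -> Optional[str]:
    kind, payload = next(script)
    if kind == "search":
        state.evidence.append(payload)
        return None
    return payload


def substring_verify(question: str, answer: str, evidence: List[str]) -> bool:
    return any(answer in doc for doc in evidence)


result = run_thread("What is the capital of France?", scripted_research, substring_verify)
print(result.answer)
```

In this toy run the first proposed answer fails verification, so the thread returns to research and only the second proposal is accepted.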

Training recipe, RLAIF with RLOO

PokeeResearch-7B is fine-tuned from Qwen2.5-7B-Instruct using annotation-free Reinforcement Learning from AI Feedback (RLAIF) with the REINFORCE Leave-One-Out (RLOO) algorithm. The reward targets semantic correctness, citation faithfulness, and instruction adherence, not token overlap. The model's Hugging Face card lists batch size 64, 8 research threads per prompt during RL, learning rate 3e-6, 140 steps, context 32,768 tokens, bf16 precision, and a checkpoint near 13 GB. The research team emphasizes that RLOO provides an unbiased on-policy gradient estimate and contrasts it with the PPO family, which is approximately on-policy and biased.
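To make the leave-one-out idea concrete, here is a minimal sketch of the standard RLOO advantage computation: each sampled thread's baseline is the mean reward of the other threads for the same prompt. The function name `rloo_advantages` and the 0/1 rewards are illustrative assumptions; the source does not spell out the reward scale or the training code.

```python
from typing import List


def rloo_advantages(rewards: List[float]) -> List[float]:
    """Leave-one-out advantages for k samples drawn for the same prompt.

    For sample i, the baseline is the mean reward of the other k - 1 samples,
    so the baseline does not depend on sample i's own reward.
    """
    k = len(rewards)
    if k < 2:
        raise ValueError("RLOO needs at least two samples per prompt")
    total = sum(rewards)
    return [r - (total - r) / (k - 1) for r in rewards]


# Hypothetical rewards for 8 research threads on one prompt (1 = judged correct).
rewards = [1.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0]
advantages = rloo_advantages(rewards)
print([round(a, 3) for a in advantages])
```

Correct threads receive a positive advantage and incorrect ones a negative advantage, and the advantages sum to zero across the group.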

Reasoning scaffold and Research Threads Synthesis

The scaffold consists of three mechanisms. Self-correction: the agent detects malformed tool calls and retries. Self-verification: the agent inspects its own answer against the evidence. Research Threads Synthesis: the agent runs multiple independent threads per question, summarizes them, then synthesizes a final answer. The research team reports that synthesis improves accuracy on difficult benchmarks.
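The run, summarize, synthesize flow of Research Threads Synthesis can be sketched as follows. This is an assumption-laden illustration: all function names are hypothetical, and the majority vote in `majority_synthesize` is a simple stand-in, since in the described system the model itself reads the thread summaries and writes the final answer.

```python
from collections import Counter
from typing import Callable, List


def research_threads_synthesis(
    question: str,
    run_one_thread: Callable[[str, int], str],
    summarize: Callable[[str], str],
    synthesize: Callable[[str, List[str]], str],
    n_threads: int = 4,
) -> str:
    # 1. Run several independent research threads on the same question.
    transcripts = [run_one_thread(question, i) for i in range(n_threads)]
    # 2. Compress each thread into a short summary.
    summaries = [summarize(t) for t in transcripts]
    # 3. Produce one final answer from all summaries.
    return synthesize(question, summaries)


# Toy stand-ins so the sketch runs without a model or web access.
def toy_thread(question: str, thread_id: int) -> str:
    answer = "1913" if thread_id == 2 else "1912"  # one thread goes wrong
    return f"searched the web\nread a page\nANSWER: {answer}"


def toy_summarize(transcript: str) -> str:
    return transcript.split("ANSWER: ")[-1]


def majority_synthesize(question: str, summaries: List[str]) -> str:
    # Stand-in for model-based synthesis: pick the most common summary.
    return Counter(summaries).most_common(1)[0][0]


final = research_threads_synthesis(
    "In what year did the Titanic sink?", toy_thread, toy_summarize, majority_synthesize
)
print(final)
```

The point of the design is that a single faulty thread does not have to determine the final answer, because the synthesis step sees evidence from all threads.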

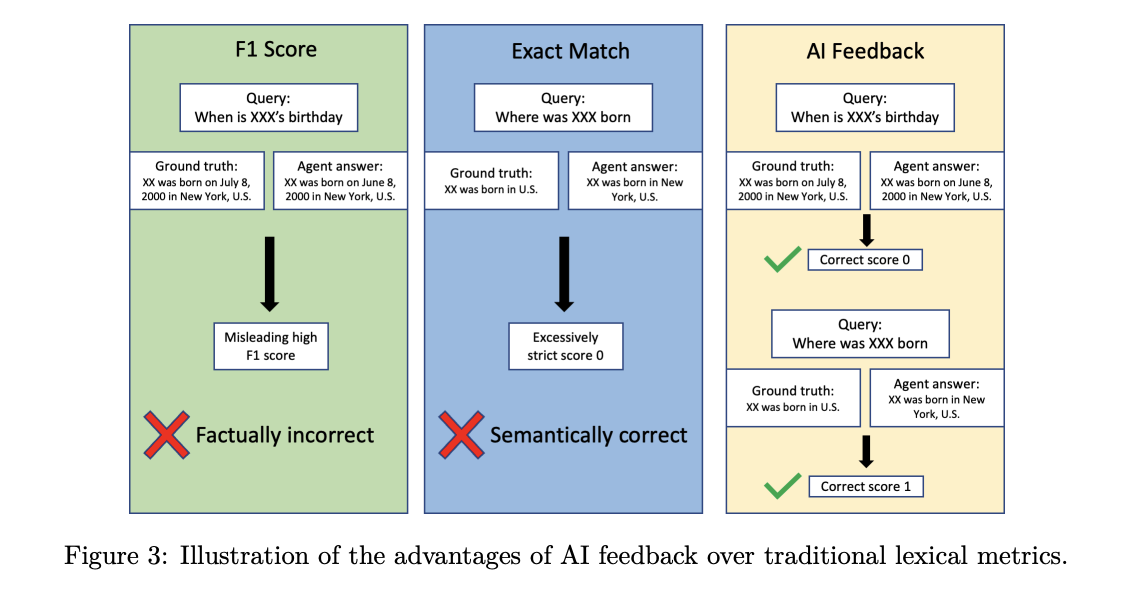

Evaluation protocol

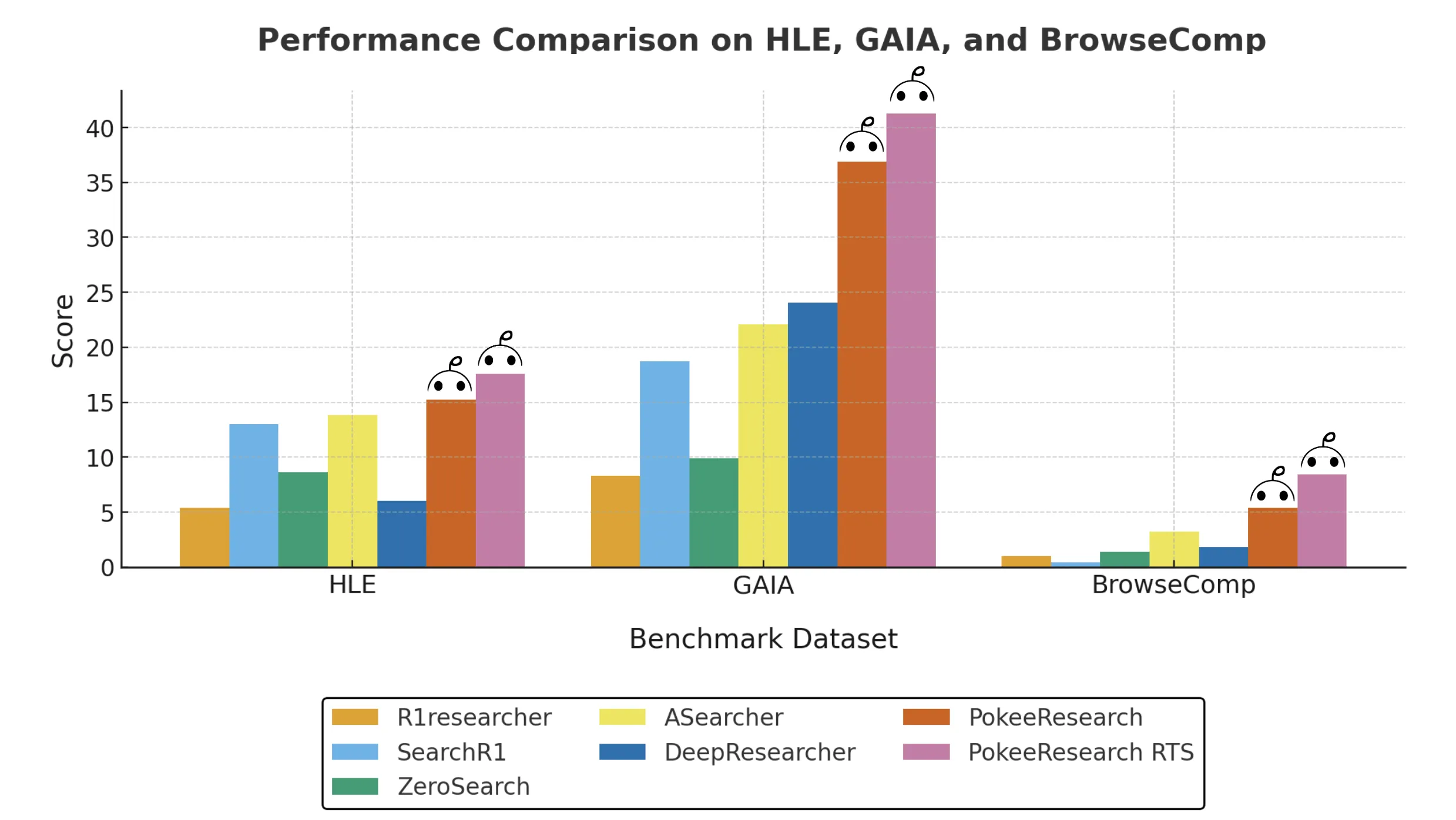

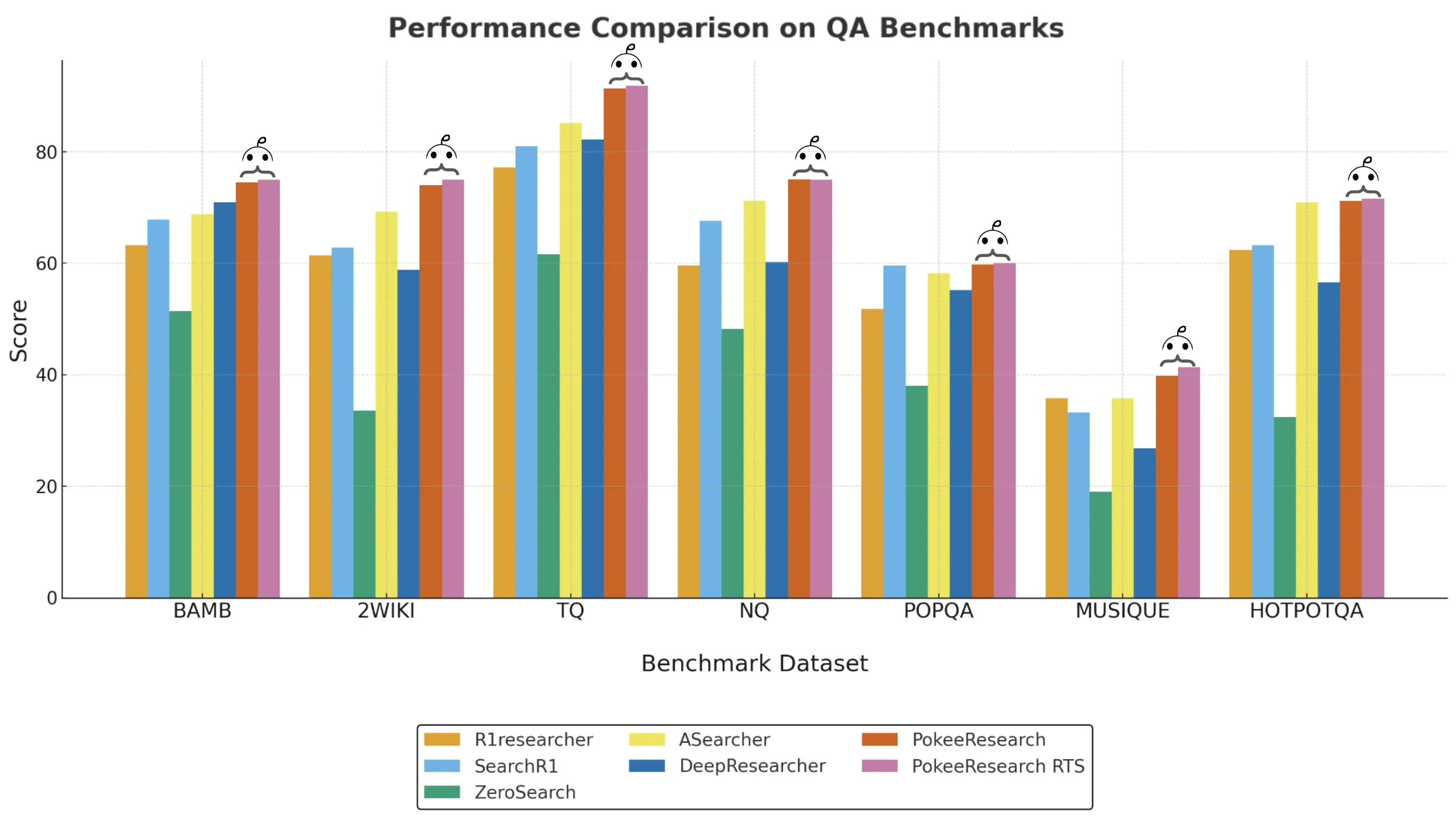

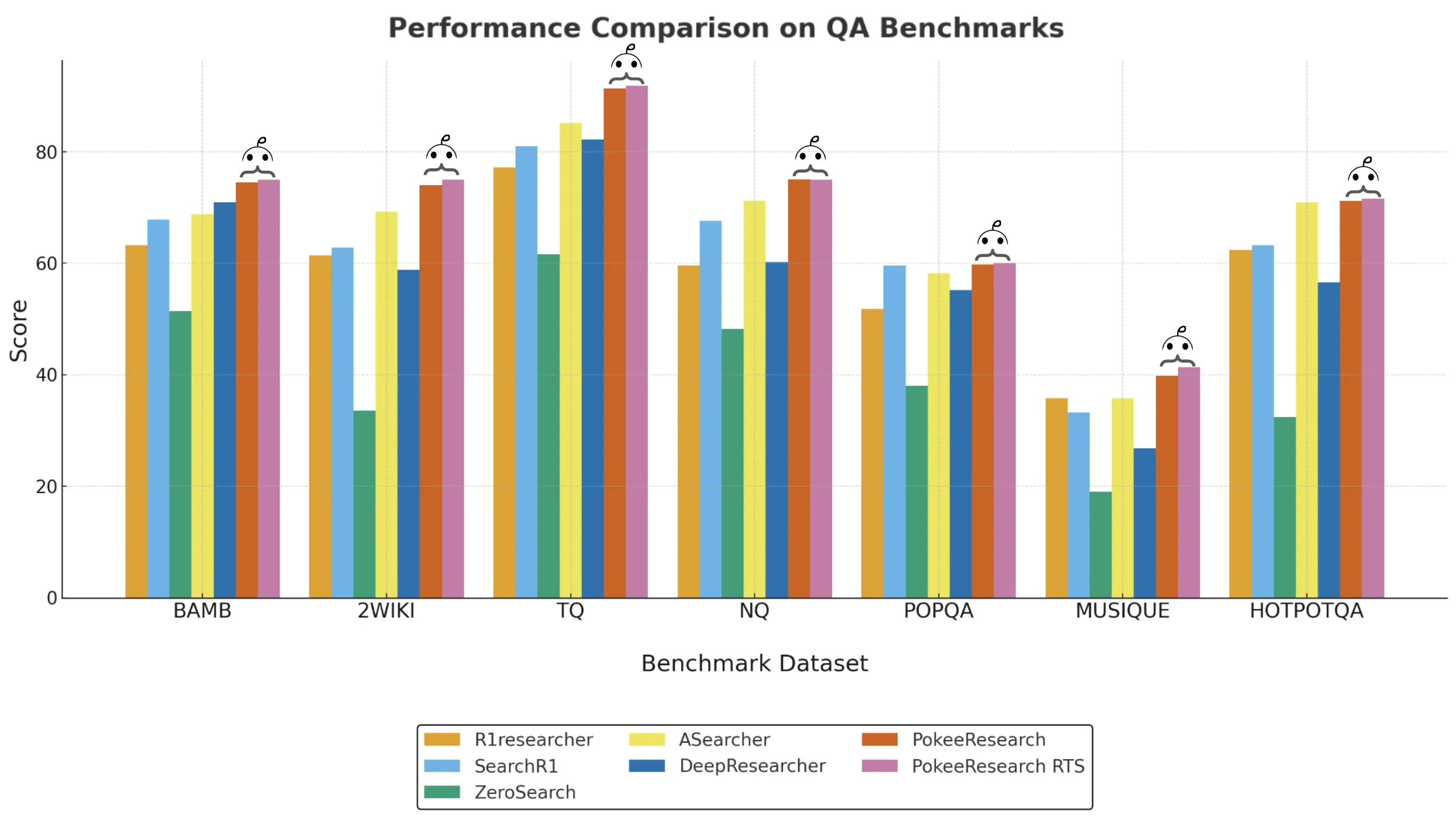

The research team evaluates text-only questions from 10 benchmarks: NQ, TriviaQA, PopQA, HotpotQA, 2WikiMultiHopQA, Musique, Bamboogle, GAIA, BrowseComp, and Humanity's Last Exam (HLE). They sample 125 questions per dataset, except GAIA with 103, for a total of 1,228 questions. For each question, they run 4 research threads, then compute mean accuracy across the threads (mean@4), using Gemini-2.5-Flash-lite to judge correctness. The maximum number of interaction turns is set to 100.
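The protocol's arithmetic is easy to check in code. The sketch below recomputes the question total and shows one plausible reading of mean@4, namely per-question accuracy averaged over the 4 threads and then averaged over questions. The helper `mean_at_k` and the judge verdicts are made up for illustration; the exact aggregation used by the authors is an assumption here.

```python
from typing import List

DATASETS = [
    "NQ", "TriviaQA", "PopQA", "HotpotQA", "2WikiMultiHopQA",
    "Musique", "Bamboogle", "GAIA", "BrowseComp", "HLE",
]

# 125 sampled questions per dataset, except GAIA with 103.
sizes = {name: 125 for name in DATASETS}
sizes["GAIA"] = 103
total_questions = sum(sizes.values())
print(total_questions)


def mean_at_k(judgments: List[List[bool]]) -> float:
    """Average over questions of the fraction of threads judged correct."""
    per_question = [sum(threads) / len(threads) for threads in judgments]
    return sum(per_question) / len(per_question)


# Hypothetical judge verdicts: 3 questions, 4 threads each.
verdicts = [
    [True, True, False, True],
    [False, False, False, False],
    [True, True, True, True],
]
score = mean_at_k(verdicts)
print(round(score, 4))
```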

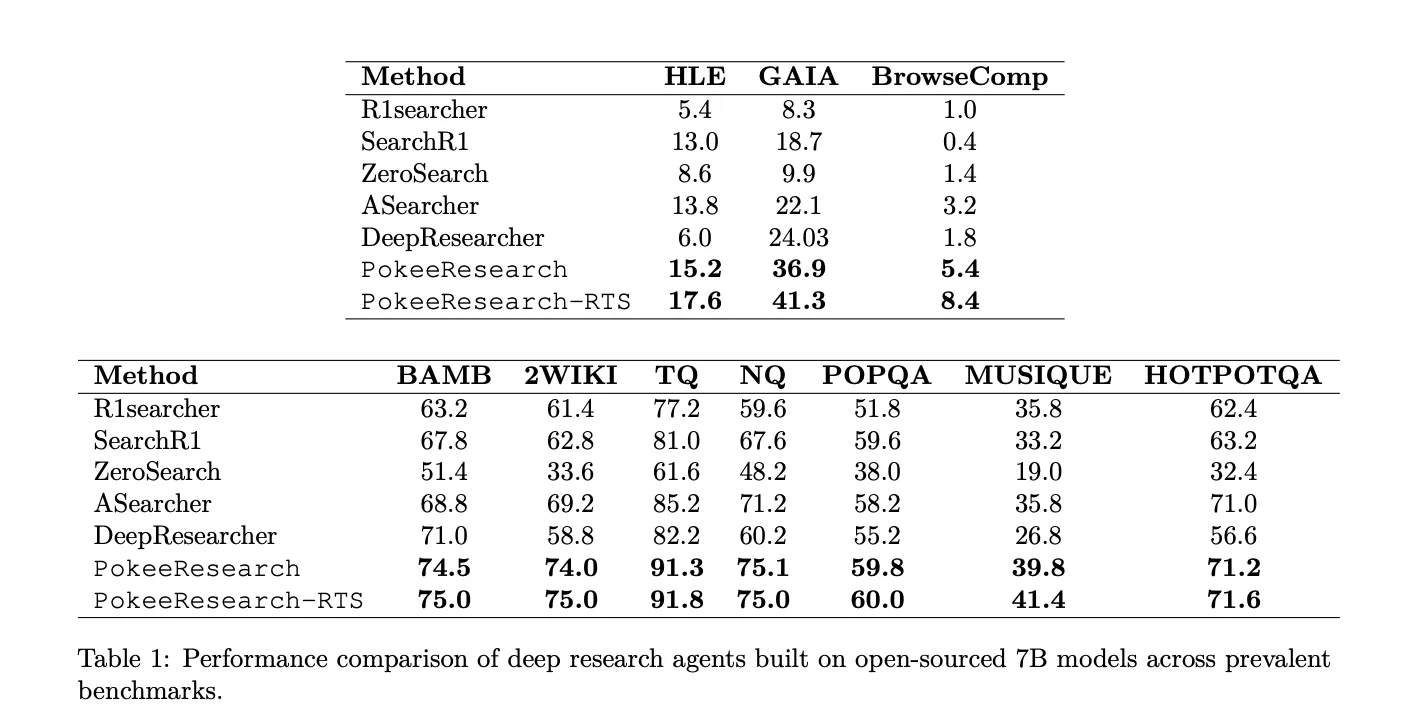

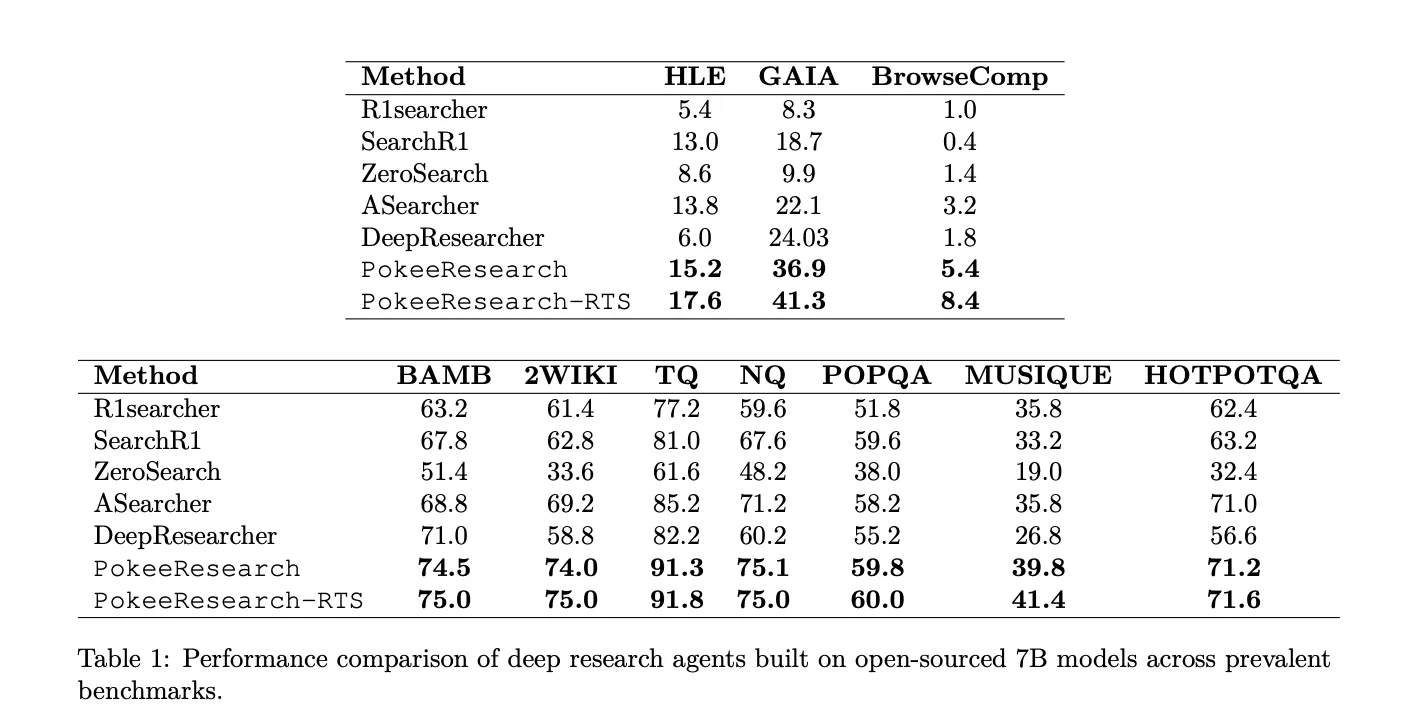

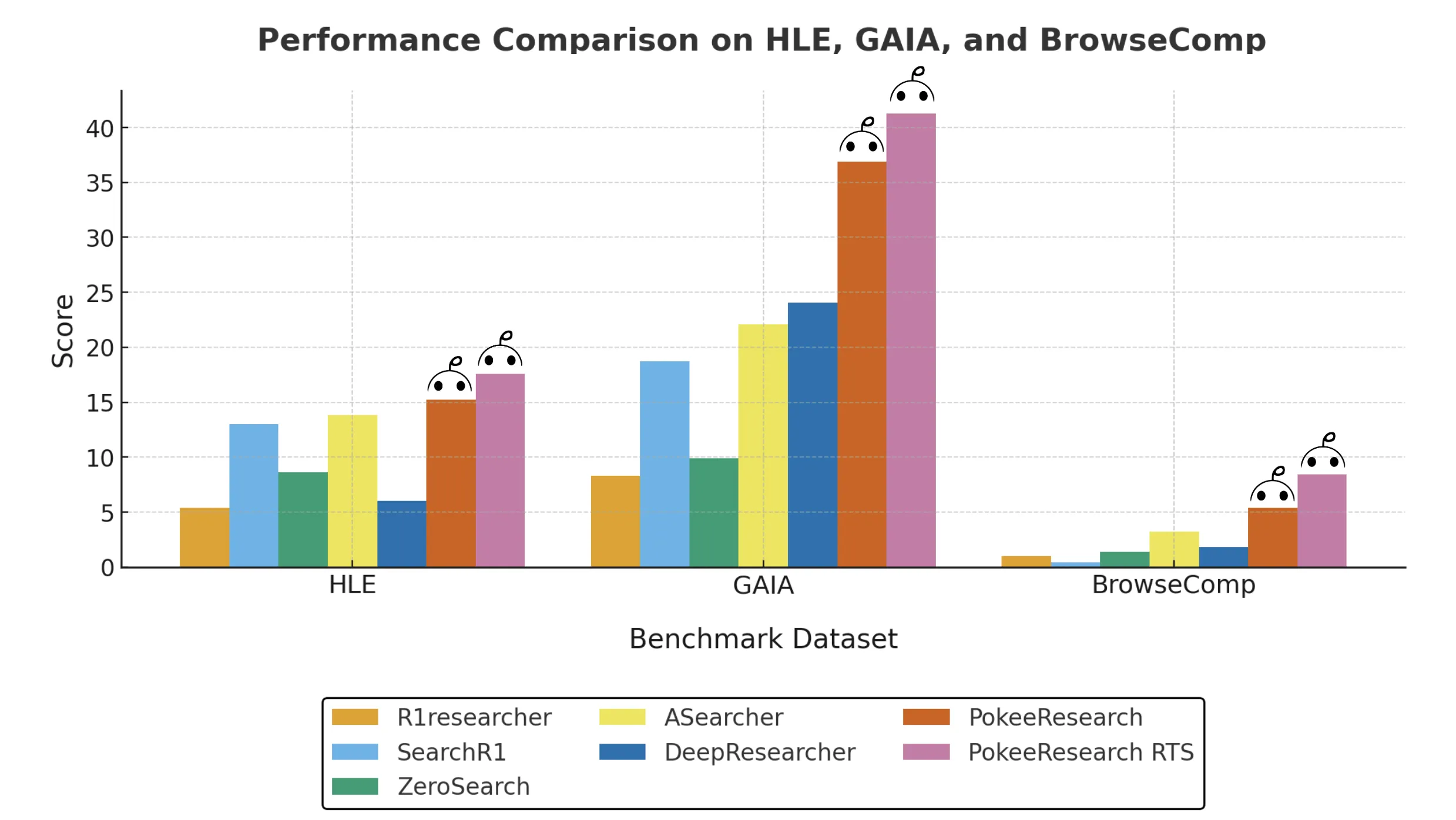

Results at 7B scale

PokeeResearch-7B reports the best mean@4 accuracy among 7B deep research agents across the ten datasets. On HLE the model reports 15.2 without RTS and 17.6 with RTS. On GAIA the model reports 36.9 without RTS and 41.3 with RTS. On BrowseComp the model reports 5.4 without RTS and 8.4 with RTS. On the seven QA benchmarks (Bamboogle, 2WikiMultiHopQA, TriviaQA, NQ, PopQA, Musique, HotpotQA), the model improves over recent 7B baselines. Gains from RTS are largest on HLE, GAIA, and BrowseComp, and smaller on the QA sets.
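For a quick sense of scale, the RTS gains on the three hardest benchmarks can be computed directly from the figures quoted above. The snippet only restates the article's numbers; it assumes they are accuracy scores on a 0 to 100 scale, and the variable names are illustrative.

```python
# (without RTS, with RTS), as quoted in the article.
reported = {
    "HLE": (15.2, 17.6),
    "GAIA": (36.9, 41.3),
    "BrowseComp": (5.4, 8.4),
}

# Absolute gain in points, rounded to avoid floating-point noise.
gains = {name: round(with_rts - without, 1) for name, (without, with_rts) in reported.items()}

for name, gain in gains.items():
    print(f"{name}: +{gain} points with RTS")
```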

Key Takeaways

- Training: PokeeResearch-7B fine-tunes Qwen2.5-7B-Instruct with RLAIF using the RLOO estimator, optimizing rewards for factual accuracy, citation faithfulness, and instruction adherence, not token overlap.

- Scaffold: The agent runs a research and verification loop with Research Threads Synthesis, executing multiple independent threads, then synthesizing the evidence into a final answer.

- Evaluation protocol: Benchmarks span 10 datasets with 125 questions each, except GAIA with 103, with 4 threads per question, mean@4 accuracy judged by Gemini-2.5-Flash-lite, and a 100-turn cap.

- Results and release: PokeeResearch-7B reports state-of-the-art results among 7B deep research agents, for example HLE 17.6 with RTS, GAIA 41.3 with RTS, and BrowseComp 8.4 with RTS, and is released under Apache-2.0 with code and weights public.

PokeeResearch-7B is a useful step toward practical deep research agents. It aligns training with RLAIF using RLOO, so the objective targets semantic correctness, citation faithfulness, and instruction adherence. The reasoning scaffold includes self-verification and Research Threads Synthesis, which improves results on difficult benchmarks. The evaluation uses mean@4 with Gemini-2.5-Flash-lite as the judge, across 10 datasets. The release ships Apache-2.0 code and weights with a clear tool stack using Serper and Jina. The setup runs on a single A100 80 GB and scales.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
