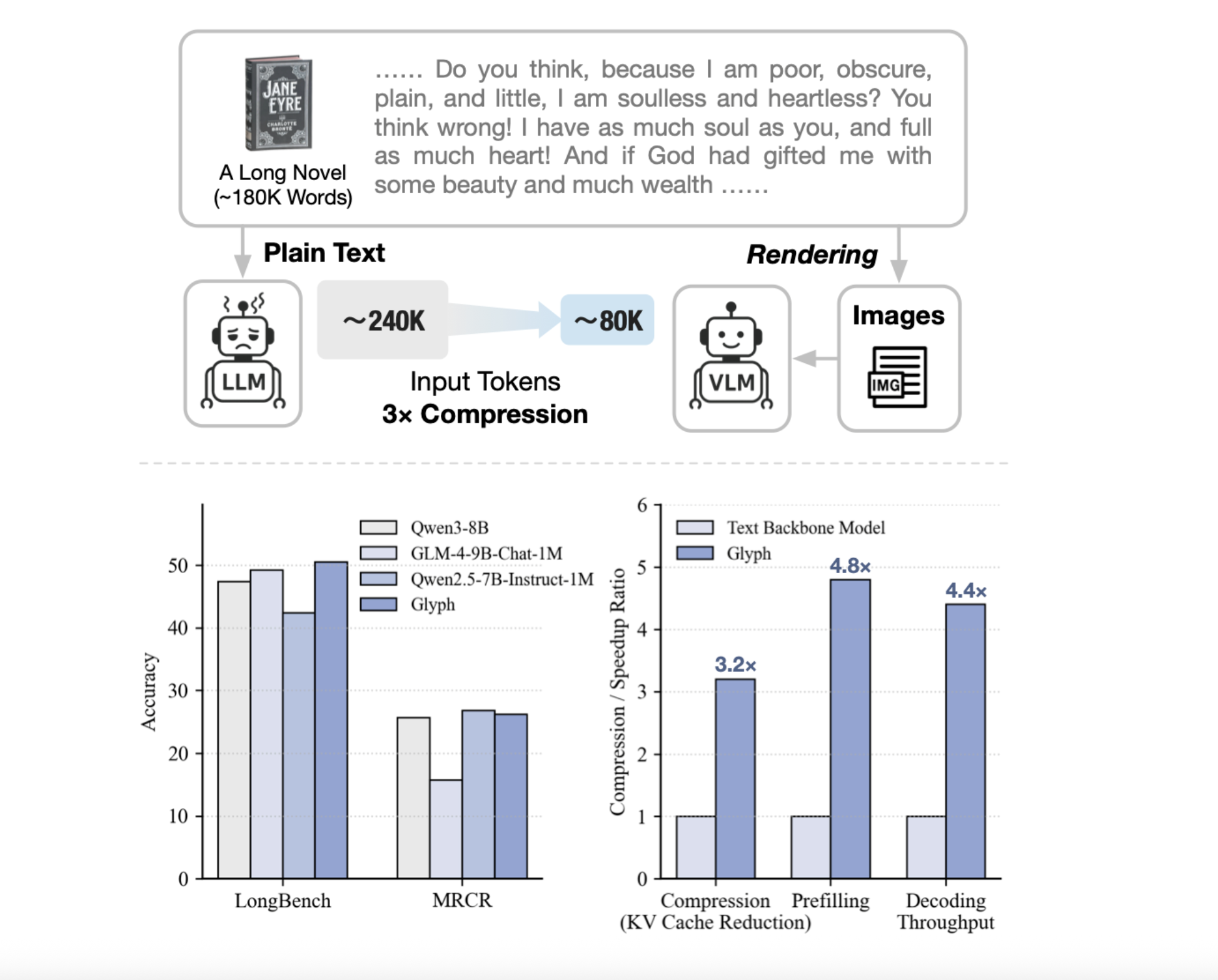

Can we render long texts as images and use a VLM to achieve 3–4× token compression, preserving accuracy while scaling a 128K context toward 1M-token workloads? A team of researchers from Zhipu AI has released Glyph, an AI framework for scaling context length through visual-text compression. The system renders ultra-long text into page images, and a vision-language model (VLM) then processes these pages end to end. Each visual token encodes many characters, so the effective token sequence shortens while semantics are preserved. Glyph can achieve 3-4x token compression on long text sequences without performance degradation, enabling significant gains in memory efficiency, training throughput, and inference speed.

Why Glyph?

Conventional methods expand positional encodings or modify attention, but compute and memory still scale with token count. Retrieval trims inputs, but risks missing evidence and adds latency. Glyph changes the representation instead: it converts text to images and shifts the burden to a VLM that already learns OCR, layout, and reasoning. This increases information density per token, so a fixed token budget covers more of the original context. Under extreme compression, the research team shows that a 128K-context VLM can address tasks that originate from 1M-token-level text.
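The token-budget argument is simple arithmetic, and a short sketch may make it concrete. The function names below are hypothetical, and treating "128K" as 128,000 visual tokens is an assumption made only for illustration.

```python
def covered_text_tokens(visual_budget, compression_ratio):
    """Original text tokens that fit when each visual token stands in for `compression_ratio` text tokens."""
    return round(visual_budget * compression_ratio)


def required_ratio(text_tokens, visual_budget):
    """Compression ratio needed to fit `text_tokens` of source text into the visual budget."""
    return text_tokens / visual_budget


budget = 128_000  # assumed reading of "128K"
print(covered_text_tokens(budget, 3.0))   # text covered at 3x compression
print(covered_text_tokens(budget, 4.0))   # text covered at 4x compression
print(required_ratio(1_000_000, budget))  # ratio needed for 1M text tokens
```

Under these assumptions, the typical 3 to 4 times range covers roughly 384K to 512K text tokens, and reaching 1M text tokens requires a ratio close to 8, which is why the article describes that regime as extreme compression.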

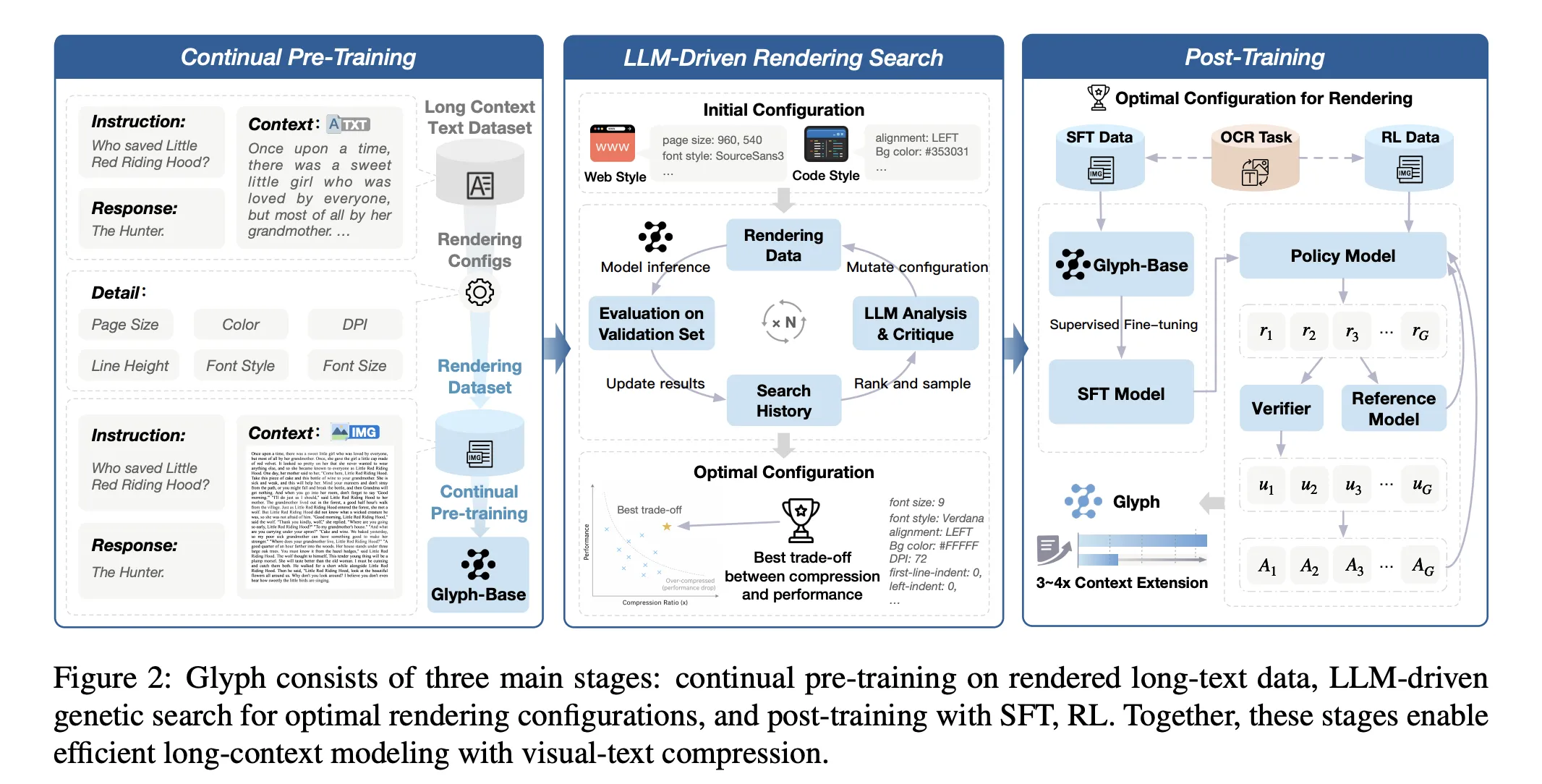

System design and training

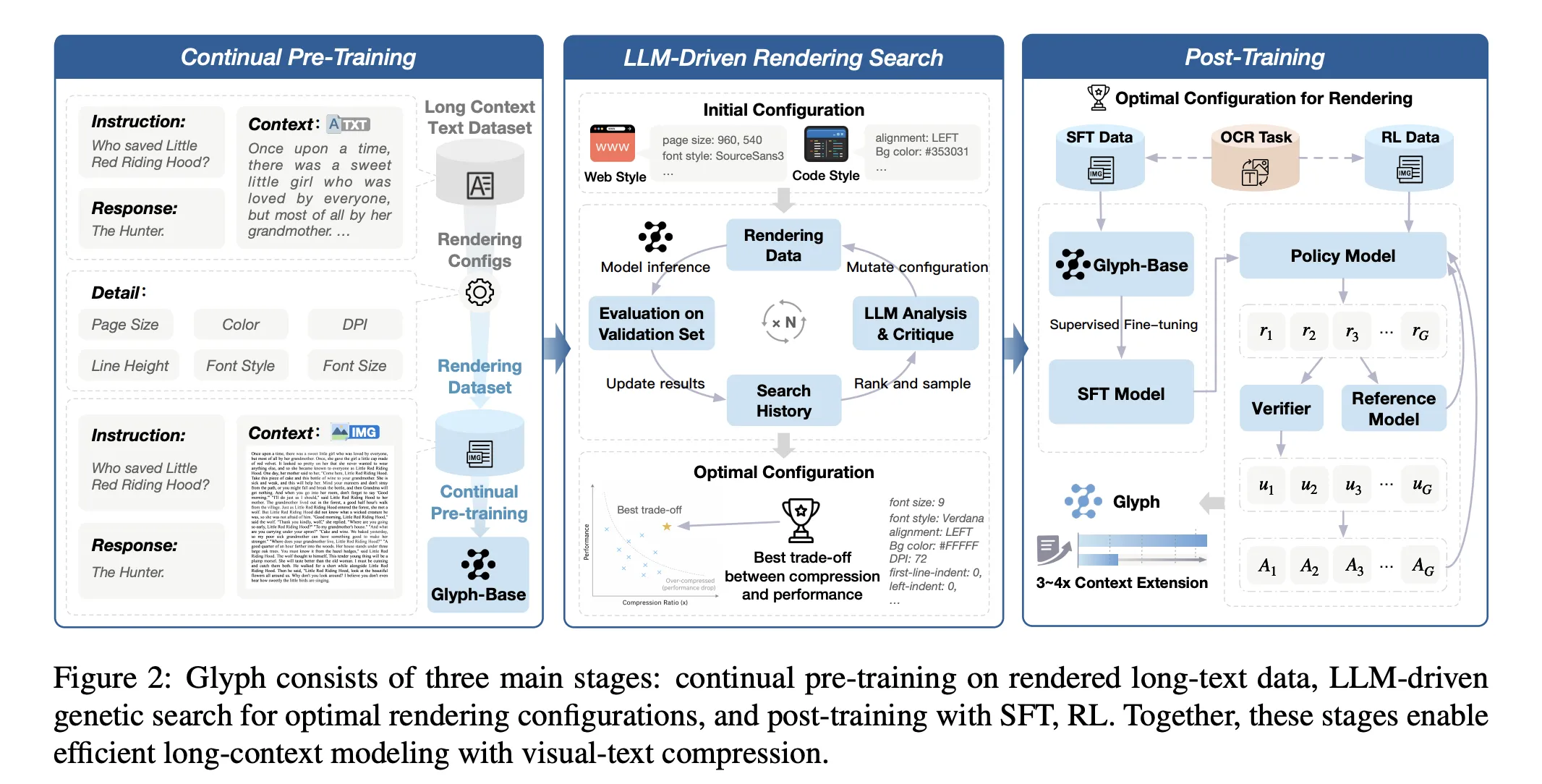

The method has three stages: continual pre-training, LLM-driven rendering search, and post-training. Continual pre-training exposes the VLM to large corpora of rendered long text with diverse typography and styles. The objective aligns visual and textual representations, and transfers long-context skills from text tokens to visual tokens. The rendering search is a genetic loop driven by an LLM. It mutates page size, dpi, font family, font size, line height, alignment, indent, and spacing, and it evaluates candidates on a validation set to optimize accuracy and compression jointly. Post-training uses supervised fine-tuning and reinforcement learning with Group Relative Policy Optimization (GRPO), plus an auxiliary OCR alignment task. The OCR loss improves character fidelity when fonts are small and spacing is tight.
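The rendering search described above can be sketched as a small genetic loop. This is a toy illustration, not the authors' implementation: the parameter names follow the article, but the candidate values, the random mutation (the real system uses an LLM to propose changes), and the `toy_fitness` score (the real system renders a validation set and measures accuracy and compression with the VLM) are all assumptions.

```python
import random

# Hypothetical search space: names follow the article, values are illustrative.
SEARCH_SPACE = {
    "page_size": ["A4", "Letter"],
    "dpi": [72, 96, 120],
    "font_family": ["serif", "sans-serif", "monospace"],
    "font_size": [7, 8, 9, 10, 12],   # points
    "line_height": [1.0, 1.2, 1.5],   # multiple of font size
    "alignment": ["left", "justify"],
    "indent": [0, 2, 4],
    "spacing": [0, 2, 4],
}


def toy_fitness(cfg):
    """Stand-in score that trades off legibility against text density (made-up formula)."""
    glyph_px = cfg["font_size"] * cfg["dpi"] / 72          # font height, points to pixels
    accuracy = min(1.0, glyph_px / 12.0)                   # assumed: legibility saturates at 12 px
    compression = 16.0 / (glyph_px * cfg["line_height"])   # assumed: smaller glyphs pack more text
    return accuracy ** 2 * compression


def mutate(cfg, rng):
    """Copy a parent config and resample one randomly chosen parameter."""
    child = dict(cfg)
    key = rng.choice(sorted(SEARCH_SPACE))
    child[key] = rng.choice(SEARCH_SPACE[key])
    return child


def search(generations=20, pop_size=8, elite=2, seed=0):
    """Elitist genetic loop: keep the best configs, refill the population with mutants."""
    rng = random.Random(seed)
    population = [
        {k: rng.choice(v) for k, v in SEARCH_SPACE.items()} for _ in range(pop_size)
    ]
    for _ in range(generations):
        population.sort(key=toy_fitness, reverse=True)
        parents = population[:elite]
        children = [mutate(rng.choice(parents), rng) for _ in range(pop_size - elite)]
        population = parents + children
    return max(population, key=toy_fitness)


best = search()
print(best, round(toy_fitness(best), 3))
```

Because the top configurations are carried over unchanged each generation, the best score never decreases. Swapping `toy_fitness` for a real render-and-evaluate step is where nearly all of the cost would lie in practice.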

Results, performance and efficiency…

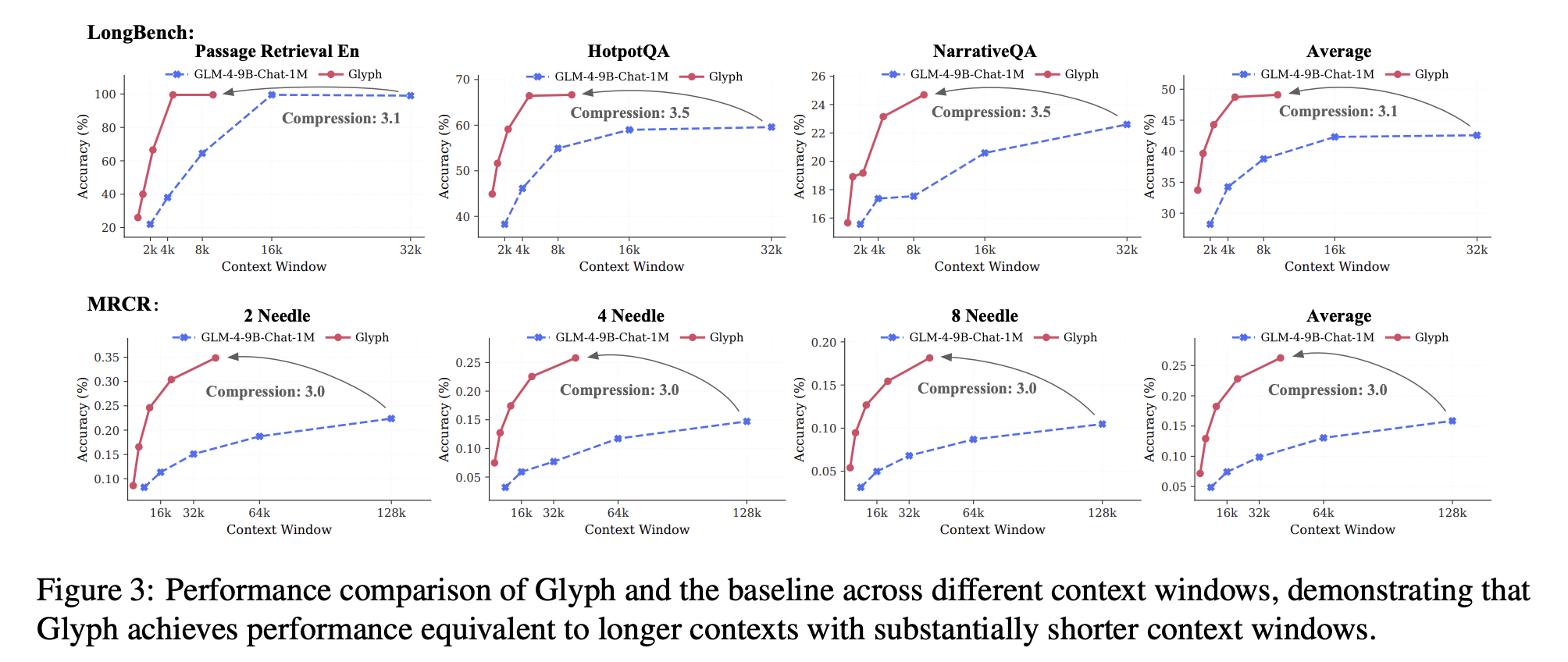

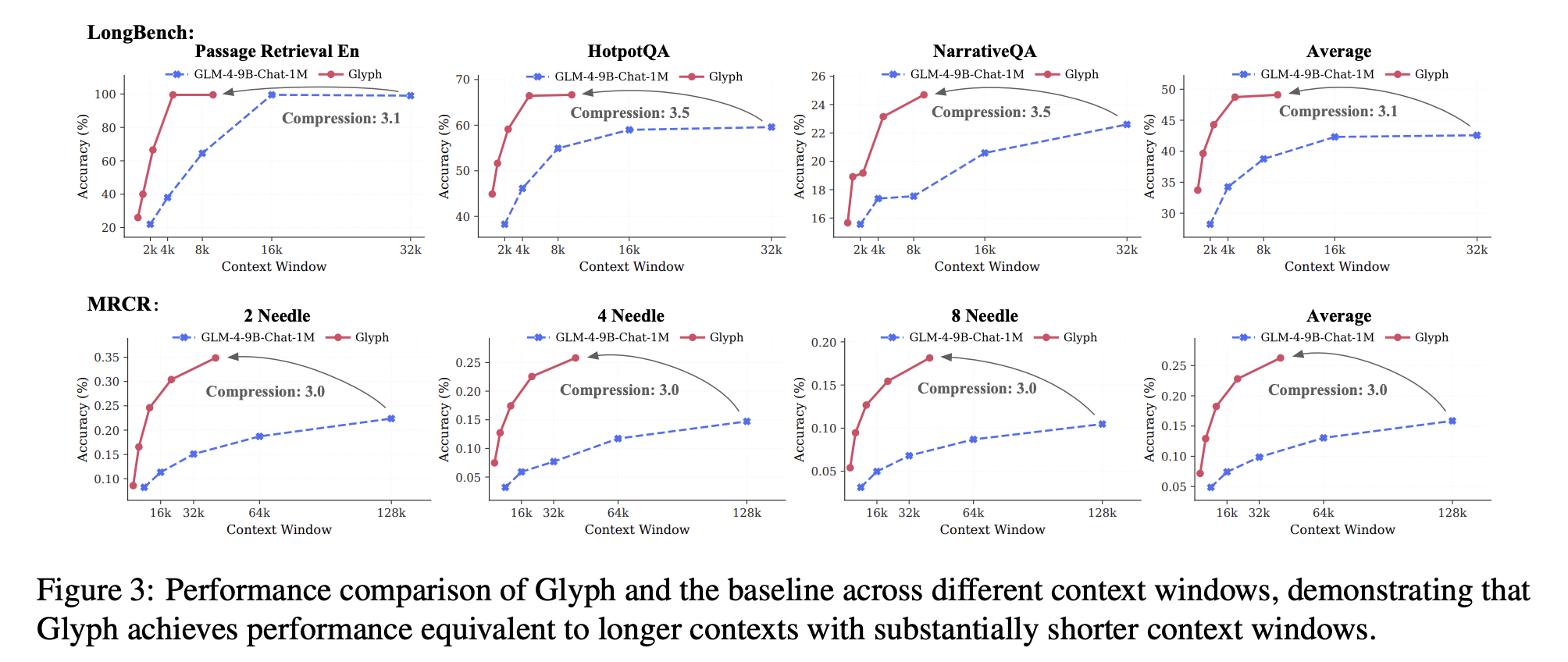

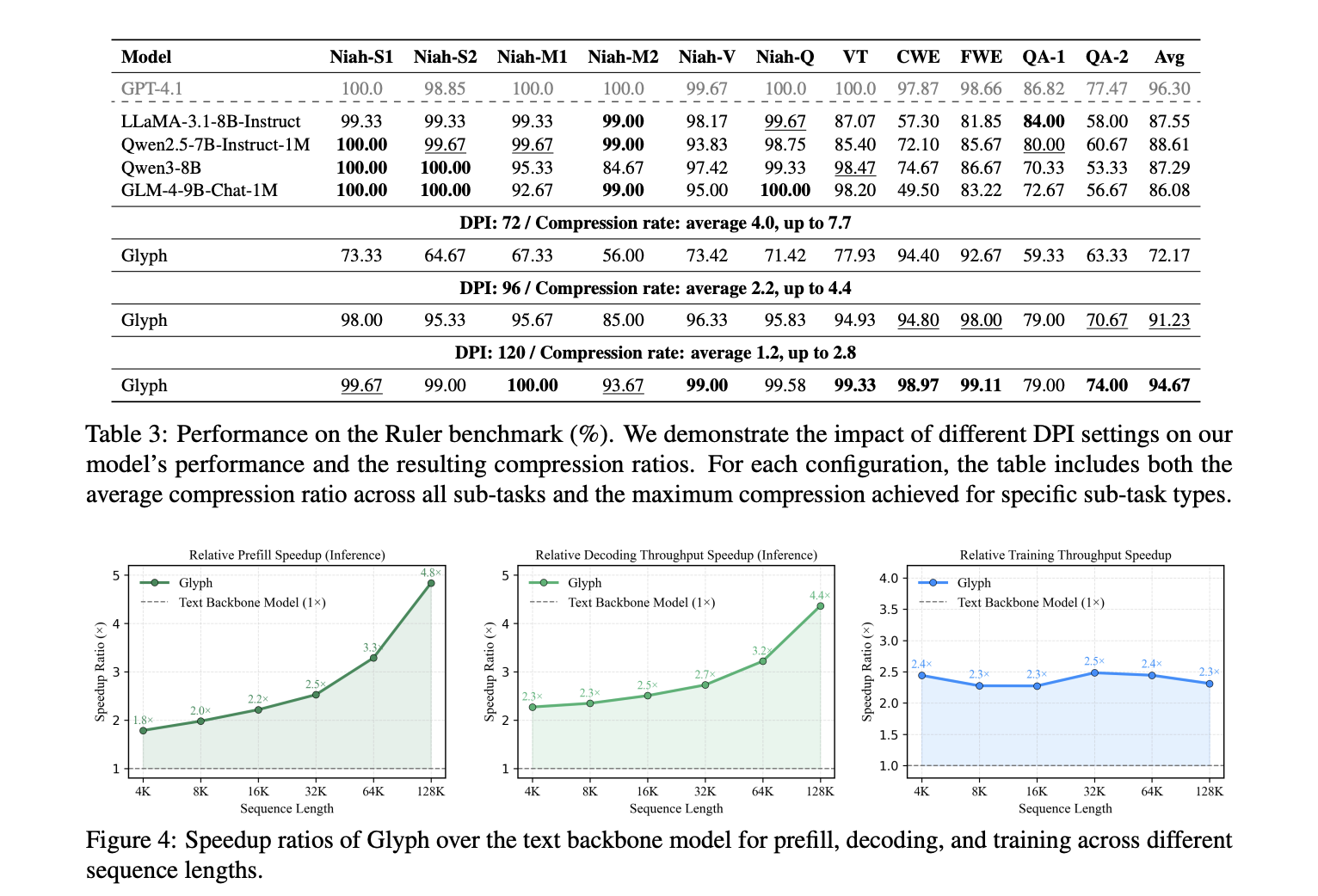

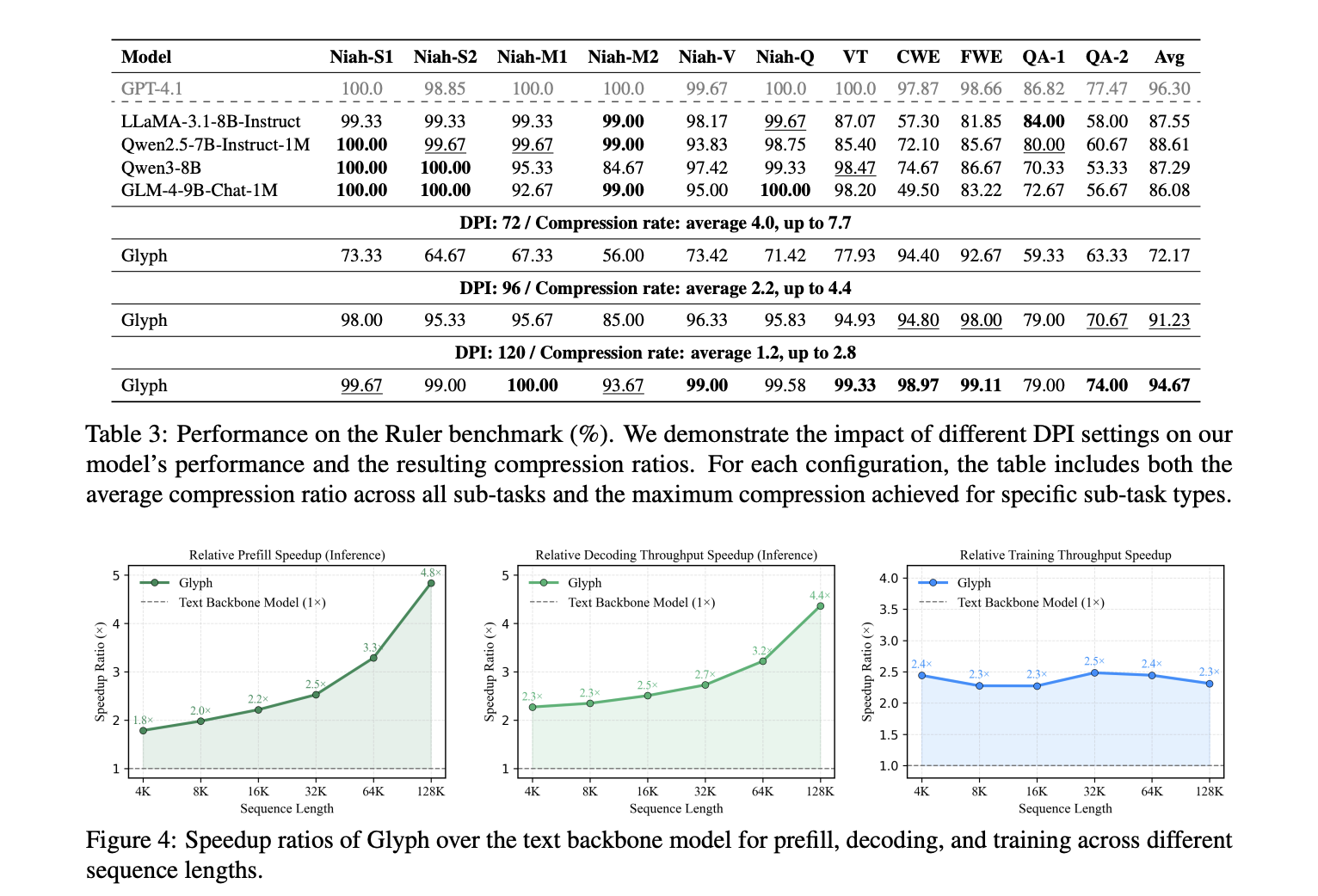

LongBench and MRCR establish accuracy and compression under long dialogue histories and document tasks. The model achieves an average effective compression ratio of about 3.3 on LongBench, with some tasks near 5, and about 3.0 on MRCR. These gains scale with longer inputs, since every visual token carries more characters. Reported speedups versus the text backbone at 128K inputs are about 4.8 times for prefill, about 4.4 times for decoding, and about 2 times for supervised fine-tuning throughput. The Ruler benchmark confirms that higher dpi at inference time improves scores, since crisper glyphs help OCR and layout parsing. The research team reports dpi 72 with average compression 4.0 and maximum 7.7 on specific sub-tasks, dpi 96 with average compression 2.2 and maximum 4.4, and dpi 120 with average 1.2 and maximum 2.8. The 7.7 maximum belongs to Ruler, not to MRCR.
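The dpi figures above imply a trade-off: a higher dpi reads more reliably but compresses less. One way to reason about it is to pick the highest dpi whose average compression still fits a given input into the context window. The sketch below uses only the average ratios reported above; the `pick_dpi` helper and the 128,000-token budget are hypothetical, and per-task ratios vary widely, so treat the result as a rough guide.

```python
# Average compression ratio per rendering dpi, as reported in the article.
REPORTED_AVG_COMPRESSION = {72: 4.0, 96: 2.2, 120: 1.2}


def pick_dpi(text_tokens, visual_budget=128_000):
    """Return the highest dpi whose average compression fits the input, or None if none does."""
    needed = text_tokens / visual_budget
    feasible = [dpi for dpi, ratio in REPORTED_AVG_COMPRESSION.items() if ratio >= needed]
    return max(feasible) if feasible else None


for n in (100_000, 250_000, 500_000, 1_000_000):
    print(n, pick_dpi(n))
```

A result of `None` for a 1M-token input reflects that none of the reported averages reaches the ratio needed at this budget; only the best sub-task ratios come close.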

So, what? Applications

Glyph benefits multimodal document understanding. Training on rendered pages improves performance on MMLongBench-Doc relative to a base visual model. This suggests that the rendering objective is a useful pretext for real document tasks that include figures and layout. The main failure mode is sensitivity to aggressive typography: very small fonts and tight spacing degrade character accuracy, especially for rare alphanumeric strings. The research team excludes the UUID subtask on Ruler. The approach assumes server-side rendering and a VLM with strong OCR and layout priors.

Key Takeaways

- Glyph renders long text into images, then a vision-language model processes these pages. This reframes long-context modeling as a multimodal problem and preserves semantics while reducing tokens.

- The research team reports token compression of 3 to 4 times with accuracy comparable to strong 8B text baselines on long-context benchmarks.

- Prefill speedup is about 4.8 times, decoding speedup is about 4.4 times, and supervised fine-tuning throughput is about 2 times, measured at 128K inputs.

- The system uses continual pre-training on rendered pages, an LLM-driven genetic search over rendering parameters, then supervised fine-tuning and reinforcement learning with GRPO, plus an OCR alignment objective.

- Evaluations include LongBench, MRCR, and Ruler, with an extreme case showing a 128K-context VLM addressing 1M-token-level tasks. Code and model card are public on GitHub and Hugging Face.

Glyph treats long-context scaling as visual-text compression: it renders long sequences into images and lets a VLM process them, reducing tokens while preserving semantics. The research team claims 3 to 4 times token compression with accuracy comparable to Qwen3 8B baselines, about 4 times faster prefilling and decoding, and about 2 times faster SFT throughput. The pipeline is disciplined: continual pre-training on rendered pages, an LLM-driven genetic rendering search over typography, then post-training. The approach is pragmatic for million-token workloads under extreme compression, yet it depends on OCR quality and typography choices, which remain tunable knobs. Overall, visual-text compression offers a concrete path to scale long context while controlling compute and memory.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
